Late last year I was looking for an adapter and stumbled across PCIe switch cards that plug into a PCIe slot in a PC and fan out to multiple slots. I was already using 16x GPU cards in 1x slots with surprising success, and thought it would be very interesting if devices behind one of these switches could be passed through to VMs in the Hydra.

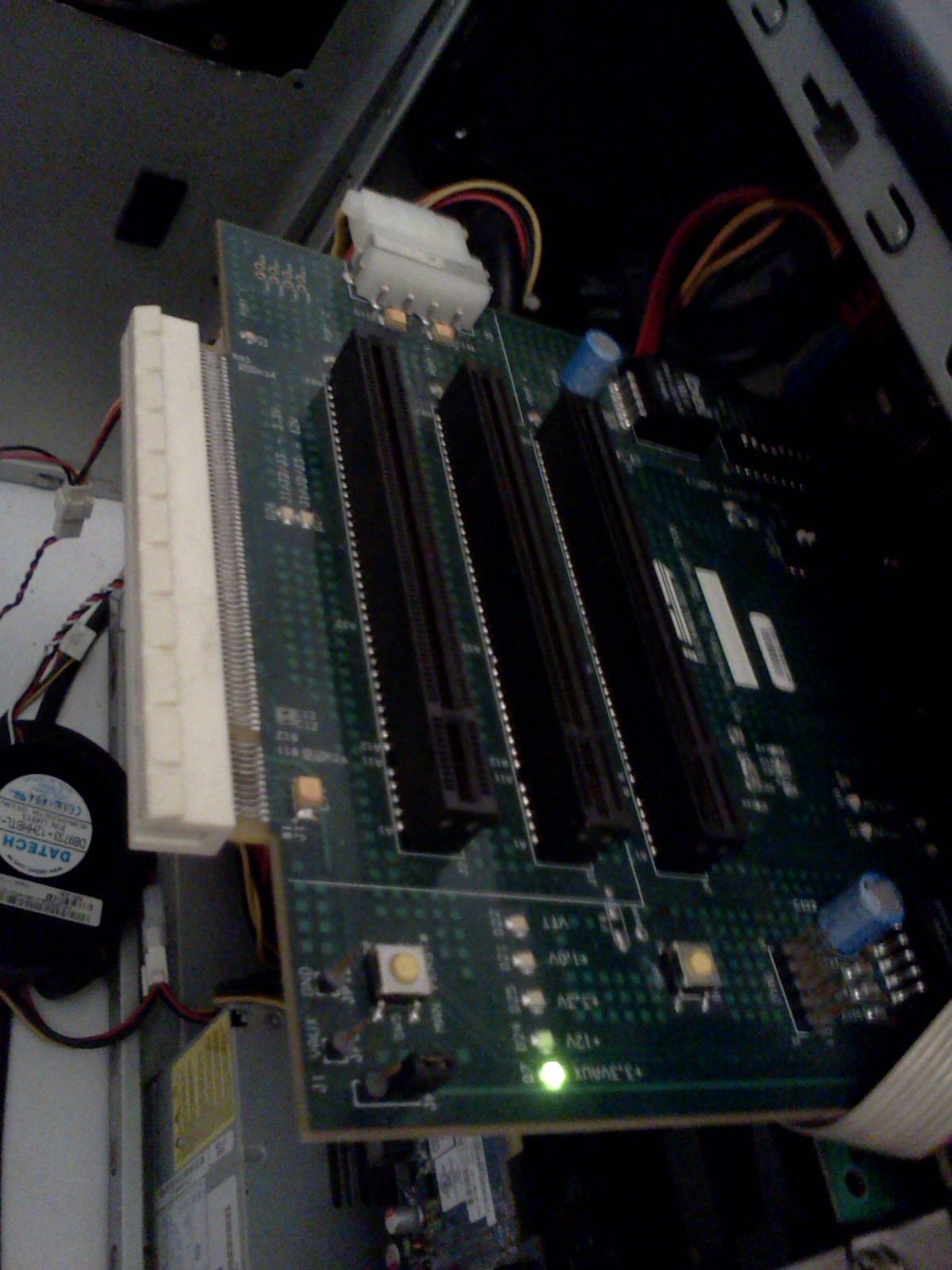

I found these cards on eBay for $15, so how could I not try them?

They aren’t in perfect condition: one has a fried cap and the other has broken-off DIP switches. I figured I could move the switch from one to the other and have one good card for testing. They are from PLX, and are actually 1st gen cards, way at the bottom of the list. They only have 8 lanes & 5 ports, while the 3rd gen chips go up to 96 lanes & 24 ports. I had already gone through the NDA process with PLX and had access to all the docs & schematics. I realize the word “NDA” doesn’t exactly fit with open hardware, but it was relatively easy to comply & gain access to the materials. I will of course have to comply with the NDA in my description here, but I am focusing on the functionality of the complete system rather than the design of PCIe switching hardware. It turns out I needed the broken switch to be “open” anyway, so there was no need to fix it.

http://www.plxtech.com/products/expresslane/pex8508

These are RDK boards meant to aid in the design of a PCIe switching solution, so what I have tested here is a proof of concept. It will lead us to a block-diagram conceptual design that incorporates this switching technology in a form factor that I have seen in PLX demo videos & product announcements. What has not been demonstrated is how PCIe switching behaves when passed through to virtual machines.

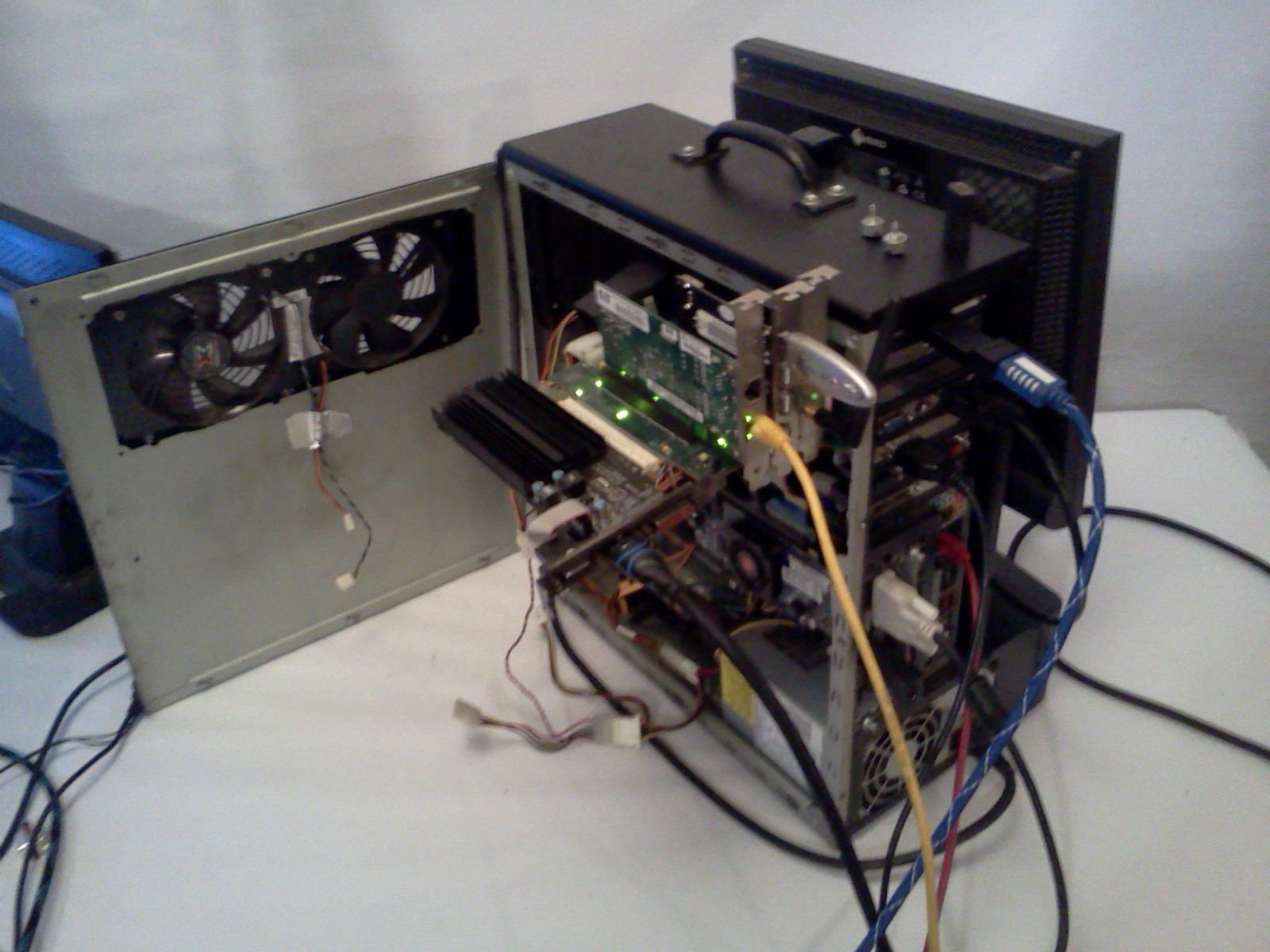

Here you see it installed in the Hydra. It’s a 4x card, so I had to remove an HD6450 card from a 16x slot. I moved that card to the switch and populated the other 3 slots with:

HP dual GB NIC

5-port USB card + stick for demo

Atheros Wi-Fi card
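As a rough sanity check on the lane budget, here is a small sketch. It uses the 8 lanes and 5 ports mentioned earlier and the 4x upstream link of this card. The even split of the remaining lanes across the four downstream slots and the 250 MB/s per-lane figure (PCIe Gen 1, per direction) are assumptions made for illustration, not numbers taken from the PLX docs.

```python
# Rough lane budget for an 8-lane, 5-port switch on a 4x card.
# ASSUMPTIONS (not from the PLX docs): the lanes left over after the
# upstream link are split evenly across the downstream slots, and each
# lane carries about 250 MB/s per direction (PCIe Gen 1).

TOTAL_LANES = 8       # from the text
TOTAL_PORTS = 5       # from the text: 1 upstream + 4 downstream
UPSTREAM_LANES = 4    # the card sits in the host as a 4x device
MB_PER_LANE = 250     # assumed Gen 1 per-lane throughput, per direction


def lane_budget(total_lanes, total_ports, upstream_lanes):
    """Split the lanes left after the upstream link evenly across downstream ports."""
    downstream_ports = total_ports - 1
    remaining = total_lanes - upstream_lanes
    if remaining < downstream_ports:
        raise ValueError("not enough lanes for one lane per downstream port")
    lanes_per_port = remaining // downstream_ports
    return downstream_ports, lanes_per_port


ports, lanes_each = lane_budget(TOTAL_LANES, TOTAL_PORTS, UPSTREAM_LANES)
upstream_bw = UPSTREAM_LANES * MB_PER_LANE
downstream_bw = ports * lanes_each * MB_PER_LANE
# Ratio of what the slots could demand to what the upstream link can carry.
oversubscription = downstream_bw / upstream_bw

print(f"{ports} downstream ports at {lanes_each}x each")
print(f"upstream {upstream_bw} MB/s, downstream total {downstream_bw} MB/s")
print(f"oversubscription ratio: {oversubscription:.1f}")
```

If those assumptions hold, the four slots together cannot ask for more than the upstream link can carry, which may be part of why full-size cards behave reasonably behind such a small switch.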

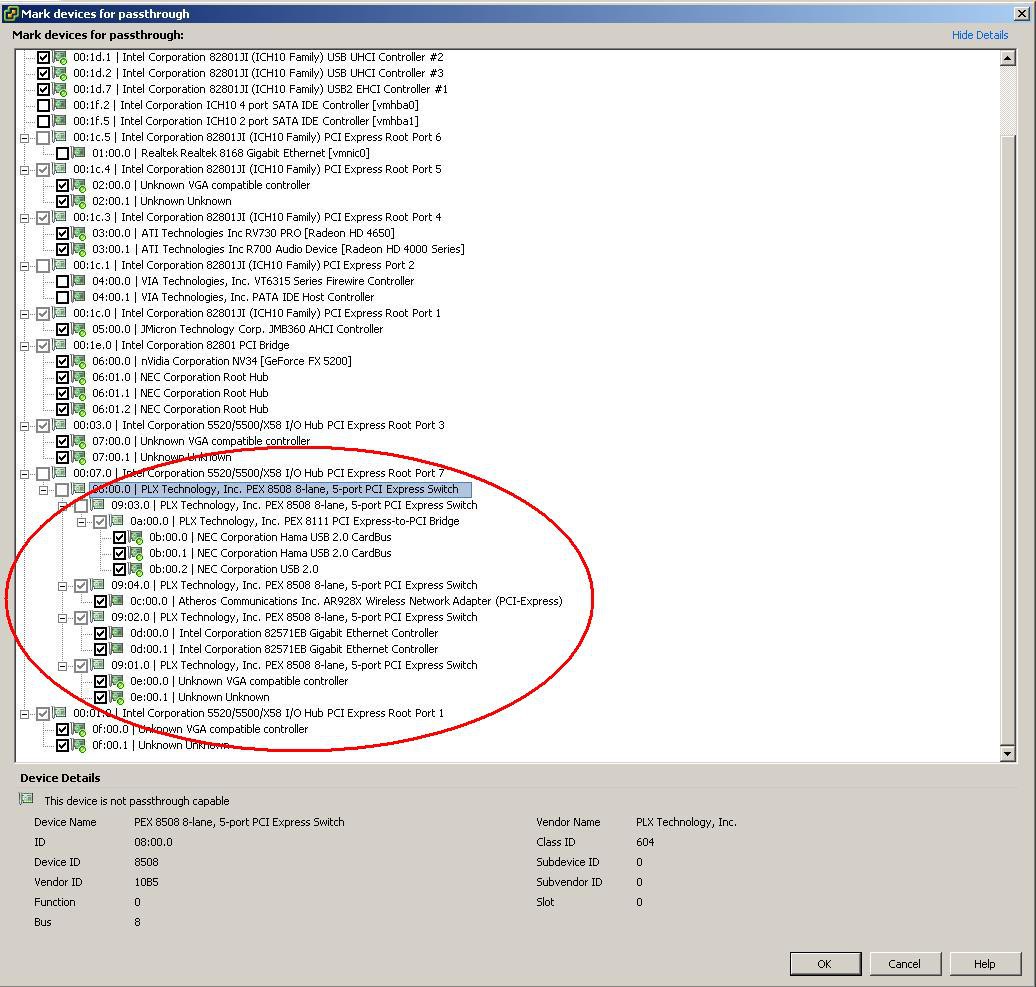

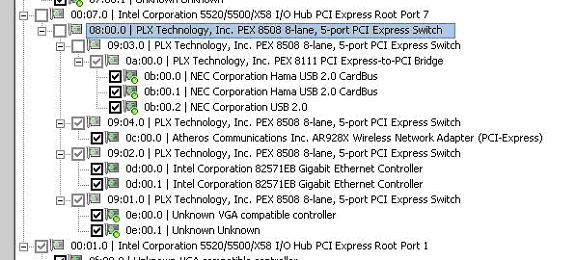

After booting, I enabled the card and its children for passthrough in vSphere.

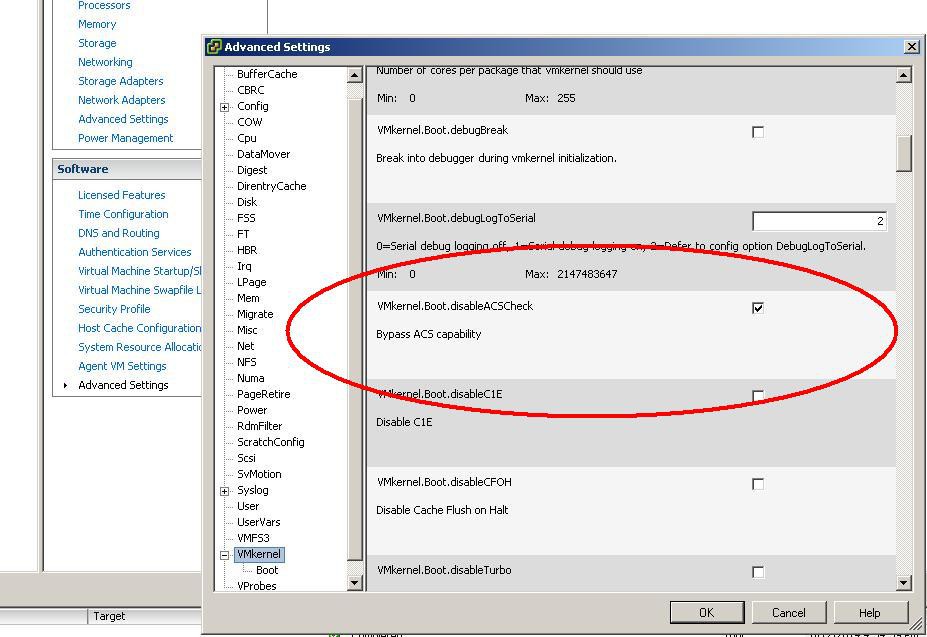

I also had to disable the ACS (Access Control Services) check. It needs to be off for the switch cards themselves to be passed through, since this early generation card does not support ACS. Subsequent generations of these switches from PLX do support ACS, so this setting should only be necessary for these older switches.

Reboot to enable the changes.

I had assumed I would need to give the whole switch and its child devices to a single VM, but that is not the case. I am able to INDIVIDUALLY assign any card on this switch to a single VM.

So I have 4 VMs: the HD6450 goes to #1, the HP dual GB NIC to #2, the USB 3.0 card to #3, and the Atheros Wi-Fi card to #4.

VMs #2, #3 & #4 are also each getting a GPU from other slots on the system board.
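The assignment above can be written down as a small table and checked for the one property that matters for passthrough: each device goes to exactly one VM. This is only a sketch in plain Python. The device labels and the `validate` helper are made up for illustration and are not part of any vSphere API.

```python
# Model of the passthrough layout described above.
# The names are illustrative labels, not vSphere identifiers.

SWITCH_DEVICES = ["HD6450", "HP dual GB NIC", "USB card", "Atheros Wi-Fi"]

# VM number -> devices behind the switch assigned to it
ASSIGNMENTS = {
    1: ["HD6450"],
    2: ["HP dual GB NIC"],
    3: ["USB card"],
    4: ["Atheros Wi-Fi"],
}


def validate(assignments, devices):
    """Return {device: vm}, raising if a device is shared, unknown, or unassigned."""
    owner = {}
    for vm, devs in assignments.items():
        for dev in devs:
            if dev not in devices:
                raise ValueError(f"unknown device: {dev}")
            if dev in owner:
                raise ValueError(f"{dev} assigned to VM {owner[dev]} and VM {vm}")
            owner[dev] = vm
    missing = [d for d in devices if d not in owner]
    if missing:
        raise ValueError(f"unassigned devices: {missing}")
    return owner


owners = validate(ASSIGNMENTS, SWITCH_DEVICES)
for dev, vm in owners.items():
    print(f"{dev} -> VM #{vm}")
```

The same check would reject a layout where one card is handed to two VMs, which is the case a passed-through device cannot support.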

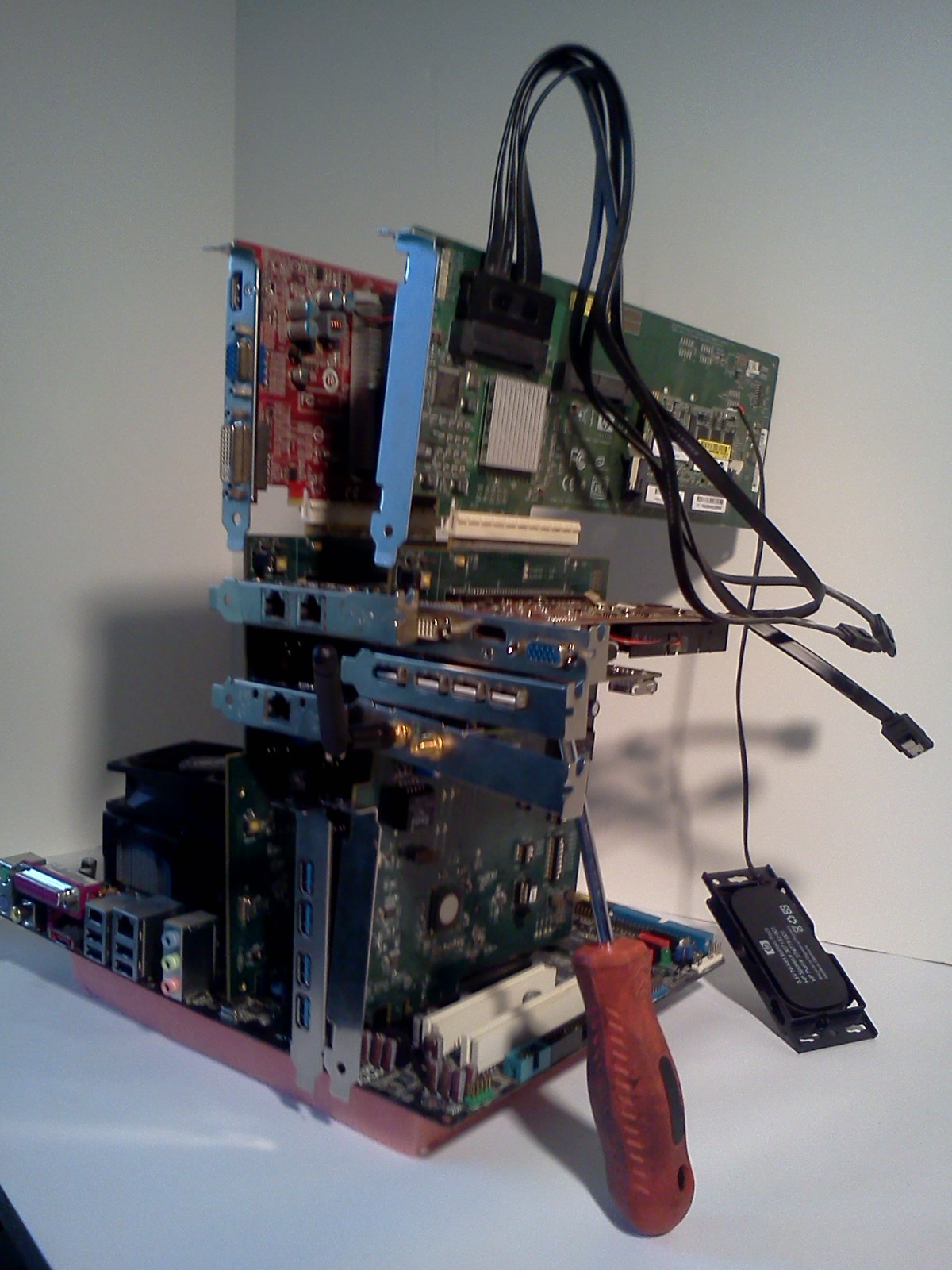

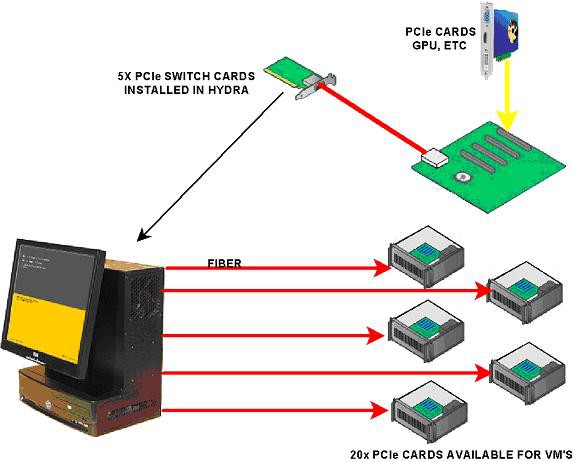

Consequently, we can get a VT-d compatible system board with five 16x slots and theoretically jam it full of these cards. Not these RDK boards, though: while the theoretical configuration pictured here is VALID and looks cool, it’s impractical to say the least.

But, refer to these videos by PLX:

Avago Optical PCIe Gen 3 Using MiniPOD Optical Modules

https://www.youtube.com/watch?v=HvFmJhQOIgw

PCI Express Over Optical Cable

https://www.youtube.com/watch?v=he8YCnHLj-A

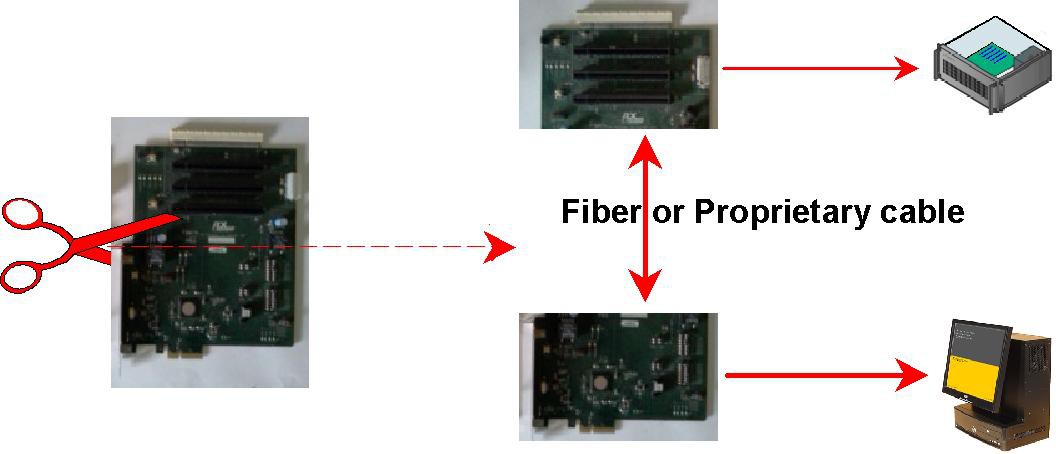

And you see we can separate the card into 2: a sending card, and a receiving card that is inserted in a powered backplane. These cards can be connected by either a proprietary cable or fiber optic.

Here’s the idea:

And for me, this scenario comes to mind:

Which means you can have a Hydra computer driving VMs with full GPU capability that are spread out according to the limits of FIBER, not HDMI or USB. And as I have shown, you can assign cards in ANY combination to the VMs, such as a TV card in one backplane with the GPU in another.

Also, you are not limited to a single receiver card in the backplane, which would allow you to add Hydra computers to an existing backplane infrastructure to provide additional CPU & RAM or fault tolerance. Not that it matters, since PLX also makes THIS:

http://www.plxtech.com/about/news/pr/2013/0909

http://semiaccurate.com/2013/09/20/plx-shows-top-rack-pcie-switch/

It’s kind of funny: if you read the article about the switch, the author asks “Why???”

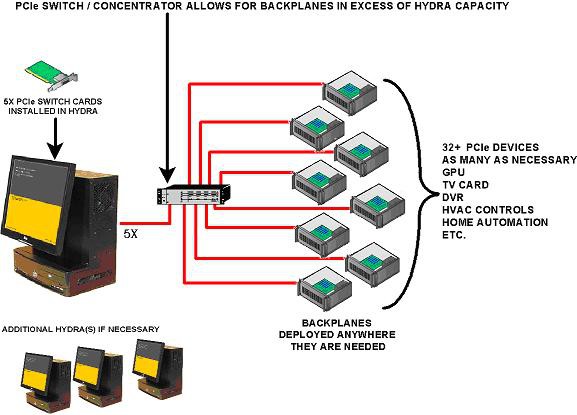

So you can have this:

So imagine a smart home with 1 or 2 Hydras, and backplanes of varying form factors in ALL the places you need them. An iSCSI or ATA over Ethernet SAN could either reside on a storage VLAN available to all connected Hydras, or the HBA (or multiple redundant HBAs) could reside in a backplane.

Controlling HVAC

Security system

Home entertainment

Lights, blinds, & all other home automation.

Centralized fault tolerant iSCSI storage for all VMs

Cryptocurrency mining?

eric
