Introduction and aims

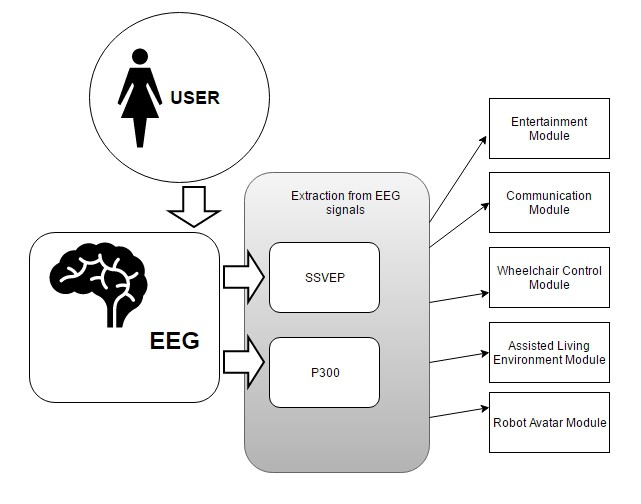

The aim here is to replace the traditional mouse/keyboard interface with something more direct. Locked-in people can't use their limbs, and in some cases can't move their eyes significantly either, which rules out eye-tracking. We will use an EEG device, initially the OpenBCI, to acquire signals from the scalp. These signals will be amplified, digitised by an analogue-to-digital converter (ADC), and then pre-processed and analysed in software in order to extract two signals that are widely used in brain-computer interfacing (BCI): p300 and SSVEP.

These signals will be used to control cursors (SSVEP), select characters for text input (SSVEP and p300), and select from image-based menus (p300). You can see examples of these in other people's projects by looking at this project log. Used in place of a traditional mouse and keyboard, these input functions will allow the user to:

- communicate using text-to-speech (both locally and remotely via the robot avatar)

- control web browser

- read books

- select and control video and audio playback

- control motor functions of a wheelchair

- control a robot avatar

- control a smart-home (e.g. lights on/off, temperature control)

All of this has already been accomplished in academia, but our aim is to accomplish it with a low-cost, extensible, open-source system (<£1500) that the people affected can actually afford to make or buy!

Locked-in Syndrome

Locked-in syndrome is caused by damage to specific portions of the lower brain and brainstem. "Locked-in syndrome was first defined in 1966 as quadriplegia, lower cranial nerve paralysis, and mutism with preservation of consciousness, vertical gaze, and upper eyelid movement. It was redefined in 1986 as quadriplegia and anarthria with preservation of consciousness".

Neuroscience involved: EEG, ERPs, p300 and SSVEP

What is an ERP?

Event-related potentials (ERPs) are voltage fluctuations in the ongoing EEG (electroencephalogram) that are time-locked to an event (e.g. the onset of a stimulus). On the scalp, the ERP appears as a waveform made up of a series of positive and negative peaks that vary in amplitude and polarity over time. ERP researchers often assume that a peak represents an underlying ERP component, but this is not necessarily the case: the recorded waveform is the sum of several overlapping components, and it is these underlying components, rather than the visible peaks, that reflect distinct neural processes.

The scalp voltage fluctuations recorded by EEG reflect the summation of postsynaptic potentials (PSPs) occurring in cortical pyramidal cells. PSPs result from changes in electrical potential as ion channels on the postsynaptic cell membrane open or close, allowing ions to flow into or out of the cell. If a PSP occurs at one end of a cortical pyramidal neuron, the neuron becomes an electrical dipole, with one end positive and the other negative. If PSPs occur in many neurons whose dipoles all point in the same direction, the dipoles sum to form a single large dipole, called an equivalent current dipole, which is large enough to detect on the scalp surface! Thousands of neurons are required to produce such a summed dipole. This is most likely to occur in the cerebral cortex, where groups of pyramidal cells are aligned together perpendicular to the cortical surface.
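To make the dipole picture a little more concrete, a textbook idealisation (not part of the original text) treats the head as an infinite, homogeneous volume conductor of conductivity $\sigma$. A current dipole with moment $\mathbf{p}$ at position $\mathbf{r}_0$ then produces, at a point $\mathbf{r}$, the potential

$$
V(\mathbf{r}) = \frac{1}{4\pi\sigma}\,\frac{\mathbf{p}\cdot(\mathbf{r}-\mathbf{r}_0)}{\lvert\mathbf{r}-\mathbf{r}_0\rvert^{3}} = \frac{\lvert\mathbf{p}\rvert\cos\theta}{4\pi\sigma R^{2}}, \qquad R = \lvert\mathbf{r}-\mathbf{r}_0\rvert
$$

where $\theta$ is the angle between the dipole moment and the direction from the dipole to the measurement point. Because $\cos\theta$ is positive on the side the dipole points towards and negative on the opposite side, a single dipole yields both positive and negative voltages. Real heads consist of layers (brain, skull, scalp) with different conductivities, so this formula only illustrates the geometry and should not be treated as a usable forward model.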

The distribution of positive and negative voltages on the scalp for a given equivalent current dipole is determined by the location and orientation of the dipole in the brain. Because a dipole has a positive and a negative end, it's important to note that each dipole produces both positive and negative voltages on the scalp. (Oxford Handbook of ERPs, Luck & Kappenman, 2013)
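Because an ERP is time-locked to an event but much smaller than the ongoing EEG, it is usually recovered by cutting out a window ("epoch") around each event onset and averaging the epochs, so that activity not time-locked to the event tends to cancel out. The sketch below shows this standard technique with NumPy; the window limits (-100 ms to 800 ms) and the pre-stimulus baseline correction are assumptions chosen for illustration, not values taken from this project.

```python
import numpy as np


def extract_epochs(eeg, onsets, fs, tmin=-0.1, tmax=0.8):
    """Cut a fixed window around each event onset and baseline-correct it.

    eeg:    array of shape (n_channels, n_samples)
    onsets: sample indices at which the events (e.g. stimulus flashes) occurred
    fs:     sampling rate in Hz
    Returns an array of shape (n_epochs, n_channels, n_times).
    """
    start = int(round(tmin * fs))  # negative: samples before the onset
    stop = int(round(tmax * fs))
    epochs = []
    for onset in onsets:
        a, b = onset + start, onset + stop
        if a < 0 or b > eeg.shape[1]:
            continue  # skip events too close to the edges of the recording
        epoch = eeg[:, a:b].astype(float)
        if start < 0:
            # subtract the mean of the pre-stimulus interval from each channel
            epoch -= epoch[:, :-start].mean(axis=1, keepdims=True)
        epochs.append(epoch)
    return np.stack(epochs)


def average_erp(epochs):
    """Average across epochs; noise that is not time-locked tends to cancel."""
    return epochs.mean(axis=0)
```

For a p300 check, one would average the epochs following rare (target) events and those following frequent events separately, then compare the two averages a few hundred milliseconds after onset.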

What is p300?

P300 is an event-related potential (ERP) that is elicited within the framework of the oddball paradigm. In the oddball paradigm, a participant is presented with a sequence of events that can be classified into one of two categories. Events in one category are presented rarely, whilst events in the other category are presented frequently. The participant is given a task that cannot be completed without categorizing the events, and events in the rare category then elicit the p300 ERP. Farwell and Donchin adapted the oddball paradigm into the most famous of all BCIs: the p300-based speller. They created an oddball paradigm by setting up a 6x6 matrix of characters and intensifying (highlighting) its rows and columns in random order. In each trial the participant was asked to focus attention on a target character. The intensifications therefore fell into two categories: the first, constituting 1/6 of the intensifications, being the row or column containing the character the participant was attending to, and the second being all other rows and columns. Thus the intensifications containing the attended character (the rare 1/6 of events) should elicit the p300 ERP!

To summarize the operation of their p300 speller:

- 6x6 matrix where each cell contains a character

- columns and rows are intensified (flashed/highlighted) for 100ms each in random order

- user asked to count number of times chosen (target) character is intensified

- flashing the 12 elements (6 rows and 6 columns) of the matrix creates an oddball paradigm, with the row/column containing the chosen character in the rare category and thus eliciting the p300
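The stimulus side of this speller can be sketched in a few lines of Python. The matrix layout below (letters A to Z, digits 1 to 9, and "_" for space) is a hypothetical example rather than Farwell and Donchin's actual layout, and `flash_sequence`, `position` and `flash_contains` are illustrative names. The sketch shuffles the 6 rows and 6 columns into a random order for one block of 12 intensifications and confirms that exactly 2 of the 12 (i.e. 1/6) contain a given target character.

```python
import random

# Hypothetical 6x6 speller matrix: 26 letters, digits 1-9 and "_" for space.
MATRIX = ["ABCDEF", "GHIJKL", "MNOPQR", "STUVWX", "YZ1234", "56789_"]


def flash_sequence(rng):
    """One block: every row and every column intensified once, in random order."""
    flashes = [("row", i) for i in range(6)] + [("col", j) for j in range(6)]
    rng.shuffle(flashes)
    return flashes


def position(char):
    """(row, column) of a character in the matrix."""
    for r, row in enumerate(MATRIX):
        if char in row:
            return r, row.index(char)
    raise ValueError(f"{char!r} is not in the matrix")


def flash_contains(flash, char):
    """True if this intensification highlights the given character (a 'rare' event)."""
    kind, index = flash
    r, c = position(char)
    return index == (r if kind == "row" else c)


rng = random.Random(0)
block = flash_sequence(rng)
targets = [f for f in block if flash_contains(f, "P")]
print(len(block), len(targets))  # 12 flashes, 2 of which contain "P"
```

In a real session the block would be repeated several times per character, with each flash lasting 100 ms as described above.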

The BCI identifies the chosen character by detecting which row and which column elicit the p300. The work by Donchin et al. found that participants could achieve a typing rate of 7.8 characters per minute!
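The detection step can be sketched as follows, assuming that some p300 classifier (not specified here) has already produced a score for the EEG epoch following each flash, where a higher score means "more p300-like". Scores are summed per row and per column over repeated blocks, which plays the same role as averaging repeated trials, and the character at the intersection of the best row and best column is chosen. The matrix layout and the function name are hypothetical.

```python
from collections import defaultdict

# Hypothetical 6x6 speller matrix.
MATRIX = ["ABCDEF", "GHIJKL", "MNOPQR", "STUVWX", "YZ1234", "56789_"]


def decode_character(scored_flashes):
    """Pick the character at the intersection of the most p300-like row and column.

    scored_flashes: iterable of ((kind, index), score) pairs, where kind is
    "row" or "col" and score comes from an (assumed) p300 classifier.
    Repeated flashes of the same row/column have their scores summed.
    """
    totals = defaultdict(float)
    for flash, score in scored_flashes:
        totals[flash] += score
    best_row = max(range(6), key=lambda i: totals[("row", i)])
    best_col = max(range(6), key=lambda j: totals[("col", j)])
    return MATRIX[best_row][best_col]
```

More repetitions per character generally improve accuracy at the cost of typing speed.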

[Further Info: http://ieeexplore.ieee.org/xpls/icp.jsp?arnumber=1642774 ]

[For the first p300 speller, see: L. A. Farwell and E. Donchin "Talking off the top of your head: Toward a mental prosthesis utilizing event-related brain potentials" Electroenceph. Clin. Neurophysiol., vol. 70, pp. 510-523, 1988]

What is SSVEP?

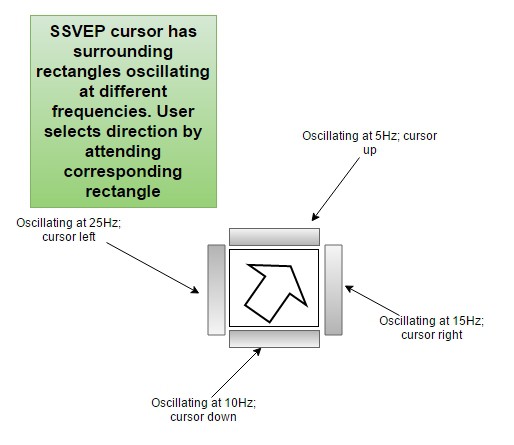

This is a steady-state response (not an ERP) that is evoked when someone focusses their visual attention on a flickering stimulus. The SSVEP occurs over the occipital region of the brain, at the same frequency as the stimulus flicker (and at its harmonics). A typical SSVEP-based BCI presents the user with several items flickering at different frequencies (e.g. flickering rectangles on-screen). The BCI then determines which item the user is attending to by calculating which of those frequencies is strongest in the EEG. An advantage is that SSVEP-based BCIs require little training; a disadvantage is a small risk of triggering seizures in photosensitive users.

You can view examples of these in this project log.
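One simple way to perform the "which frequency is strongest" step is to compare spectral power at each candidate flicker frequency (and its harmonics) in a window of occipital EEG. The sketch below does this with NumPy's FFT; it is an illustration under stated assumptions (a single channel, a Hann window, power taken at the nearest FFT bin), and canonical correlation analysis (CCA) is a widely used, more robust alternative.

```python
import numpy as np


def detect_ssvep(signal, fs, candidates, harmonics=2):
    """Return the candidate flicker frequency with the most spectral power.

    signal:     1-D EEG window from an occipital channel
    fs:         sampling rate in Hz
    candidates: flicker frequencies (Hz) of the on-screen targets
    Returns (best_frequency, {frequency: power}).
    """
    x = np.asarray(signal, dtype=float)
    x = x - x.mean()                     # remove DC offset
    window = np.hanning(len(x))          # reduce spectral leakage
    spectrum = np.abs(np.fft.rfft(x * window)) ** 2
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)

    def power_at(f):
        # sum power at the fundamental and its harmonics (nearest FFT bin)
        return sum(spectrum[np.argmin(np.abs(freqs - h * f))]
                   for h in range(1, harmonics + 1))

    scores = {f: power_at(f) for f in candidates}
    return max(scores, key=scores.get), scores
```

Candidate frequencies should not be integer multiples of one another (e.g. 6 Hz and 12 Hz), because the harmonics of one target would then add power to another.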

SYSTEM OVERVIEW

The BCI

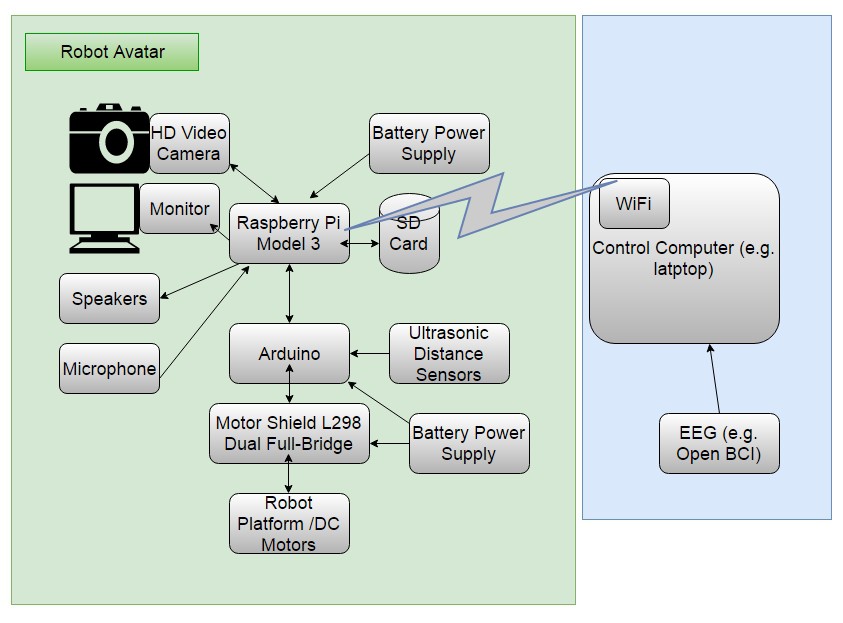

The EEG device detects signals at the scalp, amplifies them and converts them from analogue to digital. A chip commonly used for this purpose (e.g. by OpenBCI) is the TI ADS1299 [cost: ~$50 USD], a low-noise, multichannel, simultaneous-sampling, 24-bit, delta-sigma (ΔΣ) analog-to-digital converter (ADC) with a built-in programmable gain amplifier (PGA), internal reference, and onboard oscillator. Next, patterns (such as SSVEP and p300) are extracted from the digital EEG signal and classified in software, and the classified patterns are used to pass commands to the software modules. OpenViBE is one open-source software package for this purpose.
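Before any pattern extraction, the ADC's raw output has to be turned into voltages. Per the ADS1299 datasheet, each channel sample is a 24-bit two's-complement value sent MSB first, and one LSB corresponds to (2 × VREF / gain) / 2^24. The sketch below assumes the 4.5 V internal reference and a PGA gain of 24 (a common OpenBCI default); both are assumptions to check against your own board's configuration.

```python
def sign_extend_24(b0, b1, b2):
    """Combine three bytes (MSB first) into a signed 24-bit two's-complement integer."""
    raw = (b0 << 16) | (b1 << 8) | b2
    if raw & 0x800000:  # sign bit set: value is negative
        raw -= 1 << 24
    return raw


def counts_to_microvolts(counts, vref=4.5, gain=24):
    """Convert ADC counts to microvolts at the input (assumed vref and gain)."""
    lsb_volts = (2 * vref / gain) / (1 << 24)
    return counts * lsb_volts * 1e6


print(counts_to_microvolts(sign_extend_24(0x80, 0x00, 0x00)))  # -187500.0, i.e. -VREF/gain
```

With these settings one count is roughly 0.022 µV, which is why a 24-bit converter is useful for signals of only a few microvolts.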

Software

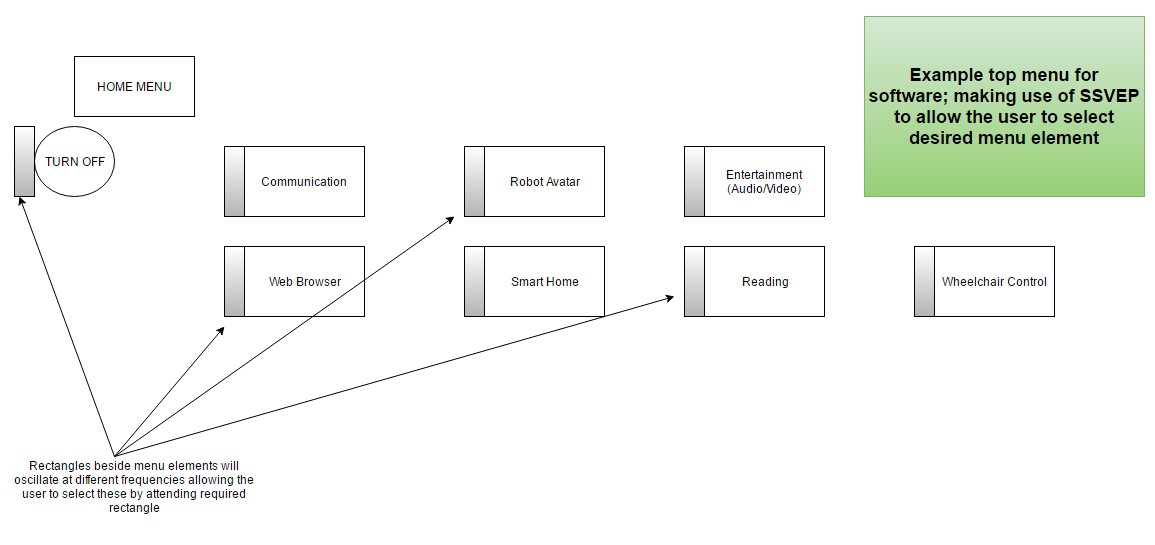

The menu giving access to the various software modules will be SSVEP-based, with rectangles alongside the menu elements flickering at different frequencies; the user will attend to the rectangle corresponding to the desired menu element in order to select it.
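A common way to get stable flicker frequencies on a monitor is to toggle each rectangle on a whole number of display frames, so that the achievable frequencies are the refresh rate divided by an integer. The sketch below assumes a 60 Hz display and hypothetical menu labels, and skips frame counts that are multiples of counts already in use, so that no item's frequency is a harmonic of another's (which would make the SSVEPs harder to tell apart).

```python
def frame_pattern(frames_per_cycle):
    """On/off state for each frame of one flicker cycle (roughly 50% duty cycle)."""
    on = frames_per_cycle // 2
    return [True] * on + [False] * (frames_per_cycle - on)


def assign_frequencies(menu_items, refresh_hz=60, min_frames=4):
    """Map each menu item to (flicker frequency in Hz, per-frame on/off pattern).

    Frame counts that are multiples of an already-used count are skipped, so that
    no assigned frequency is an integer multiple (harmonic) of another.
    """
    used = []
    assignments = {}
    n = min_frames
    for item in menu_items:
        while any(n % m == 0 for m in used):
            n += 1
        used.append(n)
        assignments[item] = (refresh_hz / n, frame_pattern(n))
        n += 1
    return assignments


# Hypothetical top-level menu.
menu = ["Communication", "Web browser", "Robot avatar", "Wheelchair", "Smart-home"]
for item, (freq, pattern) in assign_frequencies(menu).items():
    print(f"{item}: {freq:.2f} Hz, {len(pattern)} frames per cycle")
```

While rendering, frame k would show an item's rectangle whenever `pattern[k % len(pattern)]` is True.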

Communication Module

This is a software module that allows the user to select letters and numbers from a grid using either p300 or SSVEP. The selected strings can be sent to text-to-speech, or to a browser, SMS gateway, email software, the robot avatar's text-to-speech, etc.
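How selected symbols might be assembled into strings and routed to an output can be sketched as a small buffer class. Everything here is hypothetical: the special symbols ("_" for space, "<BKSP>" for delete), the output names, and the handler functions, which in the real system would wrap a local text-to-speech engine, the robot link, an SMS gateway and so on.

```python
from typing import Callable, Dict, List


class Composer:
    """Builds a string from speller selections and sends it to a chosen output."""

    def __init__(self) -> None:
        self.buffer: List[str] = []
        self.outputs: Dict[str, Callable[[str], None]] = {}

    def register(self, name: str, handler: Callable[[str], None]) -> None:
        """Add an output (e.g. local text-to-speech) under a menu name."""
        self.outputs[name] = handler

    def select(self, symbol: str) -> None:
        """Apply one symbol chosen from the grid."""
        if symbol == "<BKSP>":
            if self.buffer:
                self.buffer.pop()
        elif symbol == "_":
            self.buffer.append(" ")
        else:
            self.buffer.append(symbol)

    def send(self, name: str) -> str:
        """Pass the composed text to the named output and clear the buffer."""
        text = "".join(self.buffer)
        self.outputs[name](text)
        self.buffer.clear()
        return text


composer = Composer()
composer.register("speak", lambda text: print(f"[TTS] {text}"))  # stand-in for a real TTS engine
for symbol in ["H", "I", "_", "<BKSP>", "!"]:
    composer.select(symbol)
composer.send("speak")  # prints "[TTS] HI!"
```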

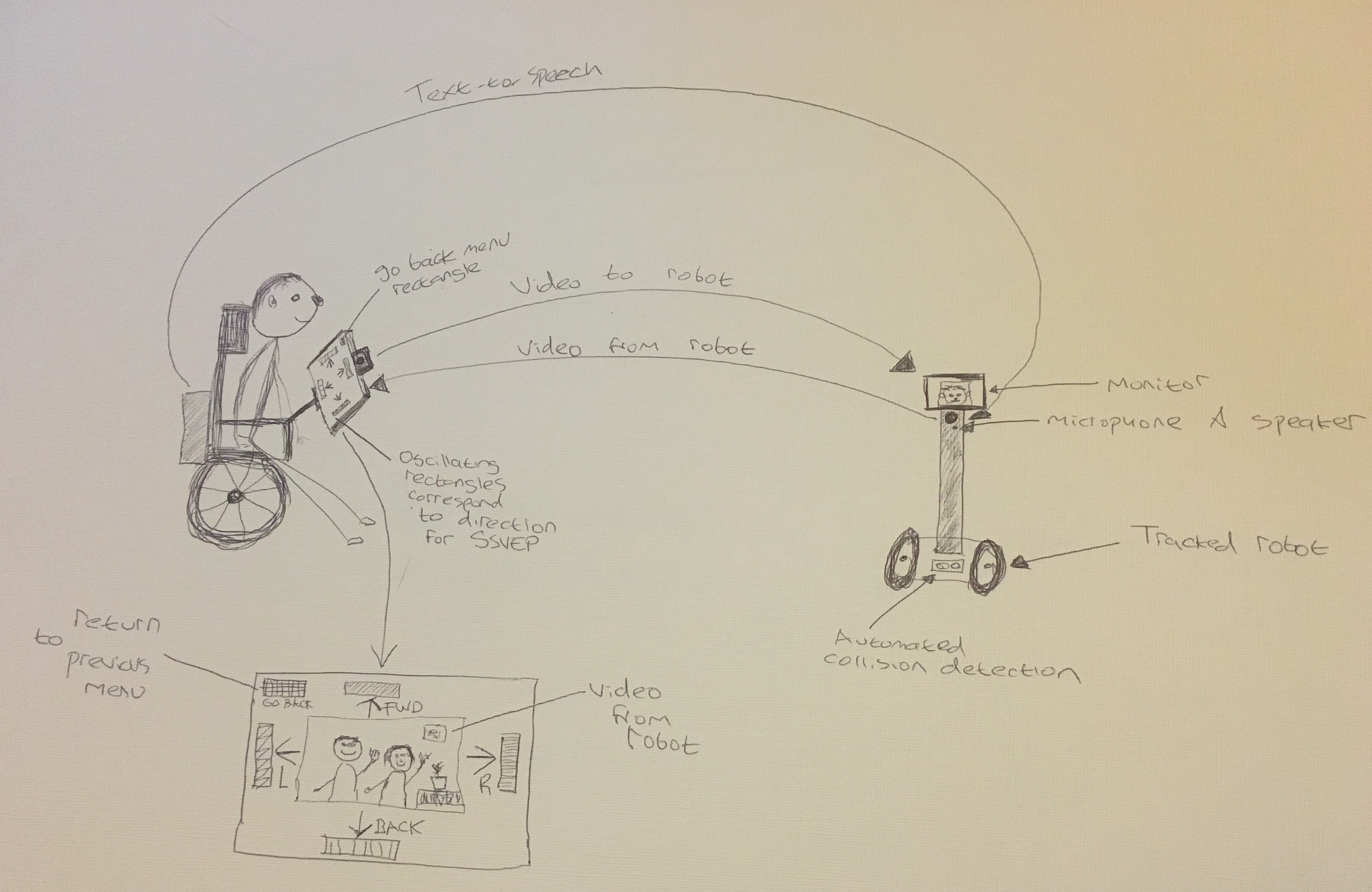

Robotic Avatar Module

This is a software and hardware module. When the user is only able to move slowly in a wheelchair (or not at all, if feeling unwell and confined to bed), it could be frustrating to hear noises etc. and wonder what they are. Users may also wish to explore the garden and look at plants, ponds etc., or watch TV with a friend whilst the friend is in another room and the user is in bed. A robot avatar makes this possible. It is simply a tracked robot with a ~1 m vertical extension featuring a video camera, microphone, LED display, and speaker.

Example features of robotic-avatar:

- The robot can be controlled directly, or move semi-autonomously to favourite locations.

- An automated rescue feature lets the robot get itself to safety if it gets stuck in a corner, which would otherwise frustrate the user.

- The robot could feasibly leave the house and accompany a friend on visits to shops, parks, galleries, museums, and car rides, communicating via 4G with the user at home. This is obviously not ideal, since the person would prefer to visit in person!

- The robot could feature a Nerf gun to attract attention (an idea copied from EyeDriveOMatic!)

The robot avatar's direction will be controlled using SSVEP, and text composed in the communication module will be spoken by the robot via text-to-speech. Video is sent both ways between user and robot (if desired by the user).
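The control computer talks to the robot over a WiFi (TCP) link. The wire format is not specified in this write-up, so the sketch below assumes a hypothetical line-based text protocol: one UTF-8 command per line, such as `LEFT` or `SAY hello`. The mapping from SSVEP targets to drive commands is likewise illustrative.

```python
import socket

# Hypothetical mapping from the SSVEP target the user attended to a drive command.
SSVEP_TO_COMMAND = {
    "up": "FORWARD",
    "down": "BACKWARD",
    "left": "LEFT",
    "right": "RIGHT",
    "centre": "STOP",
}


def encode(command: str, argument: str = "") -> bytes:
    """Frame one command as a UTF-8 line terminated by a newline."""
    line = f"{command} {argument}".strip()
    if "\n" in line:
        raise ValueError("commands must not contain newlines")
    return (line + "\n").encode("utf-8")


def send_command(sock: socket.socket, command: str, argument: str = "") -> None:
    """Write one framed command to a connected TCP socket."""
    sock.sendall(encode(command, argument))
```

On the control computer, `sock` could come from `socket.create_connection((ROBOT_HOST, ROBOT_PORT))`, where the host and port are placeholders; the robot side would read lines and dispatch them to its motor driver and speaker. A line-based format keeps message framing trivial on top of TCP's byte stream.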

Overview schematic for the robot-avatar, showing WiFi (TCP) link with the control computer:

Web Browser Module

This will make use of an SSVEP-based cursor to allow the user to select hyperlinks in HTML documents, trigger mouse onclick events, and control basic browser functions such as back/forward/home. The rectangles surrounding the arrow flicker at different frequencies to control cursor direction, whilst the arrow itself flickers to trigger a mouse click. For entering URLs, the communication module will be used. See this log for an example of an SSVEP-based cursor.
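The cursor logic itself is simple once the SSVEP classifier has decided which flickering target was attended. In the sketch below, the target names ("up", "down", "left", "right" for the surrounding rectangles and "arrow" for the arrow itself), the 20-pixel step and the screen size are all assumptions; actually injecting the click into the browser is left out.

```python
from dataclasses import dataclass

STEP = 20  # pixels moved per decision (assumed)

MOVES = {"up": (0, -STEP), "down": (0, STEP), "left": (-STEP, 0), "right": (STEP, 0)}


@dataclass
class Cursor:
    x: int
    y: int
    width: int
    height: int

    def apply(self, target: str) -> bool:
        """Update the position for one SSVEP decision; return True if a click was requested."""
        if target == "arrow":
            return True
        dx, dy = MOVES.get(target, (0, 0))
        # keep the cursor on screen
        self.x = min(max(self.x + dx, 0), self.width - 1)
        self.y = min(max(self.y + dy, 0), self.height - 1)
        return False
```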

Modules: Wheelchair Control, Reading/Entertainment, Assisted-Living Environment/Smart-Home

These will similarly make use of SSVEP-based menus. The lights and heating can be controlled via RF or TCP. These parts of the project will be the last to be implemented. They should be fairly straightforward for the wheelchair control and assisted-living modules, although perhaps trickier for the entertainment/reading module, which would rely on e.g. the Netflix SDK and Kindle SDK.

Open Source

Any software written for the BCI-based assisted living and robot-avatar system project is free software; you can redistribute it and/or modify it under the terms of the GNU General Public License version 3. See http://www.gnu.org/licenses/

Any hardware plans for the BCI-based assisted living and robot-avatar system project, including 3d design files and stl files, etc. are licensed under a Creative Commons Attribution-ShareAlike 4.0 International License. See http://creativecommons.org/licenses/by-sa/4.0/

Neil K. Sheridan
