Hello,

So, progress has been quick recently! Last time we saw the robots moving under control from ROS, so the next problem for a proper closed-loop system is working out where each robot actually is. The robots do have odometry in the form of their stepper motors: if we know how many steps each motor has taken, and in what order, we can calculate where we are. That is, as long as there is no dust, no hair, no mild breeze and no bad omens. To properly know where we are, we need some absolute positioning.
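To illustrate what stepper odometry involves, here is a minimal dead-reckoning sketch for a differential-drive robot. It is not the project's code. The geometry constants (`STEPS_PER_REV`, `WHEEL_RADIUS_M`, `WHEEL_BASE_M`) are hypothetical values picked for the example, and the robot is assumed to have one stepper per wheel.

```python
import math
from dataclasses import dataclass

# Hypothetical robot geometry: not given in the log, chosen only for illustration.
STEPS_PER_REV = 200 * 16      # e.g. a 1.8 degree stepper at 1/16 microstepping
WHEEL_RADIUS_M = 0.004        # assumed 4 mm wheel radius
WHEEL_BASE_M = 0.02           # assumed 20 mm between the wheels

@dataclass
class Pose:
    x: float = 0.0
    y: float = 0.0
    theta: float = 0.0  # heading in radians, relative to the x axis

def steps_to_distance(steps: int) -> float:
    """Distance rolled by one wheel for a signed number of motor steps."""
    return 2.0 * math.pi * WHEEL_RADIUS_M * steps / STEPS_PER_REV

def integrate(pose: Pose, left_steps: int, right_steps: int) -> Pose:
    """Dead-reckon one small update of a differential-drive robot."""
    d_left = steps_to_distance(left_steps)
    d_right = steps_to_distance(right_steps)
    d_centre = (d_left + d_right) / 2.0          # distance moved by the midpoint
    d_theta = (d_right - d_left) / WHEEL_BASE_M  # change in heading
    # Use the mid-update heading for a slightly better arc approximation.
    mid = pose.theta + d_theta / 2.0
    return Pose(
        x=pose.x + d_centre * math.cos(mid),
        y=pose.y + d_centre * math.sin(mid),
        theta=pose.theta + d_theta,
    )
```

Because each heading change feeds into every later position update, the updates have to be applied in small increments and in the order the steps happened, which is where the "in what order" comes from. Any slipped step is integrated forever after, which is why an absolute reference is still needed.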

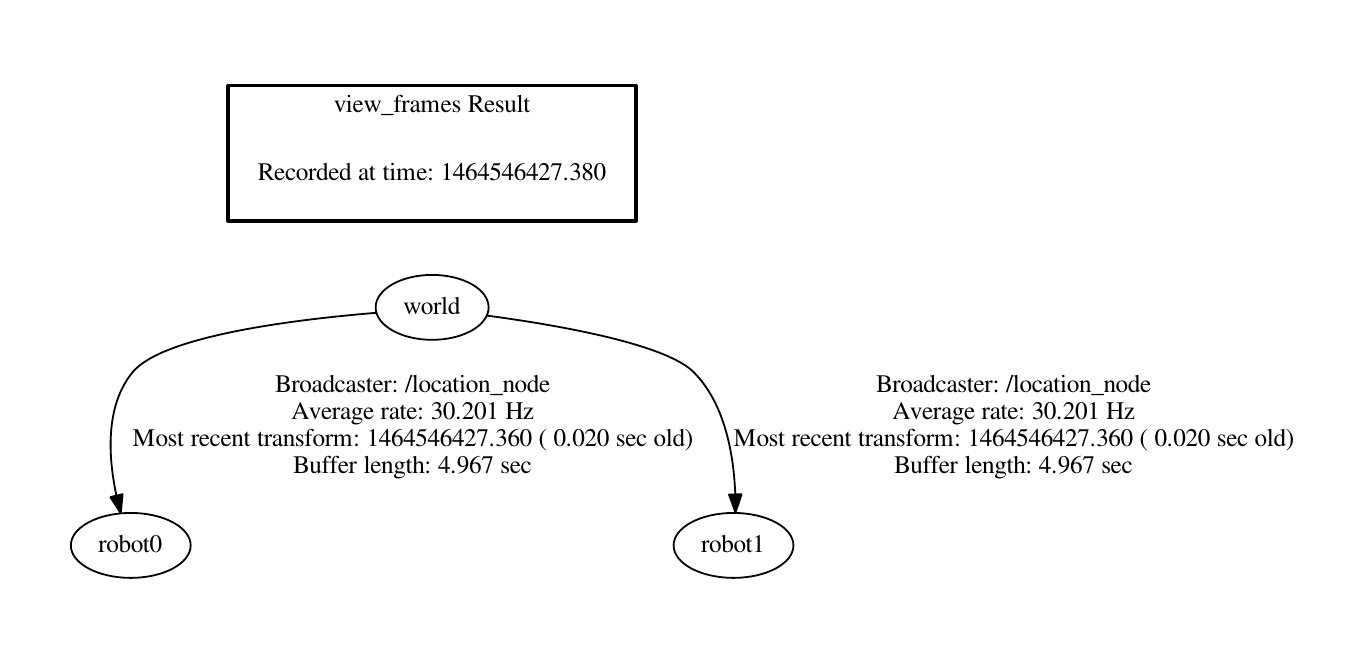

For this purpose we are developing a Computer Vision (CV) system to find the robots, calculate their position and rotation, and publish this onto the TF tree, which is ROS's neat little bag of tricks for keeping track of everything. We are using TF rather than a standard topic because TF does nice things like buffering past transforms, so you can see where things were at a critical moment in the past. Where were the robots the last time I sent them a movement command? Easy peasy. To control robots this fast well (at 5 g they can get going quickly), we need to be very careful about latency and about correcting for it.
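In ROS itself this buffering is handled by tf2 (for example `lookup_transform` on a `tf2_ros.Buffer`). The toy class below is only meant to show the idea behind "where was the robot at time t": keep time-stamped poses and interpolate between the two nearest samples. `PoseHistory` and its methods are hypothetical names, not the tf2 API, and the sketch assumes stamps arrive in increasing order.

```python
import bisect
import math

class PoseHistory:
    """Hypothetical stand-in for a time-indexed transform buffer (not tf2)."""

    def __init__(self, max_age: float = 10.0):
        self.max_age = max_age                         # seconds of history kept
        self.stamps: list[float] = []
        self.poses: list[tuple[float, float, float]] = []  # (x, y, theta)

    def add(self, stamp: float, x: float, y: float, theta: float) -> None:
        # Assumes stamps arrive in increasing order, as camera frames do.
        self.stamps.append(stamp)
        self.poses.append((x, y, theta))
        # Forget anything older than max_age.
        cutoff = stamp - self.max_age
        while self.stamps and self.stamps[0] < cutoff:
            self.stamps.pop(0)
            self.poses.pop(0)

    def lookup(self, stamp: float) -> tuple[float, float, float]:
        """Linearly interpolate the pose at `stamp`."""
        if not self.stamps or not (self.stamps[0] <= stamp <= self.stamps[-1]):
            raise LookupError("requested time is outside the buffered history")
        i = bisect.bisect_left(self.stamps, stamp)
        if self.stamps[i] == stamp:
            return self.poses[i]
        t0, t1 = self.stamps[i - 1], self.stamps[i]
        (x0, y0, a0), (x1, y1, a1) = self.poses[i - 1], self.poses[i]
        f = (stamp - t0) / (t1 - t0)
        # Interpolate the angle the short way round the circle.
        da = math.atan2(math.sin(a1 - a0), math.cos(a1 - a0))
        return (x0 + f * (x1 - x0), y0 + f * (y1 - y0), a0 + f * da)
```

With this, the pose at the moment a movement command was sent is just `history.lookup(command_stamp)`, even if the camera frames arrived before and after it.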

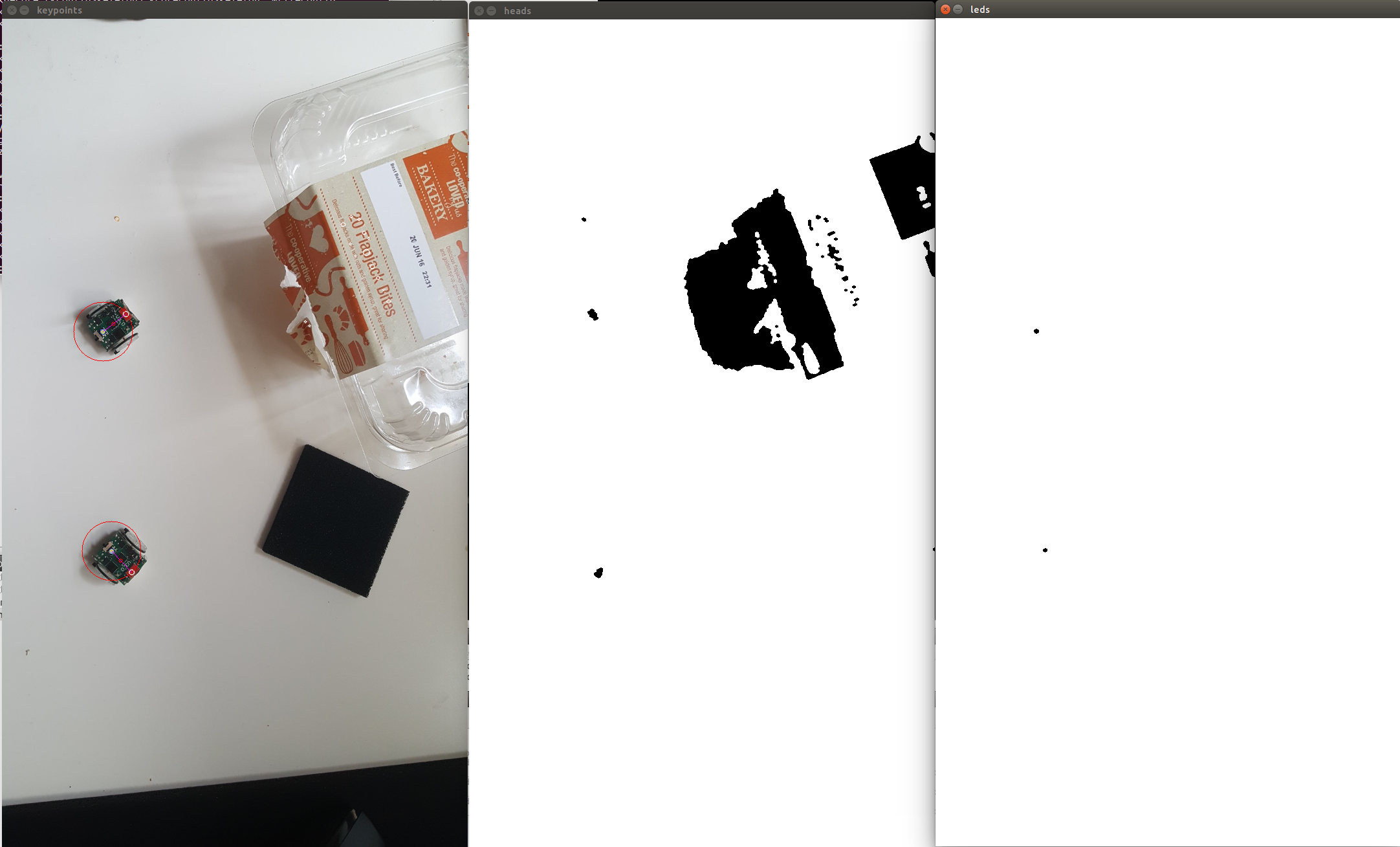

So how do we find the robots visually in a potentially noisy environment? There is an on-board LED, which we can set to any colour we want, in this case blue. The connector has also been painted with red nail varnish. Using these two points we can find the location and heading of the robot. To separate out these features, simple colour segmentation has been implemented, giving us many blobs of 'Red' and 'Blue'. We know how far the camera is from the ground, so we know how many pixels should separate the LED and the painted connector; we can also filter the blobs by size. Using the LED blobs as a reference, we measure the distance from each blue LED blob to every red blob, then select the red blob whose distance is closest to the expected value, throwing away any LEDs that don't have a good match. Then, fingers crossed, we have found all of the robots. Here is a demonstration: LEDs on the right, red 'front connector' blobs in the middle, and the result on the left. The red circle indicates the radius that was searched (red blobs on that circle score highly). There is also a thin red line indicating the heading of each robot, and a red dot indicating the centre of rotation. The cakes are empty, because I like cake.
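The matching step described above can be sketched in plain Python. This is an illustration under assumptions, not the project's code: it assumes the blobs have already been reduced to centroids and areas (in OpenCV that might be `cv2.inRange` followed by `cv2.connectedComponentsWithStats`), that the camera looks straight down at a flat floor so a simple pinhole model gives the expected separation, and that `focal_px`, `tol_px` and the area limits are hypothetical tuning parameters.

```python
import math
from dataclasses import dataclass
from typing import Optional

@dataclass
class Blob:
    x: float      # centroid column, pixels
    y: float      # centroid row, pixels
    area: float   # pixel count

def expected_separation_px(focal_px: float, led_to_connector_m: float,
                           camera_height_m: float) -> float:
    """Pinhole estimate of the LED-to-connector distance in pixels,
    assuming a downward-facing camera and a flat floor."""
    return focal_px * led_to_connector_m / camera_height_m

def match_robots(blue: list[Blob], red: list[Blob], sep_px: float,
                 tol_px: float, min_area: float, max_area: float
                 ) -> list[tuple[Blob, Blob]]:
    """For each plausible blue (LED) blob, pick the red blob whose distance
    is closest to sep_px; drop LEDs with no red blob within tol_px of it."""
    def plausible(b: Blob) -> bool:
        return min_area <= b.area <= max_area   # size filter

    reds = [r for r in red if plausible(r)]
    robots = []
    for led in filter(plausible, blue):
        best: Optional[Blob] = None
        best_err = tol_px
        for r in reds:
            # How far this red blob is from the expected search circle.
            err = abs(math.hypot(r.x - led.x, r.y - led.y) - sep_px)
            if err <= best_err:
                best, best_err = r, err
        if best is not None:
            robots.append((led, best))
    return robots
```

Scoring by distance from the search circle, rather than raw distance, is what makes red blobs lying on that circle win. Note that this simple version lets two LEDs claim the same red blob; a stricter matcher would resolve such conflicts.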

A little bit of vector algebra later and we have the position in the frame and the rotation relative to the x axis. This can then be published to TF!
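A minimal version of that vector algebra might look like this. The heading is the angle of the LED-to-connector vector from the x axis, and TF wants the rotation as a quaternion, which for a pure rotation about z is (0, 0, sin(θ/2), cos(θ/2)). `centre_offset` is a hypothetical parameter: where the centre of rotation sits along the LED-to-connector line is not stated in the log, so 0.5 (the midpoint) is only a placeholder.

```python
import math

def robot_pose(led: tuple[float, float], front: tuple[float, float],
               centre_offset: float = 0.5) -> tuple[float, float, float]:
    """Centre position and heading from LED and front-connector centroids.
    centre_offset is a hypothetical fraction along the LED->front vector."""
    dx, dy = front[0] - led[0], front[1] - led[1]
    theta = math.atan2(dy, dx)   # angle of the LED->front vector from +x
    cx = led[0] + centre_offset * dx
    cy = led[1] + centre_offset * dy
    return cx, cy, theta

def yaw_to_quaternion(theta: float) -> tuple[float, float, float, float]:
    """(x, y, z, w) quaternion for a rotation of theta about the z axis."""
    return (0.0, 0.0, math.sin(theta / 2.0), math.cos(theta / 2.0))
```

Image row coordinates usually grow downwards, so pixel points should be converted to a y-up frame (or the angle negated) before publishing, to keep ROS's right-handed convention.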

Joshua Elsdon