Holoscope - Superresolution Holographic Microscope

Subpixel imaging using the Raspberry Pi and an Android smartphone. The light source is realized with an LCD.

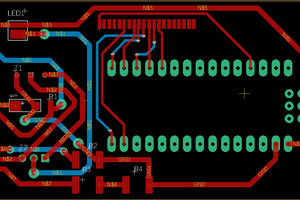

This is necessary for writing the illumination patterns/apertures.

A very cheap way to realize a spatial light modulator is to use an off-the-shelf LCD module driven by an Arduino. With the backlight removed and the LCD placed in the optical path, it acts as a very cheap mask. Controlling the device from MATLAB or Python is easy!

In my work I implemented a server <-> client interaction in which MATLAB sends commands and the Arduino executes them.

Writing an entire RGB image over the slow serial protocol provided by the Arduino is not very efficient and takes up to 2 minutes. Instead, one can define special patterns such as rings, segments, etc. and send only their parameters to the Arduino. The Arduino then selects the right shape and displays it using the parameters received over the serial connection, as seen in the image below.

Calling a pattern from MATLAB is as simple as this:

%% Declaration of Serial Port

com_port = 'COM3';

serial_port=serial(com_port);

serial_port.BaudRate=57600;

warning('off', 'MATLAB:serial:fscanf:unsuccessfulRead');

%Open Serial Port

fopen(serial_port);

pause(2)

% Set the global center/pixel of the LCD

setGlobalCentre( serial_port, x_centre, y_centre )

pause(2)

% Display aperture on LCD for Fourier ptychography (just an example!)

setScanAperture( serial_port, round((X(i) + x_centre)), round(Y(i) + y_centre), dAperture, exposureTime ) % show centre dot (0,0)

The Arduino code as well as the MATLAB and Python code can be found in my GitHub repository here:

https://github.com/beniroquai/MATLAB-ARDUINO-IL9341-LCD-Serial-Communication/tree/master
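To illustrate why sending only the pattern parameters is so much faster than sending a full image, here is a minimal Python sketch. The command format (`RING,cx,cy,r_in,r_out`) is a hypothetical one invented for this example, not the protocol used in the repository, and the 240x320 panel with 16-bit colour is an assumption. The estimate only counts time on the wire at 57600 baud, so it is a lower bound; protocol overhead on the Arduino side makes the real full-frame transfer slower.

```python
# Hypothetical ASCII protocol: one comma-separated line per pattern request.
# The real command format is defined by the Arduino sketch and may differ.

def build_command(shape, *params):
    """Encode a pattern request as a single newline-terminated ASCII line."""
    fields = [shape.upper()] + [str(int(round(p))) for p in params]
    return (",".join(fields) + "\n").encode("ascii")


def transfer_seconds(n_bytes, baud=57600, bits_per_byte=10):
    """Time on the wire for n_bytes with 8N1 framing (8 data + start + stop bit)."""
    return n_bytes * bits_per_byte / baud


# Assumed panel: 240 x 320 pixels, 2 bytes (16-bit colour) per pixel.
full_frame_bytes = 240 * 320 * 2
ring_command = build_command("ring", 120, 160, 10, 20)

full_frame_time = transfer_seconds(full_frame_bytes)    # tens of seconds
ring_command_time = transfer_seconds(len(ring_command))  # a few milliseconds
```

With a real port, the bytes returned by `build_command` would simply be written to the open serial connection; the Arduino then has to parse the line and draw the shape itself.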

This is the latest, and hopefully last, concept of how a portable lensless microscope built only from open-source and cheap components could look. It holds all necessary parts. The Raspberry Pi synchronizes image acquisition and hardware control and also acts as a server that pushes the images taken with the PiCamera to the handheld device. The CPU/GPU of the Pi is not strong enough to decode the images, so a smartphone acts as viewfinder and image processor.

To recover the phase, it is a good idea to capture images illuminated from multiple angles and at multiple Z-distances. An IFTA (Iterative Fourier Transform Algorithm) can make the phase converge by propagating the electromagnetic field (amplitude and phase) back and forth. I will explain this soon.

Therefore an old zoom lens acts as the Z-stage. It is precise enough to move the object. A used linear stage from eBay gives the required robustness.

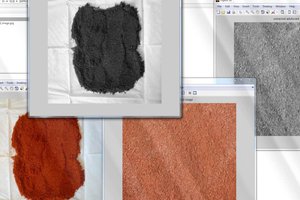

To shift the object on the sensor and reach sub-pixel resolution, a collimated high-power LED illuminates the LCD, whose backlight has been removed. By switching pixel patterns on and off, each pixel acts as a point source and thus forms an approximately spherical wave, the reference wave. Shifting the pixels also shifts the spherical wave, which results in a shift of the object on the sensor.

The drawings were made with Autodesk Inventor 2015 and will be uploaded to the GitHub repository. The parts have been 3D printed in PLA. It works!

So, what does this mean? Super-resolution? It simply means that it is possible to extend the support of the information available from the sensor by shifting the object's image. In the first section of the lensless project I mentioned that one captures an interference pattern of the object multiplied with the reference wave, and the reference wave itself: a so-called hologram. Waves are continuous, the detectors are not, so there is always a sampling which, in this case, depends on the size of the pixels.

The smaller the pixels, the higher the resolution, as long as there are no optics in between. The image below shows the process of discretization. Just imagine you scale an image from 200×200 to 100×100 px. The pixel size is now doubled, and a lot of information is irrecoverably lost.

Now shift this downsampled image over a high-frequency sampling grating, here represented by a mid-resolution image sensor. With each shift, every pixel integrates a different combination of sub-pixels. Knowing the exact position of each shift, one can calculate the sub-pixel intensities that make up the sub-image.

Finally you have an image with, in the best case, n times as many pixels, where n is the number of images used in the capturing process.
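The shift-and-combine idea can be sketched in one dimension with plain Python. This is only an illustration under idealized assumptions (exactly known integer sub-pixel shifts, no noise, each coarse pixel simply summing the fine samples it covers); the function names are made up for this example. Interleaving the shifted frames yields a signal sampled on the fine grid, still blurred by the width of one coarse pixel, which a later deconvolution step would have to address.

```python
def capture(scene, factor, shift):
    """Coarse frame: each pixel sums `factor` adjacent fine samples,
    with the pixel grid offset by `shift` fine samples."""
    n_pixels = (len(scene) - shift) // factor
    return [
        sum(scene[shift + i * factor: shift + (i + 1) * factor])
        for i in range(n_pixels)
    ]


def interleave(frames):
    """Combine frames taken at shifts 0, 1, ..., factor-1 onto the fine grid."""
    factor = len(frames)
    n_pixels = min(len(frame) for frame in frames)
    combined = []
    for i in range(n_pixels):
        for shift in range(factor):
            combined.append(frames[shift][i])
    return combined


# Fine-grid "scene" (12 samples) seen through pixels that are 3 samples wide.
scene = [0, 0, 5, 9, 5, 0, 0, 2, 8, 2, 0, 0]
factor = 3
frames = [capture(scene, factor, shift) for shift in range(factor)]
combined = interleave(frames)
```

Each single frame has only 3 or 4 pixels, while `combined` has 9 samples spaced one fine sample apart: sample k equals the sum of the scene over the pixel-wide window starting at k.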

Many approaches in the past simply shifted the object or the sensor mechanically. Mechanics in optics are always a problem, because in most cases many things change that should ideally stay the same. Another approach was investigated by Ozcan et al., who virtually shifted the light source. The following graphic shows that the effect is more or less the same: it results in a shift of the hologram on the sensor. If the distance between the LEDs and the object is large enough compared to the one between the object and the detector, the shift is linear.

By switching the LEDs 1..5 on and off sequentially one gets 5 shifted images, which ideally results in effective pixels that are smaller by a factor of sqrt(5).
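The two relations above can be written down as a small Python sketch. The shift formula follows from similar triangles for a point source far above the object; the distances and the source shift in the example are hypothetical values chosen for illustration, not measurements from this setup, and the sqrt(n) gain is the ideal case for shifts distributed over a two-dimensional grid.

```python
import math


def hologram_shift(source_shift, z_source_object, z_object_sensor):
    """Lateral shift of the hologram on the sensor for a lateral source shift.
    The hologram moves opposite to the source, scaled by the distance ratio."""
    return -source_shift * z_object_sensor / z_source_object


def effective_pixel(pixel_size, n_images):
    """Ideal effective pixel size after combining n shifted images (2D case)."""
    return pixel_size / math.sqrt(n_images)


# Hypothetical geometry in micrometres: source 50 mm above the object,
# sensor 1 mm below it, source moved sideways by 100 um.
shift_on_sensor = hologram_shift(100.0, 50000.0, 1000.0)   # -2.0 um
pixel_after_5 = effective_pixel(1.12, 5)                   # about 0.5 um
```

Because the distance ratio is small, a comparatively coarse source shift produces a sub-pixel shift on the sensor, which is what makes a low-resolution display usable as the shifting element.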

But as I mentioned earlier, the LED approach was time-consuming and its efficiency is not great. The light distribution is also not great if you are not working precisely, and I am not. Definitely! Using an old DMD projector chip does everything at once: it provides the pupil shift, has high efficiency, is affordable as an off-the-shelf part, and you can even control the degree of coherence. All of this is possible simply by writing different patterns to the DMD chip.

The shift can be adjusted, even though it is always a discrete shift. The pattern (seen below) was displayed over an Android MHL connection, an HDMI standard for mobile devices. Google allows Android devices to use secondary screens in their apps. Great stuff, but a hassle to debug. Anyway, I will publish the code on GitHub in the future!

The shift can be calibrated after the experiment with an OpenCV implementation of the optical flow algorithm.

Optical flow? This is a class of algorithms that calculate the shift between two images. Just imagine a car moving from left to right (frame1 -> frame2). The difference is then the "flow" of the car. This is often used to detect motion, for example.

It detects anchor points in the initial frame and tries to find them in the next frame. The movement of each anchor produces a flow map as seen below. One could simply calculate the mean of all shifts and shift the second frame back to the original position, BUT:

As I mentioned earlier, the shift of the images is linear as long as the distance condition is fulfilled. In the experiments that was obviously not the case. The different patterns gave a weird magnification/distortion effect (like defocus) due to the point-source effect. The spherical wave coming from the point source gave a fish-eye-like distortion with higher magnification at the borders.

Therefore the "shift" map can be generated depending on the position in the image. The two images below show the corresponding shift...
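One simple way to turn the measured anchor displacements into a position-dependent shift map is to fit a model instead of taking the mean. The sketch below is an assumption about how this could be done, not the method used in the experiments: it fits shift = offset + slope * position along one image axis by ordinary least squares, where the slope captures a change in magnification. The anchor data are synthetic, and a real fish-eye-like distortion would need a higher-order model.

```python
def fit_linear_shift(positions, shifts):
    """Least-squares fit of shift = offset + slope * position (one image axis)."""
    n = len(positions)
    mean_x = sum(positions) / n
    mean_d = sum(shifts) / n
    sxx = sum((x - mean_x) ** 2 for x in positions)
    sxd = sum((x - mean_x) * (d - mean_d) for x, d in zip(positions, shifts))
    slope = sxd / sxx
    offset = mean_d - slope * mean_x
    return offset, slope


def shift_at(position, offset, slope):
    """Evaluate the fitted shift map at an arbitrary pixel position."""
    return offset + slope * position


# Synthetic anchors: a global shift of 1.5 px plus 1 % extra magnification.
anchor_x = [0.0, 100.0, 200.0, 300.0, 400.0]
anchor_dx = [1.5 + 0.01 * x for x in anchor_x]
offset, slope = fit_linear_shift(anchor_x, anchor_dx)
```

The same fit would be repeated for the other image axis; evaluating `shift_at` for every pixel then gives the map used to register the second frame onto the first.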

In the last post I was talking about the efficiency of the setup. When illuminating the sample with a butt-coupled LED one loses about 95% of the LED's light power. With the DMD the loss is a bit lower, and without the trouble of fitting the light guide into the LED's housing.

The spectral bandwidth of the in-line microscope should not exceed 20 nm, measured at full width at half maximum. A notch filter can be used for this. To get the highest power from the LED, one therefore has to determine which LED has the spectrum with the smallest bandwidth.
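The bandwidth limit translates directly into a temporal coherence length, which can be estimated with the common rule of thumb L_c ≈ λ² / Δλ. The sketch below is an order-of-magnitude estimate only; the exact prefactor depends on the shape of the spectrum. The 445 nm centre wavelength is the blue peak mentioned in this log, and 20 nm is the bandwidth limit stated above.

```python
def coherence_length_nm(center_wavelength_nm, bandwidth_nm):
    """Rule-of-thumb temporal coherence length: lambda^2 / delta_lambda."""
    return center_wavelength_nm ** 2 / bandwidth_nm


# Blue LED peak at 445 nm with 20 nm FWHM bandwidth.
length_nm = coherence_length_nm(445.0, 20.0)
length_um = length_nm / 1000.0   # roughly 10 micrometres
```

A coherence length of only about 10 µm is the reason the path difference between the interfering waves has to stay very small, and a narrower spectrum would relax that requirement at the cost of light power.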

In the graph below, the normalized emission spectrum of the projector (with RGB LEDs) is shown. The peak intensity is in the blue line at 445 nm. A shorter wavelength also makes it possible to image smaller details, as can be seen later.

To find the video signal that drives the blue LED, an RGB ramp is displayed over the VGA output of a laptop. The following graph shows the setting for the highest intensity at the smallest wavelength bandwidth: R=0, G=81, B=255. The other "pure" maxima (green and red peak) are much lower and decrease the overall efficiency.

After filtering the DMD signal, the spectrum looks as follows:

The rainbow in the graph shows a weird behaviour of the DMD. The DMD projector shows a specific colour by displaying the 3 channels (RGB) sequentially. The eye is not "recording" fast enough to see each channel separately, but the camera is. There is a "beat" (https://en.wikipedia.org/wiki/Beat_(acoustics)) between the capturing frame rate and the refresh rate of the DMD.
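The frequency of such a beat can be estimated with the usual aliasing relation: a periodic light source sampled at the camera frame rate appears to flicker at the distance between its frequency and the nearest multiple of the frame rate. The sketch below is a simplified model, and the 60 Hz and 29.97 fps values are hypothetical examples, not measured values for this projector or camera.

```python
def beat_frequency(refresh_hz, frame_rate_hz):
    """Apparent flicker frequency of a source switching at refresh_hz
    when it is sampled by a camera running at frame_rate_hz."""
    nearest_multiple = round(refresh_hz / frame_rate_hz) * frame_rate_hz
    return abs(refresh_hz - nearest_multiple)


# Hypothetical values: 60 Hz colour cycle, camera at 29.97 frames per second.
beat_hz = beat_frequency(60.0, 29.97)    # about 0.06 Hz
beat_period_s = 1.0 / beat_hz            # colour drifts over roughly 17 s
```

When the refresh rate is an exact multiple of the frame rate, the function returns zero and the colour content of every frame is the same, which is the condition one would aim for when synchronising the camera to the projector.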

This can be avoided by using a different LED (which means disassembling the setup) or by putting a capacitor in parallel, which smooths out the LED switching voltage.

The main advantage of this setup is that the highest intensity of the system is used while the spectral bandwidth is still quite small. This results in less pixel noise and better hologram reconstructions.

Efficiency of the coupling:

There are some losses which have to be taken into account: geometrical losses, for example, which result from the power that does not enter the fibre (diameter of fibre < source), and Fresnel losses, which correspond to the power lost due to radiation and reflection. Etendue ..

LED-Specs

Fiber-specs

Setup of the coupling and arranging the fibers

Alternative: Projector, DMD/DLP technology

The projector used is an old Optoma Pico PK 101, an LED projector with a 0.3-inch (7.62 mm) DLP chip at 480×320 px.

Principle and Setup of the new illumination system with the DLP

The colours are mixed either by a colour wheel or by PWM-driven RGB LEDs, which causes heavy interference over time. That is probably one of the major drawbacks of this system! The projector was then modified by fitting a high-power LED with a specific wavelength peak for fluorescent probes (GFP at 450 nm, royal blue).

Camera

LOGITECH WEBCAM

The first version of the Holoscope used an old webcam with a 720p chip of about 1.3 MP. The pixel size was estimated at 3 µm. The webcam has a USB connector which goes straight into the smartphone. Most newer smartphones can drive such cameras as a USB On-The-Go device. Webcam drivers are available on GitHub. This solution was quite nice, but the resolution is not good enough.

SONY XPERIA Z1

A used Sony XPERIA Z1 smartphone with a cracked screen, available for a reasonable price, has one of the best cameras in today's smartphones. The camera has a large 1/3.06-inch sensor with 20 megapixels, which results in a pixel size of 1.12 µm. Small! When disassembling the lens module, one is astonished that the entire module costs just 20 Euro. Engineering power at a glance. The aperture is F2.2 at a focal length of 28 mm (35 mm film equivalent).

A good side effect is that the software which reconstructs the object can run on the smartphone itself. It has a quad-core CPU and an OpenCL-capable GPU. A powerhouse for less than 100 Euro.

Another awesome aspect is that Android devices are able to drive MHL devices; MHL is the mobile standard for HDMI. The DMD projector has a VGA port, so you need the following chain:

Smartphone -> MHL-cable (which has a HDMI-port) -> HDMI-VGA-adapter -> projector.

Funny thing: it won't work out of the box, because the maximum resolution of the projector is lower than the standard MHL resolution of the Sony. I can't remember how, but I figured out that when entering #*#*SERVICE*#*# (SERVICE in T9 numbers) in the dialer, the service menu opens. Under settings, one can set the MHL resolution. Tadah, it works. After a reboot the phone always forgets this setting, so one needs to set it again.

Basics about spatial coherence:

No interference

From the figures above it can be seen directly that either the distance from the object to the sensor has to be small or the interfering partners have to be close together to get the required fringes.

Lensless Microscope – New Approach (Version 2)

When building the first version of the Holoscope with butt-coupled LEDs, it turned out to be really hard to put the fibres into the right position on the LEDs. The fibres are really thin and the efficiency degrades dramatically if the fibre is not positioned directly on the LED's die.

Another idea is to use a pico projector with a DMD display. This is a micro-mirror device with a matrix of thousands of small mirrors that can be switched on and off. The ratio of on-time to off-time gives the gray value. By illuminating the DMD sequentially with the three basic colours red, green and blue, one can mix all possible colours.

The new optical setup shows the illumination concept. A good side effect is that the degree of coherence can be set via the diameter of the aperture. The aperture can also be scanned across the matrix, which gives the required shift for super-resolution.

Different illumination patterns are possible (line instead of point, etc.), so this system has some advantages compared to a traditional light microscope. No expensive optics are needed which could aberrate the image. It is quite cheap: in total one spends about 200 Euro for the webcam, the DLP and so on.

Thanks to sub-pixel super-resolution one gets high-resolution images over a large FOV (field of view). The system is portable and more or less robust.

Introduction

Many health applications in developing countries need proper equipment at a low price. Many research groups have started to develop cheap diagnostic modules such as the Foldscope (the 1-dollar scope), the lensless on-chip microscope from MIT, or the CellScope (Waller) from Berkeley. TH Cologne definitely needs one too!

Germany was lacking such a system. The goals were:

Lensless Microscope – WHAT??

Starting from the figure above (well, still in German): there is a light source which is almost monochromatic, with a small bandwidth. This coherent light source makes it possible to capture fringes coming from diffraction at the object (you can see these rings around the flower in the grayscale image).

These fringes come from the interaction of the spherical waves emitted by the LED source with the transmission function of the object. For this, the coherence condition has to be fulfilled (temporal and spatial coherence). The LED is great because it meets this condition and does not produce any speckle pattern (spectral width ~20 nm, high efficiency, small chip ~ spatial coherence).

Interference is possible when the waves have a more or less fixed phase relationship to each other. Destructive and constructive interference of the spherical object wave and the object-filtered reference wave produce a so-called in-line hologram (look up the Gabor hologram on Wikipedia if you like).

The interference pattern exists only right after the object, so it is captured there with a sensor. This leads to the "on-chip" imaging methodology. Further shrinking the source shape enlarges the coherence distance. This is possible by placing a pinhole right after the LED, butt-coupling the LED into a waveguide, OR using a DMD/DLP projector…
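How much a smaller source helps can be estimated with the standard order-of-magnitude relation for an incoherent source: the transverse coherence width at a distance z is roughly wavelength * z / source diameter. The sketch below uses this relation without the shape-dependent prefactor, and the 50 mm distance and 100 µm source size are hypothetical values, not dimensions of this setup.

```python
def coherence_width_um(wavelength_um, distance_um, source_diameter_um):
    """Order-of-magnitude transverse coherence width at the object plane
    for an incoherent source of the given diameter."""
    return wavelength_um * distance_um / source_diameter_um


# Hypothetical setup: 445 nm light, source 50 mm from the object.
wide_source = coherence_width_um(0.445, 50000.0, 100.0)    # about 220 um
small_source = coherence_width_um(0.445, 50000.0, 50.0)    # twice as wide
```

Halving the source diameter doubles the coherence width, and the same width can be kept at half the distance, which is why a pinhole or a small lit region on the DMD allows a more compact system.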

Backpropagation of the field – magic field

Well, so the captured image is the interference, at a distance Z behind the object plane on the sensor, of two components:

After doing some magic with the numerical Fresnel integral we can simply back-propagate the electric field.
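To make the "magic" a little more concrete, here is a minimal one-dimensional sketch in plain Python. Note that it uses the angular spectrum method rather than the Fresnel integral, because it is short to write and exact for propagating waves; the naive DFT is only practical for tiny examples. The pixel size and wavelength are taken from this log, while the 50 µm distance and the test object are assumptions. The sketch back-propagates the full complex field; a real sensor records only the intensity, so the phase is missing and a twin image appears, which is what the iterative phase retrieval is meant to fix.

```python
import cmath
import math


def dft(values, inverse=False):
    """Naive discrete Fourier transform (O(n^2), fine for small examples)."""
    n = len(values)
    sign = 1 if inverse else -1
    result = []
    for k in range(n):
        total = sum(values[m] * cmath.exp(sign * 2j * math.pi * k * m / n)
                    for m in range(n))
        result.append(total / n if inverse else total)
    return result


def propagate(field, z, wavelength, pixel):
    """Angular-spectrum propagation of a sampled 1D complex field over z.
    A negative z propagates backwards. All lengths share one unit."""
    n = len(field)
    spectrum = dft(field)
    for k in range(n):
        # Signed spatial frequency of DFT bin k in cycles per unit length.
        fx = (k if k < n // 2 else k - n) / (n * pixel)
        arg = 1.0 / wavelength ** 2 - fx ** 2
        if arg >= 0:
            spectrum[k] *= cmath.exp(2j * math.pi * z * math.sqrt(arg))
        else:
            spectrum[k] = 0   # drop evanescent components
    return dft(spectrum, inverse=True)


# Opaque 4-pixel object in a uniformly lit field (lengths in micrometres).
obj = [1.0] * 32
obj[14:18] = [0.0] * 4
field_at_sensor = propagate(obj, 50.0, 0.445, 1.12)
hologram = [abs(u) ** 2 for u in field_at_sensor]        # what a sensor records
recovered = propagate(field_at_sensor, -50.0, 0.445, 1.12)
```

In this idealized case `recovered` matches `obj` to numerical precision, because propagation by +z and then -z multiplies each frequency component by a phase and its conjugate.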

The goal is to do it on a phone. Android + OpenCV will do the job. Most phones already have quad-core CPUs, so why not use them?

Due to the short coherence length, each fraction of the image can be backpropagated independently.

Setup

Following from the explanation above, one needs a more or less coherent source: an LED. It needs to be placed at a large distance from the object. One can "scale" the system by placing a pinhole aperture right after the LED. This decreases the light efficiency, but shrinks the system! Good parameters will be:

Optional: When trying to recover the object's phase (an object usually consists of amplitude information, e.g. dust, and phase information, e.g. cell structure), it is helpful to take several images at different Z-positions. The Z-resolution should be around 10-15 µm.
