DIY Stereo Camera

Creating an open-source, economical stereo camera dev kit for VR and 3D video.

https://hackaday.io/project/163679-luxonis-depthai/log/180804-announcing-opencv-ai-kit-oak

The newest stereo cameras have done most of what I was hoping to accomplish with this project. The OAK-D isn't super-cheap, but it has onboard processing, so it can be run from nearly any host.

I've just started playing with mine, but it was cheap enough that I grabbed three OAK-Ds in case I can get them working for a project.

While I still think that 2K cameras aren't enough for pure stereo vision with quality depth, I'm hoping the powerful onboard processor (the Intel Movidius Myriad X, the same chip used in the Neural Compute Stick 2) allows enough processing, including AI, to make the depth output high quality and clean.
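To make the resolution argument concrete, here is a minimal sketch of the standard pinhole stereo relations. Depth is Z = f * B / d, where f is the focal length in pixels, B the baseline and d the disparity in pixels. For a disparity error dd, the depth error is roughly dZ = Z^2 * dd / (f * B). The baseline, field of view and image widths below are illustrative assumptions, not OAK-D specifications. The point is that at a fixed field of view, doubling the horizontal resolution doubles f in pixels and roughly halves the depth error.

```python
import math


def focal_length_px(image_width_px: int, hfov_deg: float) -> float:
    """Pinhole focal length in pixels for a given image width and horizontal field of view."""
    return (image_width_px / 2) / math.tan(math.radians(hfov_deg) / 2)


def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth Z = f * B / d for a rectified stereo pair."""
    return focal_px * baseline_m / disparity_px


def depth_error(focal_px: float, baseline_m: float, depth_m: float,
                disparity_error_px: float = 1.0) -> float:
    """Approximate depth uncertainty dZ ~= Z^2 * dd / (f * B)."""
    return depth_m ** 2 * disparity_error_px / (focal_px * baseline_m)


if __name__ == "__main__":
    # Illustrative numbers only: 10 cm baseline, 90 degree HFOV, target at 3 m.
    baseline, hfov, target = 0.10, 90.0, 3.0
    for width in (1280, 1920, 3840):
        f = focal_length_px(width, hfov)
        err_cm = depth_error(f, baseline, target) * 100
        print(f"{width:5d} px wide: f = {f:7.1f} px, depth error at {target} m = {err_cm:.1f} cm")
```

With these assumed numbers, the 3840 px case has half the depth error of the 1920 px case at the same distance.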

As time goes on, more and more options are becoming available. This is, of course, what I expected; I only hoped to be farther along on this project when the time came.

Right now, there is a Kickstarter running for a new SoC board. You can find it at https://www.kickstarter.com/projects/1771382379/firefly-rk3399-six-core-64-bit-high-performance-pl?ref=user_menu . This board is interesting because it has dual MIPI CSI ports, which, as you know, are critical for moving this project forward. The board is rather pricey at $160 each (a few $140 early-bird units were available at the time of writing). I've sent a message to the makers of this board and hope to talk with them more about its future development.

Another project I've been following since Maker Faire this summer is http://www.sub2r.com/ . They're aiming to offer FPGA-powered cameras. They do intend to offer dual-camera boards, but interestingly, because of the FPGA and the design, it's also possible to link two of their cameras into a makeshift dual-camera setup. This is an extremely pricey but amazingly powerful system, since you can control everything, all the way down to the firmware. They're hoping to demonstrate full-frame uncompressed 4K video in the next few weeks, and I'm excited to see that. I've been in contact with the men behind the project; they're keeping me up to date on developments and are excited about getting their cameras into our hands.

Finally, I've gotten myself a Lytro Illum camera. These are interesting because they can do a lot of stereoscopic camera tricks with a single lens! By capturing the light field instead of just a 2D array of pixels, the camera can recreate the 3D environment after the fact. This is amazingly cool, and it lets you play with 3D photos using a single-lens camera. The company behind it is actually developing the technology into high-end VR camera systems.

The project itself is still in a sort of holding pattern, but I'm excited to see what comes out soon. As always, if you're willing to help out, feel free to toss me a line and let me know what you'd be interested in doing.

I recently purchased a Lytro Illum camera. This camera is much farther from my goal of a DIY stereo camera, but it provides some interesting features that I think are worth talking about. The Lytro is a light field camera, meaning it doesn't just capture a 2D image. Along with the "normal" color and intensity data, it records another piece of information: direction. This lets the camera do many of the things stereoscopic cameras do, with a single sensor!

This is pretty incredible, as it allows 3D photos from a single lens and eliminates many of the calibration issues of a stereo camera. It does cause other issues: you're relying on highly technical work done by other people and can't get to all the data. Lytro does have some very open scripts (written in Python) that let you do a lot with the camera and its data, but I'm still checking all that out.

In general, Lytro is not going to be the answer for a DIY camera, but it's useful to see what the different technologies let us do.

Sorry about the lack of updates. This was not because of a lack of interest or effort. Instead, it was primarily due to a lack of breakthroughs: I've been working on the project but have not found anything particularly interesting.

VisualSFM

VisualSFM has proven to be an annoying, if capable, program. It's buggy, it's slow, and it's extremely frustrating due to a lack of control and error messages. It seems to have been developed haphazardly by someone who doesn't care about providing information to the user. It doesn't fail gracefully and often requires redoing large amounts of work after a crash.

It's still the best solution I've found so far, but ideally we could abandon it: it's a poorly designed package built for research more than practical use. Further, it has poor native support for stereo vision and is extremely slow; it can take 24 hours to process a stream of photos. This is in contrast to ZEDfu, which can do real-time analysis of a video stream.

ZEDfu

ZEDfu has proven to have serious drawbacks as well. It seems to scale poorly: if you use it for a sustained period of time, it starts "skipping" and losing tracking. This can be remedied by recording a video and processing it later, but that leaves the problem of not knowing when you've lost tracking.

The program also only processes a video as a stream, meaning that even if it regains tracking later on, it cannot "recover" the middle chunk to create a contiguous map. You can start mapping at any point in a video, but it will only continue on from that point, which leaves you with multiple separate maps. ZEDfu is clearly best suited for short live maps.
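One way to work around this is to log a per-frame tracking flag while recording. You can then split the recording into contiguous tracked segments and map each one separately. This is a sketch under assumptions: the list of booleans is a hypothetical stand-in for whatever tracking status your SDK reports, and no specific ZED API is implied.

```python
from typing import List, Sequence, Tuple


def tracked_segments(tracking_ok: Sequence[bool], min_length: int = 1) -> List[Tuple[int, int]]:
    """Return half-open (start, end) frame ranges where tracking was continuously OK.

    `tracking_ok` is a hypothetical per-frame flag logged during recording.
    Segments shorter than `min_length` frames are dropped.
    """
    segments = []
    start = None
    for i, ok in enumerate(tracking_ok):
        if ok and start is None:
            start = i  # tracking (re)acquired: open a new segment
        elif not ok and start is not None:
            if i - start >= min_length:
                segments.append((start, i))  # tracking lost: close the segment
            start = None
    if start is not None and len(tracking_ok) - start >= min_length:
        segments.append((start, len(tracking_ok)))  # segment runs to the end of the video
    return segments


if __name__ == "__main__":
    flags = [True, True, False, False, True, True, True, False, True]
    for start, end in tracked_segments(flags):
        print(f"map frames {start}..{end - 1}")
```

Each returned range is a candidate starting point for a separate mapping pass. `min_length` lets you skip brief re-acquisitions that are too short to be useful.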

Other tools may be useful for combining the multiple maps created by ZEDfu. I've been checking out point cloud tools that might combine maps automatically. This is as difficult a problem as creating the maps (it involves many of the same problems), but I've found that MeshLab can do a decent job once it's been given some corresponding points manually. This is less than ideal as it requires manual intervention, but it may be possible to improve on those algorithms to create a better automated process.
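The manual step (picking a few points that appear in both maps) is enough to compute a rigid alignment between the maps. Below is a minimal sketch of the standard least-squares solution, the Kabsch/SVD method, using NumPy. It is not necessarily what MeshLab does internally, and in practice you would refine the result with ICP. It assumes both maps share the same scale and that you have at least three non-collinear corresponding points.

```python
import numpy as np


def rigid_transform_from_points(src, dst):
    """Least-squares rotation R and translation t with dst ~= src @ R.T + t (Kabsch method).

    src, dst: (N, 3) arrays of corresponding points picked in two maps, N >= 3.
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    src_centroid = src.mean(axis=0)
    dst_centroid = dst.mean(axis=0)
    # Cross-covariance of the centred point sets.
    h = (src - src_centroid).T @ (dst - dst_centroid)
    u, _, vt = np.linalg.svd(h)
    # Flip the last axis if needed so R is a proper rotation (det = +1), not a reflection.
    d = np.sign(np.linalg.det(vt.T @ u.T))
    r = vt.T @ np.diag([1.0, 1.0, d]) @ u.T
    t = dst_centroid - r @ src_centroid
    return r, t


def apply_transform(points, r, t):
    """Move an (N, 3) point cloud from the src map's frame into the dst map's frame."""
    return np.asarray(points, dtype=float) @ r.T + t


if __name__ == "__main__":
    # Four hand-picked points in map A, and the same points as seen in map B
    # (here map B is map A rotated 90 degrees about Z and shifted).
    map_a = np.array([[0, 0, 0], [1, 0, 0], [0, 1, 0], [0, 0, 1]], dtype=float)
    true_r = np.array([[0, -1, 0], [1, 0, 0], [0, 0, 1]], dtype=float)
    map_b = map_a @ true_r.T + np.array([1.0, 2.0, 3.0])
    r, t = rigid_transform_from_points(map_a, map_b)
    print(np.round(r, 6), np.round(t, 6))
```

Once R and t are known from the hand-picked points, `apply_transform` can move the entire second map into the first map's coordinate frame.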

Hardware

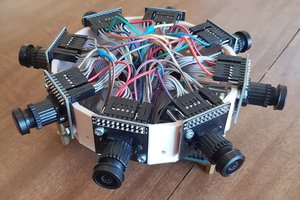

On the hardware front, little work has been done. I'm primarily waiting for the next-generation RealSense cameras and keeping an eye out for new SBCs that support multiple camera inputs at high speeds. Most of the work could be done with limited CPU, as long as the two cameras can be encoded together into a single stream. The Compute Module can do this for relatively low-resolution video (1920 px width maximum, leaving 960 px per camera in side-by-side mode). It's also possible to run the Compute Module at a lower frame rate, alternating left and right frames (giving 15 fps per camera at 1080p). Neither option is perfect, but as 4K video becomes common, future SBCs may offer better video encoding options.
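The two Compute Module workarounds trade resolution for frame rate in a simple way. Here is a small sketch of that arithmetic, assuming (as the figures above imply) an encoder limited to 1920 px of width at 30 fps. The 1080 px height in side-by-side mode is also an assumption, since the text only gives the width.

```python
from typing import Tuple


def side_by_side_per_camera(max_encode_width: int, height: int, cameras: int = 2) -> Tuple[int, int]:
    """Per-camera resolution when all cameras share one encoded frame horizontally."""
    return max_encode_width // cameras, height


def alternating_per_camera_fps(encode_fps: float, cameras: int = 2) -> float:
    """Per-camera frame rate when the encoder alternates between cameras frame by frame."""
    return encode_fps / cameras


if __name__ == "__main__":
    # Assumed encoder limit: 1920 px wide at 30 fps.
    w, h = side_by_side_per_camera(1920, 1080)
    print(f"side-by-side: {w}x{h} per camera at the full frame rate")
    print(f"alternating: 1920x1080 per camera at {alternating_per_camera_fps(30):g} fps")
```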

The funny thing is that nearly all the chips on these dev boards support multiple cameras; the interfaces just aren't broken out to headers. A custom board would easily solve this problem, but it is beyond my current ability. Another option is an FPGA. Even a basic FPGA should be able to read from 2 cameras, though it may not be able to combine and compress the video without stepping up to a higher-end FPGA. Further investigation is needed to get the hardware side figured out.

My initial analysis of a few available software suites follows. For those not interested in reading, VisualSFM is interesting for recreating static scenes but cannot (effectively) be used for more advanced reconstructions. Other software has serious limitations that prevent its use in various circumstances.

A major player in the "Structure From Motion" (or photogrammetry) space is VisualSFM: http://ccwu.me/vsfm/ .

VisualSFM is an application, free for non-commercial use, for Windows, OS X and Linux that stitches many images together into a 3D point cloud. The interesting thing about VisualSFM is that it does not require any information about where the cameras were situated. VisualSFM itself mostly provides a GUI around the software that does the heavy lifting, which includes SiftGPU and PMVS. SiftGPU detects and matches features between the photos so the camera positions can be solved, while PMVS creates a dense point cloud from the matched photos.

When you run the program, it goes through all the image files and matches points between them to find where the cameras were in 3D space. This means it can work with stereo images just as well as single images. I thought processing time would be the problem, but it turns out that finding where the cameras were located is one of the quickest steps in the whole pipeline. Adding a second camera immediately to the side of the first for each shot seems to add mere fractions of a second per camera. Optimization doesn't really seem necessary, but VisualSFM does have an advanced feature that lets you submit photos in "pairs". I haven't examined this feature in much depth yet because the gain is negligible at this point. As we develop the camera further, this might be a good feature to understand.
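The "pairs" idea is mainly about limiting how many image pairs get matched. Exhaustive matching of n images compares n(n-1)/2 pairs, while a stereo rig already tells you which images belong together. This is a sketch under assumptions: the file names (L0000.jpg/R0000.jpg) are hypothetical, and the plain-text output with one space-separated pair per line is an assumed format. Check VisualSFM's documentation for the exact pair-list format it expects.

```python
from typing import List, Tuple


def exhaustive_pair_count(n_images: int) -> int:
    """Number of image pairs compared when every image is matched against every other."""
    return n_images * (n_images - 1) // 2


def stereo_pairs(n_shots: int, window: int = 1) -> List[Tuple[str, str]]:
    """Candidate pairs for a stereo capture: each left/right pair, plus both images
    of a shot against both images of each of the next `window` shots.

    File names are hypothetical (L0000.jpg, R0000.jpg, ...).
    """
    shots = [(f"L{i:04d}.jpg", f"R{i:04d}.jpg") for i in range(n_shots)]
    pairs = []
    for i, (left, right) in enumerate(shots):
        pairs.append((left, right))  # the rig's own stereo pair
        for j in range(i + 1, min(i + 1 + window, n_shots)):
            for a in (left, right):
                for b in shots[j]:
                    pairs.append((a, b))  # neighbouring shots are likely to overlap
    return pairs


def write_pair_list(pairs: List[Tuple[str, str]], path: str) -> None:
    """Write one space-separated pair per line (assumed format)."""
    with open(path, "w") as f:
        for a, b in pairs:
            f.write(f"{a} {b}\n")


if __name__ == "__main__":
    shots = 100
    print("exhaustive:", exhaustive_pair_count(2 * shots))  # 200 images
    print("stereo + neighbours:", len(stereo_pairs(shots)))
```

With 100 stereo shots (200 images), this produces 496 candidate pairs instead of the 19,900 pairs exhaustive matching would compare. That is why pair lists start to matter as captures grow.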

VisualSFM does not natively support video files and cannot handle SBS images on their own. This is not a major problem, as converting SBS videos into images, with the left and right views split into separate locations, is easily automated using other software.
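Splitting side-by-side frames is easy to script. Here is a minimal, dependency-free sketch, assuming each decoded frame is a list of pixel rows with the left view in the left half. The left/ and right/ folder layout and the frame_000000.png naming are hypothetical. With NumPy/OpenCV frames, the same split is just `frame[:, :w // 2]` and `frame[:, w // 2:]`.

```python
from pathlib import Path
from typing import List, Sequence, Tuple, TypeVar

Pixel = TypeVar("Pixel")


def split_sbs_frame(frame: Sequence[Sequence[Pixel]]) -> Tuple[List[List[Pixel]], List[List[Pixel]]]:
    """Split one side-by-side frame (rows of pixels) into left and right views."""
    width = len(frame[0])
    if width % 2:
        raise ValueError(f"SBS frame width must be even, got {width}")
    half = width // 2
    left = [list(row[:half]) for row in frame]
    right = [list(row[half:]) for row in frame]
    return left, right


def output_paths(out_dir: str, frame_index: int) -> Tuple[Path, Path]:
    """Hypothetical layout: out_dir/left/frame_000042.png and out_dir/right/frame_000042.png."""
    name = f"frame_{frame_index:06d}.png"
    root = Path(out_dir)
    return root / "left" / name, root / "right" / name


if __name__ == "__main__":
    # A tiny 2x4 "frame": columns 0-1 are the left view, columns 2-3 the right view.
    frame = [["l00", "l01", "r00", "r01"],
             ["l10", "l11", "r10", "r11"]]
    left, right = split_sbs_frame(frame)
    print(left, right)
    print(output_paths("out", 42))
```

Keeping the same frame number in both folders preserves which left and right images belong together. That pairing is useful if you later want to give VisualSFM explicit stereo pairs.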

The major issues with VisualSFM are as follows:

In general, VisualSFM is a good tool for taking something static and importing it feature-complete into a 3D environment, but it requires additional processing and skill to make a perfect environment. There is a great tutorial from a guy named Jesse who learned VisualSFM in a few weeks and created a complete workflow. You can find the tutorial at http://wedidstuff.heavyimage.com/index.php/2013/07/12/open-source-photogrammetry-workflow/ . I highly recommend it, as it gives you a very good idea of what is going on during each step of the process while also guiding you clearly through two extremely complicated programs.

In that same tutorial, he mentions 123D Catch as a similar tool. It does work almost exactly the same way, but the quality of the final results is much lower and the restrictions are much worse. For these reasons, I haven't examined 123D Catch very extensively. I did feed it the same data, and it returned workable results, but the errors were much more evident in the 123D Catch output, and the quality was much lower.

I downloaded and ran ReconstructMe, but it requires depth cameras like the Kinect, which I've already discounted as untenable. It is unable to operate with the ZED or any other cameras I currently have, so I will have to address this one later....

With this project, I'm starting from square one. I don't have experience with cameras or with building boards this complicated. After some research, I've identified the following difficulties that I will have to overcome.

USB cameras are simply not an option. USB 3.0 is hard to find on dev boards, and it's impossible to sync two cameras that send their video over USB. In addition, mounting USB cameras is difficult when dealing with stereo calibration. Unless some new discoveries are made, USB is out.

MIPI CSI-2 is a high-speed, LVDS-style differential bus with very tight tolerances due to its speed. To use it, I will need to route many traces of identical length along different paths so that they don't interfere with each other. Ideally this would be done with as many layers as possible, but to keep the board price down, excessive layers should be avoided; hopefully it can be done reliably on 4 layers. It helps that we have 2 cameras with separate mounting points. The two cameras' trace groups might not need to match each other in length, and even if they're simply mirrored, that's easier than designing every trace individually.
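To see why length matching matters, note that a length mismatch turns directly into timing skew. Here is a rough sketch with two assumptions. The first is a propagation delay of about 6.7 ps/mm, a typical ballpark for inner-layer traces on FR-4 (outer-layer traces are somewhat faster). The second is a 1 Gbps lane, where one bit period is 1000 ps. Replace both with your stackup's and PHY's actual numbers.

```python
def unit_interval_ps(lane_rate_bps: float) -> float:
    """Duration of one bit on a lane, in picoseconds."""
    return 1e12 / lane_rate_bps


def skew_ps(length_mismatch_mm: float, delay_ps_per_mm: float = 6.7) -> float:
    """Timing skew caused by a trace length mismatch (delay figure is an assumed FR-4 ballpark)."""
    return length_mismatch_mm * delay_ps_per_mm


def skew_fraction_of_ui(length_mismatch_mm: float, lane_rate_bps: float,
                        delay_ps_per_mm: float = 6.7) -> float:
    """Skew expressed as a fraction of one bit period."""
    return skew_ps(length_mismatch_mm, delay_ps_per_mm) / unit_interval_ps(lane_rate_bps)


if __name__ == "__main__":
    for mismatch in (0.5, 2.0, 10.0):
        frac = skew_fraction_of_ui(mismatch, 1e9)
        print(f"{mismatch:4.1f} mm mismatch -> {skew_ps(mismatch):5.1f} ps "
              f"({frac:.1%} of a 1 Gbps bit period)")
```

The higher the lane rate, the shorter the bit period. The same millimetre of mismatch therefore eats a larger share of the timing budget.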

There are many choices for chips that have sufficient CSI lanes, but they all have problems of their own.

Many would require NDAs to get low-level access to the chip's GPU. This would be unfortunate as it makes the idea of open hardware difficult (if not impossible, depending on what exactly falls under the NDA).

Others cannot use all of their CSI lanes simultaneously (they are often designed for a front and a rear camera used one at a time), and you can't know that until AFTER you read the datasheet, which often requires an NDA.

Others are hideously expensive which would prevent their use in an accessible camera.

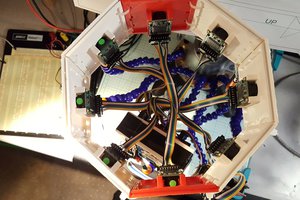

Dev kits with dual CSI headers are extremely difficult to find. The ones that exist today are:

| Board | Notes |
|---|---|
| Nvidia Jetson TX1 | It has several exposed CSI lanes, but they're on complicated connectors and would require a specialized breakout board. Further, its cost ($299 for the module alone and $599 for a full dev kit) puts it outside the reach of most hobbyists. |
| Raspberry Pi Compute Module | It is currently discontinued, meaning it is getting harder and harder to get hold of. It only supports 2 camera modules (the OV5647 and the Sony IMX219). This can't be extended because Broadcom has locked down the GPU on the chip and won't give anyone access to the documents and source code required to implement new cameras. It can't provide full-resolution encoding for both cameras in stereo mode, and it's rather slow, making additional development difficult. |
| DragonBoard 410c | It has several CSI lanes on a complicated connector. Its GPU is locked down nearly as hard as Broadcom's, but documentation may be available under NDA. There are only 6 CSI lanes (two 4-lane cameras would need 8), so any cameras used will probably only run at ~75% of their rated frame rate due to bandwidth limits. Support for CSI cameras seems to be non-existent despite many users asking. |
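The ~75% figure for the DragonBoard follows from simple lane counting. This sketch assumes that each camera wants 4 CSI lanes at full frame rate and that frame rate scales roughly linearly with available lanes. It ignores blanking and protocol overhead.

```python
def lane_limited_fps_fraction(available_lanes: int, cameras: int = 2,
                              lanes_per_camera: int = 4) -> float:
    """Fraction of each camera's rated frame rate the available lanes can carry (capped at 1.0)."""
    required = cameras * lanes_per_camera
    return min(1.0, available_lanes / required)


if __name__ == "__main__":
    frac = lane_limited_fps_fraction(6)  # DragonBoard 410c: 6 lanes shared by 2 x 4-lane cameras
    print(f"{frac:.0%} of rated frame rate, e.g. {30 * frac:g} fps for a 30 fps sensor")
```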

In addition to CPUs, there is the possibility of using FPGAs. FPGAs offer a lot of advantages over CPUs, but they also have some serious drawbacks. An FPGA can easily decode and convert the video from the cameras, but it could be difficult to get the video data out of the FPGA and onto a CPU for processing. FPGAs are also a lot more expensive, which could make the camera excessively expensive.

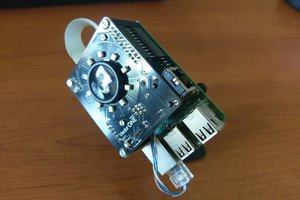

I have ordered the Jetson TX1 and the Compute Module dev kits. The Jetson has a lot of power that will be helpful during development, even if we move to a cheaper board later on. The Raspberry Pi has an existing workflow for 3D vision, so I can set it up quickly and begin experimenting with calibration.

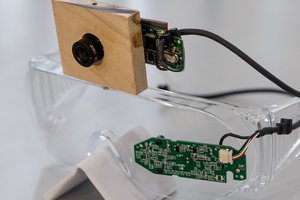

I have also bought some dedicated hardware in the form of a StereoLabs Zed. The Zed is a great stereoscopic camera that includes an accessible and powerful SDK.

I have ignored the RealSense and Kinect cameras for now, as they only have a single camera, which prevents...

I haven't seen this specific video before, but I have already looked at the Jetson TX1. The problems with it are really twofold. The first is cost: the TX1 costs $600 as a dev kit and $300 for just the module, which is more than I'd like to price the DIY camera at. The second is that writing the drivers requires the GPU docs, which Nvidia only provides under NDA. This is really a dealbreaker, as we want everything to be open source.

Finally, this particular video shows one more problem: they only allow 2 CSI lanes per camera, which limits the resolution to no better than 1080p (and I think even that requires some shortcuts).
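A rough bandwidth check shows why a 2-lane link is limiting. This sketch assumes 10-bit RAW sensor output and an assumed 1 Gbps per lane, and it ignores blanking and protocol overhead. Real CSI-2 lane rates depend on the SoC and PHY version, so plug in the actual figure.

```python
def raw_bitrate_bps(width: int, height: int, fps: int, bits_per_pixel: int = 10) -> int:
    """Uncompressed sensor bitrate, ignoring blanking and protocol overhead."""
    return width * height * fps * bits_per_pixel


def lanes_needed(width: int, height: int, fps: int, lane_rate_bps: int,
                 bits_per_pixel: int = 10) -> int:
    """Minimum whole number of lanes needed to carry the raw stream."""
    bitrate = raw_bitrate_bps(width, height, fps, bits_per_pixel)
    return -(-bitrate // lane_rate_bps)  # ceiling division on integers


if __name__ == "__main__":
    lane_rate = 1_000_000_000  # assumed 1 Gbps per lane
    for name, (w, h) in {"1080p": (1920, 1080), "4K UHD": (3840, 2160)}.items():
        gbps = raw_bitrate_bps(w, h, 30) / 1e9
        print(f"{name} @30 fps RAW10: {gbps:.2f} Gbps -> "
              f"{lanes_needed(w, h, 30, lane_rate)} lane(s)")
```

Under these assumptions, 1080p30 fits in a single lane. 4K at 30 fps needs about 2.5 Gbps, which is more than two 1 Gbps lanes can carry.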

I do actually own a Jetson TX1, mainly so I could play with the StereoLabs Zed camera. This camera is the subject of one of my logs and is a fascinating, cool piece of kit. It can record two separate 2K streams at 15 fps or 1080p at 30 fps. This is where I was able to verify that 1080p quality just isn't enough. The 2K mode wasn't a whole lot better, mainly because of the frame rate.

Please feel free to send me anything that you notice like this, I'm always looking at what is available and possible.

Robert Gowans

Mark Mullin

John Evans

Have you seen this?

The hardware is quite expensive but in terms of processing power it'll be overkill....