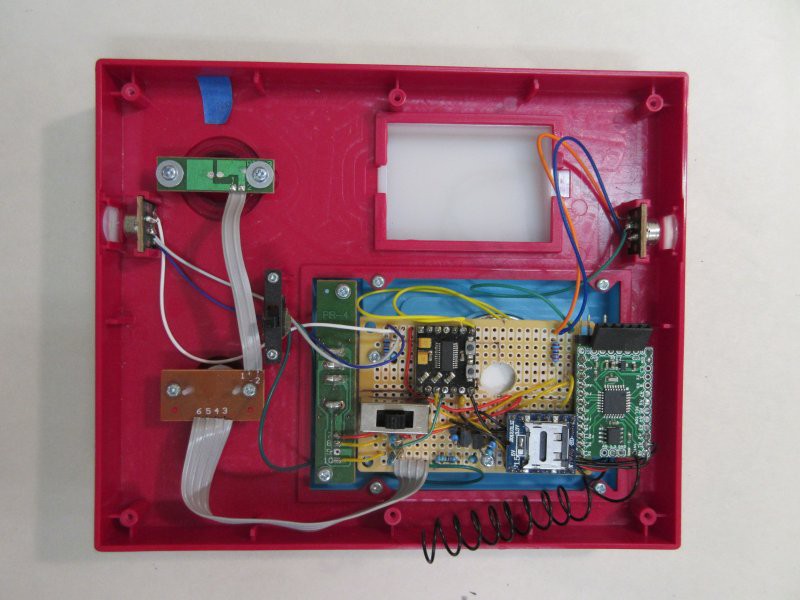

Here's a picture of one of the earliest Integrons. A very early Reactron is visible: a small Arduino clone with a HopeRF RFM12B transceiver, fitted to a wireless doorbell. The Arduino clone ran the code to integrate with my network. The doorbell provided a bi-directional human interface - push the button (input), then hear a sound and see a small LED light up (output). Rudimentary, but it was the first step toward a connected, human-integrating system.

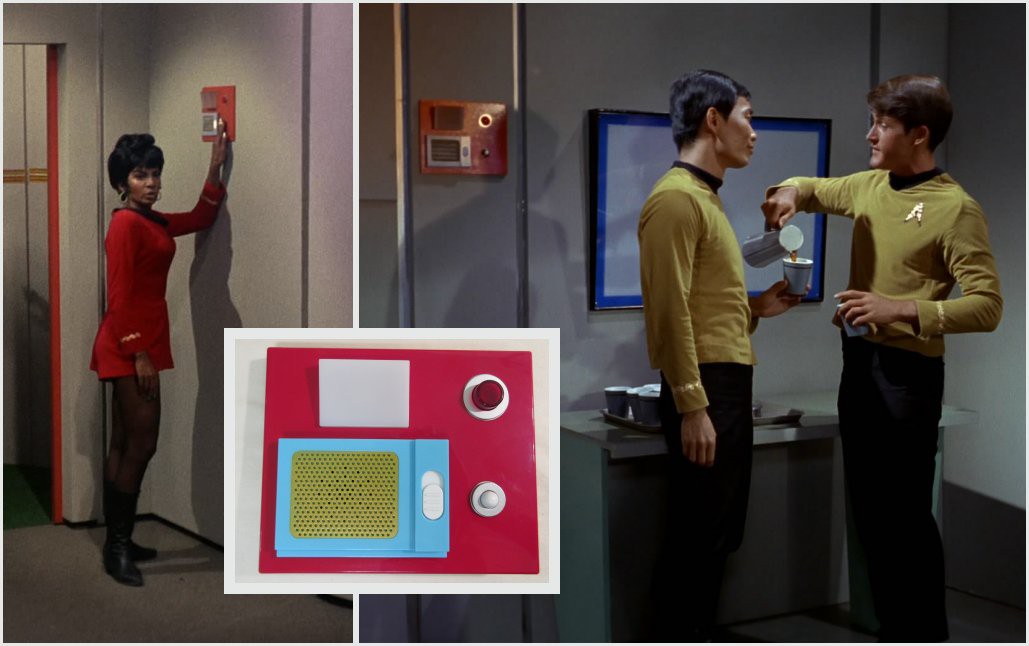

Here is a picture of a later, but still early, Integron. Originally a Star Trek door chime, it came with a control board, two PIR sensors, an LED, a few switches, and a speaker. With my upgrades it acted almost like the ubiquitous piece of hallway equipment on the show. The intent was to have an ever-present, "ambient" interface, even if the level of interface was just a light to be seen. Star Trek had it first! On the show, people were always only a few steps away from one of these devices.

I removed the control board and replaced it with one of my own, with a WTV020-SD-16P module, which can play 512 different sound files, and a PAM8803 amplifier. The Arduino clone in this picture is the green board at the right, and on the reverse is a HopeRF transceiver - the coil is the antenna.

This was a more sophisticated human interface. It could take movement and switch presses as input, and could light up and/or play sounds as output. It was a nice set of components and form factor for the price. I later added a microphone and did a bunch of work on voice control using frequency analysis of voice samples. (I gave up on this eventually: once the frequency analysis had normalized and simplified the data, ambient sound produced too many false positives and initiated actions I did not want. The ATmega328P is just not the right processor for speech analysis. Even when working flawlessly, it could only "recognize" a few token words with known patterns to match against, a small set of static commands.)
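To illustrate why normalized frequency analysis is prone to false positives, here is a minimal sketch of one way token-word matching could work. It is not the firmware that ran on the Integron. The Goertzel algorithm, the band frequencies, the function names, and the tolerance are all assumptions chosen for illustration. Each audio frame is reduced to a handful of band powers, normalized so loudness drops out, and compared against a stored template.

```python
import math

def goertzel_power(samples, sample_rate, freq):
    """Power of `samples` near `freq`, using the Goertzel recurrence."""
    n = len(samples)
    k = round(n * freq / sample_rate)          # nearest DFT bin
    coeff = 2.0 * math.cos(2.0 * math.pi * k / n)
    s1 = s2 = 0.0
    for x in samples:
        s0 = x + coeff * s1 - s2
        s2, s1 = s1, s0
    return s1 * s1 + s2 * s2 - coeff * s1 * s2

def band_profile(samples, sample_rate, bands):
    """Band powers scaled to sum to 1, so overall loudness is discarded."""
    powers = [goertzel_power(samples, sample_rate, f) for f in bands]
    total = sum(powers)
    if total == 0:
        return [0.0] * len(bands)
    return [p / total for p in powers]

def matches(profile, template, tolerance):
    """True when the summed absolute difference is within `tolerance`."""
    distance = sum(abs(a - b) for a, b in zip(profile, template))
    return distance <= tolerance
```

Because the profile is normalized, a faint background sound with a similar spectral shape looks the same as a spoken command, which is consistent with the false positives described above.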

The recorded sound files were initially a handful of signal noises, but then I added pre-recorded speech output like individual numbers and common words, so I could piece together meaningful voice responses, even if they were narrowly specific and mostly numerical. I did have 512 slots, after all. This worked well enough, but required me to maintain the sound file store, and ultimately I wanted a bit more sophistication. I decided to make the jump to a Linux SBC so I could run speech recognition as well as text-to-speech, for generalized voice input and output. I also wanted something nicer to look at than a bright red box on the wall, something with a more visible light and at least coarse gesture detection instead of merely motion detection.
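Piecing a response together from individual word clips can be sketched as follows. This is an illustrative sketch, not the code that ran on the board: the slot layout (which file number holds which word), the extra word "degrees", and the function names are all hypothetical. The idea is to break a number into the words that were recorded, then look up the slot for each word and play the slots in order.

```python
ONES = ["zero", "one", "two", "three", "four", "five", "six", "seven",
        "eight", "nine", "ten", "eleven", "twelve", "thirteen", "fourteen",
        "fifteen", "sixteen", "seventeen", "eighteen", "nineteen"]
TENS = ["twenty", "thirty", "forty", "fifty", "sixty", "seventy",
        "eighty", "ninety"]

# Hypothetical layout: each word's position in this list is its slot number.
WORDS = ONES + TENS + ["hundred", "degrees"]
SLOT = {word: index for index, word in enumerate(WORDS)}

def number_to_words(n):
    """Split an integer in 0..999 into the spoken words that make it up."""
    if not 0 <= n <= 999:
        raise ValueError("only 0..999 is covered by this sketch")
    words = []
    hundreds, rest = divmod(n, 100)
    if hundreds:
        words += [ONES[hundreds], "hundred"]
    if rest >= 20:
        tens, ones = divmod(rest, 10)
        words.append(TENS[tens - 2])
        if ones:
            words.append(ONES[ones])
    elif rest or not hundreds:
        words.append(ONES[rest])      # also covers a bare "zero"
    return words

def phrase_to_slots(words):
    """Map each word to the slot number of its recorded clip."""
    return [SLOT[word] for word in words]
```

For example, `phrase_to_slots(number_to_words(72) + ["degrees"])` gives the list of slots to play, one after another, to say a temperature reading. Any word that was never recorded raises a `KeyError`, which reflects the maintenance burden of keeping the sound file store complete.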

Kenji Larsen
