Almost all speaking people in the world don't know sign language, which creates a huge barrier between the deaf & mute community and the rest of the world. This barrier limits everyday communication and the flow of opportunities across it. Today, for a deaf or mute person to hold a job or do business, their employer typically needs to hire an interpreter for them. Many companies prefer to hire a speaking employee so that they can avoid the cost of an interpreter.

This project is a serious effort to tackle the technical problems of sign language translation so that we can take down the communication barrier between deaf & mute communities and the rest of the world. The requirements have been thought through carefully to make the Hands|On glove easy to use and adopt, so that people from deaf & mute communities can interact with the rest of the world and make their mark on it!

Hardware

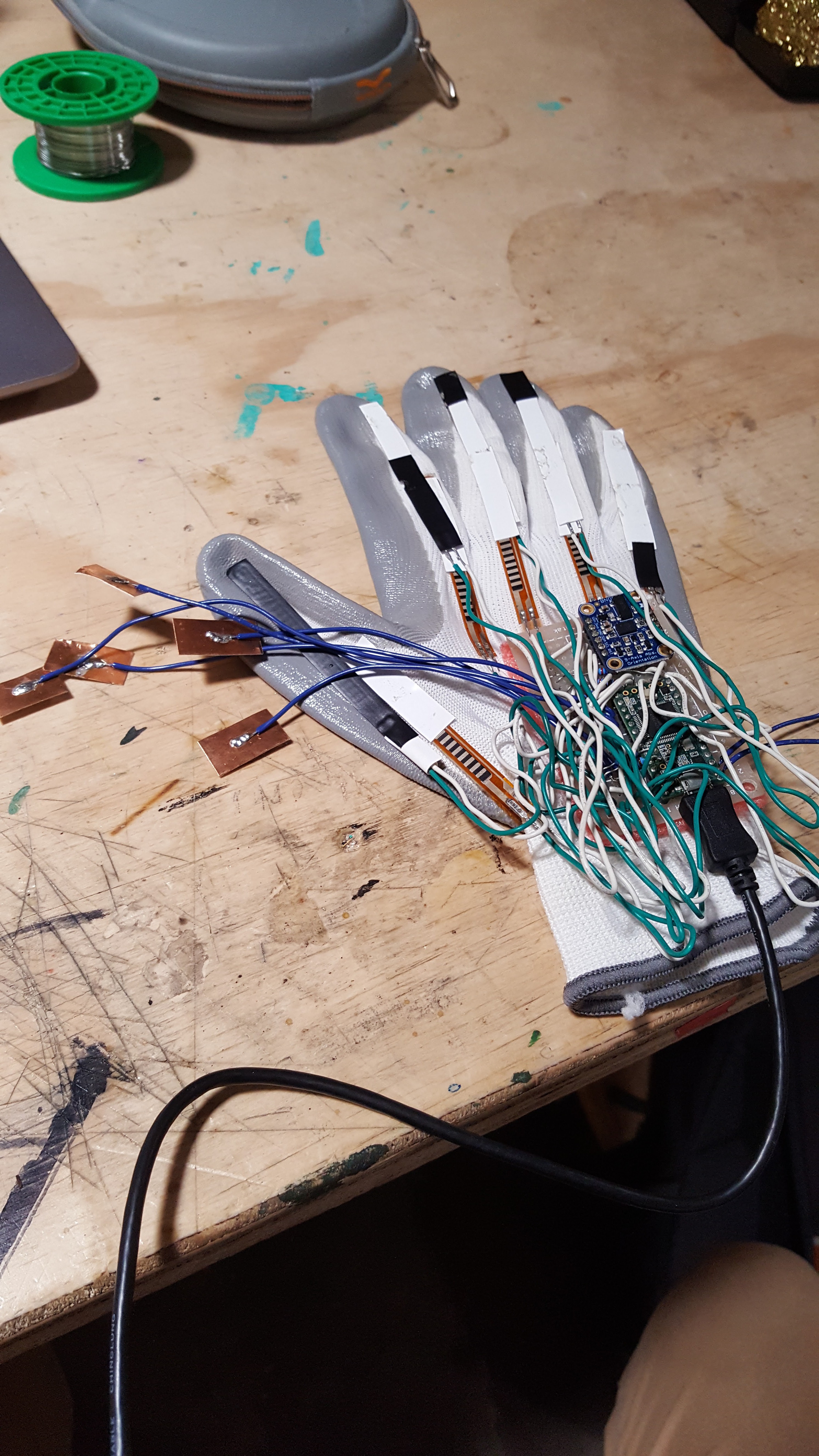

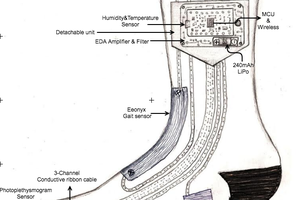

The hardware on Hands|On needs to sense and collect all the necessary data about the hand's movements while staying lightweight and portable. With these needs in mind, the hardware was designed as follows:

Sensors:

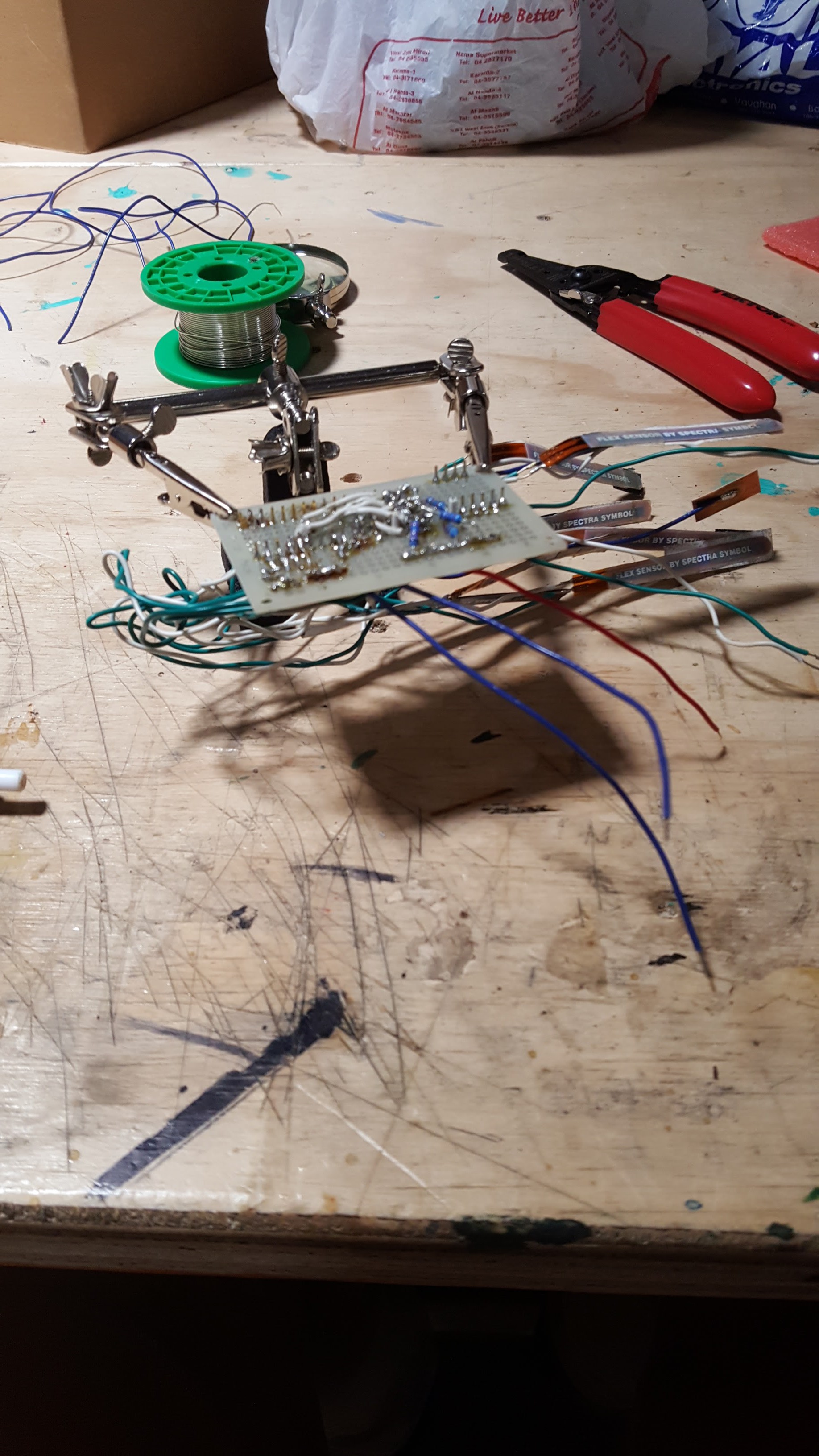

- Flex sensors: these rad sensors (from the days of the Nintendo PowerGlove) are thin strips of film-like material, whose resistance changes with bending. We use 9 of these to measure the bending of the important finger joints.

- 9-DOF IMU: measures the orientation of the hand in space.

- Capacitive touch sensors: a fancy name for pieces of copper tape stuck to a wire. When the copper tape is touched, its capacitance changes, which produces a measurable response at the microcontroller.
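To make the flex sensor reading described in the list above concrete, here is a minimal sketch of how a raw ADC count could be turned into a resistance and then into a bend estimate. It assumes a voltage divider (supply, then flex sensor, then ADC pin, then a fixed resistor to ground), a 10-bit ADC, and a 10 kΩ fixed resistor. None of these values are confirmed by the project, and the function names and calibration resistances are hypothetical.

```python
def flex_resistance_ohms(adc_count, r_fixed_ohms=10_000.0, adc_max=1023):
    """Estimate flex sensor resistance from a raw ADC count.

    Assumed wiring (not confirmed by the project): supply -> flex sensor ->
    ADC pin -> fixed resistor -> ground, so the ADC measures the voltage
    across the fixed resistor.
    """
    if not 0 < adc_count <= adc_max:
        raise ValueError("adc_count must be in the range (0, adc_max]")
    ratio = adc_count / adc_max  # V_out / V_supply
    # Divider: ratio = r_fixed / (r_flex + r_fixed), solved for r_flex.
    return r_fixed_ohms * (1.0 - ratio) / ratio


def bend_fraction(resistance_ohms, r_flat_ohms, r_bent_ohms):
    """Map a resistance linearly onto 0.0 (flat) .. 1.0 (fully bent).

    r_flat_ohms and r_bent_ohms are per-sensor calibration values that
    would have to be measured; the result is clamped to [0.0, 1.0].
    """
    span = r_bent_ohms - r_flat_ohms
    fraction = (resistance_ohms - r_flat_ohms) / span
    return min(1.0, max(0.0, fraction))


# Example with made-up calibration values for one finger joint.
resistance = flex_resistance_ohms(500, r_fixed_ohms=10_000.0, adc_max=1000)
bend = bend_fraction(resistance, r_flat_ohms=5_000.0, r_bent_ohms=15_000.0)
```

A linear mapping is the simplest choice here. Real flex sensors are not perfectly linear, so a per-sensor calibration table may work better in practice.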

Data handling:

- Teensy microcontroller: the Teensy is a very lightweight, small-form-factor microcontroller board with the power and capabilities of an Arduino. The best part about it is that it has 20 analog inputs! This is what we need to plug all our flex sensors into. Also, many of these inputs are easily configurable as 'touch' inputs, so it was really easy to set up our capacitive touch sensors in the code :)

- HC-06 Bluetooth module: no one wants to be connected by USB cable to a computer all day. Unless you're into that. Bluetooth sends the data to the PC wirelessly and makes the device portable.

- Rechargeable battery.

Software

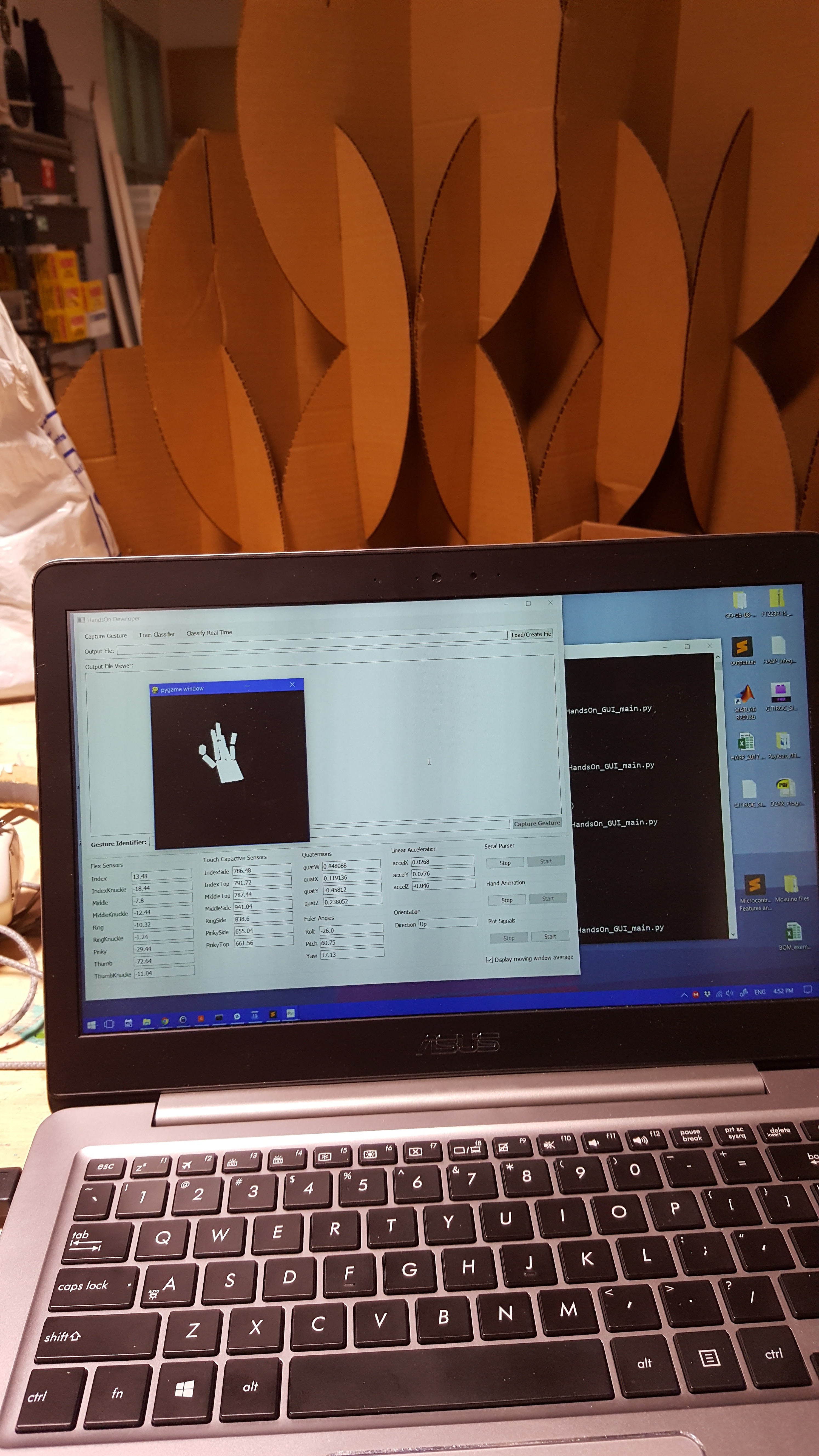

Hands|On's software should translate signs with high accuracy and allow the user to train the machine with their own hand gestures. It should also capture and process data fast enough to feel real-time, and have an intuitive interface. Below is the software design:

- Serial communication: the Arduino code on the Teensy does minimal processing on the sensor data and transmits it over a serial port (virtual COM port) to the computer. The data is picked up in Python using the pySerial library.

- Real-time 3D hand rendering: the parsed and processed sensor data is used to show a live 3D animation of the user's hand on the screen. This is done using PyOpenGL. The animated hand's orientation changes and fingers bend in sync with the user's hands!

- Machine learning: hand signs and gestures are captured by saving the sensor data to a file. Machine learning is performed using the SVM algorithm. The API used for machine learning is the fantastic Scikit-learn library.

- Text to speech: the letters output by the machine learning algorithm are sent to a text-to-speech engine (Pyttsx) so that you can hear what was signed!

- Qt interface: the GUI is designed using PyQt5 because Qt is slick and extremely cross-platform (https://doc.qt.io/qt-5/supported-platforms.html). Qt Creator was very intuitive to use and let us get our GUI up and running quickly.
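The serial communication step in the list above can be sketched roughly as follows. The wire format is an assumption, since the project does not document it: each line is taken to be ASCII, comma-separated, with 9 flex readings, then 3 orientation angles, then any number of 0/1 touch flags. `Frame`, `parse_frame`, and `read_frames` are hypothetical names, and the 9600 baud rate is only the usual HC-06 default, not a confirmed project setting.

```python
from dataclasses import dataclass
from typing import Iterator, List

NUM_FLEX = 9         # the glove uses 9 flex sensors
NUM_ORIENTATION = 3  # assumption: yaw, pitch, roll


@dataclass
class Frame:
    flex: List[int]
    orientation: List[float]
    touch: List[bool]


def parse_frame(line: str) -> Frame:
    """Parse one comma-separated line of sensor data (assumed format)."""
    fields = [field.strip() for field in line.strip().split(",")]
    if len(fields) < NUM_FLEX + NUM_ORIENTATION:
        raise ValueError(f"expected at least 12 fields, got {len(fields)}")
    split = NUM_FLEX + NUM_ORIENTATION
    flex = [int(value) for value in fields[:NUM_FLEX]]
    orientation = [float(value) for value in fields[NUM_FLEX:split]]
    touch = [value == "1" for value in fields[split:]]
    return Frame(flex, orientation, touch)


def read_frames(port: str, baudrate: int = 9600) -> Iterator[Frame]:
    """Yield frames from a serial port using pySerial."""
    import serial  # pySerial, imported here so parse_frame works without it

    with serial.Serial(port, baudrate, timeout=1) as connection:
        while True:
            raw = connection.readline()
            if not raw:
                continue  # read timed out with no data
            try:
                yield parse_frame(raw.decode("ascii", errors="ignore"))
            except ValueError:
                continue  # skip partial or malformed lines


# Parsing a made-up sample line; no hardware is needed for this part.
sample = "512,300,310,320,330,340,350,360,370,10.5,-3.0,90,1,0\n"
frame = parse_frame(sample)
```

On a real setup, something like `for frame in read_frames("COM5"):` would feed the 3D rendering and the classifier, where `"COM5"` stands in for whatever port the Bluetooth module is paired to.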

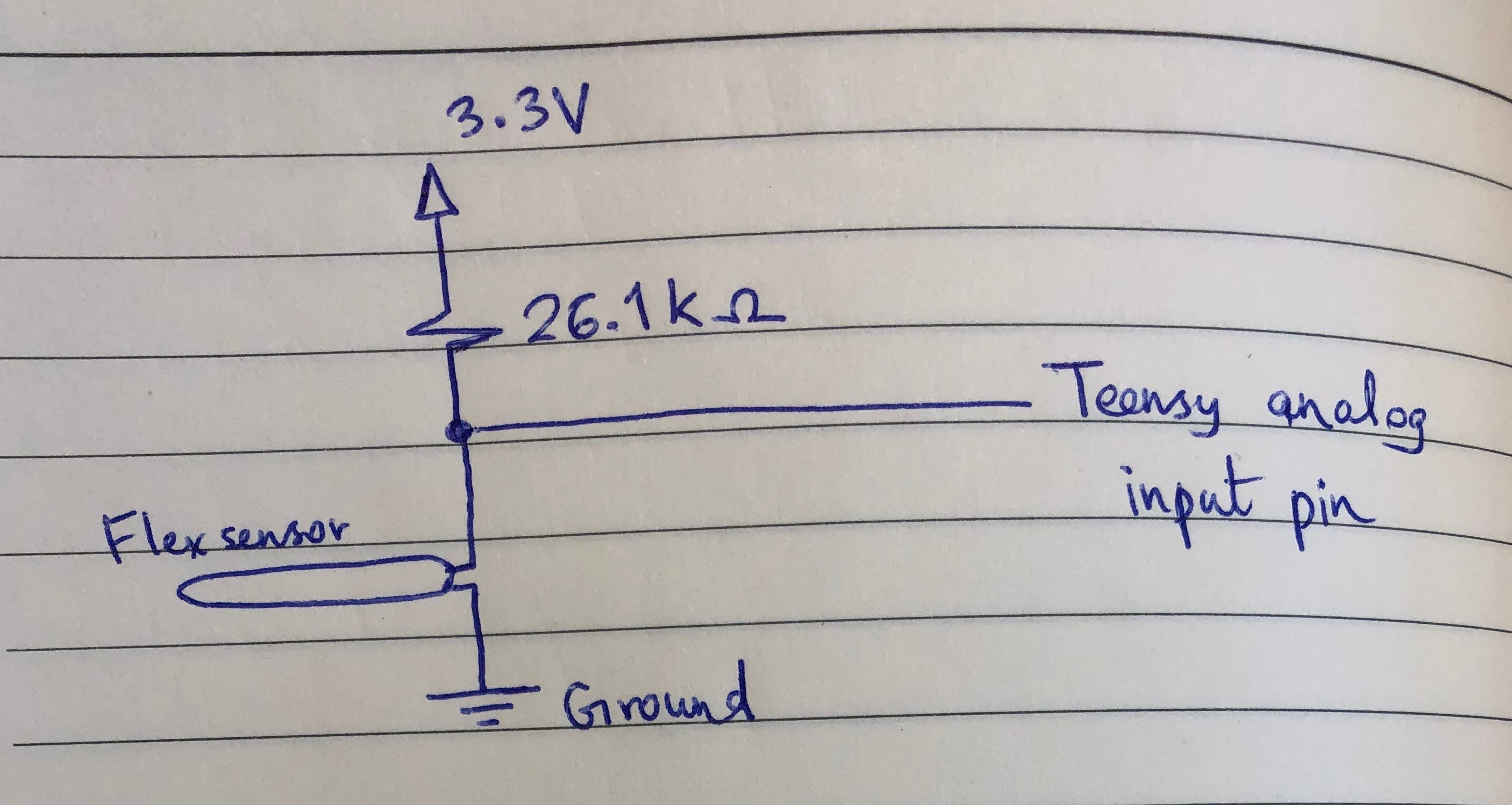
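As an illustration of the machine learning step described above, here is a minimal scikit-learn sketch that trains an SVM on synthetic data standing in for recorded flex readings. The data, the two sign labels, the feature scaling, and the RBF kernel are all assumptions made for the example; the project only states that it uses the SVM algorithm through scikit-learn.

```python
import numpy as np
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

rng = np.random.default_rng(0)


def fake_samples(center, n=20):
    """Synthetic stand-in for n recorded samples of 9 flex readings."""
    return center + rng.normal(scale=5.0, size=(n, 9))


# Hypothetical average readings for two signs (made-up numbers).
center_a = np.full(9, 300.0)
center_b = np.full(9, 700.0)

X = np.vstack([fake_samples(center_a), fake_samples(center_b)])
y = ["A"] * 20 + ["B"] * 20

# Scaling before the SVM is a common default, not a documented project choice.
model = make_pipeline(StandardScaler(), SVC(kernel="rbf"))
model.fit(X, y)

# Classify a new reading; the predicted letter would go on to text to speech.
prediction = model.predict([center_b])[0]
```

In the real application, `X` would come from the sensor data saved to file during training, with one row per captured sign and one label per row.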

Drawings

1. Flex Sensor Connection Schematic

Bhavesh Kakwani

Dr Andy Woods

TURFPTAx

Anthony I Stewart

Naveen Sridharan
