Imagine you could use nothing but your hands to create sounds and music… like the conductor of an invisible electronic orchestra.

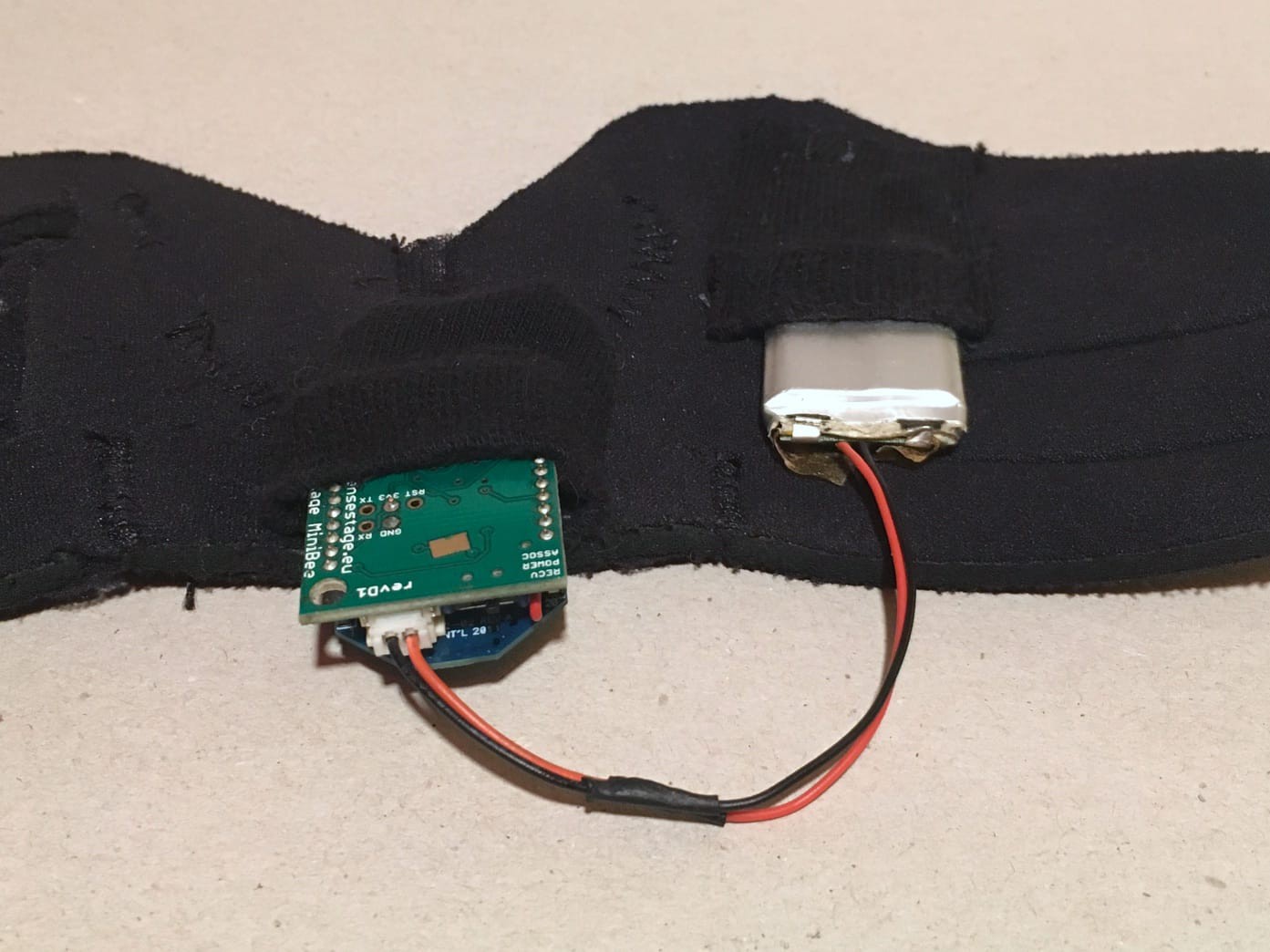

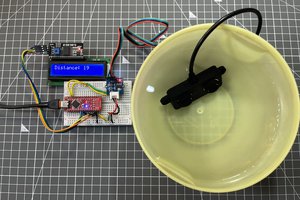

Using a small sensor worn on the wrist, GePS captures motion data and transmits it to a computer, where the movement is analysed and translated into sound. Hands are the ultimate interface: we constantly use them to perform complex gestures in our daily lives, which makes GePS intuitive and natural to use. GePS is an open-source project, so anyone with a little Maker experience can assemble the sensor by following our hardware tutorials, download the code and set up their very own unit for free!
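To give a feel for what "analysed and translated into sound" can mean, here is a minimal sketch of one possible mapping. It is not the GePS code. It assumes a static accelerometer reading in units of g, estimates hand tilt from the direction of gravity, and maps that angle onto a frequency range. The function names and the 110 to 880 Hz range are hypothetical choices for illustration.

```python
import math


def tilt_degrees(ax: float, ay: float, az: float) -> tuple[float, float]:
    """Estimate (pitch, roll) in degrees from an accelerometer reading in g.

    Only meaningful while the hand is roughly still, so that gravity
    dominates the reading.
    """
    pitch = math.degrees(math.atan2(-ax, math.hypot(ay, az)))
    roll = math.degrees(math.atan2(ay, az))
    return pitch, roll


def angle_to_frequency(angle_deg: float,
                       low_hz: float = 110.0,
                       high_hz: float = 880.0) -> float:
    """Map an angle in [-90, 90] degrees onto [low_hz, high_hz].

    The mapping is exponential, so equal changes in angle produce equal
    musical intervals (here three octaves across the full range).
    """
    clamped = max(-90.0, min(90.0, angle_deg))
    t = (clamped + 90.0) / 180.0  # normalise to 0..1
    return low_hz * (high_hz / low_hz) ** t


if __name__ == "__main__":
    pitch, _ = tilt_degrees(0.0, 0.0, 1.0)  # hand held flat
    print(round(angle_to_frequency(pitch), 2))
```

An exponential rather than linear mapping is used because pitch perception is roughly logarithmic in frequency; a real instrument would likely add smoothing and combine several sensor axes.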

Our goal is to make GePS a standalone, well-documented, open-source electronic instrument that is accessible to everyone.

The work on gestural interfaces on which GePS is based has been published in peer-reviewed papers and presented at conferences, fairs and concerts across the globe, including HopeX (New York City, 2015), the Close Encounters Festival (Tbilisi, Georgia, 2016), the International Conference on Music and Sonic Art (MuSA 2015, Karlsruhe, Germany) and the Audio Mostly Conference on Interaction with Sound (2015, Thessaloniki, Greece). It was a semi-finalist in the 2015 Guthman Musical Instrument Competition at Georgia Tech.

GePS is developed by the Swiss artistic duo Cedric Spindler and Frederic Robinson.

Technology to enable Creative Freedom

The accuracy of the sensors and the high transmission speed allow GePS to capture tiny gestures such as finger flicks as well as big movements and strong impacts. This gives the performer a wide range of expression and fine-grained control over the sound, resulting in great creative freedom.
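As an illustration of how one sensor can cover both tiny flicks and strong impacts, the sketch below classifies a short window of accelerometer samples by its peak "dynamic" magnitude (total acceleration minus roughly 1 g of gravity) and scales that peak to a MIDI-style velocity. This is a hypothetical example, not the GePS algorithm: the thresholds, the 4 g ceiling and the function names are assumptions that would need tuning on real hardware. It also hints at why transmission speed matters: a flick that lasts only a few samples can be missed entirely if readings arrive too slowly.

```python
import math
from typing import Iterable, Tuple

# Hypothetical thresholds in g; real values depend on sensor and performer.
FLICK_THRESHOLD_G = 0.3
IMPACT_THRESHOLD_G = 2.0

Sample = Tuple[float, float, float]  # (ax, ay, az) in g


def dynamic_magnitude(ax: float, ay: float, az: float) -> float:
    """Approximate non-gravity acceleration: |total magnitude - 1 g|."""
    return abs(math.sqrt(ax * ax + ay * ay + az * az) - 1.0)


def peak_dynamic(samples: Iterable[Sample]) -> float:
    """Largest dynamic magnitude in a window (0.0 for an empty window)."""
    return max((dynamic_magnitude(*s) for s in samples), default=0.0)


def classify(samples: Iterable[Sample]) -> str:
    """Label a window as 'rest', 'flick' or 'impact' by its peak."""
    peak = peak_dynamic(samples)
    if peak >= IMPACT_THRESHOLD_G:
        return "impact"
    if peak >= FLICK_THRESHOLD_G:
        return "flick"
    return "rest"


def peak_to_velocity(peak_g: float, max_g: float = 4.0) -> int:
    """Scale a peak (in g) onto the MIDI velocity range 1..127."""
    t = min(max(peak_g / max_g, 0.0), 1.0)
    return 1 + round(t * 126)
```

Subtracting 1 g from the magnitude is only an approximation, since it ignores the orientation of gravity relative to the moving wrist; a gyroscope-assisted gravity estimate would be more robust.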

Technical Details

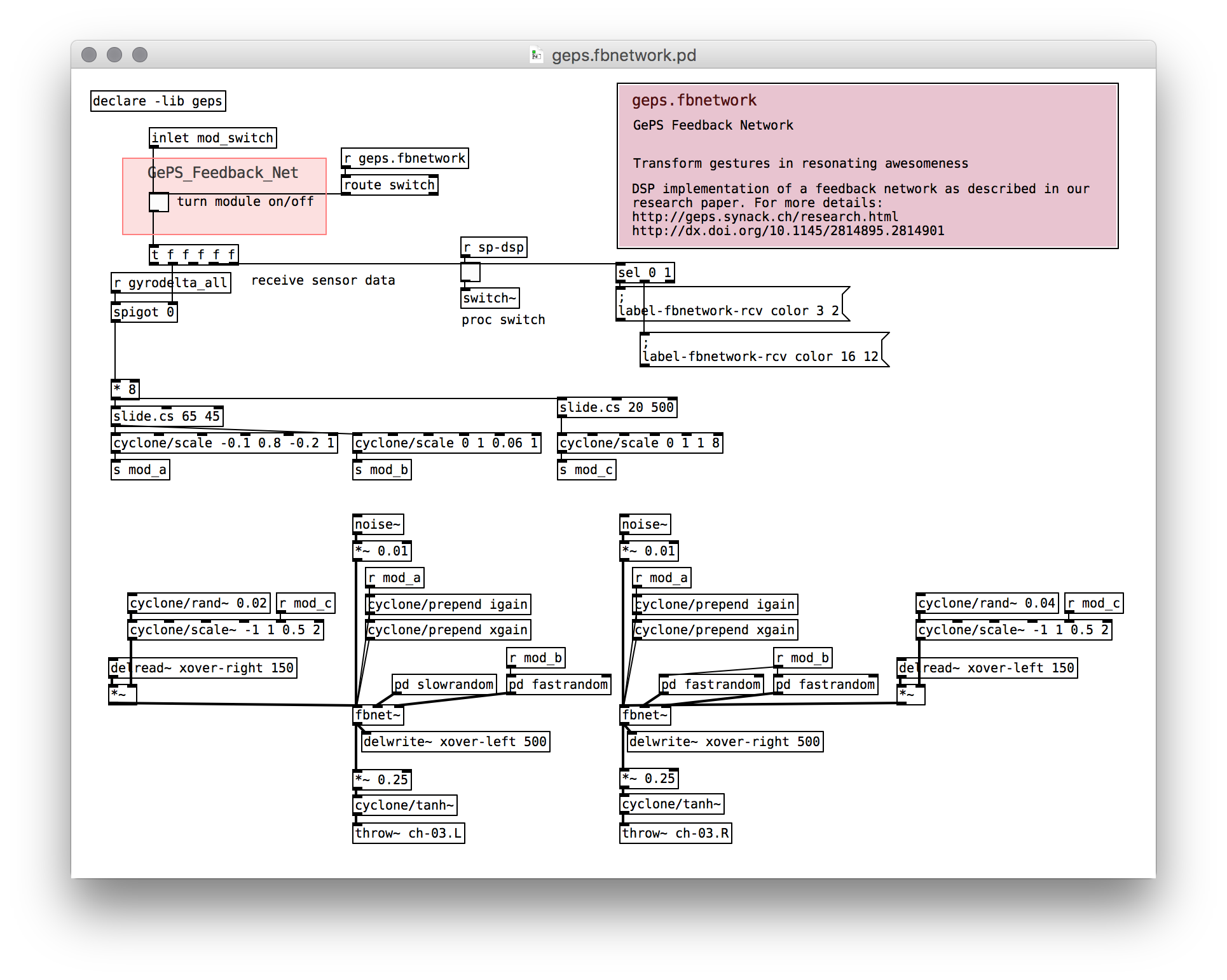

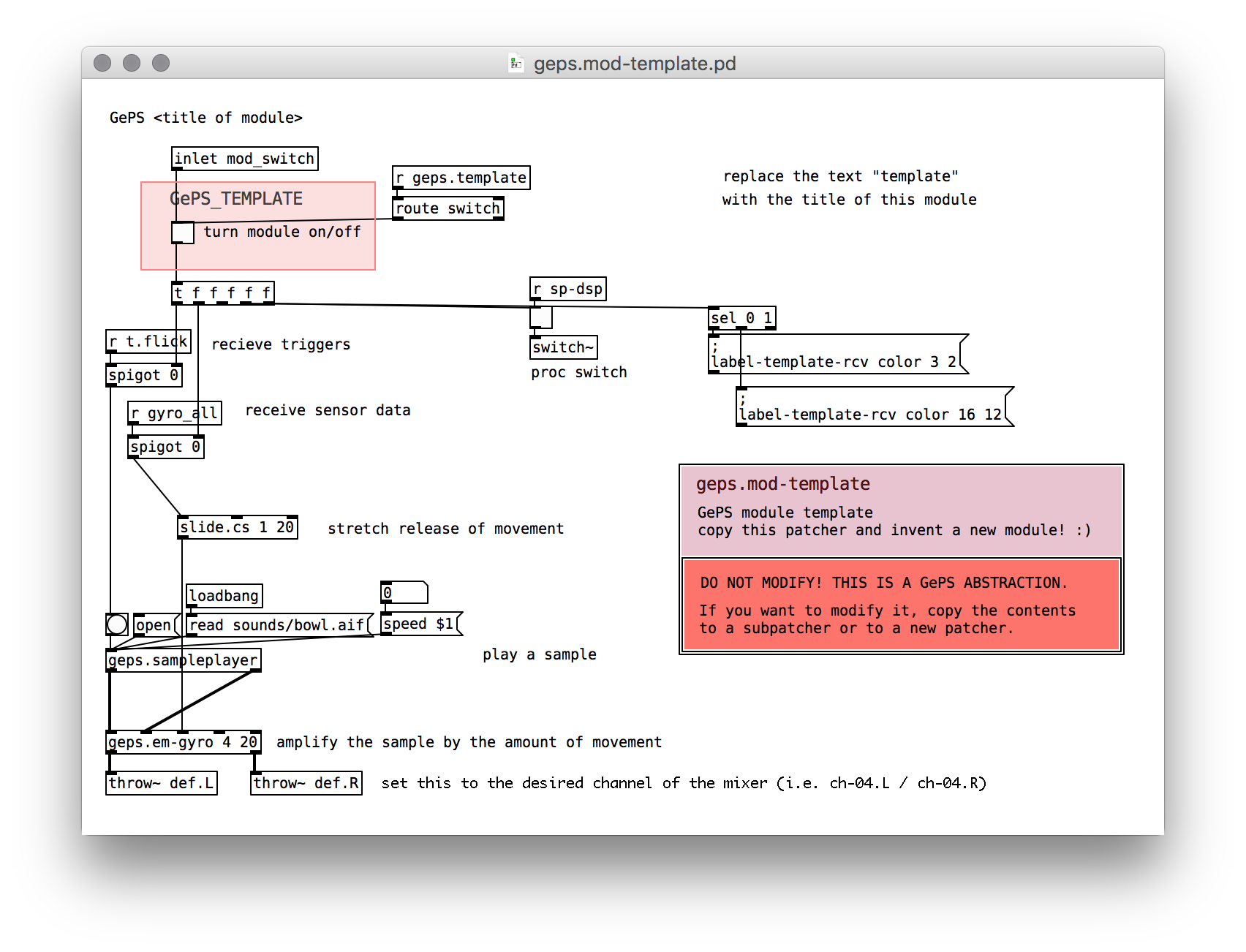

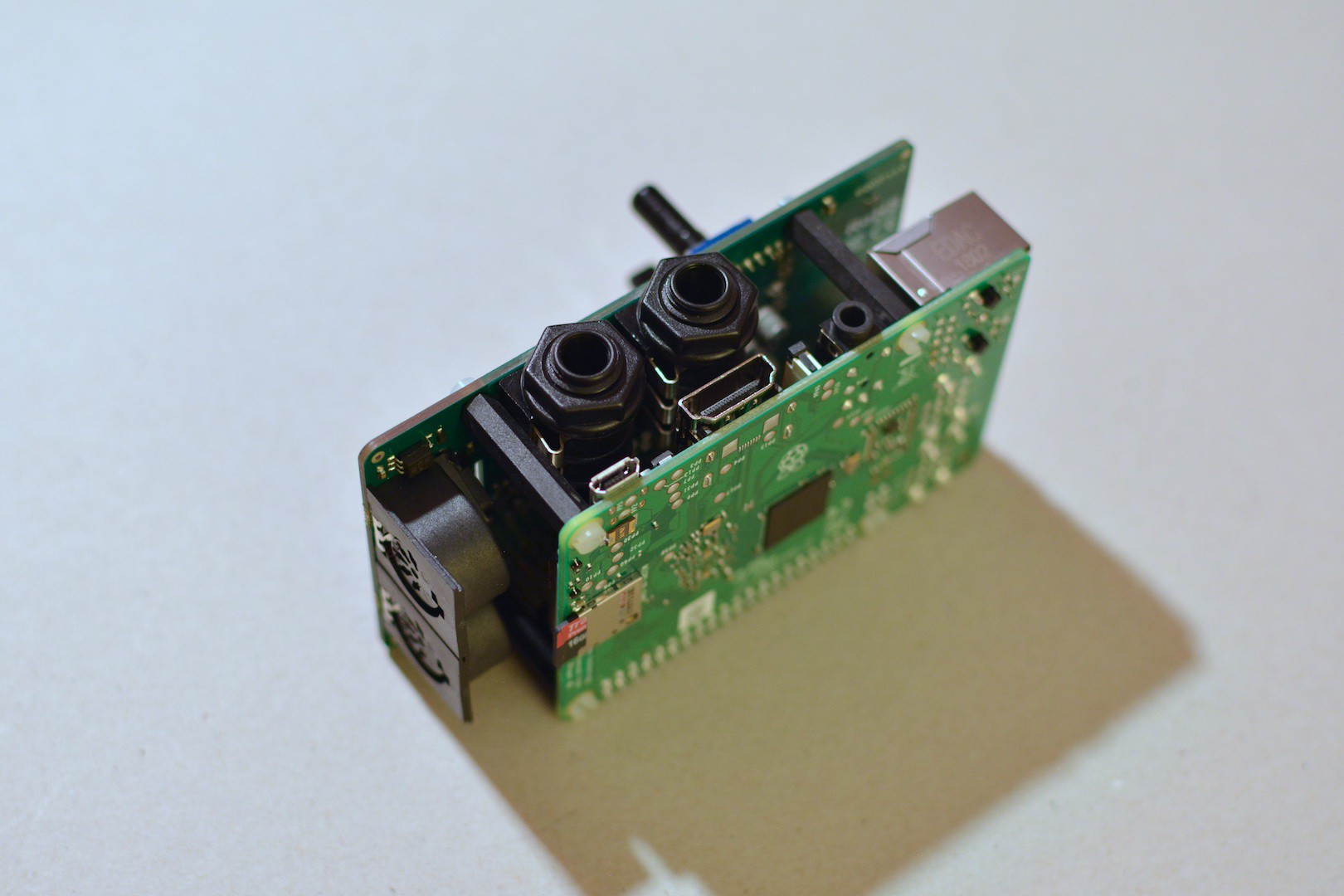

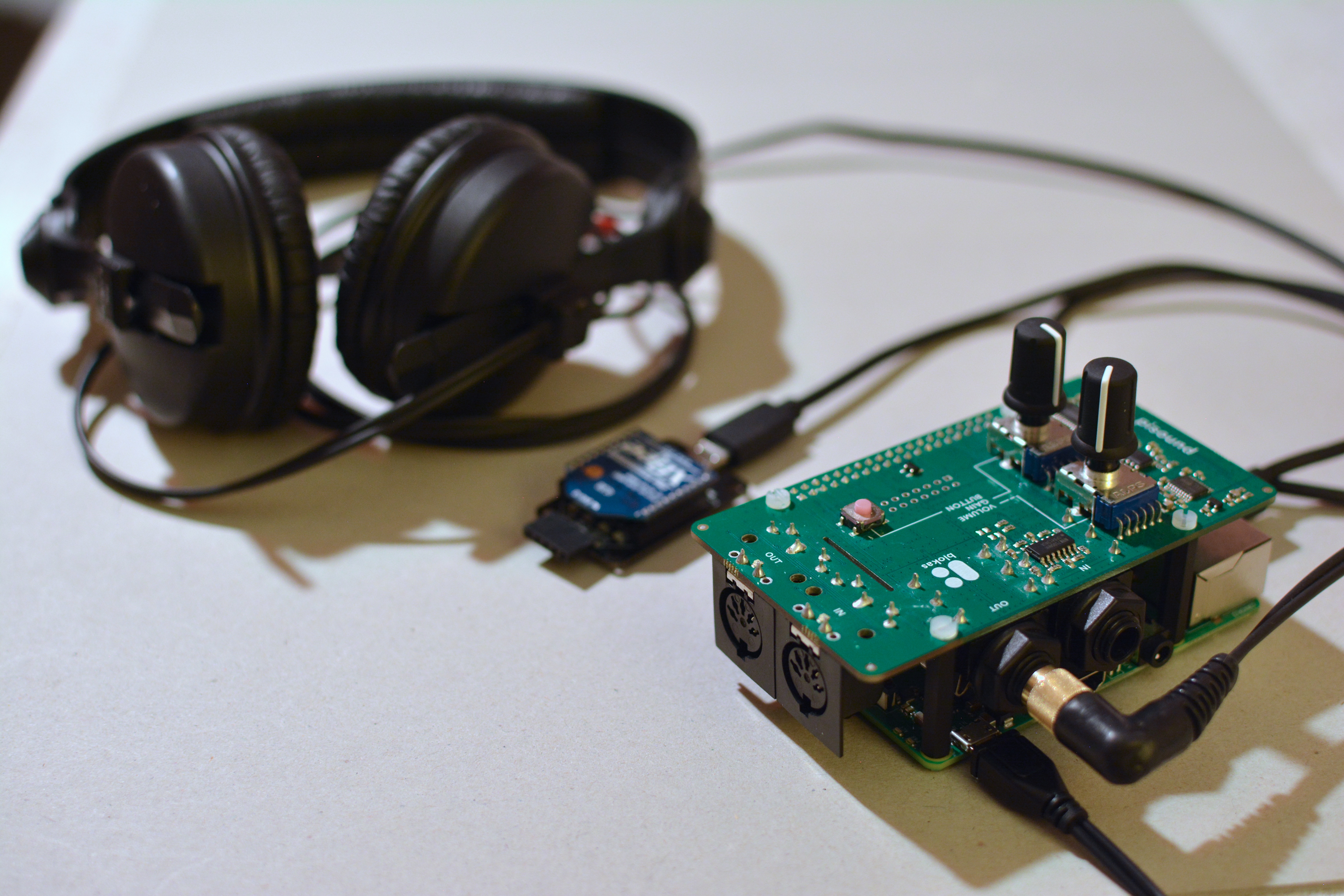

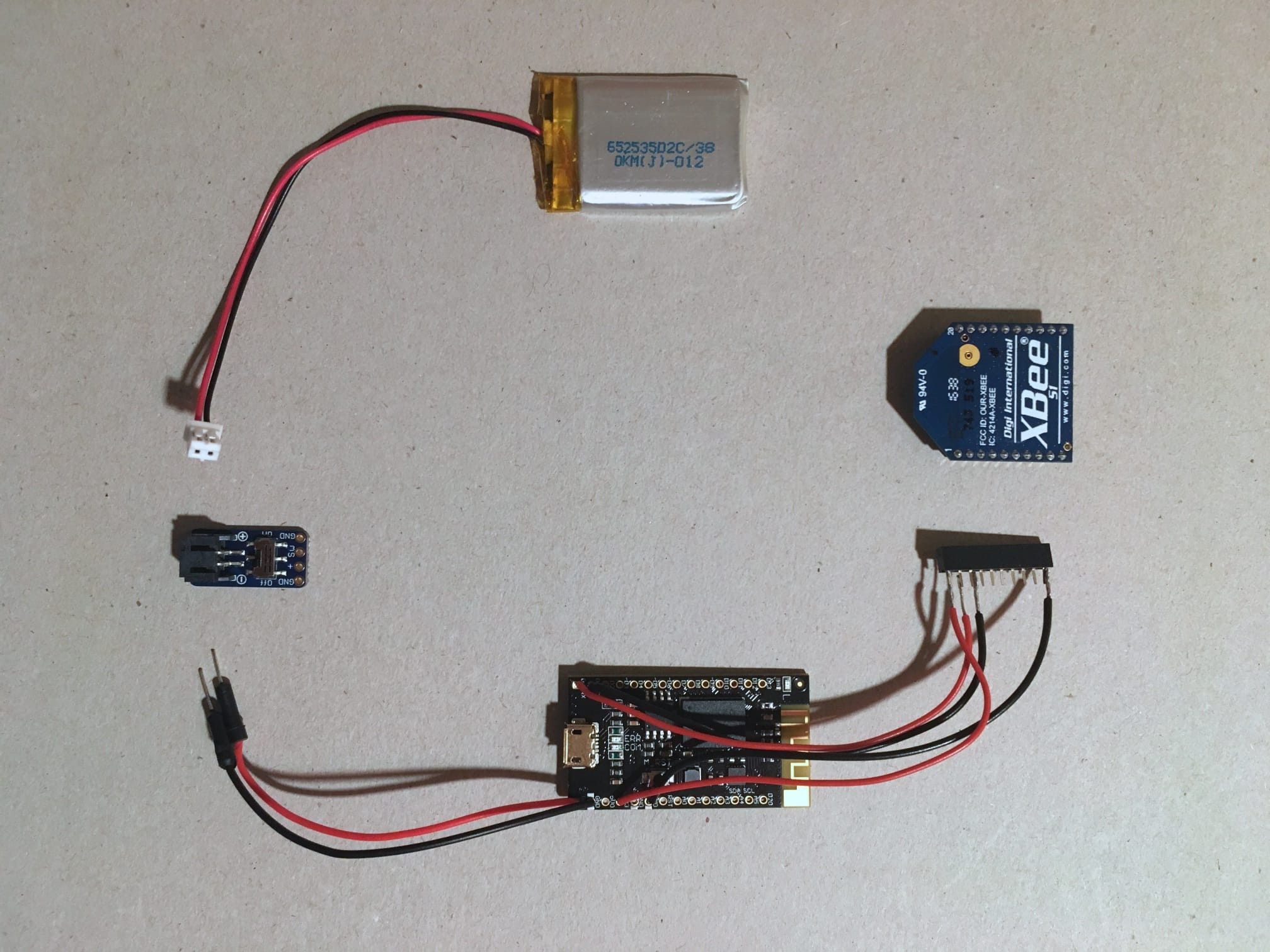

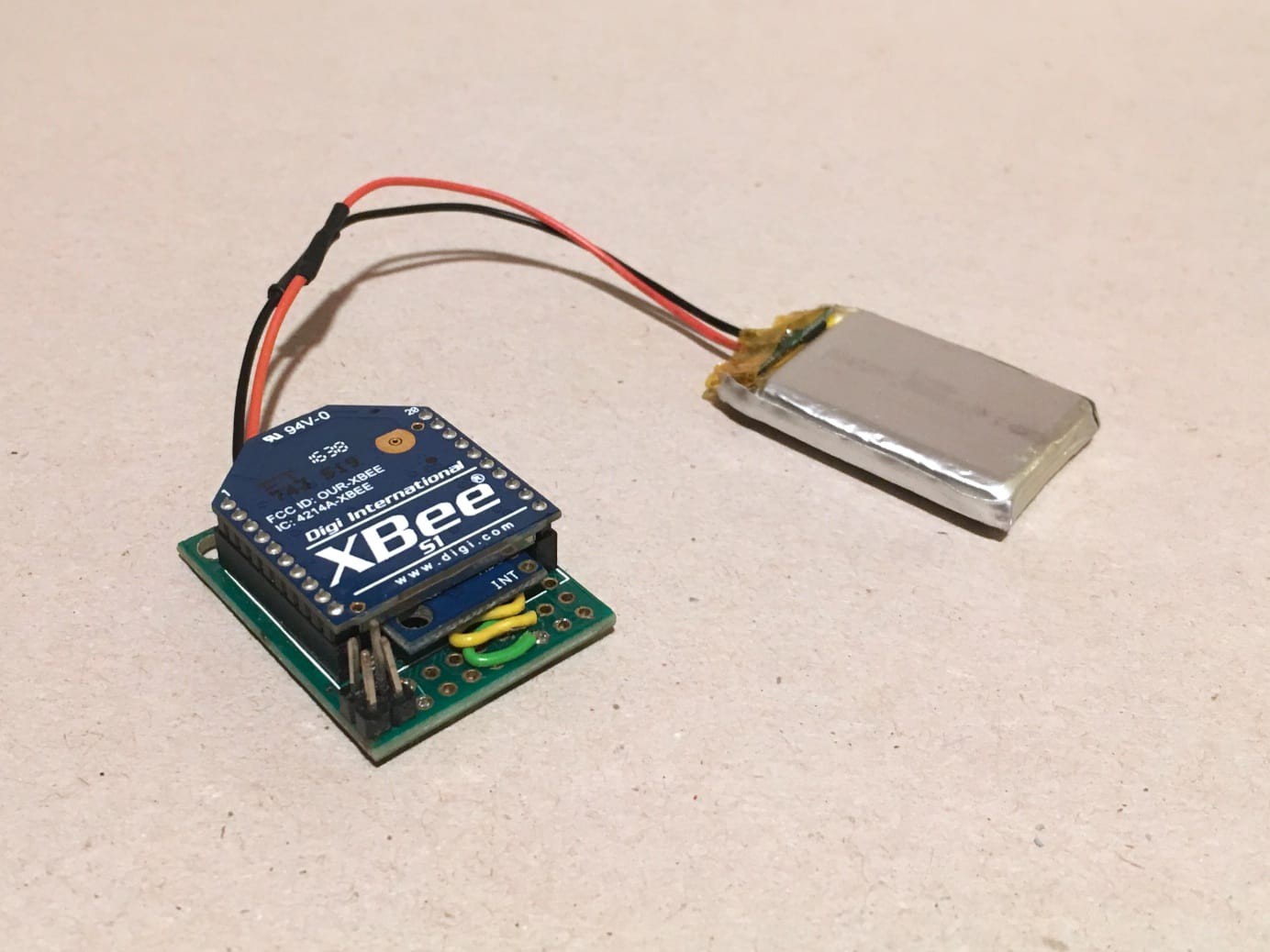

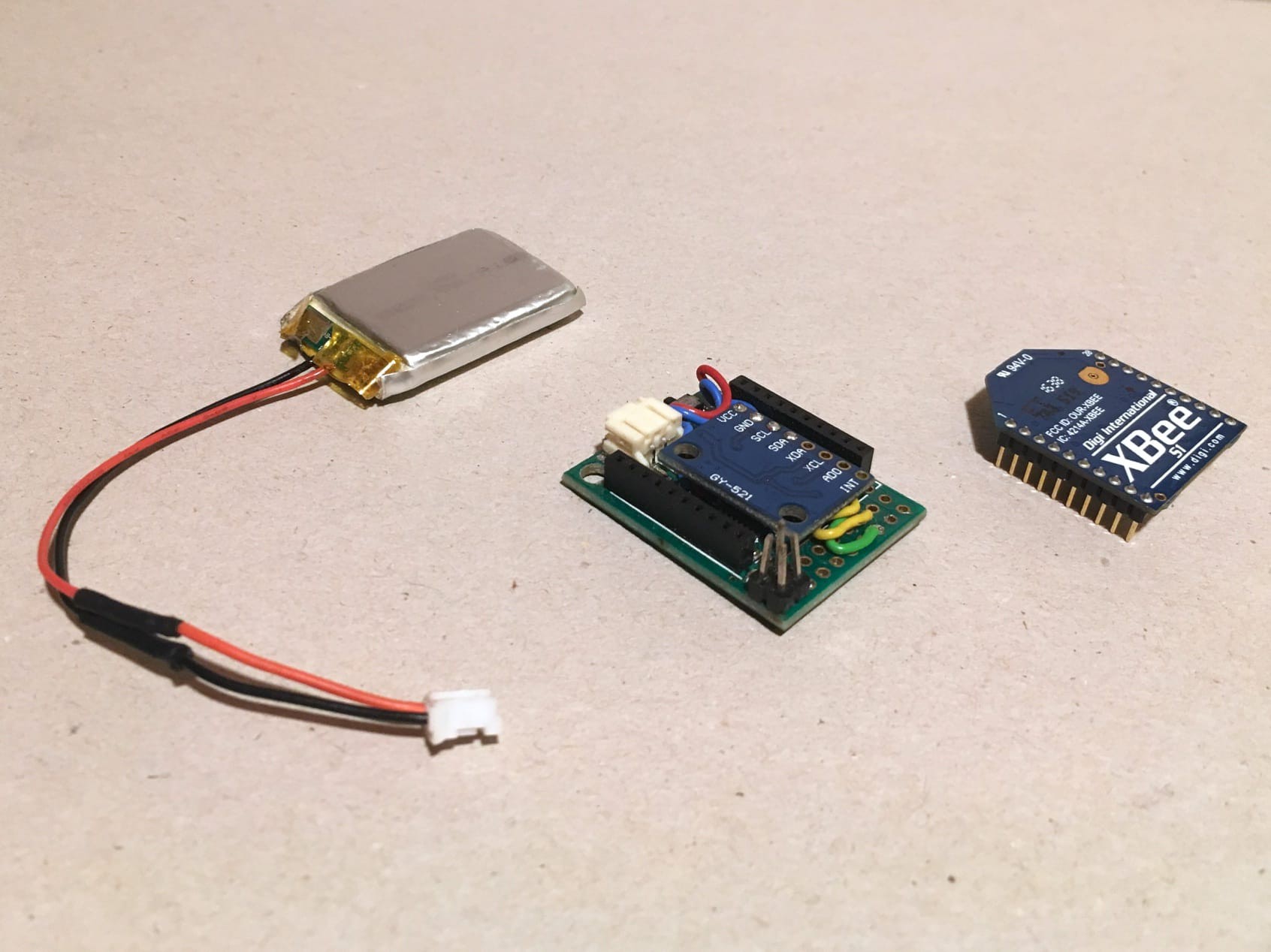

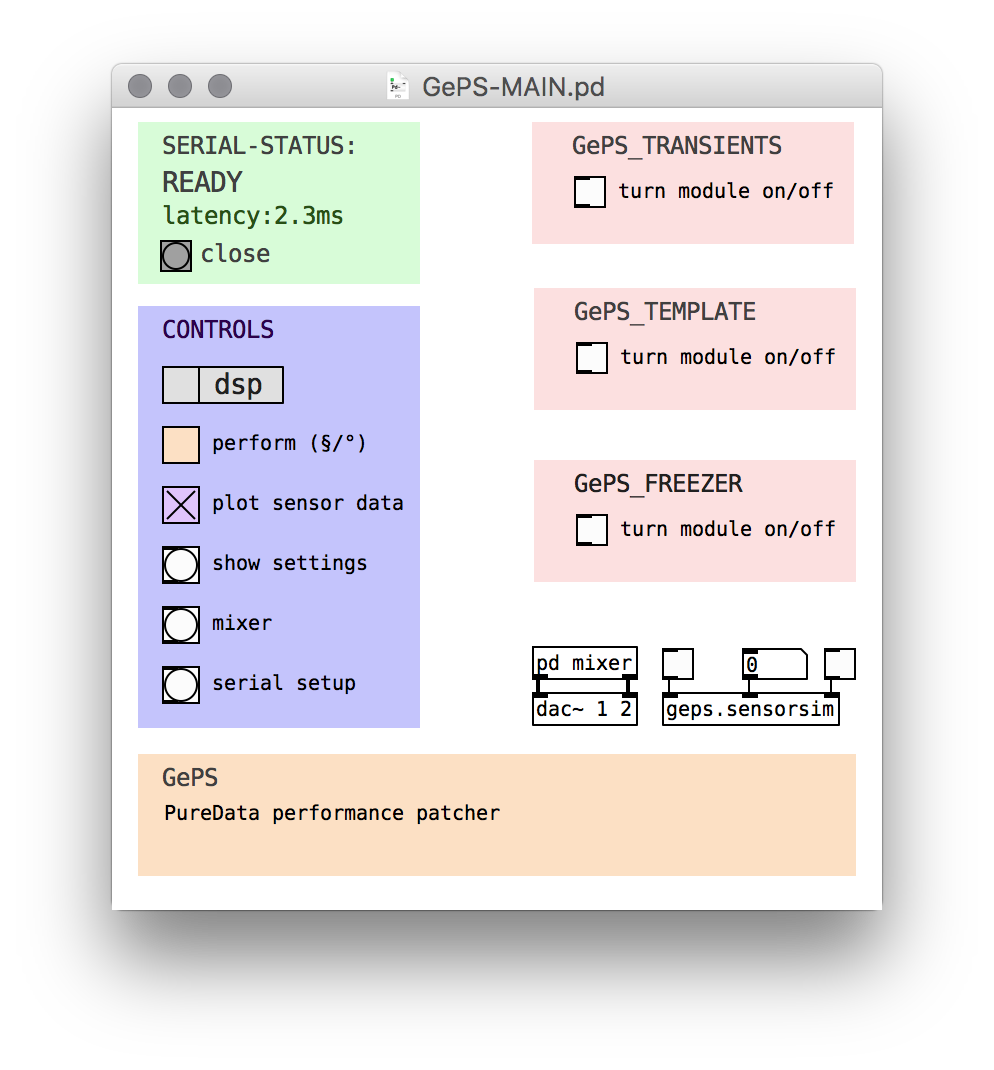

The performance system uses a 3-axis gyroscope and a 3-axis accelerometer as sensors, a tiny Arduino to read the data, and a pair of XBee modules to transmit the data wirelessly to the software (Pure Data) running on a Raspberry Pi with a Pisound audio interface. The software consists of several custom-built transformation algorithms and sound modules, designed and programmed according to the performer's creative ideas.
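The data path between the XBee link and Pure Data can be pictured with the following sketch. It is an assumption-laden illustration, not the GePS protocol: the 13-byte packet layout (start byte `0xAA` plus six little-endian 16-bit values), the scale factors and the function names are all hypothetical, and in the real system Pure Data may well read the serial data directly. The sketch decodes one packet into gyroscope (deg/s) and accelerometer (g) values and re-encodes them as FUDI messages, the plain-text format Pure Data's `[netreceive]` object accepts (space-separated atoms ending in `;`). Reading the serial port itself (for example with pyserial) is left out.

```python
import socket
import struct

# Hypothetical packet layout: start byte, then gyro x/y/z and accel x/y/z
# as little-endian signed 16-bit raw sensor counts.
START_BYTE = 0xAA
PAYLOAD = struct.Struct("<6h")
PACKET_SIZE = 1 + PAYLOAD.size  # 13 bytes

# Hypothetical scale factors from raw counts to physical units.
GYRO_COUNTS_PER_DPS = 16.4
ACCEL_COUNTS_PER_G = 16384.0


def parse_packet(packet: bytes):
    """Decode one packet into ((gx, gy, gz) in deg/s, (ax, ay, az) in g)."""
    if len(packet) != PACKET_SIZE or packet[0] != START_BYTE:
        raise ValueError("malformed packet")
    gx, gy, gz, ax, ay, az = PAYLOAD.unpack(packet[1:])
    gyro = tuple(v / GYRO_COUNTS_PER_DPS for v in (gx, gy, gz))
    accel = tuple(v / ACCEL_COUNTS_PER_G for v in (ax, ay, az))
    return gyro, accel


def to_fudi(selector: str, values) -> bytes:
    """Encode a Pure Data FUDI message: atoms separated by spaces, ending in ';'."""
    atoms = " ".join([selector] + [f"{v:.4f}" for v in values])
    return (atoms + ";\n").encode("ascii")


def forward(packet: bytes, sock: socket.socket, addr) -> None:
    """Parse a packet and send 'gyro ...;' and 'accel ...;' over UDP."""
    gyro, accel = parse_packet(packet)
    sock.sendto(to_fudi("gyro", gyro), addr)
    sock.sendto(to_fudi("accel", accel), addr)
```

On the Pure Data side, a UDP `[netreceive]` object feeding `[route gyro accel]` would then split the two streams for further processing; the port number is up to the patch.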

Zed Cat

Danie Conradie

Mario Frei

ElectroBoy