I took a bit of a break once the project report was submitted, but I was itching to carry on testing some ideas I'd had which were a little beyond the scope of a one-semester project. This weekend, I finally got back in the workshop and built a better sensor ring. I had previously tried to build a ring with 16 sensors, but had used the wrong ones and made some other mistakes.

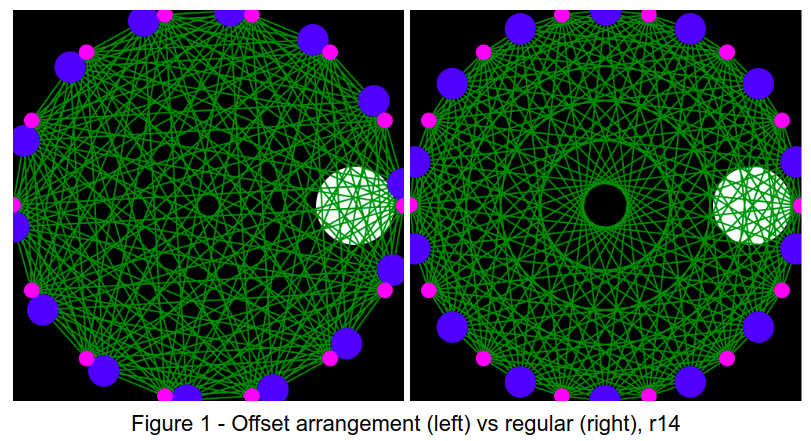

I didn’t have quite enough of the more sensitive phototransistors left to make another ring of 16, so I decided to try something different: a ring with 14 LEDs and 14 phototransistors (PTs) in a non-symmetric arrangement. The arrangement is shown on the left - note the difference between this and the one on the right (regular spacing). That hole in the centre has been bugging me.

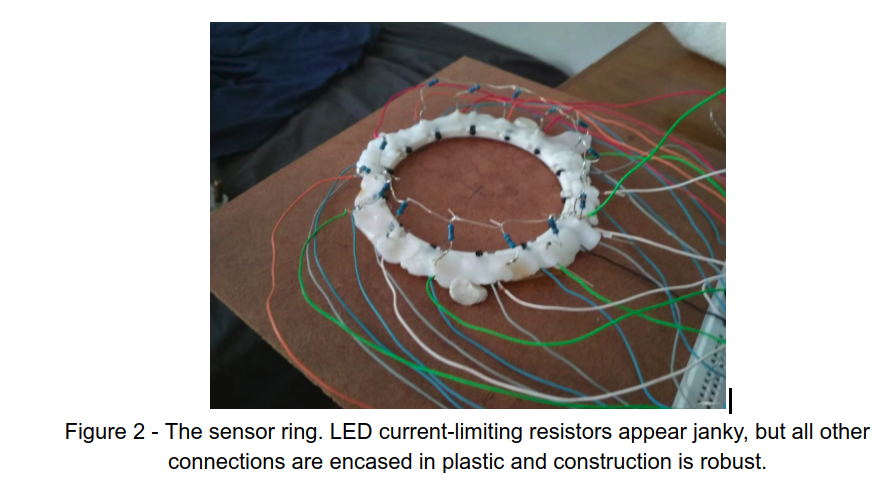

The ring is built better than the previous ones. The LEDs are connected to 5V (not the 3.3V of the GPIOs) and pulled low when activated. This means the signal received by PTs on the other side of the circle now gives ADC readings of roughly 200 to 900 (on a 0-1023 scale), a much better range than before. The beam spread is still an issue - only about 5-7 PTs opposite the LED are illuminated. But this is still enough to do some image reconstruction!
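As a rough sanity check on the beam spread, the geometry can be sketched in a few lines of Python. This is only an illustration, not the code running on the ring: it assumes each LED points at the centre of the ring, that the PTs sit midway between evenly spaced LEDs (the regular layout, not the non-symmetric one), and that the beam has a hypothetical half-angle of 35°, which is a guess rather than a measured value.

```python
def off_axis_angle_deg(led_deg, pt_deg):
    """Angle between an LED's axis (aimed at the ring centre) and the ray to a PT.

    Both arguments are positions around the ring, in degrees.
    """
    # Central angle between LED and PT, folded into the range 0..180.
    delta = abs((pt_deg - led_deg + 180.0) % 360.0 - 180.0)
    # For two points on a circle, the chord between them makes an angle of
    # (180 - delta) / 2 with the diameter through the first point.
    return (180.0 - delta) / 2.0


def illuminated_pts(led_deg, pt_angles_deg, half_beam_deg):
    """Indices of the PTs that fall inside the LED's beam cone."""
    return [
        i
        for i, pt_deg in enumerate(pt_angles_deg)
        if off_axis_angle_deg(led_deg, pt_deg) <= half_beam_deg
    ]


# Hypothetical layout: 14 PTs placed midway between 14 evenly spaced LEDs.
N = 14
step = 360.0 / N
pt_angles = [(i + 0.5) * step for i in range(N)]

# Assumed beam half-angle of 35 degrees (not a measured value).
lit = illuminated_pts(0.0, pt_angles, half_beam_deg=35.0)
print(len(lit), lit)
```

With these made-up numbers, six PTs fall inside the beam, which is in the same ballpark as the 5-7 seen on the real ring.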

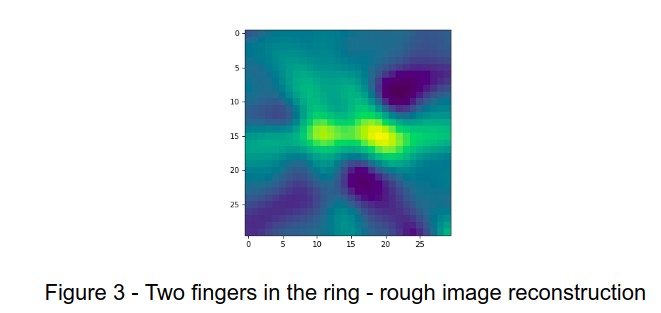

I am busy with the maths (see next bit) but by interpolating to approximate a fan-flat geometry I can already get some images from this thing. Figure 3 shows one example - the two separate blobs are two of my fingers, inserted into the ring. Reconstructions get worse away from the centre because less light reaches the PTs there (a consequence of the narrow beam angle), so the signal is smaller. But there is a nice central area where it works well.

One cool aspect: this ring can capture scans at >100 Hz, although my reconstruction code can’t keep up with that. I can store the data and reconstruct later to get 100 fps ‘video’ of objects moving around. For example, I stuck some putty onto a bolt and chucked it into a drill. Here it is as a gif:

I've only just started playing with this, but should have a bit more time to try things out in the coming week (here's hoping).

The maths

At the moment, I use interpolation to get a new set of readings that match what would be seen by a fan beam geometry with a flat line of sensors. This means I can use existing CT reconstruction algorithms like Filtered Backprojection (FBP), but by doing this I sacrifice some detail. I'm hoping to get some time to work out the maths properly and do a better job of reconstructing the image. This will also let me make some fairer comparisons between the two geometries I'm testing (offset and geometric). It will take time, but I'll get there!
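To make the interpolation step a bit more concrete, here is a minimal sketch of one way to resample a single LED's ring readings onto a flat detector. It is not the code used for the images above. It assumes the LED points at the ring centre, uses plain linear interpolation over the off-axis angle, and places a hypothetical flat detector at unit distance from the LED, perpendicular to its axis. The function names, the layout and the readings are all made up for illustration.

```python
import math


def signed_off_axis_deg(led_deg, pt_deg):
    """Signed angle between the LED axis and the ray to a PT (0 = directly opposite)."""
    delta = (pt_deg - led_deg) % 360.0  # central angle, 0..360
    return (delta - 180.0) / 2.0


def lerp_samples(xs, ys, x):
    """Linear interpolation at x; xs must be ascending. Values are clamped outside the range."""
    if x <= xs[0]:
        return ys[0]
    if x >= xs[-1]:
        return ys[-1]
    for i in range(1, len(xs)):
        if x <= xs[i]:
            t = (x - xs[i - 1]) / (xs[i] - xs[i - 1])
            return ys[i - 1] + t * (ys[i] - ys[i - 1])


def ring_to_flat(led_deg, pt_angles_deg, readings, n_flat, half_fan_deg):
    """Resample one LED's ring readings onto n_flat equally spaced flat-detector cells."""
    # Sort the ring readings by the off-axis angle of the ray that produced them.
    pairs = sorted(
        (signed_off_axis_deg(led_deg, a), r)
        for a, r in zip(pt_angles_deg, readings)
    )
    alphas = [p[0] for p in pairs]
    values = [p[1] for p in pairs]

    # Flat detector at unit distance: a cell at position s sees the ray at atan(s).
    s_max = math.tan(math.radians(half_fan_deg))
    flat = []
    for j in range(n_flat):
        s = -s_max + 2.0 * s_max * j / (n_flat - 1)
        alpha = math.degrees(math.atan(s))
        flat.append(lerp_samples(alphas, values, alpha))
    return flat


# Synthetic example: 14 evenly spaced PTs and readings that ramp linearly with angle.
step = 360.0 / 14
pt_angles = [(i + 0.5) * step for i in range(14)]
readings = [500.0 + 2.0 * signed_off_axis_deg(0.0, a) for a in pt_angles]
flat = ring_to_flat(0.0, pt_angles, readings, n_flat=5, half_fan_deg=30.0)
print(flat)
```

Repeating this for every LED gives one flat-detector projection per source position, which is the kind of input an off-the-shelf fan-beam FBP routine expects. The linear interpolation between sparse PTs is also where some of the detail mentioned above gets lost.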

For now, enjoy das blinkenlights

johnowhitaker
