It seems the raspberry pi 5 is making tensorflow-lite on the CPU a viable alternative to tensorrt on the GPU. They're claiming 3x faster inference speeds than the rasp 4. FP16 on the jetson nano was generally 3x faster than 8 bits on the rasp 4, so 8 bit int on the rasp 5 would be equivalent to FP16 on the jetson nano.
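The equivalence comes from chaining the 2 speedups. Writing $f$ for inference rate & taking both 3x figures at face value (the rasp 5 figure is a claim, not a measurement made here):

$$
\begin{aligned}
f_{\text{rasp5,int8}} &\approx 3\,f_{\text{rasp4,int8}} \\
f_{\text{nano,FP16}} &\approx 3\,f_{\text{rasp4,int8}} \\
\Rightarrow\quad f_{\text{rasp5,int8}} &\approx f_{\text{nano,FP16}}
\end{aligned}
$$

Any error in either ratio carries straight into the comparison.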

As for porting body_25 to 8 bits, the rasp 5 would probably run it slightly slower than the jetson nano, which runs it at 6.5fps with a 256x144 network. The rasp 5 would also have to use the CPU for a lot of post processing that the jetson does on the GPU, so it would not be worth porting.

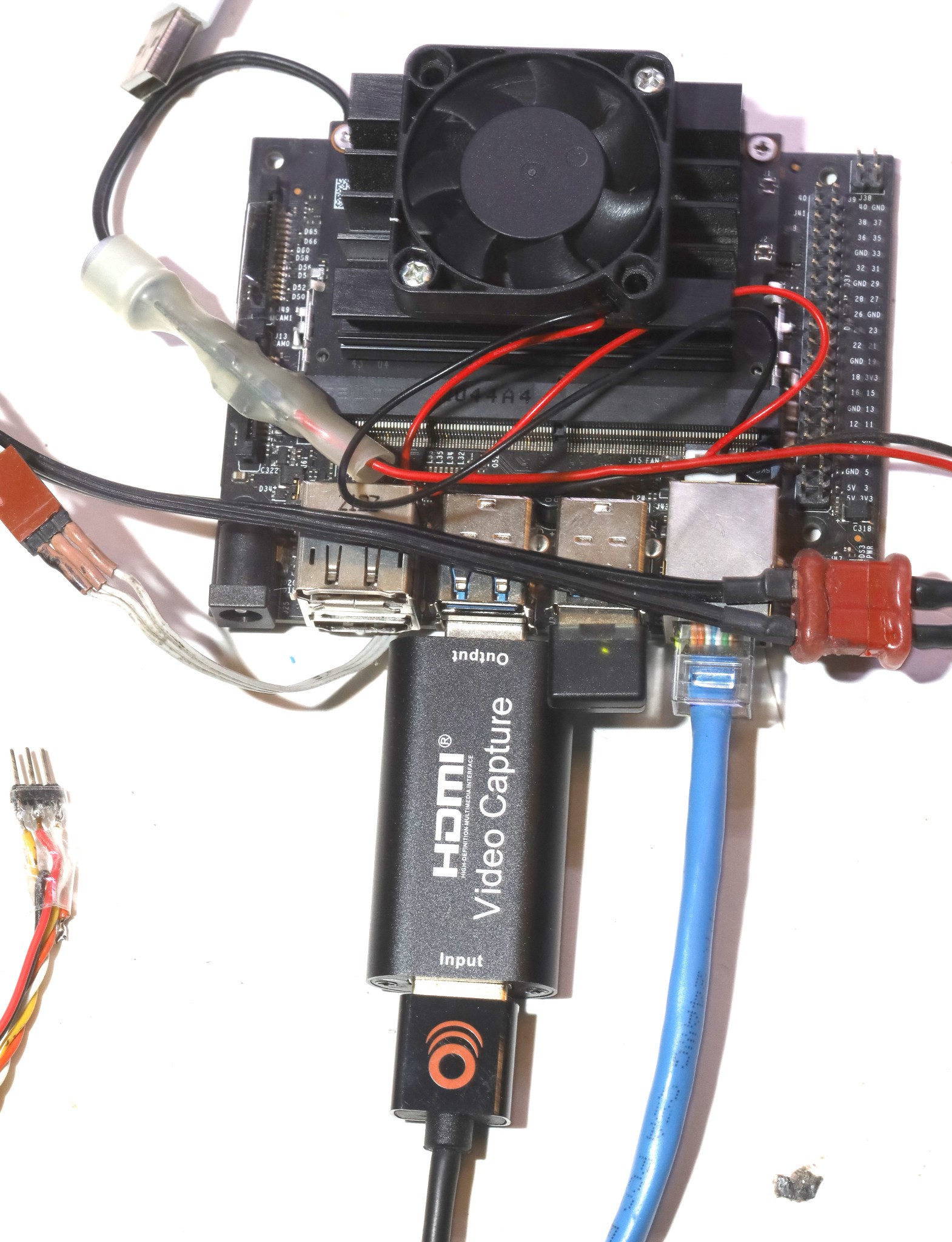

The lion kingdom only paid for the 4 gig rasp, since it only has 2 possible jobs & a USB swap space proved viable on the jetson. It took a long time to finally do the deal, since the jetson nano was so expensive.

https://www.tensorflow.org/lite/examples/pose_estimation/overview

Looking at the top 8 bit pose estimator, MoveNet.Thunder, it should hit at least 11fps on the rasp 5 with all the post processing, maybe 12fps with overclocking. Helas, it only supports 1 animal. It might be viable for rep counting. The rep counter of the last 6 years has been a 256x256 body_25 at 12fps.

The notes for movenet on the rasp 4 were:

https://hackaday.io/project/162944/log/202923-faster-pose-tracker

The biggest multi animal network is movenet_multipose_lightning. movenet_multipose_lightning FP16 would probably hit 9fps on the rasp 5, & overclocking it to 10fps seems attainable.

---------------------------------------------------------------------------------------------------

The pose estimator tests were using tensorflow for python. This required a totally different installation than the C libraries.

Installing tensorflow for python had a new twist. pip no longer allows 3rd party python modules to be installed in the system-wide directories, so you have to make a virtual environment.

root@truckcam:/root% mkdir venv

root@truckcam:/root% python3 -m venv venv

Activate the virtual environment:

root@truckcam:/root% source venv/bin/activate

Install the libraries:

root@truckcam:/root% pip3 install tensorflow

pip3 install opencv-python

apt install libgl1

The catch with python is that it can't read from the fisheye keychain cam, only from a standard webcam. The past work used a 640x480 webcam.

The next step after installing tensorflow was downloading the demo program.

git clone https://github.com/ecd1012/rpi_pose_estimation

The model was posenet_mobilenet_v1_100_257x257_multi_kpt_stripped.tflite & the invocation was:

python3 run_pose_estimation.py --modeldir posenet_mobilenet_v1_100_257x257_multi_kpt_stripped.tflite --output_path pose_images

Something got this guy hung up on requiring a GPIO library, a physical button & an LED. Lions just commented out all that code & hacked a few more bits to make it save more than 1 image. This model hit 16fps but was really bad. Mobilenet V1 was the 1st clue.

The one which uses movenet_multipose_lightning is

https://github.com/tensorflow/examples/tree/master/lite/examples/pose_estimation/raspberry_pi

Download this directory with a github downloader program, rename it posenet & run setup.sh.

Helas, this one seems to use a variant of opencv which fails to compile on the rasp 5. Trying to run it with the cv2 package that worked in rpi_pose_estimation dies with the error: Could not load the Qt platform plugin "xcb".

The easiest way to run this one is to make a C program.

After many iterations, the 2D & 1D tracking programs for the raspberry pi all ended up inside

https://github.com/heroineworshiper/truckcam/truckflow.c

The android app for controlling it ended up inside

https://github.com/heroineworshiper/truckcam/

There seems to be no way around endlessly copying the same code because of the battle between many models with different infrastructure needs. The latest feeling is that instead of manetaining status bits, these programs should send print statements to the phone. There are too many problems with the webcams & no-one who doesn't know the source code is ever going to use this. Sending raw console output would require a streaming protocol, repetition of the error messages, or a counter indicating something was lost.
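The 3rd option, a counter indicating something was lost, could look like this minimal Python sketch. It assumes a transport that drops whole messages without corrupting them; StatusSender, StatusReceiver & the "<seq> <text>" framing are hypothetical, not what truckflow.c does.

```python
import itertools


class StatusSender:
    """Truck side: prefixes each console line with a sequence number."""

    def __init__(self):
        self._seq = itertools.count()

    def frame(self, text):
        return f"{next(self._seq)} {text}"


class StatusReceiver:
    """Phone side: counts messages that never arrived from gaps in the numbering."""

    def __init__(self):
        self.expected = 0
        self.lost = 0

    def receive(self, packet):
        seq_text, _, text = packet.partition(" ")
        seq = int(seq_text)
        if seq > self.expected:
            self.lost += seq - self.expected   # numbers skipped = messages lost
        # a lower number means the sender restarted, so just resync
        self.expected = seq + 1
        return text


if __name__ == "__main__":
    sender, receiver = StatusSender(), StatusReceiver()
    packets = [sender.frame(m) for m in ("webcam opened", "read failed", "retrying")]
    receiver.receive(packets[0])
    receiver.receive(packets[2])               # packets[1] was dropped
    print(receiver.lost)                       # 1
```

The phone never needs the lost text itself, only the fact that something went missing, which avoids repeating every error message.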

---------------------------------------------------------------------------------------------------------------------------------------------------------------

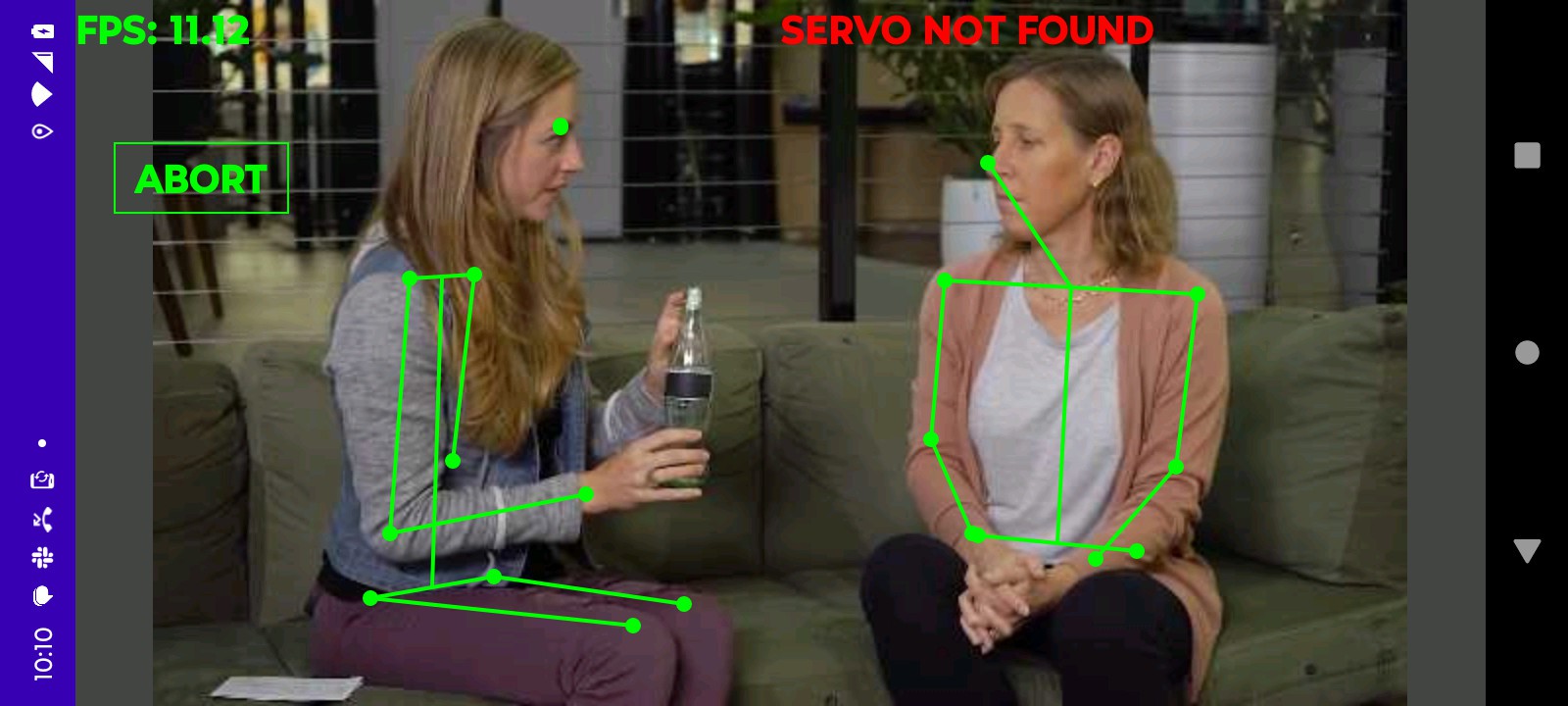

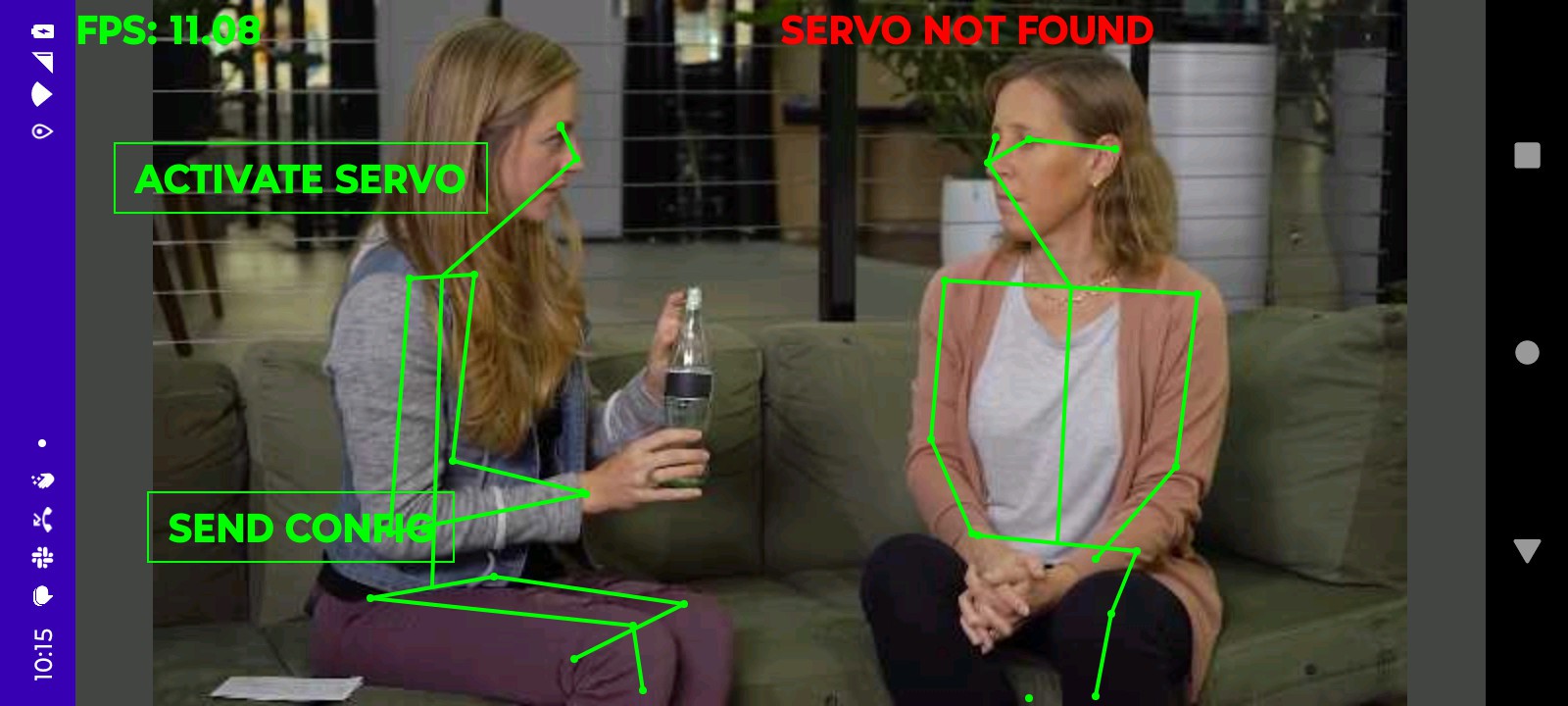

movenet_multipose.tflite has a dynamic input size which must be set with ResizeInputTensor. At 256x256, it runs at 17fps on the rasp 5. At 320x320 it runs at 11fps & is much better. Any size higher than 320 crashes it. It's hard coded to detect up to 6 animals.
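In python, the equivalent of ResizeInputTensor is resize_tensor_input on tf.lite.Interpreter. A minimal sketch of the setup step, assuming the TF Hub model card's rule that input sides be multiples of 32 & using the 320 ceiling observed here as a guard; prepare_multipose is a hypothetical helper name.

```python
def prepare_multipose(interpreter, size=320, max_size=320):
    """Set movenet_multipose's dynamic input to 1 x size x size x 3 & allocate it.

    `interpreter` is a tf.lite.Interpreter (or tflite_runtime's Interpreter)
    already loaded with movenet_multipose.tflite. Returns the input tensor index.
    """
    if size % 32 != 0:
        # the TF Hub model card asks for input sides that are multiples of 32
        raise ValueError(f"{size} is not a multiple of 32")
    if size > max_size:
        # not a documented limit: anything above 320 crashed on the rasp 5
        raise ValueError(f"{size} is above the {max_size} that ran on the rasp 5")
    index = interpreter.get_input_details()[0]["index"]
    interpreter.resize_tensor_input(index, [1, size, size, 3])
    interpreter.allocate_tensors()              # required again after every resize
    return index
```

Per frame, the caller would then pass a 1 x size x size x 3 frame (with the dtype reported by get_input_details()) to set_tensor at that index, call invoke() & read the [1, 6, 56] result with get_tensor().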

It's so much faster than the rasp 4 that it's amazing they're still using repurposed set top box chips. Smart TVs weren't exactly known for feeds & speeds. Everything might have just gotten pushed against the top end, since the top end couldn't go any faster.

On a test photo, it was highly dependent on threshold values for animals & body parts.
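Since the results swing so much with the thresholds, it helps to keep both as explicit knobs when decoding. A minimal Python sketch, assuming the output layout from the TF Hub model card: a [1, 6, 56] float tensor where each row holds 17 (y, x, score) keypoints in normalized coordinates followed by [ymin, xmin, ymax, xmax, score] for the instance. decode_multipose, Animal & the default thresholds are hypothetical, not values tuned here.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

KEYPOINTS = 17                          # COCO keypoint order, nose first
VALUES_PER_ANIMAL = KEYPOINTS * 3 + 5   # 17 x (y, x, score) + (ymin, xmin, ymax, xmax, score)

Part = Optional[Tuple[float, float, float]]   # (y, x, score), None if below threshold


@dataclass
class Animal:
    score: float
    box: Tuple[float, float, float, float]    # (ymin, xmin, ymax, xmax), normalized 0..1
    parts: List[Part]


def decode_multipose(output, animal_threshold=0.3, part_threshold=0.3):
    """Decode a [1, 6, 56] movenet_multipose output (nested lists or a numpy array).

    The threshold defaults are placeholders, not tuned values.
    """
    animals = []
    for row in output[0]:                      # up to 6 detection slots
        row = [float(v) for v in row]
        ymin, xmin, ymax, xmax, score = row[KEYPOINTS * 3:VALUES_PER_ANIMAL]
        if score < animal_threshold:
            continue                           # drop weak animals entirely
        parts = []
        for k in range(KEYPOINTS):
            y, x, s = row[k * 3:k * 3 + 3]
            parts.append((y, x, s) if s >= part_threshold else None)
        animals.append(Animal(score, (ymin, xmin, ymax, xmax), parts))
    return animals


if __name__ == "__main__":
    # fake output: 6 empty slots, 1 confident animal in slot 2 with only its nose visible
    fake = [[[0.0] * VALUES_PER_ANIMAL for _ in range(6)]]
    fake[0][2][0:3] = [0.25, 0.5, 0.9]          # nose y, x, score
    fake[0][2][KEYPOINTS * 3 + 4] = 0.8         # instance score
    animals = decode_multipose(fake)
    print(len(animals), animals[0].parts[0])    # 1 (0.25, 0.5, 0.9)
```

Keeping the 2 thresholds separate means a low confidence animal can be dropped entirely while a confident animal keeps only its confident body parts.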

On lion footage, it was a steaming pile of dog turd. Some key advantages of body25 on the jetson nano were detection with most of the body out of frame, detection from behind & fewer missed hits.

In a direct comparison, body25 at 8fps, 256x144 still outdid movenet at 11fps, 320x320. It seems movenet requires using efficientdet 1st to get hit boxes & then applying movenet to the hit boxes. It might require square tiles too, which doesn't work well for 2 overlapping bodies in horizontal positions.
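If movenet does need square tiles cut around efficientdet hit boxes, the crop step might look like this minimal Python sketch. square_tile & its center, clamp & slide policy are assumptions for illustration, not taken from the tensorflow examples.

```python
def square_tile(box, img_w, img_h):
    """Grow a pixel hit box (xmin, ymin, xmax, ymax) into a square tile.

    The tile is centered on the box, clamped to the frame size & slid back
    inside the frame. Returns (x, y, side).
    """
    xmin, ymin, xmax, ymax = box
    side = int(round(max(xmax - xmin, ymax - ymin)))
    side = min(side, img_w, img_h)               # can't exceed the frame
    x = int(round((xmin + xmax) / 2 - side / 2))
    y = int(round((ymin + ymax) / 2 - side / 2))
    x = max(0, min(x, img_w - side))             # slide back inside the frame
    y = max(0, min(y, img_h - side))
    return x, y, side


if __name__ == "__main__":
    # a lion lying down: wide & short box in a 640x480 frame
    print(square_tile((100, 300, 500, 400), 640, 480))   # (100, 80, 400)
```

For a body lying down, 3/4 of the 400 pixel tile is outside the 400x100 hit box, which leaves room for a 2nd overlapping body to end up in the same tile.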

Looks like body25 on the jetson nano is still the best option for the 2 animal 2D tracking while the rasp 5 is going to be limited to 1D tracking of a single lion. The 16 bit floating point on the jetson is still superior to higher resolution 8 bit. If only customized efficientdets worked on it.

It's possible that body25 could run faster & at higher resolution on the rasp 5 in 8 bit mode, but the mane limitation is time. The current solution works.

It shall not die.

lion mclionhead