Brief Introduction to the Project

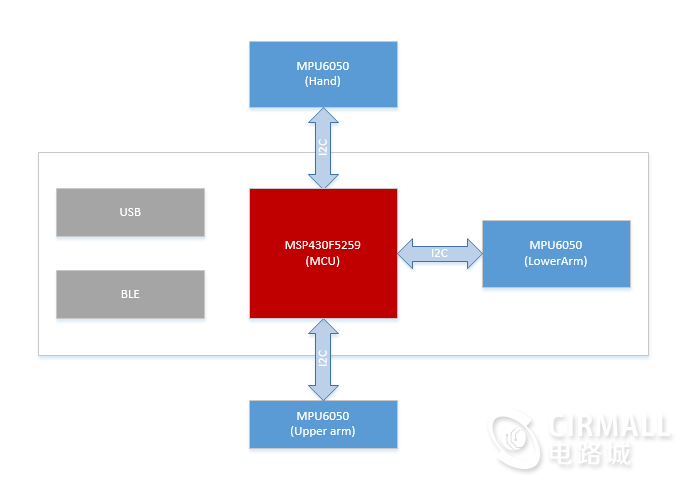

This project implements arm motion capture with a "three MPU6050 + MCU" hardware design. Data is sent to the host over an onboard USB or Bluetooth serial port in transparent (pass-through) mode.

Solution Description

1. Call the MPU6050's built-in DMP (Digital Motion Processor) directly to obtain each sensor's orientation as a quaternion, and send it to the host computer.

2. Bind each sensor to its bone in the 3D model through a dynamic initialization step, which determines the orientation offset between the sensor and the corresponding bone.

3. Combine this with inverse kinematics to compute the bone orientations in real time, achieving motion capture.
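The write-up does not detail how the dynamic initialization works internally. As a simplified illustration only, the Python sketch below assumes a single calibration instant at which each bone's target orientation in the model is known (for example a reference pose), quaternions stored as (w, x, y, z) unit tuples, and sensor orientations expressed in a frame shared with the model. The helper names `calibrate_offset` and `bone_orientation` are hypothetical.

```python
"""Sensor-to-bone offset sketch (hypothetical helpers, not the project's code).

Quaternions are (w, x, y, z) tuples and are assumed to be unit length.
"""


def q_mul(a, b):
    """Hamilton product a * b."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (
        aw * bw - ax * bx - ay * by - az * bz,
        aw * bx + ax * bw + ay * bz - az * by,
        aw * by - ax * bz + ay * bw + az * bx,
        aw * bz + ax * by - ay * bx + az * bw,
    )


def q_conj(q):
    """Conjugate, which is the inverse of a unit quaternion."""
    w, x, y, z = q
    return (w, -x, -y, -z)


def calibrate_offset(q_sensor_init, q_bone_init):
    """Fixed offset chosen so that q_sensor_init * offset == q_bone_init."""
    return q_mul(q_conj(q_sensor_init), q_bone_init)


def bone_orientation(q_sensor_now, offset):
    """Bone orientation at run time from the live sensor quaternion."""
    return q_mul(q_sensor_now, offset)


if __name__ == "__main__":
    sensor_at_init = (0.6, 0.8, 0.0, 0.0)  # example sensor pose at calibration
    bone_at_init = (0.8, 0.0, 0.0, 0.6)    # example bone pose in the model
    offset = calibrate_offset(sensor_at_init, bone_at_init)
    print(bone_orientation(sensor_at_init, offset))  # approximately bone_at_init
```

Because the offset is multiplied on the right, any later world-frame rotation of the sensor carries over unchanged to the bone: if the sensor turns by r, the bone turns by the same r.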

Hardware Architecture

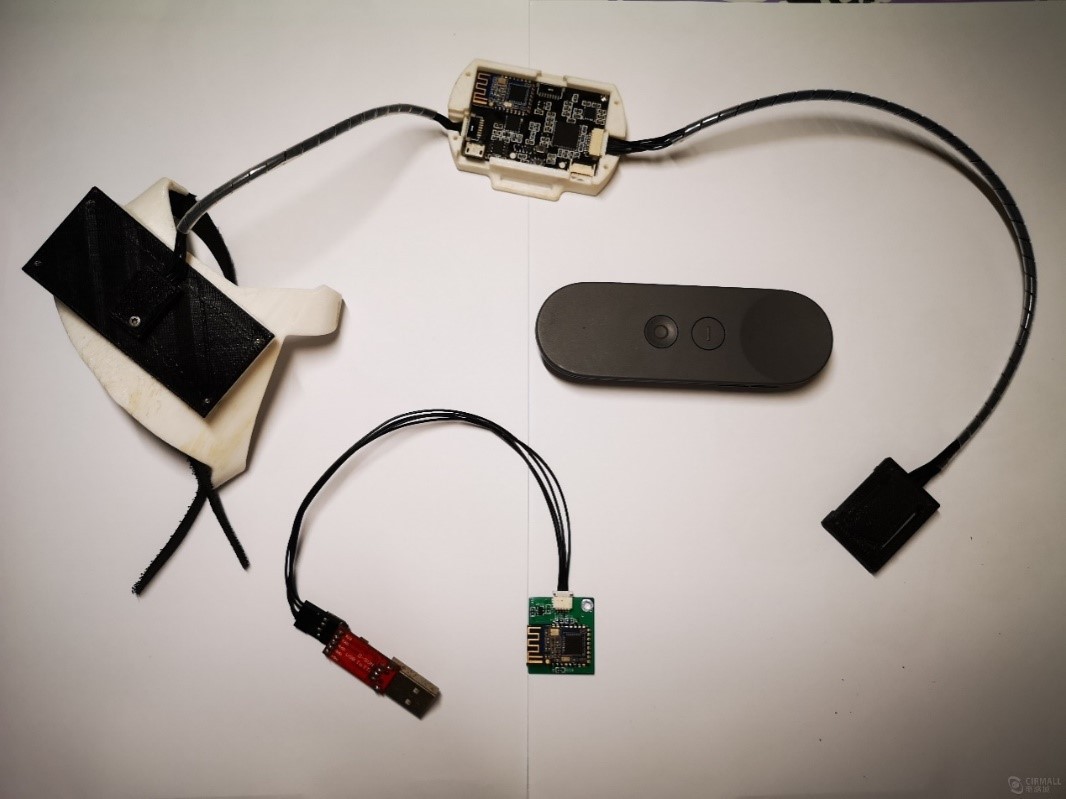

As shown in the figure above, the MCU is TI's MSP430F5259, and communication with the host computer can use either USB or BLE. The three MPU6050s are worn on the back of the hand, on the lower arm near the wrist, and on the upper arm near the elbow, and are used to compute the orientation of the hand, the lower arm, and the upper arm respectively. The IMU that tracks the lower arm is mounted directly on the MCU mainboard; the other two IMUs are separate modules.
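Because the three IMUs sit on one chain (upper arm, lower arm, hand), a bone hierarchy in a 3D engine usually wants each rotation relative to its parent rather than in world space. A minimal Python sketch, assuming each segment's world orientation is already available as a (w, x, y, z) unit quaternion in bone coordinates; `local_rotations` is a hypothetical helper. Unity's `Transform.localRotation` follows the same parent-relative idea.

```python
"""Parent-relative joint rotations for the upper arm -> lower arm -> hand chain.

Hypothetical helper for illustration; quaternions are (w, x, y, z) unit tuples
expressed in world space.
"""


def q_mul(a, b):
    """Hamilton product a * b."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (
        aw * bw - ax * bx - ay * by - az * bz,
        aw * bx + ax * bw + ay * bz - az * by,
        aw * by - ax * bz + ay * bw + az * bx,
        aw * bz + ax * by - ay * bx + az * bw,
    )


def q_conj(q):
    """Conjugate, which is the inverse of a unit quaternion."""
    w, x, y, z = q
    return (w, -x, -y, -z)


def local_rotations(q_upper, q_lower, q_hand):
    """Return (shoulder, elbow, wrist) rotations, each relative to its parent."""
    shoulder = q_upper                       # no torso sensor, so relative to world
    elbow = q_mul(q_conj(q_upper), q_lower)  # lower arm relative to upper arm
    wrist = q_mul(q_conj(q_lower), q_hand)   # hand relative to lower arm
    return shoulder, elbow, wrist


if __name__ == "__main__":
    upper = (0.6, 0.8, 0.0, 0.0)
    lower = (0.8, 0.0, 0.0, 0.6)
    hand = (0.0, 0.0, 1.0, 0.0)
    shoulder, elbow, wrist = local_rotations(upper, lower, hand)
    # Composing the local rotations down the chain recovers the hand's world pose.
    print(q_mul(q_mul(shoulder, elbow), wrist))
```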

Advantages and Disadvantages of the Solution

Advantages of the solution:

1. The hardware uses no magnetometer, so it is plug-and-play and avoids magnetometer problems such as repeated calibration and magnetic interference.

2. The same initialization method can be combined with other devices that provide orientation data, for example the Google Daydream controller, which appears in the video demonstration later.

3. Calling the MPU6050's built-in DMP directly offloads orientation computation from the MCU, which further reduces cost.
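With the DMP doing the sensor fusion, the host only has to decode the quaternion it produces. The sketch below assumes the firmware forwards the quaternion the way InvenSense's MotionDriver library returns it (four signed 32-bit integers in q30 fixed point, where 2**30 represents 1.0), in big-endian byte order. The byte order and the 16-byte layout are assumptions about this project's packet format, and `parse_q30_quaternion` is a hypothetical name.

```python
"""Decode a DMP quaternion forwarded by the MCU (assumed packet layout)."""
import math
import struct

Q30 = float(1 << 30)  # q30 fixed point: 2**30 represents 1.0


def parse_q30_quaternion(payload: bytes):
    """Decode 16 bytes (w, x, y, z as big-endian int32 in q30) to a unit quaternion."""
    if len(payload) < 16:
        raise ValueError("need at least 16 bytes")
    w, x, y, z = (v / Q30 for v in struct.unpack(">4i", payload[:16]))
    norm = math.sqrt(w * w + x * x + y * y + z * z)
    if norm == 0.0:
        raise ValueError("all-zero quaternion: data not ready or corrupted")
    # Renormalise to remove fixed-point rounding error.
    return (w / norm, x / norm, y / norm, z / norm)


if __name__ == "__main__":
    identity = struct.pack(">4i", 1 << 30, 0, 0, 0)
    print(parse_q30_quaternion(identity))
```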

Disadvantages of the solution:

1. The DMP is slow to start: the sensor orientation only stabilizes after about 15 seconds.

2. The orientation computed by the DMP drifts easily, and the result is not as good as that of a Mahony or Kalman filter.

3. The DMP buffers its results in a FIFO; if the FIFO is not serviced properly, it can easily overflow.

4. Without a magnetometer to fuse, drift (particularly in heading) will be present no matter which orientation algorithm is used. When the drift becomes noticeable, however, the device can simply be reinitialized.
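One common way to avoid the FIFO overflow in point 3 is to poll the FIFO byte count, read only whole packets, keep the newest one, and reset the FIFO if it has filled up, since after an overflow the packet boundaries can no longer be trusted. The MPU6050 FIFO holds 1024 bytes; the DMP packet size depends on which outputs the firmware enables, so it is passed in as a parameter. This is a hypothetical decision helper written in Python for clarity, not the project's firmware.

```python
"""FIFO servicing policy sketch (hypothetical helper, not the project firmware)."""

FIFO_SIZE = 1024  # MPU6050 FIFO capacity in bytes


def plan_fifo_read(fifo_count: int, packet_size: int):
    """Decide what to do with the FIFO given its current byte count.

    Returns one of:
      ("reset", 0): FIFO is full, so data may be misaligned; reset it.
      ("wait", 0):  no complete packet yet.
      ("read", n):  read n whole packets and keep only the newest one.
    Any trailing partial packet is left in the FIFO for the next poll.
    """
    if packet_size <= 0:
        raise ValueError("packet_size must be positive")
    if fifo_count >= FIFO_SIZE:
        return ("reset", 0)
    n = fifo_count // packet_size
    if n == 0:
        return ("wait", 0)
    return ("read", n)
```

On the MCU, the "read" branch would read n × packet_size bytes and decode only the last packet, so a slow main loop skips stale samples instead of letting them pile up.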

Project Demonstration

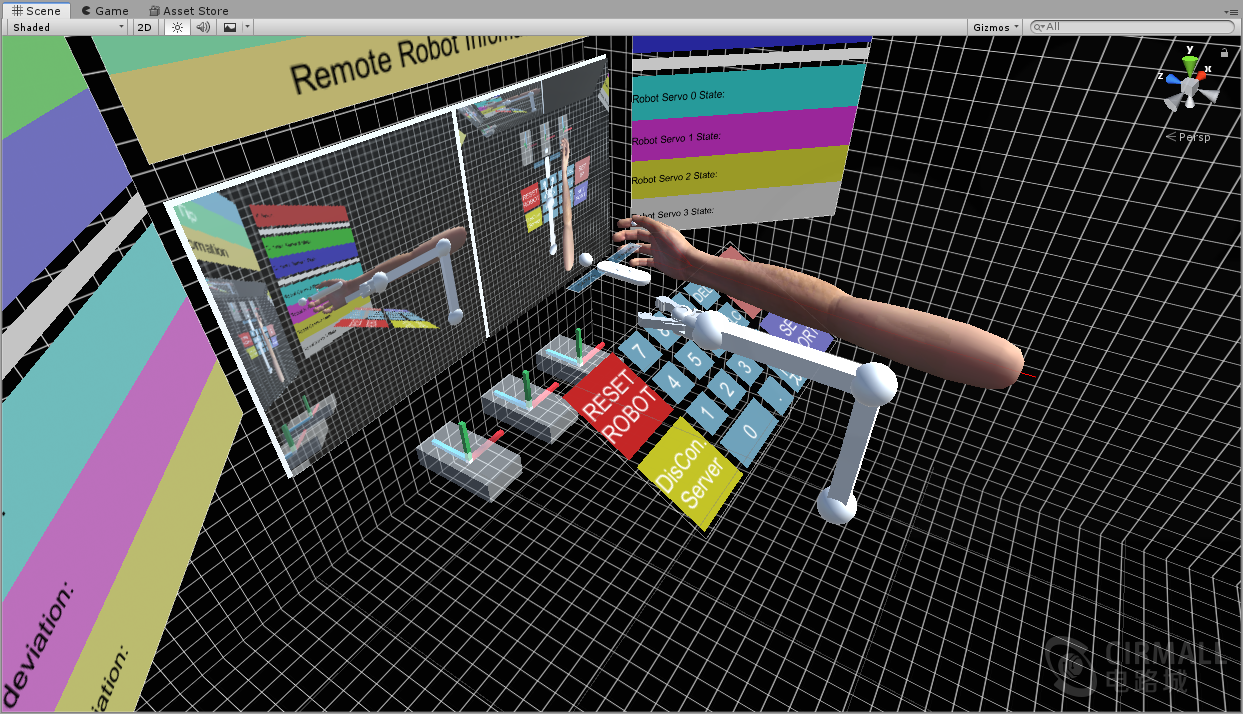

- Motion capture demonstration in Unity3D on a PC

The PC is connected to a USB BLE module configured in host mode. The demo mainly shows the dynamic initialization and the motion capture result after initialization. The hardware sends each IMU's orientation to the PC in real time; a dynamic initialization quickly computes the offset between each IMU's coordinate frame and the frame of the corresponding bone in the arm model; combining this result with each IMU's real-time orientation gives the orientation of each bone in the arm model, which realizes motion capture of the arm.

The "USB Arm" Unity project is in the Unity3D folder of the provided resources.

- Motion capture and Google Daydream fusion demonstration

The Daydream app is developed in Unity and reads data from the Google VR service directly through the official SDK, such as the controller's button events. Here, the app uses the orientation of the controller and the orientation data of the motion capture device directly. The fusion does not simply attach the controller to the arm model; instead, as shown in the video, it keeps the controller's different orientations relative to the palm synchronized. An APK is included in the resources for you to try.

Brief description:

The Daydream controller has a built-in nine-axis IMU, but the SDK only exposes the data provided by the Google VR service, not the IMU's raw orientation data. While using Daydream, the controller can be recalibrated at any time. Calibration is very "subjective": you point the controller forward and long-press the HOME button, but the controller does not have to "really" point forward; if you calibrate while pointing it in another direction, the virtual controller in the software will still appear to point forward. Therefore, the controller orientation obtained from the SDK has no absolute reference frame.
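Since the controller's frame is only fixed up to the direction it was pointing when HOME was pressed, one way to line it up with the motion capture data is to correct heading alone. The sketch assumes both devices measure pitch and roll against gravity, with +Y up and +Z forward, so their frames differ only by a rotation about the vertical axis; the yaw difference is captured once at a sync moment (for example with the controller held in the palm) and then applied to every new controller sample. All names are hypothetical.

```python
"""Heading-only alignment of a controller frame to the motion capture frame.

Assumptions (hypothetical, for illustration): (w, x, y, z) unit quaternions,
+Y is up, +Z is forward, and both frames agree on gravity.
"""
import math


def q_mul(a, b):
    """Hamilton product a * b."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (
        aw * bw - ax * bx - ay * by - az * bz,
        aw * bx + ax * bw + ay * bz - az * by,
        aw * by - ax * bz + ay * bw + az * bx,
        aw * bz + ax * by - ay * bx + az * bw,
    )


def yaw_of(q):
    """Heading in radians of the rotated +Z axis, measured in the XZ plane.

    Ill-defined when the rotated +Z axis points straight up or down.
    """
    w, x, y, z = q
    fx = 2.0 * (x * z + w * y)        # x component of q applied to (0, 0, 1)
    fz = 1.0 - 2.0 * (x * x + y * y)  # z component of q applied to (0, 0, 1)
    return math.atan2(fx, fz)


def yaw_correction(q_reference, q_controller):
    """Rotation about +Y that gives the controller the reference heading."""
    d = yaw_of(q_reference) - yaw_of(q_controller)
    return (math.cos(d / 2.0), 0.0, math.sin(d / 2.0), 0.0)


def align(q_controller, correction):
    """Apply the stored correction to a live controller quaternion."""
    return q_mul(correction, q_controller)
```

Correcting only yaw keeps the controller's own gravity-referenced pitch and roll intact, which matches the observation that recentering changes only where "forward" is.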

Development in Unity3D requires importing a plug-in for the motion capture device; its source code is included with the project source. This plug-in has some shortcomings: it lags when receiving data from the motion capture device, as can be seen in the video. To mitigate this, the hardware source code was modified to lower the acquisition rate and shorten the data (each floating-point value is now encoded in one byte instead of two).
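The source does not specify how a float is squeezed into one byte. A plausible sketch, assuming the values sent are quaternion components in [-1, 1]: scale by 127 and store the result as a signed (two's-complement) byte, giving a step of 1/127 ≈ 0.0079. The function names are hypothetical. With four components per IMU and three IMUs, one frame then needs 12 bytes of payload instead of 24.

```python
"""One-byte encoding of values in [-1, 1] (hypothetical scheme)."""

SCALE = 127  # largest magnitude a signed byte can hold symmetrically


def encode_component(v: float) -> int:
    """Clamp v to [-1, 1] and return it as a two's-complement byte (0..255)."""
    v = max(-1.0, min(1.0, v))
    return round(v * SCALE) & 0xFF


def decode_component(b: int) -> float:
    """Inverse of encode_component, up to a rounding error of 0.5 / SCALE."""
    signed = b - 256 if b > 127 else b
    return signed / SCALE


def encode_quaternion(q) -> bytes:
    """Pack a (w, x, y, z) quaternion into 4 bytes."""
    return bytes(encode_component(c) for c in q)


def decode_quaternion(data: bytes):
    """Unpack 4 bytes back into an approximate (w, x, y, z) quaternion."""
    return tuple(decode_component(b) for b in data)
```

The decoded quaternion is no longer exactly unit length, so the receiver may want to renormalize it before use.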

- A simple demo based on fusing the motion capture device with Daydream

Fusing Daydream with the motion capture device effectively adds 3 degrees of freedom to the Daydream controller (translation on top of its original rotation). This demo uses the added degrees of freedom to place cubes at different locations in the VR environment and to move the player through the virtual environment by "dragging", which feels similar to Minecraft. An APK is included in the resources for you to try.
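The extra translational degrees of freedom come from the arm's geometry: once the upper-arm and lower-arm orientations are known, the wrist (where the palm and controller are) can be placed by chaining segment vectors from the shoulder. This is plain forward kinematics, shown as a hedged Python sketch; the fixed shoulder point, the rest direction (0, -1, 0) and the segment lengths are assumptions, not values from the project.

```python
"""Forward kinematics sketch: elbow and wrist positions from segment rotations.

Hypothetical helper: the shoulder is a fixed point, both segments point along
rest_dir when unrotated, and segment lengths are supplied by the caller.
"""


def rotate(q, v):
    """Rotate 3-vector v by unit quaternion q = (w, x, y, z)."""
    w, x, y, z = q
    vx, vy, vz = v
    # t = 2 * (q.xyz x v)
    tx = 2.0 * (y * vz - z * vy)
    ty = 2.0 * (z * vx - x * vz)
    tz = 2.0 * (x * vy - y * vx)
    # v' = v + w * t + (q.xyz x t)
    return (
        vx + w * tx + (y * tz - z * ty),
        vy + w * ty + (z * tx - x * tz),
        vz + w * tz + (x * ty - y * tx),
    )


def add(a, b):
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def scale(v, s):
    return (v[0] * s, v[1] * s, v[2] * s)


def arm_points(shoulder, q_upper, q_lower, upper_len, lower_len,
               rest_dir=(0.0, -1.0, 0.0)):
    """Return (elbow, wrist) positions in the same units as the lengths."""
    elbow = add(shoulder, rotate(q_upper, scale(rest_dir, upper_len)))
    wrist = add(elbow, rotate(q_lower, scale(rest_dir, lower_len)))
    return elbow, wrist
```

Feeding the wrist position together with the controller's rotation into the VR scene is what turns the 3-DoF controller into a 6-DoF one.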

- A demo of robot arm drawing control based on fusing the motion capture device with Daydream

First, a 3D model of the robot arm is reconstructed (not reproducing every detail, only its key mechanical structure). Motion capture synchronizes the virtual arm with the real arm; the virtual arm then moves the virtual robot arm, which in turn is synchronized with the real robot arm. The end result is that the real arm moves the real robot arm remotely, through the air.

The Unity3D project "IngArmRemoteRobot" is provided in the resources.
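The write-up does not describe the robot arm's kinematics. To illustrate the step where the virtual robot arm follows a target point taken from the captured arm, the sketch below uses textbook two-link planar inverse kinematics, assuming the drawing arm can be modelled as two revolute joints in a plane. The link lengths and function names are hypothetical. Checking the result with the matching forward kinematics is a cheap guard against sign errors.

```python
"""Planar two-link inverse kinematics (textbook form, hypothetical model).

Assumes the drawing robot can be reduced to two revolute joints moving in a
plane with link lengths l1 and l2; angles are in radians.
"""
import math


def two_link_ik(x, y, l1, l2, mirror=False):
    """Joint angles (t1, t2) that put the end effector at (x, y)."""
    d2 = x * x + y * y
    d = math.sqrt(d2)
    if d > l1 + l2 or d < abs(l1 - l2):
        raise ValueError("target is outside the reachable workspace")
    c2 = (d2 - l1 * l1 - l2 * l2) / (2.0 * l1 * l2)  # law of cosines
    c2 = max(-1.0, min(1.0, c2))                     # absorb rounding error
    t2 = math.acos(c2)
    if mirror:
        t2 = -t2                                     # the other elbow branch
    t1 = math.atan2(y, x) - math.atan2(l2 * math.sin(t2), l1 + l2 * math.cos(t2))
    return t1, t2


def two_link_fk(t1, t2, l1, l2):
    """End-effector position for joint angles (t1, t2); used to check the IK."""
    return (l1 * math.cos(t1) + l2 * math.cos(t1 + t2),
            l1 * math.sin(t1) + l2 * math.sin(t1 + t2))
```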

Video presentation: https://www.cirmall.com/circuit/13555

Cirmall
