We have finished the Arduino code for the motor controller. Some work remains on the touch screen control and on reading the telemetry from the robot. We can now drive the robot's wheels with the joystick, as in the "Bástya 1.5" robot. The rewiring of the robot is also complete, and the motor controller no longer has contact errors.

The new program for this head mechanism is complete.

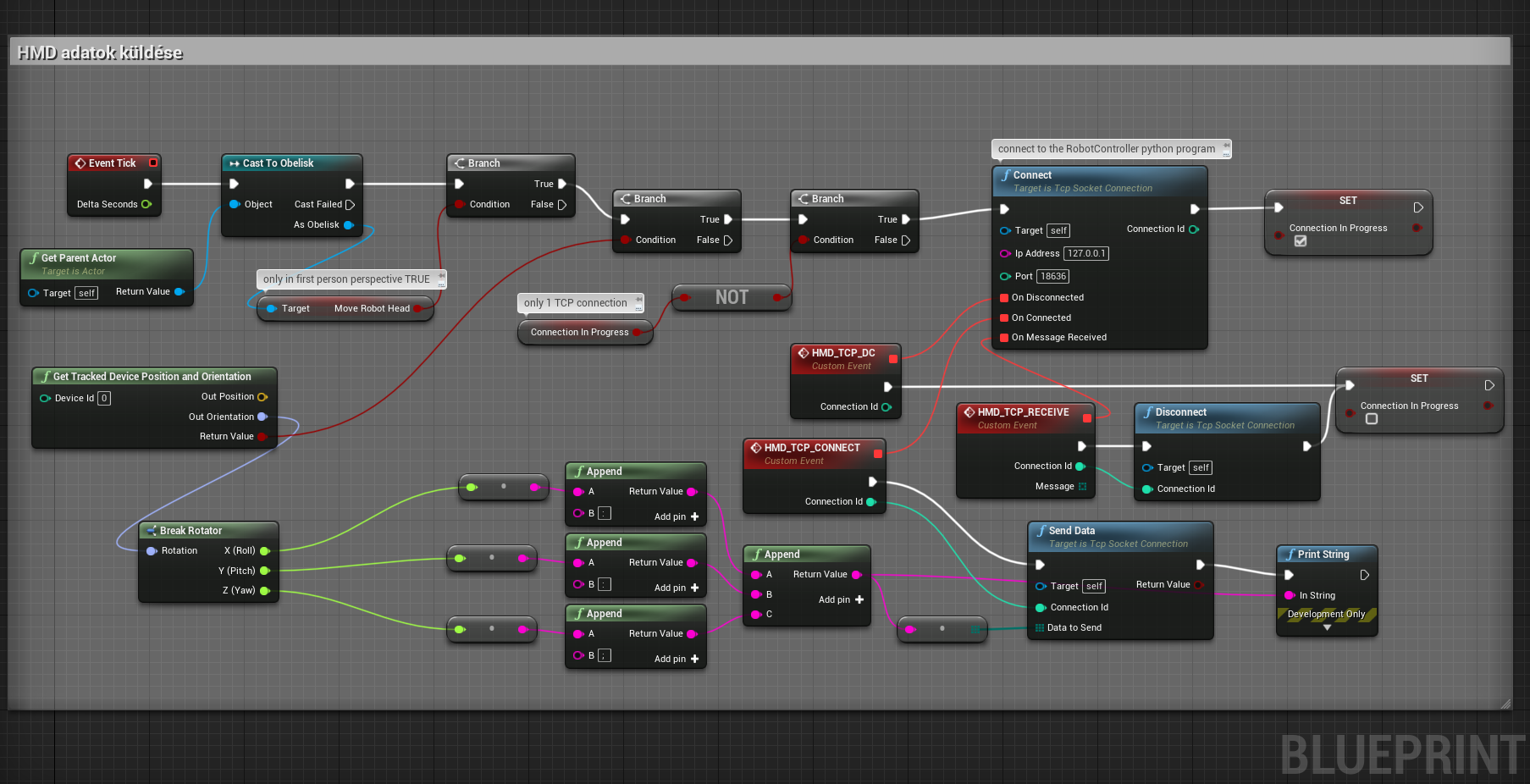

This is our working solution for reading the VR HMD position in Unreal Engine. We send the data to the robot over a TCP connection. The blueprint is built with this plugin: https://unrealengine.com/marketplace/en-US/product/tcp-socket-plugin
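A minimal sketch of what a robot-side receiver could look like, in Python. The message layout is an assumption: we pretend the blueprint sends one ASCII line per update in the form `pitch,yaw,roll` (degrees), and the port number 5005 is made up. The real format depends on how the blueprint serializes the HMD rotation. One point holds regardless of format: TCP is a byte stream, so a single `recv()` can contain several messages or only part of one, which is why the sketch buffers data until a newline arrives.

```python
import socket
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class HeadPose:
    """HMD orientation in degrees (hypothetical message layout)."""
    pitch: float
    yaw: float
    roll: float


def parse_pose(line: str) -> HeadPose:
    """Parse one 'pitch,yaw,roll' text line (assumed format)."""
    pitch, yaw, roll = (float(v) for v in line.strip().split(","))
    return HeadPose(pitch, yaw, roll)


def split_lines(buffer: bytes) -> Tuple[List[str], bytes]:
    """Return the complete newline-terminated lines and the unfinished remainder."""
    *complete, rest = buffer.split(b"\n")
    return [c.decode("ascii") for c in complete if c.strip()], rest


def serve(host: str = "0.0.0.0", port: int = 5005) -> None:
    """Accept one client (the Unreal Engine PC) and print every received pose."""
    with socket.create_server((host, port)) as server:
        conn, addr = server.accept()
        with conn:
            pending = b""
            while True:
                chunk = conn.recv(1024)
                if not chunk:  # the client closed the connection
                    break
                lines, pending = split_lines(pending + chunk)
                for line in lines:
                    print(addr, parse_pose(line))


if __name__ == "__main__":
    serve()
```

For example, `split_lines(b"1,2,3\n4,5")` returns `(["1,2,3"], b"4,5")`, holding back the incomplete second message until the rest of it arrives.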

In Unreal Engine we have a working function for switching between the first-person and third-person perspectives.

At the end of the blueprint there is a boolean variable that is toggled by the perspective change. The robot head moves in sync with the user's head only in the first-person perspective.
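The gating logic fits in a few lines. In the project it lives in the blueprint; the Python below only illustrates the idea. The `HeadCommand` type and the pan/tilt limits (`PAN_LIMIT`, `TILT_LIMIT`) are hypothetical, and clamping to a mechanical range is an assumed extra safety step, not part of the setup described above.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical mechanical limits of the robot head, in degrees.
PAN_LIMIT = 90.0
TILT_LIMIT = 45.0


@dataclass
class HeadCommand:
    pan_deg: float
    tilt_deg: float


def clamp(value: float, limit: float) -> float:
    """Limit value to the range [-limit, limit]."""
    return max(-limit, min(limit, value))


def head_command(first_person: bool, hmd_yaw: float, hmd_pitch: float) -> Optional[HeadCommand]:
    """Follow the user's head only in first-person view; otherwise send nothing."""
    if not first_person:
        return None  # third-person view: the HMD does not drive the robot head
    return HeadCommand(clamp(hmd_yaw, PAN_LIMIT), clamp(hmd_pitch, TILT_LIMIT))
```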

In VR, the first-person perspective shows what the robot head sees in stereoscopic view. Alternatively, you can switch to the third-person perspective and look around the robot. In the third-person view, we are working on displaying the data from the OAK-D devices.

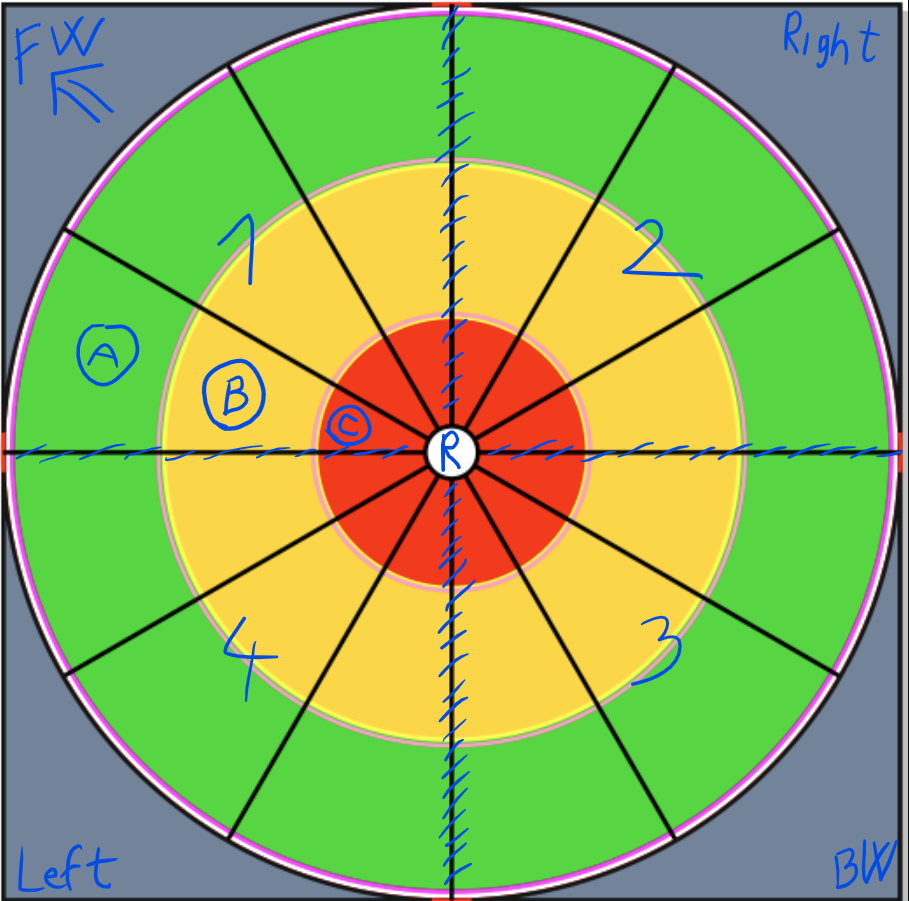

The first detection type will be object recognition with distance. We will divide the space around the robot in VR as shown in the picture.

The numbers represent the sides of the robot: 1 - front, 2 - right, 3 - back, 4 - left. The A, B and C fields represent the distance from the robot. Each detected object is placed in the appropriate field. If the object is in the green A field, it is at a safe distance, and we will show it in the third-person view in VR.
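A minimal sketch of the field assignment, assuming the object position is already expressed in the robot's frame in metres (x forward, y to the right). The fields in the picture are approximated here by four 90° sectors and three distance rings around the robot centre; the radii in `ZONE_LIMITS` are placeholders, not the values used on the robot.

```python
import math
from typing import Optional, Tuple

# Hypothetical zone radii in metres, measured from the robot centre (innermost first).
ZONE_LIMITS = (("C", 0.5), ("B", 1.5), ("A", 3.0))


def side_of(x: float, y: float) -> int:
    """Side number (1 front, 2 right, 3 back, 4 left) for a point in the robot frame."""
    angle = math.degrees(math.atan2(y, x))  # 0 = front, +90 = right, +-180 = back
    if -45.0 <= angle < 45.0:
        return 1
    if 45.0 <= angle < 135.0:
        return 2
    if -135.0 <= angle < -45.0:
        return 4
    return 3


def zone_of(x: float, y: float) -> Optional[str]:
    """'C' (red), 'B', 'A' (green), or None if beyond the outer A radius."""
    distance = math.hypot(x, y)
    for name, limit in ZONE_LIMITS:
        if distance < limit:
            return name
    return None


def classify(x: float, y: float) -> Tuple[int, Optional[str]]:
    """Combine side and zone for one detected object."""
    return side_of(x, y), zone_of(x, y)
```

For example, `classify(1.0, 0.0)` returns `(1, "B")`: with these placeholder radii, an object 1 m straight ahead falls into the B field of the front side.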

If the object is in B, it requires more attention, so we will limit the robot's speed in that direction. If the object is in the red C field, it is dangerously close, and we will prevent the robot from moving in that direction. (This is similar to a car's parking radar.)
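The speed limiting can be sketched the same way, taking detections as `(side, zone)` pairs that use the side numbers and field letters described above. The B factor of 0.3 is a made-up value, and the velocity is assumed to be split into a forward component `vx` and a sideways component `vy`; for a robot that cannot move sideways, `vy` is simply zero. Only motion toward an obstacle is reduced, so the robot can still move away from an object in the red field. Turning in place is not covered by this sketch.

```python
from typing import Dict, Iterable, Optional, Tuple

# Hypothetical speed factors per field; None means no object inside the fields.
SPEED_FACTOR = {"C": 0.0, "B": 0.3, "A": 1.0, None: 1.0}


def side_factors(detections: Iterable[Tuple[int, Optional[str]]]) -> Dict[int, float]:
    """Most restrictive speed factor for each side (1 front, 2 right, 3 back, 4 left)."""
    factors = {1: 1.0, 2: 1.0, 3: 1.0, 4: 1.0}
    for side, zone in detections:
        factors[side] = min(factors[side], SPEED_FACTOR[zone])
    return factors


def limit_velocity(vx: float, vy: float, factors: Dict[int, float]) -> Tuple[float, float]:
    """Scale each velocity component by the factor of the side it moves toward.

    vx > 0 moves toward side 1 (front), vx < 0 toward side 3 (back),
    vy > 0 toward side 2 (right), vy < 0 toward side 4 (left).
    """
    vx *= factors[1] if vx > 0 else factors[3]
    vy *= factors[2] if vy > 0 else factors[4]
    return vx, vy
```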

We are working on visualizing the raw depth data of the OAK-D cameras with Unreal Engine's built-in "Niagara visual effects system". It will serve only as visual feedback in VR, helping the operator get to know the surroundings better.
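Before a particle system can draw the depth data, each depth pixel has to become a 3D point. A common way to do this is pinhole back-projection, x = (u - cx) * z / fx and y = (v - cy) * z / fy, sketched below in plain Python as one possible approach. Assumptions: the depth image is in millimetres with 0 meaning no data, the intrinsics `fx`, `fy`, `cx`, `cy` are placeholders that would come from the camera's calibration, and the camera uses the usual x-right, y-down, z-forward convention. The result is converted to Unreal Engine's axes (X forward, Y right, Z up, centimetres). How the points are handed to Niagara is not covered here.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def depth_to_points(depth_mm: Sequence[Sequence[int]], fx: float, fy: float,
                    cx: float, cy: float, stride: int = 4) -> List[Point]:
    """Back-project a depth image (millimetres, 0 = no data) with a pinhole model.

    Returns points in Unreal Engine axes (X forward, Y right, Z up, centimetres),
    keeping only every `stride`-th pixel in each direction to limit the particle count.
    """
    points: List[Point] = []
    for v in range(0, len(depth_mm), stride):
        row = depth_mm[v]
        for u in range(0, len(row), stride):
            z = row[u]
            if z == 0:
                continue  # no valid depth for this pixel
            x_cam = (u - cx) * z / fx  # right of the optical axis
            y_cam = (v - cy) * z / fy  # below the optical axis
            # camera (right, down, forward) in mm -> Unreal (forward, right, up) in cm
            points.append((z / 10.0, x_cam / 10.0, -y_cam / 10.0))
    return points
```

The `stride` parameter is a trade-off: a full-resolution depth frame would produce a very large number of particles, while skipping pixels keeps the visualization light at the cost of detail.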

BTom
