Bicycle Dashcam

Bicycle Dashcam with License Plate Recognition (ALPR)


ExampleImagePiCameraWhileRiding.jpg: Example of a photo taken from the Pi mounted to my bicycle while riding. (JPEG image, 2.21 MB, 10/09/2019 at 00:53)

I wanted to build my own vision model for a few reasons:

The first step was building a library of images to train a model with. I sorted through hundreds of images I’d taken on rides in September, and selected 65 where the photos were clear, and there were license plates in the frame. As this was my first attempt, I wasn’t going to worry about sub-optimal situations (out of focus, low light, over exposed, etc…). I then had to annotate the images – draw boxes around the license plates in the photos, and “tag” them as plates. I looked at a couple tools – I started with Microsoft’s VoTT, but ended up using labelimg. Labelimg was efficient, with great keyboard shortcuts, and used the mouse scroll wheel to control zoom, which was great for labeling small details in larger photos.
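For illustration, here is a minimal sketch of what the annotation data looks like once it is ready for YOLO-style training. labelimg can save boxes as Pascal VOC XML or directly in YOLO text format; the sketch assumes VOC XML was saved and needs converting. The function name, the sample XML, and the single "plate" class are assumptions for the example, not the files from this project.

```python
import xml.etree.ElementTree as ET


def voc_to_yolo(xml_text, class_names):
    """Convert one Pascal VOC annotation (XML text) to YOLO label lines.

    Each YOLO line is: class_id x_center y_center width height,
    with all four box values normalized by the image size.
    """
    root = ET.fromstring(xml_text)
    size = root.find("size")
    img_w = float(size.find("width").text)
    img_h = float(size.find("height").text)

    lines = []
    for obj in root.findall("object"):
        class_id = class_names.index(obj.find("name").text)
        box = obj.find("bndbox")
        xmin = float(box.find("xmin").text)
        ymin = float(box.find("ymin").text)
        xmax = float(box.find("xmax").text)
        ymax = float(box.find("ymax").text)
        x_center = (xmin + xmax) / 2 / img_w
        y_center = (ymin + ymax) / 2 / img_h
        width = (xmax - xmin) / img_w
        height = (ymax - ymin) / img_h
        lines.append(
            f"{class_id} {x_center:.6f} {y_center:.6f} {width:.6f} {height:.6f}"
        )
    return lines


# Hypothetical annotation: one plate in the middle of a 4000x3000 photo.
SAMPLE_XML = """<annotation>
  <size><width>4000</width><height>3000</height><depth>3</depth></size>
  <object>
    <name>plate</name>
    <bndbox><xmin>1900</xmin><ymin>1450</ymin><xmax>2100</xmax><ymax>1550</ymax></bndbox>
  </object>
</annotation>"""

print(voc_to_yolo(SAMPLE_XML, ["plate"]))
```

The normalized widths make it obvious how small a plate is relative to the frame, which is why zooming in while labeling matters.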

I then tried one tutorial after another, and struggled to get them to work. Many examples were set up to run on Google Colab. When I followed these instructions and got to the part where I was actually training the model, Colab would time out. Colab is only intended for short interactive sessions – perhaps it wouldn’t work for me because I was working with higher resolution images, which take more computing time.

What I ended up doing was manually running the steps in the Train_YoloV3.ipynb notebook from pysource, straight into the console. As my home PCs don’t have dedicated GPUs, I set up a p3.2xlarge Amazon EC2 instance to run the training. If memory serves, training against those 65 images, using the settings from that tutorial, took a couple of hours.

I took the model I created from my September rides, and then tested it against images from my October rides – I’m surprised how well it worked.

Since training that model, I’ve been on the lookout for an nVidia video card I can use for training at home. It’s hard to know for sure, but it seems it wouldn’t take long to recoup the cost of a GPU vs training on an EC2 instance in the cloud, and I can always resell a GPU. I’ve tried a few times with the fastest CPU I have in the house (a Ryzen 3400g), and it just doesn’t seem feasible. I haven’t seen a cheap GPU option, and the prices just seem to be going higher since I started looking in November.

I don’t have usable code or a useful model to share at this point. I’m mostly learning and trying to figure out the process.

One of the features I have in mind for my bicycle dashcam is license plate recognition. I experimented with the OpenALPR license plate recognition library and a couple of different Pi cameras. I encountered a few challenges:

I acquired the Luxonis Oak-D AI-accelerated camera for three reasons: to experiment with different image sensors that could address my image quality challenges, to try the stereo vision/depth sensing capabilities, and to use the AI acceleration to increase processing speed. This spring, I mounted it to my bike and started capturing images on my rides.

I had issues with my Pi 3 – it would stop running reliably after a minute or two – I suspect it had been damaged by vibration from previous rides, being strapped to my bike rack. I acquired a new Pi 4, and was up and running again.

Initially, with the Oak-D setup, I had a lot of the same image quality problems I was having with the Pi 1 and 2 cameras – lots of out-of-focus images. The camera just kept on trying to focus, which is a hard problem when taking photos in moving traffic on a bumpy bicycle ride. My application would also crash – this turned out to be due to buffers filling up, as I was writing more data to my USB thumb drive than it could handle. I ended up getting acceptable results by reducing my capture speed to 2 fps, recording at 4056×3040, turning auto focus off, locking the focus at its 120 setting, and setting the scene mode to sports, in the DepthAI API as follows:

rgb.setFps(2)

rgb.initialControl.setManualFocus(120)

rgb.initialControl.setSceneMode(dai.CameraControl.SceneMode.SPORTS)

rgb.initialControl.setAutoFocusMode(dai.RawCameraControl.AutoFocusMode.OFF)

With these settings, images are focused in the narrow range where it’s possible to read a license plate – when cars are too far back, the plates are impossible to read anyway, and it doesn’t matter if that’s out of focus. Luxonis will soon launch a model with fixed focus cameras, which should further improve image quality in high vibration environments. I hope to try this out in the future.
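Coming back to the buffer crashes mentioned above: a quick back-of-the-envelope check shows why lowering the frame rate helps. The sketch below compares the incoming data rate with what the drive can sustain. The function name and all numbers (frame size, drive speed, buffer size) are made-up placeholders, not measurements from this setup.

```python
def seconds_until_buffer_full(frame_bytes, fps, drive_bytes_per_s, buffer_bytes):
    """Estimate how long a write buffer lasts when frames arrive faster
    than the drive can store them. Returns None if the drive keeps up."""
    incoming = frame_bytes * fps            # bytes produced per second
    surplus = incoming - drive_bytes_per_s  # bytes piling up per second
    if surplus <= 0:
        return None  # the drive keeps up, so the buffer never fills
    return buffer_bytes / surplus


# Placeholder values: 5 MB frames, a drive sustaining 20 MB/s, 300 MB of buffer.
print(seconds_until_buffer_full(5_000_000, 10, 20_000_000, 300_000_000))
print(seconds_until_buffer_full(5_000_000, 2, 20_000_000, 300_000_000))
```

With these placeholder figures, 10 fps overruns the buffer after a few seconds while 2 fps never does, which matches the general shape of the problem even if the real numbers differ.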

I wanted to build a library of images I could later use to test against various machine vision models, and potentially train my own. I posted the question on the Luxonis Discord channel – their team directed me to their gen2-record-replay code sample. This code allows you to record imagery, and later play it back against a model – it was exactly what I needed. So I started to collect imagery on my next few rides.

I started a fresh design with a different idea, a new camera, new models, and new code, which I submitted as an entry for the Toronto ♥️’s Bikes Make-a-Thon.

This version of my dashcam has blind spot detection, similar to what you would see in modern cars. I'm now using a USB AI accelerated camera from Luxonis mounted to my bicycle seat post, and my smartphone as a display. It’s a few hundred lines of Python code that builds on a freely available AI vehicle recognition model from the Intel Open Model Zoo. I’ve built on the license plate recognition and MJPEG video streaming sample code from Luxonis that was supplied with the OAK-D camera. I tether the laptop (I hit a snag I didn't have time to address with my Pi) to the smartphone using wifi, and I use an iOS app called IPCams to view the video stream.

The vehicles are recognized and identified. The video is streamed over wifi to the smartphone. A caution alert is added to the video when a vehicle is detected.
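As a rough sketch of the decision behind such a caution alert (not the code from the repository linked below), the check could look like the following. It assumes each detection carries a label, a confidence score, and a bounding box normalized to the frame. The `Detection` class, the thresholds, and the idea of ignoring small, distant boxes are all assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    label: str         # class name reported by the model
    confidence: float  # score in [0, 1]
    xmin: float        # box corners, normalized to [0, 1]
    ymin: float
    xmax: float
    ymax: float


def needs_caution(detections, min_confidence=0.5, min_area=0.02):
    """Return True if any confident vehicle detection fills enough of the
    frame to be considered close. Both thresholds are placeholder values."""
    for det in detections:
        if det.label != "vehicle" or det.confidence < min_confidence:
            continue
        area = (det.xmax - det.xmin) * (det.ymax - det.ymin)
        if area >= min_area:
            return True
    return False


close_car = Detection("vehicle", 0.9, 0.4, 0.4, 0.7, 0.8)
distant_car = Detection("vehicle", 0.9, 0.50, 0.50, 0.55, 0.55)
print(needs_caution([close_car]), needs_caution([distant_car]))
```

Using box area as a stand-in for distance is a simplification; the depth data from the stereo cameras would be a better signal if it is available.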

This camera mount worked well for me:

https://www.aliexpress.com/item/32795104954.html

And I used this app to view the MJPEG stream on the phone:

https://apps.apple.com/us/app/ipcams-ip-camera-viewer/id1045600272

And... you can download the source for this iteration here:

https://github.com/raudette/SmartDashcamForBikes

I was reading an article about Oak Vision Modules on Hackaday, and thought, wow, this is the PERFECT platform for my bicycle dashcam. The Oak Vision module is a Kickstarter project with camera modules, depth mapping capability using stereo vision, and a processor (Intel Movidius Myriad X) designed to accelerate machine vision in 1 package for $149US – see https://www.kickstarter.com/projects/opencv/opencv-ai-kit/

At the 3:55 mark in the marketing video, I THEN see the board mounted to a bicycle saddle, which is EXACTLY what I want to do:

I went to see what I could find about the developers, and read about them on TechCrunch: “The actual device and onboard AI were created by Luxonis, which previously created the CommuteGuardian, a sort of smart brake light for bikes that tracks objects in real time so it can warn the rider. The team couldn’t find any hardware that fit the bill so they made their own, and then collaborated with OpenCV to make the OAK series as a follow-up.”

This is pretty exciting – CommuteGuardian is the first project I’ve come across with similar goals to mine: Prevent and Deter Car-Bicycle accidents. I exchanged a few emails with Brandon Gilles, the Luxonis CEO, and he shared some background – they also checked out OpenALPR, and started work on mobile phone implementations, but decided to move to a custom board when the Myriad X processor was launched.

You can read more about CommuteGuardian here: https://luxonis.com/commuteguardian and https://discuss.luxonis.com/d/8-it-works-working-prototype-of-commute-guardian

I decided to back Luxonis’ Oak project. I’ll have to learn some new tools, but this board will be much faster than the Pi for image analysis (much faster than the 1 frame per 8 seconds I’m getting now!). The stereo vision capabilities on the Oak-D will allow for depth mapping, a capability for which I had previously been considering adding a LIDAR sensor. Looking forward to receiving my Oak-D, hopefully in December. In the interim, I’ll continue to experiment with different license plate recognition systems, read more about the tooling I can use with Oak-D, and perhaps try a different camera module on the Pi.

On a sunny mid-June Saturday, I took my bike for a ride down Yonge St to Lake Ontario with my bicycle dashcam, testing my latest changes (May 18th). Over the course of a 2 hour ride, taking a photo about every 10 seconds:

I’m going to try running these photos through alternate ALPR engines, and compare results. On this run, I tested the Pi Camera V2’s various sensor modes: the streaming modes at 1920×1080 30 fps, 3280×2464 15 fps, 640×922 30 fps, 1640×922 40 fps, 1280×720 41 fps, 1280×720 60 fps, as well as the still mode at 3280×2464. Further testing is likely still required, but I continue to get the best results from the still mode – all of the successful matches were shot using still mode.

I’m getting better results than I had on previous runs as a result of tweaking the pi-camera-connect NodeJS library to:

However, the images are still not as good as I would like.

The Plate Recognizer service has an excellent article on Camera Setup for ALPR. It highlights many of the challenges I’m seeing with my setup:

I might order the latest Pi camera with the zoom lens and see if I get better results.

Reviewing the data from my ride, there’s also an issue with my code that pulls the GPS coordinates from the phone, which I didn’t see when testing at home. I figure the phone is locking while I ride and no longer running the JavaScript – I’ll try using the NoSleep.js library before my next test run.

In February, I saw Robert Lucian's Raspberry Pi based license plate reader project on Hackaday. His project is different, in that he wrote his own license plate recognition algorithm, which runs in the cloud - the Pi feeds the images to the cloud for processing. He had great results - 30 frames per second, with 1 second of latency. This is awesome, but I want to process on the device - I want to avoid cellular data and cloud charges. Once I get this working, I'll look at improving performance with a more capable processor, like the NVIDIA Jetson or the Intel Neural Compute Stick.

In any case, Robert was getting great images from his Pi - so I asked him how he did it. He wrote me back with a few suggestions - he is using the Pi camera in stream mode 5 at 30 fps.

I wondered if one of the issues was my Pi Camera (v1), so I ordered a version 2 camera (just weeks before the HQ camera came out!). The images I was getting still weren't great. I'm using the pi-camera-connect package, here's what I've learned so far:

I still have more tweaking to do with the Pi Camera 2. If after a few more runs, I don't get the images I need, I'll try the HQ camera or try interfacing with an action camera.

Finally, I've added GPS functionality. If you access the application with your phone while you're riding, the application will associate your phone's GPS coordinates with each capture.
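One way such an association could work, sketched under assumptions: the phone reports timestamped fixes, and each capture is matched to the fix closest in time, as long as it is not too stale. The function name, the tuple layout, and the 15 second cutoff are hypothetical and not taken from the application's code.

```python
from bisect import bisect_left


def nearest_fix(fixes, capture_time, max_gap_s=15.0):
    """Return the GPS fix closest in time to a capture, or None.

    fixes: list of (timestamp_s, latitude, longitude), sorted by timestamp.
    max_gap_s: placeholder cutoff; older or newer fixes are rejected.
    """
    if not fixes:
        return None
    times = [fix[0] for fix in fixes]
    i = bisect_left(times, capture_time)
    # Only the fix just before and just after the capture can be nearest.
    candidates = fixes[max(i - 1, 0):i + 1]
    best = min(candidates, key=lambda fix: abs(fix[0] - capture_time))
    if abs(best[0] - capture_time) > max_gap_s:
        return None
    return best


# Made-up fixes, ten seconds apart.
fixes = [(0.0, 43.70, -79.40), (10.0, 43.71, -79.40), (20.0, 43.72, -79.40)]
print(nearest_fix(fixes, 12.0))
```

The cutoff matters in practice: if the phone stops reporting, captures should end up with no location rather than a stale one.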

I have an old Canon S90 point and shoot camera that I haven't been using. This weekend, I thought I'd try to see if I could use it for this project. It isn't rugged, but I thought it might be fine for taking this project a bit further, as it takes great photos, and Canon did make a rugged waterproof case for it, if I could find one. It also has image stabilization, which should help with getting usable photos on my bumpy ride.

I looked at how I might be able to control it, and download photos for processing. First, I looked at PTP, the protocol for downloading images from cameras. PTP allows for telling the camera to take a picture, and then download it. I tried a PTP Node library, which didn't work, and then I tried a command line PTP control tool called gphoto2. Gphoto2 worked great for downloading photos from the command line, but wouldn't instruct the camera to take a picture - it turns out, my S90 doesn't support that feature (although its predecessor, the S80, did!).

Then I thought, I could take regular pictures on a timer, and then download them for processing. I had done this before using CHDK custom firmware for Canon cameras. CHDK allows you to do all sorts of things with your camera that it can't do out of the box, including automation and scripting. I thought I would use a pre-written script to take a photo every 10 seconds, and then download it with gphoto2. But as soon as I connect the camera with PTP/gphoto2, the script stops. So I'm back to looking for a camera, or camera module, that I can control from a Pi & can take useable photos in a high-vibration environment.

We had an early snowfall here in Toronto, and I've put my bike into storage for the winter. I have given some thought about next steps though.

First, I found a really easy way to implement a display. I came across this article about a Vancouver cyclist who tries to "beat" the packed buses along West Broadway with a display-equipped Pix Backpack. This backpack might be the quickest way to implement a display.

Second, I started thinking about cameras. Looking at the footage from Cycliq cycling cameras, it's definitely possible to get steady footage on a bicycle. I explored modules and spec sheets for various modules from Arducam and camera-modules.com - anything "fast" enough should take a suitable shot. A co-worker suggested just using a GoPro, but the challenge is, how do you pull the image off to process it as you're riding? Reading various action camera reviews, I came across Yi Action cameras. Although none of the libraries seem to be current, they've made SDKs available to interface with their cameras: https://github.com/YITechnology/YIOpenAPI

I've reviewed the footage on Youtube from Yi cameras mounted to bicycles - it looks pretty good. I'll probably pursue this route.
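To put a rough number on what "fast" enough means, the sketch below estimates motion blur in pixels for an object moving across the frame during the exposure. It uses simple pinhole geometry and is a worst-case approximation (motion straight across the field of view). The function name and the example values for speed, distance, field of view, and resolution are assumptions, not measurements.

```python
import math


def motion_blur_px(rel_speed_kmh, exposure_s, distance_m, hfov_deg, image_width_px):
    """Approximate blur (in pixels) for motion across the frame.

    rel_speed_kmh: speed of the subject relative to the camera
    exposure_s:    shutter time in seconds
    distance_m:    distance from camera to subject
    hfov_deg:      horizontal field of view of the lens
    """
    # Width of the scene covered by the image at the subject's distance.
    scene_width_m = 2 * distance_m * math.tan(math.radians(hfov_deg) / 2)
    px_per_m = image_width_px / scene_width_m
    distance_moved_m = (rel_speed_kmh / 3.6) * exposure_s
    return distance_moved_m * px_per_m


# Placeholder scenario: 36 km/h relative speed, 5 m away, 90 degree lens, 3280 px wide.
for exposure in (1 / 100, 1 / 1000):
    print(exposure, round(motion_blur_px(36, exposure, 5, 90, 3280), 2))
```

Blur scales linearly with exposure time, so a ten times shorter exposure gives ten times less smear, at the cost of needing more light or more sensor gain.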

Following a suggestion from user Gerben, I looked for a way to isolate the camera from vibrations while riding.

A quick search for "DIY camera vibration isolator" showed that many people were building wire rope isolators:

DIY: Build Your Own Anti-Vibration Camera Drone Mount for Only $10!

DIY Wire Rope Vibration Isolator for RC CAR Camera Mount ( Part 1)

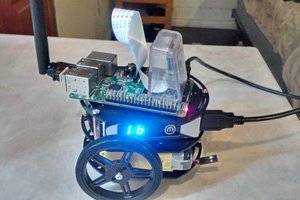

I built an isolator with some wire and plywood, and mounted it along with the Pi to my bike:

I didn't tune it. I'll have to look into how to match the springiness of the wire to the weight of the Pi by tweaking the wire length. But it wasn't enough - I think a faster camera is still required. Here are some sample photos from my bike, with the Pi mounted on the isolator:

Photo isn't clear enough to make out the writing on the green sign, or the plates on that car:

Photo is clear enough to make out objects that aren't in motion - Finch Ave., Soban cafe. But not the cars in motion.

I may try to "tune" the isolator, but I think either way, I need a better camera sensor.
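For reference, "tuning" an isolator usually means getting the natural frequency of the spring-and-mass system well below the vibration frequencies to be blocked. The sketch below uses the textbook undamped spring-mass model, which is a simplification since wire rope isolators also provide damping. The stiffness, mass, and vibration frequencies are placeholder values, not measurements of this mount.

```python
import math


def natural_frequency_hz(stiffness_n_per_m, mass_kg):
    """Natural frequency of an undamped spring-mass system."""
    return math.sqrt(stiffness_n_per_m / mass_kg) / (2 * math.pi)


def transmissibility(excitation_hz, natural_hz):
    """Ratio of transmitted to input vibration amplitude (undamped model).

    Values below 1 mean the mount isolates; values above 1 mean it amplifies.
    """
    r = excitation_hz / natural_hz
    if r == 1.0:
        return math.inf  # resonance in the idealized undamped model
    return 1.0 / abs(1.0 - r * r)


# Placeholder values: 0.1 kg payload on a mount with 100 N/m stiffness.
f_n = natural_frequency_hz(100.0, 0.1)
for f in (3.0, 20.0, 50.0):
    print(f, round(transmissibility(f, f_n), 3))
```

In this model the mount only isolates above about 1.41 times the natural frequency, and it amplifies vibration near resonance. A softer wire or a heavier payload lowers the natural frequency and widens the range that gets attenuated.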


Sounds awesome! Even with the Pi Camera, it works really well when the objects are stationary - the issues I encountered are when things are in motion.

I'm currently stumped on how to get images of sufficient quality for the OpenALPR algorithm to work. An example of what I'm getting with the Pi camera mounted to my bicycle is in the files section - see ExampleImagePiCameraWhileRiding.jpg

I'm not sure if an inexpensive (<$200) camera could be purchased that would be sufficiently fast, or have sufficiently good image stabilization, to create images suitable for image processing by OpenALPR.

I'm currently considering:

- cameras with a global shutter, such as https://www.aliexpress.com/item/33015394467.html or https://www.arducam.com/product/ov9281-mipi-1mp-monochrome-global-shutter-camera-module-m12-mount-lens-raspberry-pi/

- a camera with more resolution, such as a Logitech Brio

Any thoughts?

I think there is too much vibration in the frame of the bike. Try to mount the pi (or only the camera module) in such a way that the vibrations don't get transferred as much. For example, put some foam underneath it, or hang it from some bungee cords.

Maybe having a faster shutter speed could help, but that might be problematic in low-light situations.

I haven't tried - I'll search around and see if others have done this, and maybe play around with a mounting system with rubber bands or springs.


I had no idea that OpenALPR was a thing. That's a big help to me and my long-stalled AI driveway alarm project. Thanks!