As a proof of concept, RemoteLab will use a handful of sensors and actuators, mainly those available on the Cypress prototyping kit. It will also use a webcam that provides live footage and therefore generates a large amount of data. The board will connect to a router over Wi-Fi. On the cloud side, AWS IoT Core will manage the Cypress unit and handle the data coming from its sensors, while AWS IoT Camera Connector will manage the storage and playback of footage from the webcam. AWS IoT Camera Connector uses AWS IoT Core to manage the device and sends the footage to Amazon Kinesis Video Streams, which stores, indexes, and plays it back through its API. AWS IoT Core will be set up to forward the PSoC sensor data to the ELK stack (Elasticsearch, Logstash, Kibana) for storage, retrieval, and visualisation. We will also produce a demo API for sending control signals to the actuators and instrumentation connected to the PSoC.

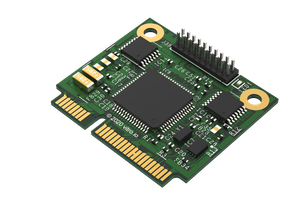
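To make the data path above more concrete, here is a minimal sketch of the MQTT messages the system could exchange. The topic prefix `remotelab`, the `<device_id>/telemetry` and `<device_id>/commands` topics, and every JSON field name are hypothetical choices for illustration. AWS does not define any of them. The real PSoC firmware would most likely be written in C. Python is used here only to pin down the message contract that three parties would need to agree on: the board, the demo control API, and an AWS IoT Core rule. That rule could, for example, use `SELECT * FROM 'remotelab/+/telemetry'` to forward readings towards the ELK stack.

```python
"""Hypothetical message layout for RemoteLab's MQTT traffic (illustrative only)."""
import json
import time
from typing import Dict, Optional, Tuple

TOPIC_PREFIX = "remotelab"  # assumed prefix, not defined by AWS


def telemetry_message(device_id: str, readings: Dict[str, float],
                      timestamp: Optional[float] = None) -> Tuple[str, str]:
    """Build the topic and JSON payload a PSoC board could publish for sensor data."""
    payload = {
        "device_id": device_id,
        "timestamp": time.time() if timestamp is None else timestamp,
        "readings": readings,  # e.g. {"temperature_c": 23.5}
    }
    return f"{TOPIC_PREFIX}/{device_id}/telemetry", json.dumps(payload)


def command_message(device_id: str, actuator: str, value: float) -> Tuple[str, str]:
    """Build the topic and JSON payload the demo control API could publish to a board."""
    payload = {"actuator": actuator, "value": value}
    return f"{TOPIC_PREFIX}/{device_id}/commands", json.dumps(payload)


def parse_command(raw: str) -> Tuple[str, float]:
    """Decode a command on the receiving side, rejecting malformed messages."""
    data = json.loads(raw)
    if not isinstance(data.get("actuator"), str):
        raise ValueError("missing or invalid 'actuator'")
    value = data.get("value")
    # bool is a subclass of int in Python, so exclude it explicitly
    if isinstance(value, bool) or not isinstance(value, (int, float)):
        raise ValueError("missing or invalid 'value'")
    return data["actuator"], float(value)


if __name__ == "__main__":
    topic, body = telemetry_message("psoc-01", {"temperature_c": 23.5}, timestamp=0)
    print(topic)  # remotelab/psoc-01/telemetry
    print(body)
    topic, body = command_message("psoc-01", "led", 1)
    print(topic, parse_command(body))
```

Putting the device ID in the topic, and not only in the payload, lets IoT rules and subscriptions filter per board without parsing the message body.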

For this project, we will use the Cypress PSoC 6 WiFi-BT Prototyping Kit, since its on-board sensors mean fewer additional components are needed for a proof of concept. In a more production-ready implementation of the system, either board/kit would be suitable. If an additional kit could be provided, it would be interesting to use both and test the system with multiple PSoC boards to simulate a more realistic lab scenario.
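With a second kit, giving each board its own topics would let the cloud side address boards individually while still collecting all telemetry with one subscription. AWS IoT Core already performs MQTT topic-filter matching, where `+` matches one level and `#` matches the remaining levels. The sketch below reimplements that rule only to show how the hypothetical `remotelab/<device_id>/...` scheme keeps the boards separate. The board IDs are made up.

```python
"""Sketch of MQTT topic-filter matching for a multi-board RemoteLab setup."""


def topic_matches(topic_filter: str, topic: str) -> bool:
    """Return True if an MQTT topic name matches a topic filter ('+' and '#' wildcards)."""
    f_levels = topic_filter.split("/")
    t_levels = topic.split("/")
    # Per the MQTT spec, filters starting with a wildcard do not match topics
    # beginning with '$' (such as AWS IoT reserved topics under $aws/...).
    if topic.startswith("$") and f_levels[0] in ("+", "#"):
        return False
    for i, level in enumerate(f_levels):
        if level == "#":
            # '#' is only valid as the last level; it matches the parent level
            # and everything below it. A misplaced '#' is treated as no match.
            return i == len(f_levels) - 1
        if i >= len(t_levels):
            return False  # filter is longer than the topic
        if level != "+" and level != t_levels[i]:
            return False  # literal level mismatch; '+' accepts any single level
    return len(f_levels) == len(t_levels)


if __name__ == "__main__":
    # Hypothetical board IDs: the cloud side subscribes once for all telemetry...
    for board in ("psoc-01", "psoc-02"):
        topic = f"remotelab/{board}/telemetry"
        print(topic, topic_matches("remotelab/+/telemetry", topic))  # True for both
    # ...while each board only receives its own commands.
    print(topic_matches("remotelab/psoc-01/commands", "remotelab/psoc-02/commands"))  # False
```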

My experience with embedded systems is limited, as I've only worked on a few projects at university, but I do work with AWS cloud infrastructure for a living.

David Goodman

Peter

Noah
