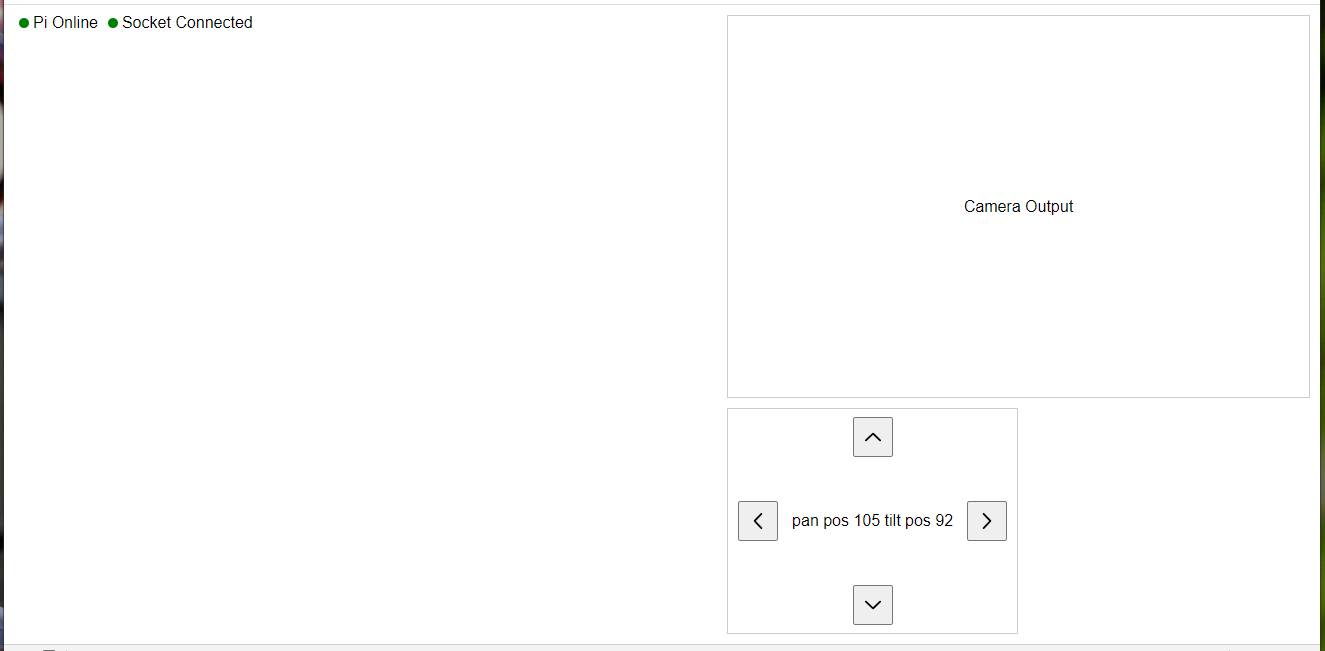

So... the steppers were not a good idea after all, so I went with servos. Also, after seeing the servos almost strip themselves on boot and move randomly (directly connected to GPIO, no pull-down/driver), I went with an Arduino. Thankfully, after following some tutorials, I got the I2C to work and it is clean! It's not like that jank software serial I used in the first legged robot I made, where I couldn't even guarantee a single character would arrive correctly.

Anyway, I made the interface below. It's pretty sparse in features but man! I made it, the "whole stack" from the web interface down to the servo. My methods are hacky for sure. I used ReactJS for the interface and Node for the socket server, which uses a system call to execute a Python script with CLI args for the servo details. The Arduino then picks that up over I2C to move the servos. This interface is mostly for manual calibration/testing. In the end OpenCV will drive the whole thing, even the wheels.
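To make the Python step of that chain concrete, here is a rough sketch of what a CLI-args-to-I2C script could look like. This is not the code from the repo: the address `0x08`, the servo names `pan`/`tilt`, the `p_90\n` message format and the function names are all assumptions made up for illustration. The only real library call is `write_i2c_block_data` from the `smbus2` package, and it only runs if you call `send()` on a Pi with the bus enabled.

```python
import argparse

ARDUINO_ADDR = 0x08  # hypothetical I2C address of the Arduino
MAX_LEN = 32         # keep messages short; assumed limit of the receive buffer


def parse_args(argv):
    """Read the servo name and target angle from CLI args."""
    parser = argparse.ArgumentParser(description="Send one servo command over I2C")
    parser.add_argument("servo", choices=["pan", "tilt"])  # hypothetical names
    parser.add_argument("angle", type=int)
    args = parser.parse_args(argv)
    if not 0 <= args.angle <= 180:
        parser.error("angle must be between 0 and 180")
    return args


def build_payload(servo, angle):
    """Encode e.g. ('pan', 90) as the ASCII bytes of 'p_90' plus a newline."""
    text = f"{servo[0]}_{angle}\n"
    payload = list(text.encode("ascii"))
    if len(payload) > MAX_LEN:
        raise ValueError("command too long for one I2C write")
    return payload


def send(payload, bus_number=1):
    """Write the payload to the Arduino (needs smbus2 and real hardware)."""
    from smbus2 import SMBus  # imported here so the rest works without it

    with SMBus(bus_number) as bus:
        # The first byte travels in the "register" slot, the rest as data,
        # so the Arduino sees the bytes back to back in order.
        bus.write_i2c_block_data(ARDUINO_ADDR, payload[0], payload[1:])
```

The Node side would then shell out to something like `python servo.py pan 90` (hypothetical file name), and the script would call `send(build_payload(args.servo, args.angle))`.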

This I2C communication is so crisp. The software serial I used before was awful, granted that was between an ESP8266-01 and the Nano... but it looks like you can set up the ESP as a master to the Pi for I2C. I had problems with hardware serial on the Nano at the time.

This web interface isn't much so far but the little status lights are cool because they're actually from the Pi responding.

I had some major problems with the simple I2C wire byte read to string and trying to concat/parse the commands for the servo/position... I found some way to make it work, but it was not how I was initially going to write it.
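For anyone fighting the same thing, the general idea of the byte-to-string step is: collect bytes one at a time until a terminator shows up, then split the finished string into servo and position. The sketch below models that logic in Python just to show the shape of it. The real receiver is Arduino code and this is not what is in the repo; the `p_90\n` message format and the `CommandParser` name are assumptions for illustration.

```python
class CommandParser:
    """Collects single bytes and yields (servo, position) once a line ends."""

    def __init__(self):
        self._buf = []

    def feed(self, byte):
        """Take one received byte; return a parsed command or None."""
        ch = chr(byte)
        if ch != "\n":
            self._buf.append(ch)  # still mid-message, keep collecting
            return None

        line = "".join(self._buf)
        self._buf.clear()  # always reset so one bad message can't poison the next

        servo, sep, pos = line.partition("_")
        if not sep or not servo or not (pos.isascii() and pos.isdigit()):
            return None  # malformed message, drop it
        return servo, int(pos)


parser = CommandParser()
results = [parser.feed(b) for b in b"p_90\n"]
print(results[-1])
```

Clearing the buffer on every terminator, even for junk, is the part that tends to matter: a half-received message then costs one command instead of corrupting everything after it.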

There's a repo now. It has the functionality shown in the video.

https://github.com/jdc-cunningham/sensor-fusion-clu

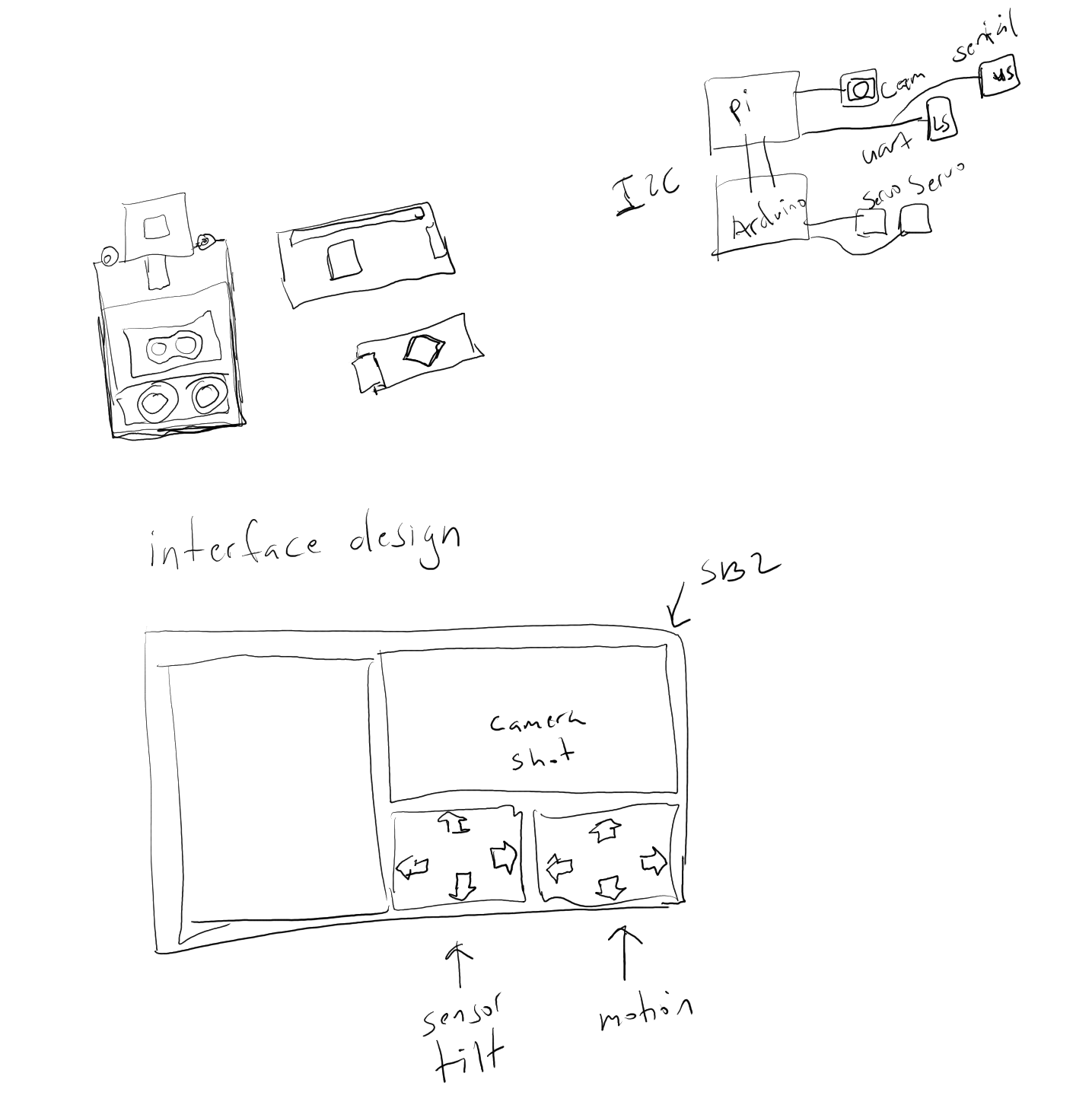

It would be hard for me now, but I just have these thoughts. Take the pan/tilt system, for example: say it was big enough, or the components were small enough, that you could run it "isolated" or "free floating". The bearings/joints would be how you conduct power through, but the stuff happens in the floating gimballed unit. And it would have an IMU so you could really know that the plane of the sensor board is flat (vertical/pan zeroed). I know... overkill, just put hall sensors on the axes or don't even bother... just barrage it with a bunch of data/training/lots of images.

The other thought is for the Lidar. Since it's single point, either do some kind of "phased-array" thing (look at me, I can read) or idk... like a spinning "diamond facet" thing that goes towards the beam and reflects it in different directions... that's hard. I don't know... I'm burnt, pulling stuff from nothing.

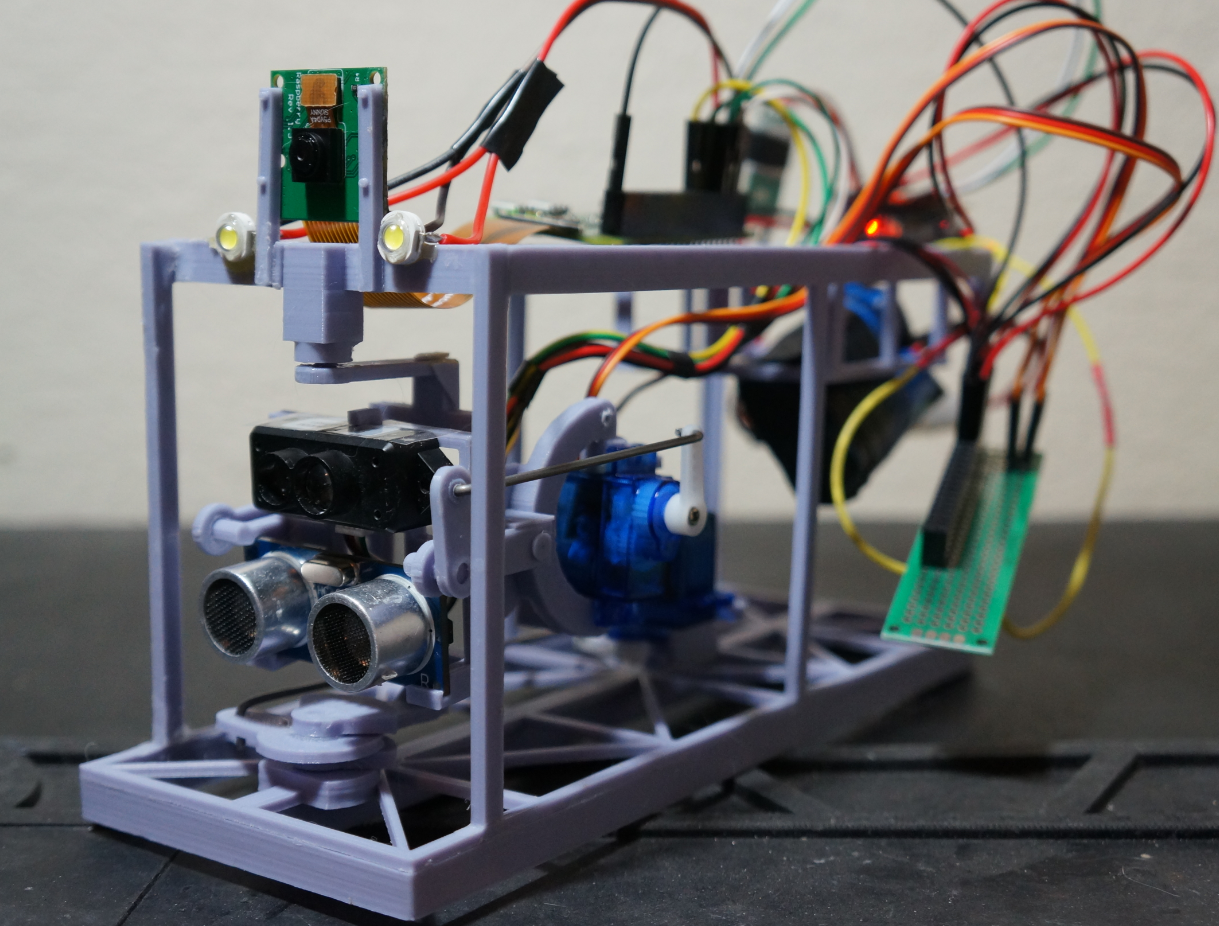

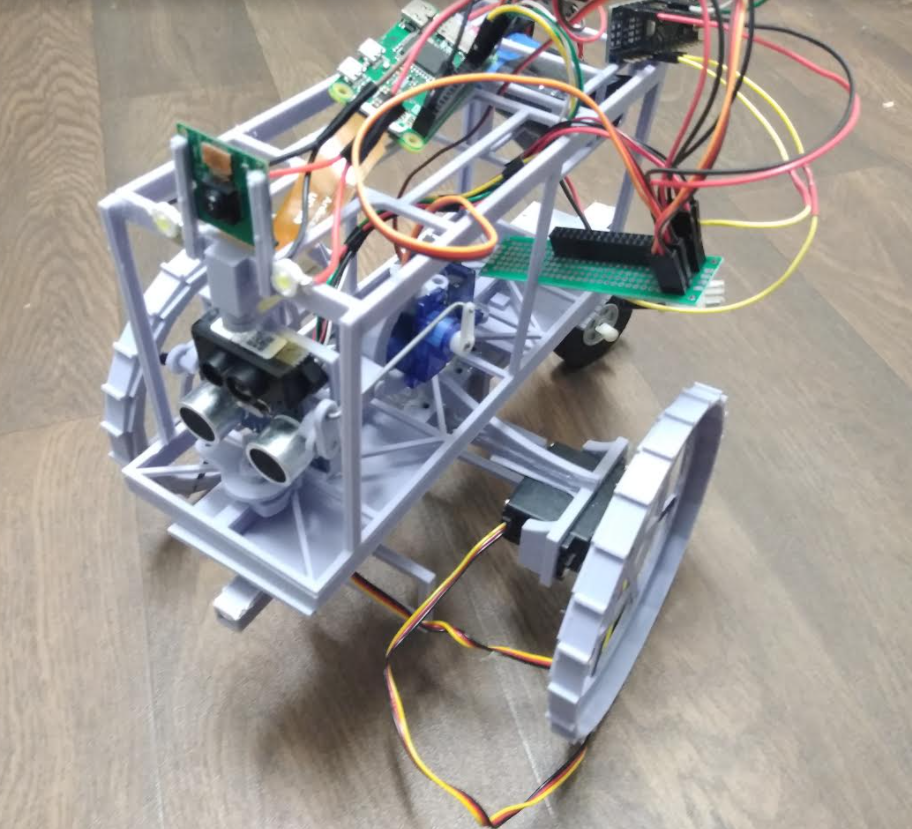

I am getting more and more driven to make significant progress on this, now it's like in one piece and the basic "control systems" are in place to expand on.

A slip ring is what this thing could use: have most of the sensors (e.g. ToF sensors/IMU/Arduino/battery) in the gimballed section, then transmit the data over what I think would be a minimum of 4-5 wires (3 for I2C, plus power). Then you'd have this theoretically low-friction thing that has no bias to turn either way due to the drag from the ToF sensor wires.

In the end this is what will be moving around. I still have to interface with an IMU for the first time, that'll be interesting. I've watched some videos in the past (about the integration/drift and whatnot).

I realize you could use one of those Jetson things and just train something... run it off camera but the problem is that's not always guaranteed to work... I want something that actually knows physically how big it is/where it is... so this project also involves 3D collision map checking... it would be cool to eventually reach you know a 3D simulation plot/real time telemetry output.

But I am aware the Pi Zero kind of sucks computation-wise although I like its size.

Bonus

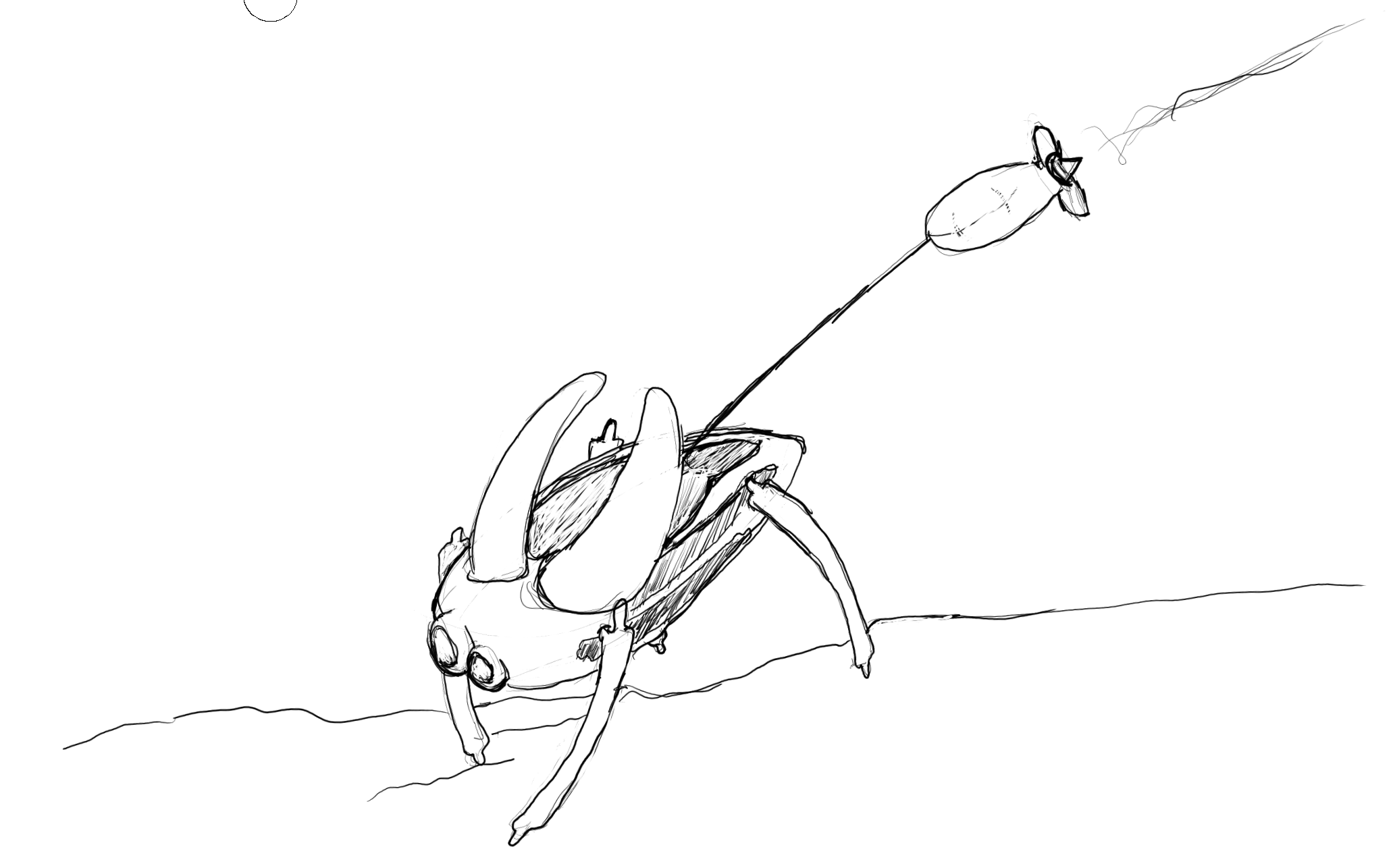

This is a robot I designed like 8 years ago or more. At that time I was not into software and could not have built a robot if I wanted to. I was into model airplanes then. Crazy too, like if I had stuck with that, with drone development and the explosion of video piloting, I possibly could have been in a business doing that. I actually tried but failed (bad business acumen).

Anyway, it's an underwater drone that crawls around in rivers. The top surfaces are like spoilers that can push the robot down and it can deploy a buoy with a propeller on it to recharge. Communication would be by a main transmitter near the shore of the river/robots would surface to talk to it. Idea was the robots could carry some kind of patch/sensor to detect pollution in water... of course overblown solution. I just have this desire to make these robots that are on their own in the wild and hopefully can last months/years.

It's designed like a stone too where you can throw it to deploy it.

Jacob David C Cunningham
