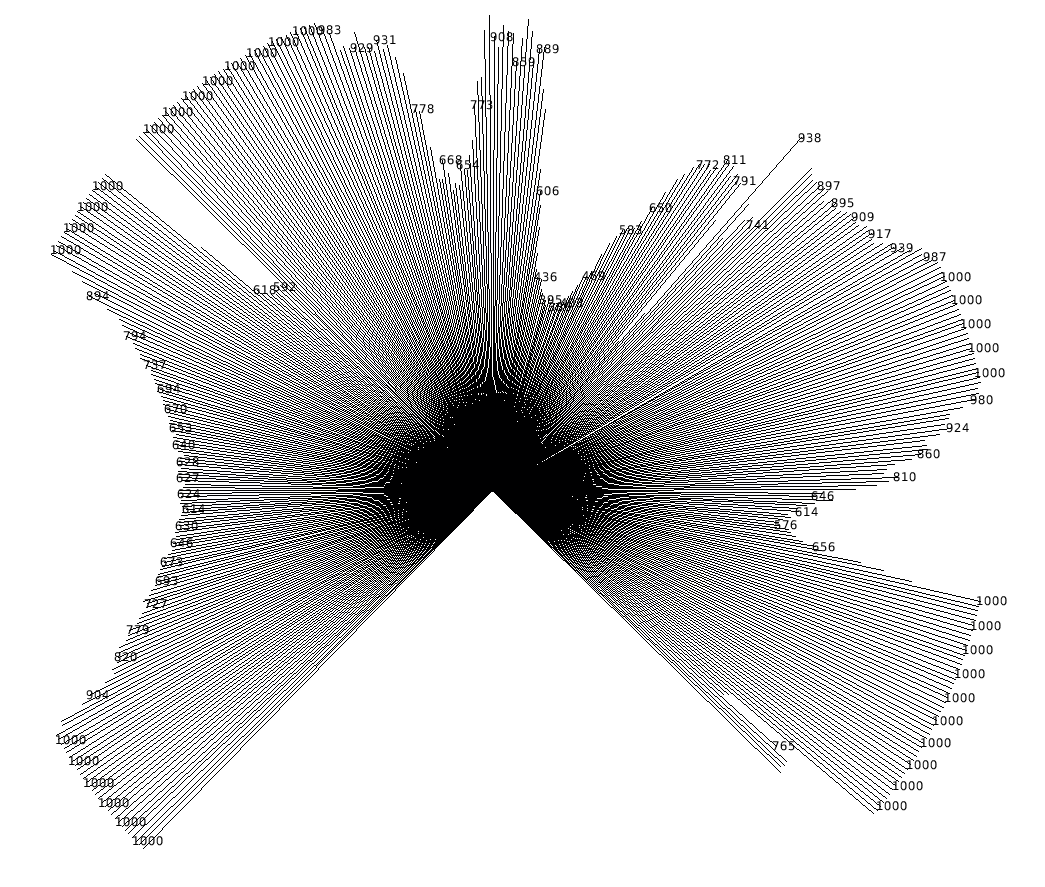

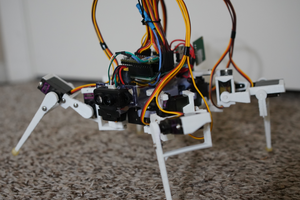

Roz is currently built with 16 AX-12 servos, a bunch of Bioloid brackets, a lot of 3D-printed parts, and a mix of off-the-shelf and custom-made hardware and components. For sensing, Roz has five VL53L1X laser time-of-flight range-finder sensors, an MPU-9250-based IMU, and a downward-facing optical flow sensor. Roz also has a camera in the nose, hooked up to a Raspberry Pi Zero W board.

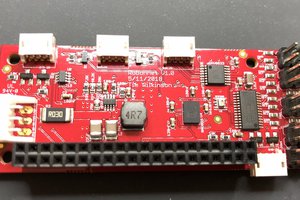

The sensors (except the camera) are controlled by an ARM Cortex-M4 board running MicroPython. This board is set up as a Bioloid device on the servo bus, so I can access all the sensors over the bus from the Raspberry Pi. Roz also has a custom power management board with its own ARM Cortex-M4 chip, also running MicroPython. It sits on the bus as well, and handles switching seamlessly between wall power and the 3S LiPo battery.
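As a rough illustration of what "a Bioloid device on the servo bus" implies: AX-12 servos speak ROBOTIS Dynamixel Protocol 1.0, so a sensor board that emulates a device on the same bus can answer the same READ_DATA requests the Pi sends to the servos. The sketch below, assuming the sensor board follows the standard Protocol 1.0 packet format, builds a request and parses a status reply. The device ID `100` and register address `0x1A` for the five range readings are hypothetical, not Roz's actual register map, and the serial I/O on the half-duplex bus is left out.

```python
# Dynamixel Protocol 1.0 instruction codes (the protocol AX-12 servos use)
PING = 0x01
READ_DATA = 0x02

# Hypothetical values for illustration only; not Roz's real register map
SENSOR_ID = 100
RANGE_REG = 0x1A


def checksum(body):
    """Bitwise NOT of the low byte of the sum of ID, LENGTH, INSTRUCTION/ERROR and params."""
    return (~sum(body)) & 0xFF


def make_packet(dev_id, instruction, params=()):
    """Build an instruction packet: FF FF ID LEN INSTR PARAMS... CHK."""
    length = len(params) + 2  # params + instruction byte + checksum byte
    body = [dev_id, length, instruction, *params]
    return bytes([0xFF, 0xFF, *body, checksum(body)])


def parse_status(packet):
    """Validate a status packet (FF FF ID LEN ERR PARAMS... CHK); return (id, error, params)."""
    if len(packet) < 6 or packet[0] != 0xFF or packet[1] != 0xFF:
        raise ValueError("bad header")
    dev_id, length = packet[2], packet[3]
    if len(packet) != length + 4:  # 2 header bytes + ID + LEN + LEN more bytes
        raise ValueError("bad length")
    body = list(packet[2:-1])  # ID, LEN, ERR and params are all covered by the checksum
    if checksum(body) != packet[-1]:
        raise ValueError("bad checksum")
    return dev_id, packet[4], bytes(packet[5:-1])


def decode_u16_le(data):
    """Dynamixel registers store 16-bit values low byte first."""
    return [data[i] | (data[i + 1] << 8) for i in range(0, len(data) - 1, 2)]


# Ask the (hypothetical) sensor device for five 16-bit range readings (10 bytes)
request = make_packet(SENSOR_ID, READ_DATA, [RANGE_REG, 10])
```

The checksum rule is the same one the servos use, which is why a MicroPython board can share the bus with them: the Pi does not need to know whether it is talking to an AX-12 or to the sensor board.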

You can see some videos of Roz (including much earlier ones) on my YouTube channel.

Jon Hylands
