The reduced power requirements of ultra-low power systems enable the use of energy harvesting to extend battery life, potentially indefinitely. This leads to the question: how does one design an energy harvesting system? The first step is to understand the device’s average power consumption. For indefinite operation, this is the minimum average power that the energy harvesting subsystem must generate. For intermittent sources such as solar panels, the system should also be designed with enough energy storage that it can run for a significant time without any power being generated. In many parts of the world it is not uncommon to have two or more cloudy weeks in a row, which could reduce the power generated by 5X or more. The battery should be sized to survive events such as this.
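The sizing logic above can be sketched in a few lines. This is a rough estimate rather than a design tool: the function names, the 1 mW load, the 2 mW harvest figure, and the 3.7 V nominal cell voltage are all assumptions chosen for illustration, and the model ignores battery self-discharge, aging, and converter losses.

```python
def required_buffer_wh(load_w, harvest_w, derate, outage_days):
    """Energy (Wh) the battery must supply during a stretch of reduced harvest."""
    degraded_harvest_w = harvest_w / derate            # e.g. 5X less during cloudy weeks
    deficit_w = max(0.0, load_w - degraded_harvest_w)  # shortfall the battery must cover
    return deficit_w * 24.0 * outage_days


def wh_to_mah(energy_wh, nominal_v=3.7):
    """Convert Wh to mAh at an assumed nominal cell voltage."""
    return energy_wh / nominal_v * 1000.0


# Hypothetical device: 1 mW average load, 2 mW average harvest in normal weather,
# harvest reduced 5X for 14 cloudy days in a row.
buffer_wh = required_buffer_wh(load_w=1e-3, harvest_w=2e-3, derate=5.0, outage_days=14)
print(f"{buffer_wh * 1000:.1f} mWh, about {wh_to_mah(buffer_wh):.0f} mAh at 3.7 V")
```

In this made-up case the battery has to cover roughly 200 mWh, so any usable capacity beyond that is margin. If the degraded harvest still exceeds the load, the function returns zero and the buffer is only needed for nights.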

Another mantra to understand for energy harvesting is that efficiency does not matter. All that matters is exceeding your average power consumption and having a large enough energy buffer. The power from energy harvesting is free, so don’t feel like you need to extract it all. I will show a number of cases in which intentionally reducing efficiency can reduce cost, simplify the system, and make it more resilient to long periods without full harvesting power available.

Solar Power

The simplest, lowest cost, and most reliable form of energy harvesting is the use of solar photovoltaic cells, especially if the system is used outdoors. If indoors, solar power can be used, but the available power is far, far lower. However, even outdoors, sizing an appropriate solar panel has certain pitfalls. Solar panels are typically rated with a peak current, peak voltage, and peak power. This rating assumes the panel is perpendicular to the sun, under direct sunlight. If shaded or angled relative to the sun, the peak power will be lower.

The average power generated can be calculated using a capacity factor. Usually used to describe grid-scale power plants, the capacity factor is the actual energy produced over a certain time divided by the energy that would be produced if the plant ran at full power during the entire time. For example, if a coal power plant ran for 4 days at full power, then was down for 1 day for maintenance, it would have a capacity factor of 0.8. Industrial solar photovoltaic plants have capacity factors typically ranging from 0.12 (in places like Michigan or Massachusetts) to 0.33 (in the deserts of southern California).

There are many online tools for estimating available solar power in your area, such as the database published by NREL in the USA. It can be helpful to find an installation near you and use its performance as an upper bound on what you can expect. These industrial-scale installations are well maintained, not shaded, and carefully oriented to maximize power, so your device will likely do worse. It is not reliable to "look outside" and judge whether it is typically sunny. The human eye is a very poor tool for estimating the potential power available to solar panels. With a roughly logarithmic response, the human eye is good at seeing in dark places, but fails to see that the power available in shade can be an order of magnitude or more lower than in direct sunlight.

Depending on the solar panel, partial shading can be just as detrimental as full shading. If possible, the temperature of the solar panel should be kept down, since solar panels are noticeably more efficient at low temperatures.
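The capacity factor arithmetic is simple enough to sketch. The function names are my own, and applying grid-scale capacity factors to a small 550mW panel is only an optimistic upper bound, for the reasons given above.

```python
def capacity_factor(energy_produced_wh, rated_power_w, hours):
    """Actual energy divided by the energy at full rated power over the same period."""
    return energy_produced_wh / (rated_power_w * hours)


def average_power_w(rated_power_w, cf):
    """Long-run average power implied by a rating and a capacity factor."""
    return rated_power_w * cf


# Coal plant example: 4 days at full power, 1 day down (rated power normalized to 1 W).
print(capacity_factor(energy_produced_wh=4 * 24 * 1.0, rated_power_w=1.0, hours=5 * 24))

# A 550 mW peak panel at the two capacity factors quoted for industrial plants.
for cf in (0.12, 0.33):
    print(f"CF {cf}: {average_power_w(0.55, cf) * 1000:.1f} mW average")
```

The second loop shows why the peak rating is misleading: the long-run average is a small fraction of the number printed on the panel, and a shaded or poorly oriented device will land below even that.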

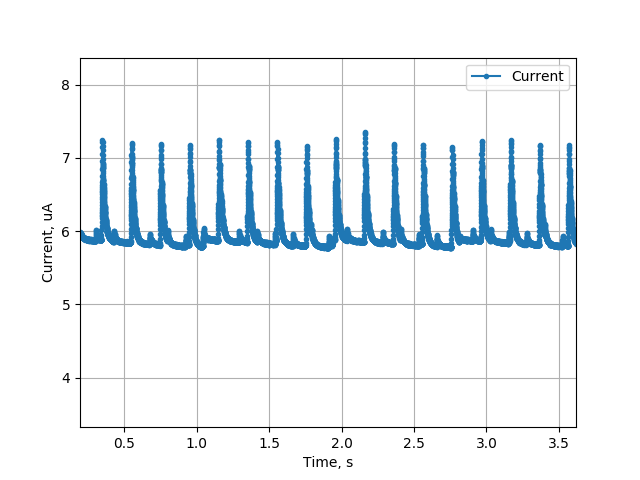

Just because a solar panel can provide a certain amount of power does not mean that all of it will be used to charge your battery. There are a few pitfalls to be aware of that can cause a drastic mismatch between the peak power production and the charging power. The first is the amount of power used by the charger itself. This is usually only a concern for very low power systems and small solar panels. Consider my SOL project. In deep sleep, it requires only ~6.2uA on average, as shown by the profile below. While in deep sleep, it charges its battery using the TP4054 linear lithium-ion charger. Because it is a linear charger, roughly 30-40% of any input power will be burnt off as heat within the charger. Furthermore, the TP4054 requires roughly 150uA to operate its internal circuitry during charging, which is more than 24X the current drawn by the rest of the system. Because this power comes from the solar panel, though, it does not affect battery life; it only limits the maximum charge rate.
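A quick back-of-the-envelope check of those numbers, as a sketch. It assumes an idealized linear charger whose input current equals its charge current, a 5.5V input, and a 3.7V battery; those two voltages and the function names are my assumptions for illustration, not measurements from SOL.

```python
def linear_loss_fraction(v_in, v_batt):
    """Fraction of input power dissipated as heat in an idealized linear charger."""
    return 1.0 - v_batt / v_in


def overhead_ratio(charger_quiescent_a, system_sleep_a):
    """How many times larger the charger's own draw is than the sleeping system's."""
    return charger_quiescent_a / system_sleep_a


def net_charge_current_a(panel_current_a, charger_quiescent_a):
    """Current left for the battery once the charger has powered itself."""
    return max(0.0, panel_current_a - charger_quiescent_a)


print(f"heat loss: {linear_loss_fraction(5.5, 3.7):.0%}")           # assumed voltages
print(f"charger vs sleep current: {overhead_ratio(150e-6, 6.2e-6):.1f}X")
print(f"net at 1 mA of panel current: {net_charge_current_a(1e-3, 150e-6) * 1e6:.0f} uA")
```

The last line is the one that matters in dim light: when the panel can only deliver a few hundred microamps, a large share of it goes to running the charger rather than into the battery.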

The other mismatch between maximum produced power and charging power is due to impedance matching, or the lack thereof. Consider again my SOL project. While the solar panel is rated for a maximum of 550mW at 5.5V and 100mA, I have configured the charge current to be a much lower 15mA. This limits the maximum power at which SOL can ever charge to ~60mW. However, there is a very good reason for this. If a linear charge controller attempts to charge at a higher rate than the solar panel can provide, the voltage on the solar panel can droop. If it droops below the battery voltage (plus some offset required by the charger), the system will not be able to charge at all. Therefore, this limited charge current ensures that the system can charge much more often, making it more resilient to cloudy days.
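The two conditions in that paragraph can be written down as a small sketch. The 4.2V battery voltage and the 0.1V headroom are placeholder assumptions (the headroom is not a TP4054 datasheet value), and the function names are hypothetical.

```python
def max_charge_power_w(charge_current_a, v_batt):
    """Power delivered into the battery at the programmed charge current."""
    return charge_current_a * v_batt


def can_charge(v_panel_loaded, v_batt, headroom_v):
    """A linear charger only works while the loaded panel voltage stays above
    the battery voltage plus whatever offset the charger needs."""
    return v_panel_loaded >= v_batt + headroom_v


# 15 mA into a nearly full cell (4.2 V assumed) is about 63 mW, i.e. the ~60 mW above.
print(f"{max_charge_power_w(0.015, 4.2) * 1000:.0f} mW")

# Placeholder voltages: a lightly loaded panel holds up, an overloaded one droops.
print(can_charge(v_panel_loaded=5.0, v_batt=4.0, headroom_v=0.1))
print(can_charge(v_panel_loaded=3.9, v_batt=4.0, headroom_v=0.1))
```

Keeping the programmed current low keeps the panel on the first branch of `can_charge` in weaker light, which is the trade being made: less peak power in exchange for more hours of charging.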

An improved approach, albeit typically a more expensive one, is to use a Maximum Power Point Tracking (MPPT) charge controller. These are switching charge controllers that perform impedance matching at all times in order to maximize the charging efficiency from a solar panel. They are therefore not set to a fixed charging current; instead, the charging current varies throughout the day based on available power. Parts such as the ADP5090 and MAX20361 offer extremely low quiescent current, below 1uA. Others require more power to operate, such as the SPV1040, which requires 60uA.

When designing a system that uses solar power for energy harvesting, consider optimizations that can reduce power consumption during times when the panel is less effective or ineffective. For example, my project SOL is a pyranometer, so measurements at night or while covered with snow are not useful. Therefore, the sleep time between samples changes adaptively depending on the available sunlight, and is extended significantly at night to reduce power consumption.
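One way such an adaptive schedule could look is sketched below. This is not SOL's actual firmware: the function name, the thresholds, and the intervals are all hypothetical values picked for illustration.

```python
def sleep_interval_s(irradiance_w_m2,
                     day_interval_s=60,
                     night_interval_s=1800,
                     dark_threshold_w_m2=5.0,
                     bright_threshold_w_m2=200.0):
    """Pick the sleep time between samples from the last irradiance reading."""
    if irradiance_w_m2 <= dark_threshold_w_m2:
        return night_interval_s   # night or snow cover: samples are not useful
    if irradiance_w_m2 >= bright_threshold_w_m2:
        return day_interval_s     # plenty of light: sample at the full rate
    # In between, shorten the interval linearly as the light increases.
    span = bright_threshold_w_m2 - dark_threshold_w_m2
    fraction = (irradiance_w_m2 - dark_threshold_w_m2) / span
    return round(night_interval_s - fraction * (night_interval_s - day_interval_s))


for reading in (0.0, 50.0, 800.0):
    print(reading, sleep_interval_s(reading))
```

Because the sensor already measures sunlight, the scheduling input comes for free; a device without a light sensor could use panel voltage or time of day instead.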

A solar powered system will often be installed outdoors. If so, be aware of the limited temperature range at which lithium ion batteries can be safely charged. It will vary somewhat by the specific battery, but 0-45C is a typical range of acceptable charge temperature. A well designed system should have temperature measurement and charger disable capability. It should also be designed for either:

- Low enough power that there is no need to charge during peak power times

- Good thermal management ensuring it can charge more often
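The charger disable logic can be as small as the following sketch. The 0-45C window comes from the typical range above; the 2C hysteresis band and the function name are my own assumptions, added so the charger does not chatter on and off near a limit. Check your specific battery's datasheet for the real limits.

```python
def charging_allowed(temp_c, currently_enabled,
                     t_min_c=0.0, t_max_c=45.0, hysteresis_c=2.0):
    """Decide whether the charger enable pin should be asserted."""
    if currently_enabled:
        # Keep charging until the cell actually leaves the safe window.
        return t_min_c <= temp_c <= t_max_c
    # Once disabled, wait until the cell is comfortably back inside the window.
    return (t_min_c + hysteresis_c) <= temp_c <= (t_max_c - hysteresis_c)


enabled = True
for temp_c in (30.0, 44.0, 46.0, 44.0, 42.0):
    enabled = charging_allowed(temp_c, enabled)
    print(f"{temp_c:.0f}C -> {'charge' if enabled else 'hold'}")
```

In firmware, the return value would drive the charger's enable or shutdown input each time the temperature is sampled.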

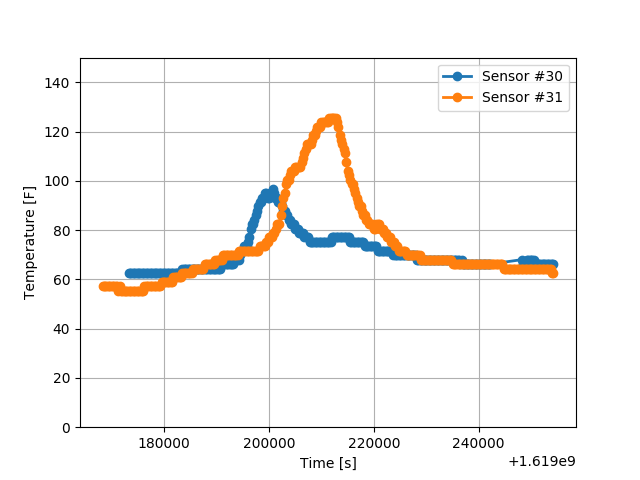

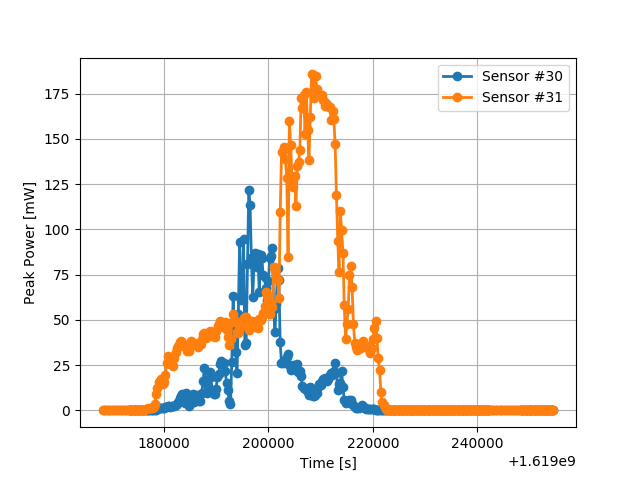

You may be surprised at how easy it is for a device to exceed 45C in direct sunlight, even if the ambient temperature is far lower. Worse still, a high temperature correlates with significant solar power being available, so the system has to disable charging during the highest power times. Consider the data shown here. This is the internal temperature and available solar power for two SOL devices. Both are set up indoors, in two different windows in different parts of Atlanta, GA in April. Although the ambient temperature for both is controlled, Sensor 31 easily exceeded 45C (113F) during the highest power portion of the day. As is clear from the data, this was a partly cloudy day, it wasn’t even summer, and the ambient temperature was roughly room temperature since the devices were installed indoors. If they were installed outside, they would get significantly hotter.

If the solar powered system is meant to operate indoors, prepare for a much more significant challenge. The light intensity in an office can easily be 1,000X less than direct sunlight. Worse still, most solar panels are tuned to operate in sunlight’s spectrum and are quite inefficient under artificial lighting. There are specific solar cells (typically amorphous silicon cells) tuned to indoor lighting that you should consider, such as Panasonic’s AM-1819CA. This example can provide 7uA at 3V under 200 lux fluorescent lights, which is far, far less than an equivalently sized monocrystalline cell in direct sunlight. My indoor-solar powered Feather development board could kind-of operate indoors without sleeping, but with significant limitations (though to be fair that was more of a design challenge than a practical device).
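To put the indoor figure in perspective, here is a rough comparison against the ~6.2uA deep sleep current quoted earlier for SOL. It assumes a 3.7V battery, lights on around the clock, and lossless conversion, all of which flatter the indoor cell; the helper name is hypothetical.

```python
def power_uw(current_ua, voltage_v):
    """Power in microwatts from microamps and volts."""
    return current_ua * voltage_v


indoor_cell_uw = power_uw(7.0, 3.0)   # AM-1819CA figure at 200 lux
sleep_load_uw = power_uw(6.2, 3.7)    # SOL deep sleep, 3.7 V battery assumed

print(f"indoor cell: {indoor_cell_uw:.1f} uW")
print(f"sleep load:  {sleep_load_uw:.1f} uW")
print("harvest covers sleep load:", indoor_cell_uw >= sleep_load_uw)
```

Under these assumptions a single indoor cell at 200 lux does not quite cover even a very frugal sleep current, before any active time is counted. That is the scale of the indoor challenge.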

Thermoelectric Generation

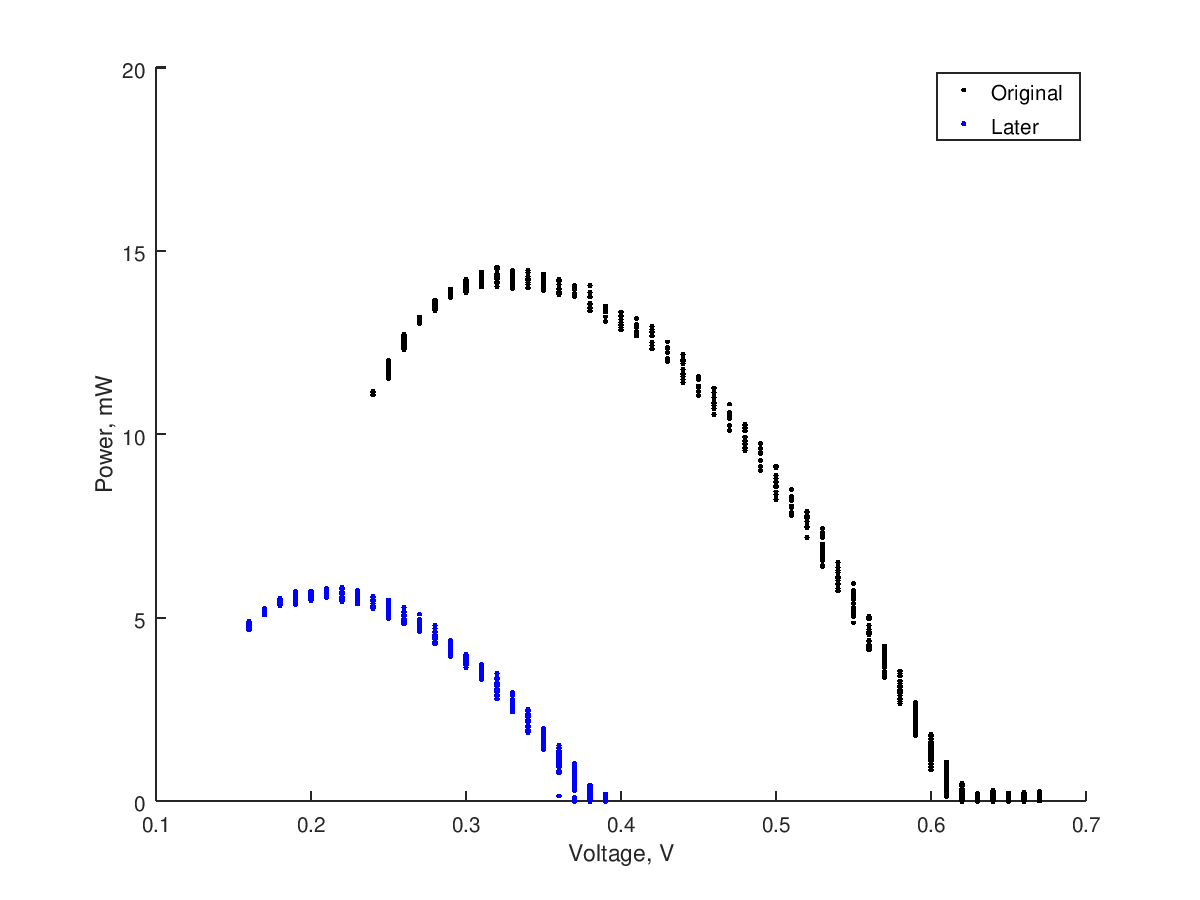

NASA's Curiosity and Perseverance do it, so why can’t you? Thermoelectric energy harvesting is tempting to many developers. A simple, low cost Peltier cooler can be operated in reverse, becoming a (very inefficient) Seebeck Thermoelectric Generator (TEG). I have done some experimentation with these, and the summary is that while it is possible to use them, you should use solar panels instead if at all possible. The available power at a roughly 40F temperature differential was noticeable, at ~15mW. However, the voltage was quite low, requiring some specialized electronics that can boost <0.5V up to more usable levels. Worse still, the available power dropped off quickly as the temperature differential equalized. With better thermal management, this could be improved. The image below shows the power vs. voltage curve for a TEG with ice on one side, and an aluminum heatsink in room temperature water on the other side. After a few minutes, the available power dropped dramatically as the temperature equalized.

Vibration/Piezoelectric Harvesting

Piezoelectric materials can produce a voltage in response to vibration or pressure, and are often used in sensors. In theory, they can also be used for vibration energy harvesting. Some devices and demos have been built that succeed in generating useful amounts of power from vibration. However, they often have abysmal efficiency and/or require very expensive electronics to do so. Although I’d love to see more projects take this on as a design challenge, you are better off using solar power if possible.

Wind Power

Wind power is a significant and growing portion of the world’s renewable grid-scale energy production. However, it seems to be a much less common source for small-scale energy harvesting. There are a few challenges:

- Unlike solar, thermoelectric, or vibration energy harvesting, it has moving parts, making waterproofing and reliability more challenging

- Wind power is much more efficient at large scale and higher altitude

- There aren’t any COTS small-scale, low-cost wind turbines (that I know of)

Nonetheless, I’d love to see more projects with wind power harvesting at the small scale, even if efficiency is poor.

Discussions

Good point, ultra low power design doesn't necessarily mean you need energy harvesting!

An important consideration to make is whether you actually need energy harvesting for your low power application. It might be much cheaper to select a larger battery or replace the batteries in your product every few months/years. In general, the product can be made smaller when it doesn't have to include the energy harvesting components.
