Related links

https://github.com/magokeanu/aina_robot

https://hub.docker.com/repository/docker/magokeanu/aina_ros_jetson

------------------------------------------------------------------------------------------------------------------------------------------

Aina is a humanoid robot with social capabilities. Here is why I think it is an interesting project.

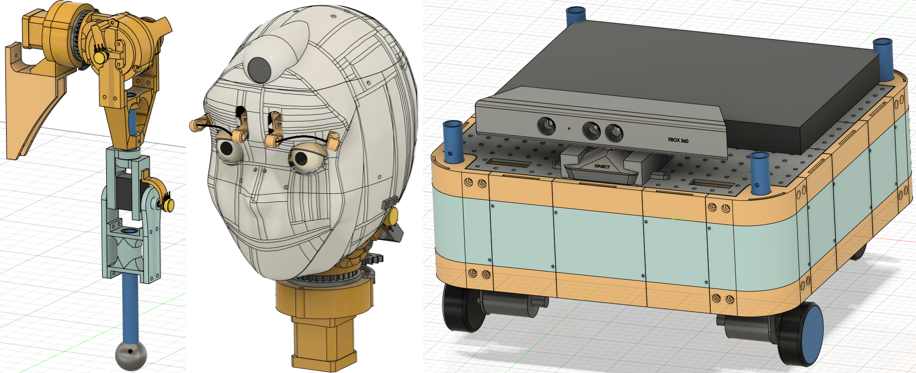

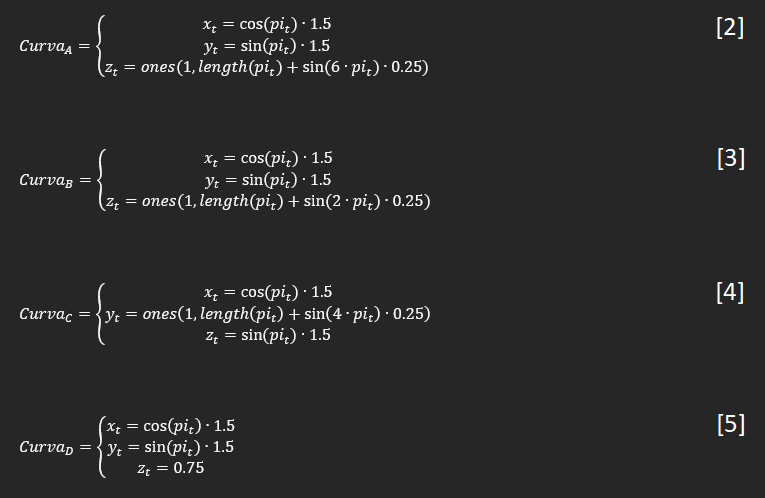

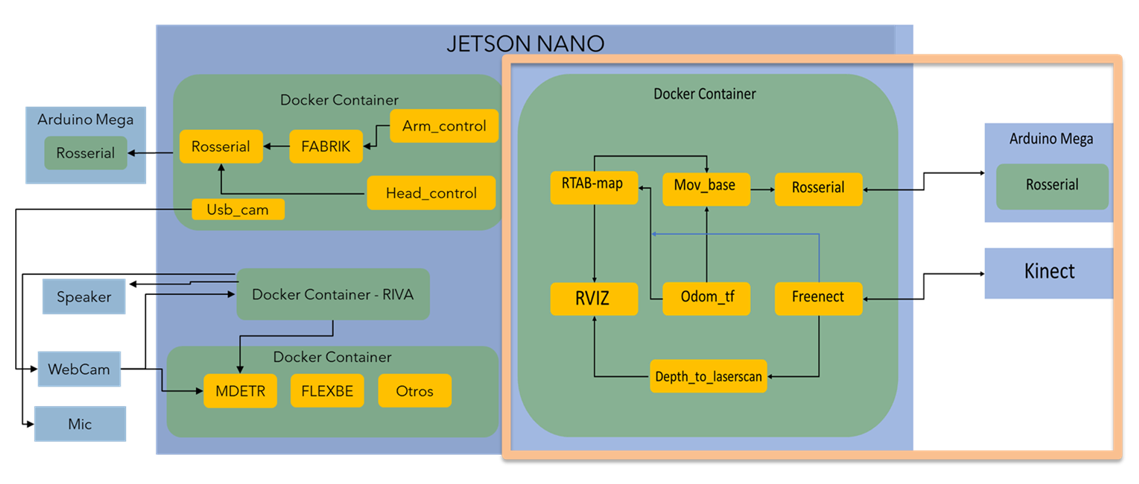

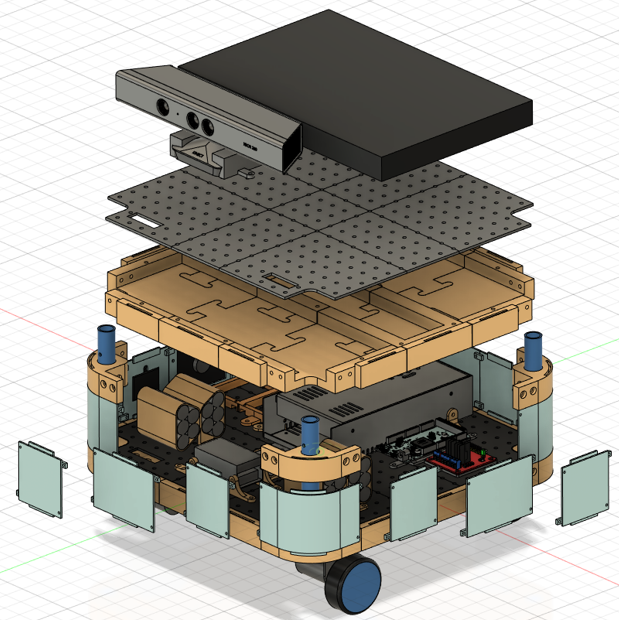

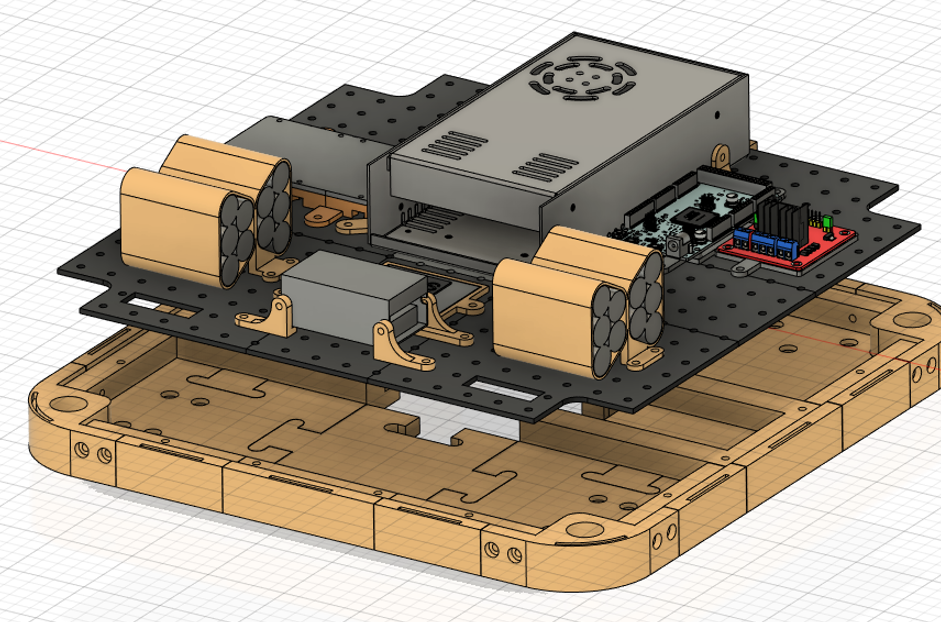

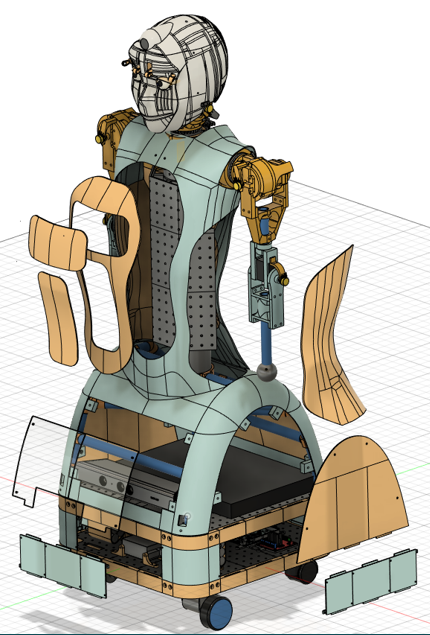

Humanoid robots are fascinating because, when they are well done, they can communicate with people in a meaningful way. That is the core of my project: effective social interaction. To achieve it I have been working around the concept of modularity (for now, in terms of design), which lets me divide the robot into three major subsystems whose characteristics are, or will be:

- Head: This part enables the emotional side of communication through facial expressions made with the eyes and eyebrows (and sometimes the neck). I did not add a mouth because I think the noise of it moving would be annoying when the robot talks.

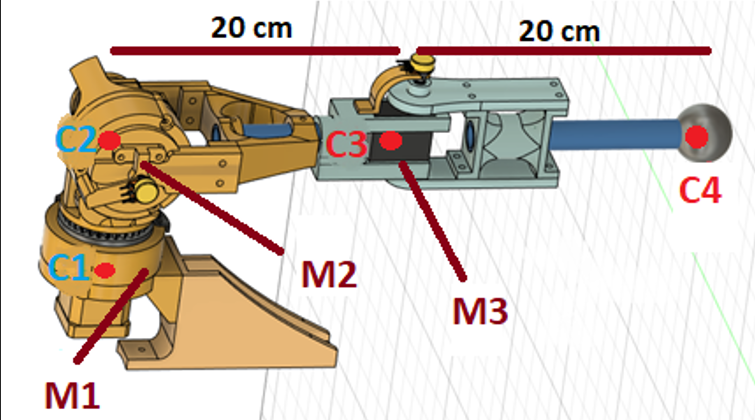

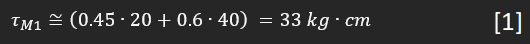

- Arms: Not much to say. The arms are designed in an anthropomorphic way. Due to financial constraints they cannot have much torque, but I can move them with some NEMA 14 motors and 3D-printed cycloidal gears to gesticulate and grasp very light things, so that is fine.

- Mobile base: This part enables the robot to map, localize itself, and move around the world through visual SLAM with a Kinect V1.

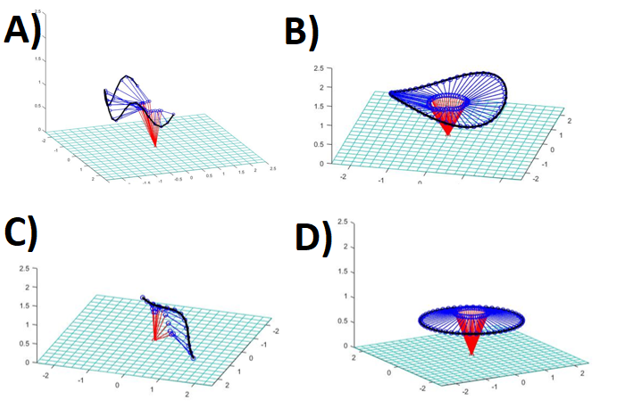

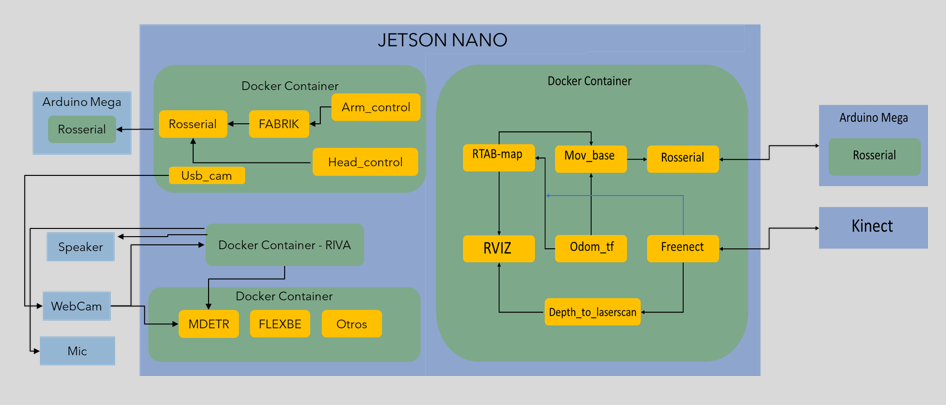

Divide and conquer also applies to the software side. Thanks to Docker I can group very different programs into containers (one for each subsystem), which lets me say "hey, I want to change the way the mobile base perceives the world while keeping everything else working". This is not only good for me: if you want to modify or just build one of the parts for your own project, you get not only the 3D parts but also the working software in a container, ready to run in minutes.

To interconnect all the programs, ROS Melodic containers are used, running on a Jetson Nano. This is important because the Jetson lets me run deep learning networks alongside robotics algorithms without their libraries and Python versions conflicting with each other.
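As a rough illustration of the one-container-per-subsystem idea (not the project's actual launch setup), the Python sketch below only builds the `docker run` commands for three containers that share one ROS master. The image name comes from the Docker Hub link above. The per-subsystem tags, the container names, and the use of host networking are assumptions made for this example, and a `roscore` is assumed to be already running on the Jetson at the default port.

```python
import shlex

# Image name taken from the Docker Hub link; the tags used below are hypothetical.
IMAGE = "magokeanu/aina_ros_jetson"
SUBSYSTEMS = ("head", "arms", "mobile_base")
# Default ROS 1 master address; assumes roscore already runs on the same machine.
ROS_MASTER_URI = "http://localhost:11311"


def docker_run_command(subsystem, image=IMAGE, master_uri=ROS_MASTER_URI):
    """Build the `docker run` argument list for one subsystem container."""
    if subsystem not in SUBSYSTEMS:
        raise ValueError(f"unknown subsystem: {subsystem}")
    return [
        "docker", "run", "--detach",
        "--name", f"aina_{subsystem}",             # hypothetical container name
        "--network", "host",                       # share the host network so nodes can reach the master
        "--env", f"ROS_MASTER_URI={master_uri}",   # every container points at the same ROS master
        f"{image}:{subsystem}",                    # hypothetical per-subsystem tag
    ]


def all_commands():
    """Return one command per subsystem, keyed by subsystem name."""
    return {name: docker_run_command(name) for name in SUBSYSTEMS}


if __name__ == "__main__":
    # Print the commands instead of executing them, so the sketch has no side effects.
    for cmd in all_commands().values():
        print(shlex.join(cmd))
```

Because each subsystem is its own container, swapping the mobile base software would, under these assumptions, mean stopping and replacing only `aina_mobile_base` while the head and arms containers keep running against the same ROS master.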

I really think this can be a good companion, not only because its design is oriented toward social interaction, but also because, being based on ROS and Docker, it is easy to add functionality such as connecting it to Google Calendar or to a smart home to manage calendars, lights, and so on. The hardware is also easy to modify.

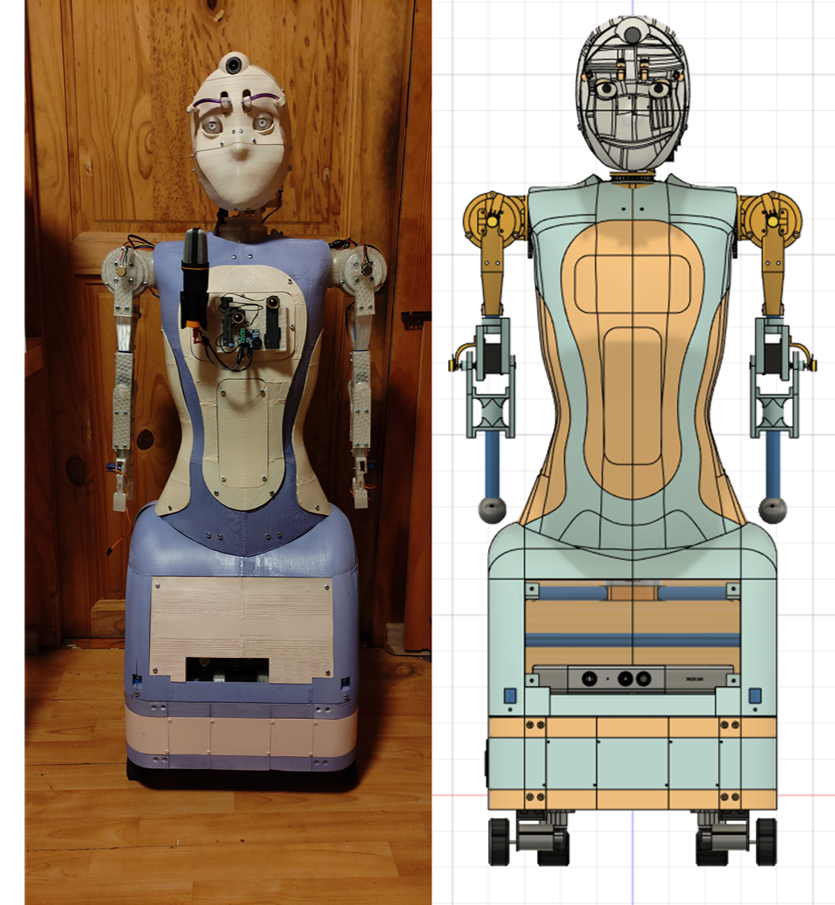

In the logs, I'll explain how I designed it and all the parts involved, but in the meantime you can see an image of the completed robot.

I think this robot can be a good companion thanks to its facial and conversational abilities. The possibilities may not be endless, but there are certainly a lot of them. It has a webcam in the head and another camera in the base (the RGB part of the Kinect), so it can follow a person, for example, or use its SLAM process to check the house and report if something odd is happening (neural networks can do the detection part with just images). It can also remind you of things (meetings from the calendar, maybe). I aim to create a cheap and easy-to-mod version of the Pepper humanoid robot, so follow the project if you are interested. Any feedback is welcome.

Maximiliano Rojas

Petar Crnjak

Piotr Sokólski

prateekt

Irene Sanz
