October 2022 Update

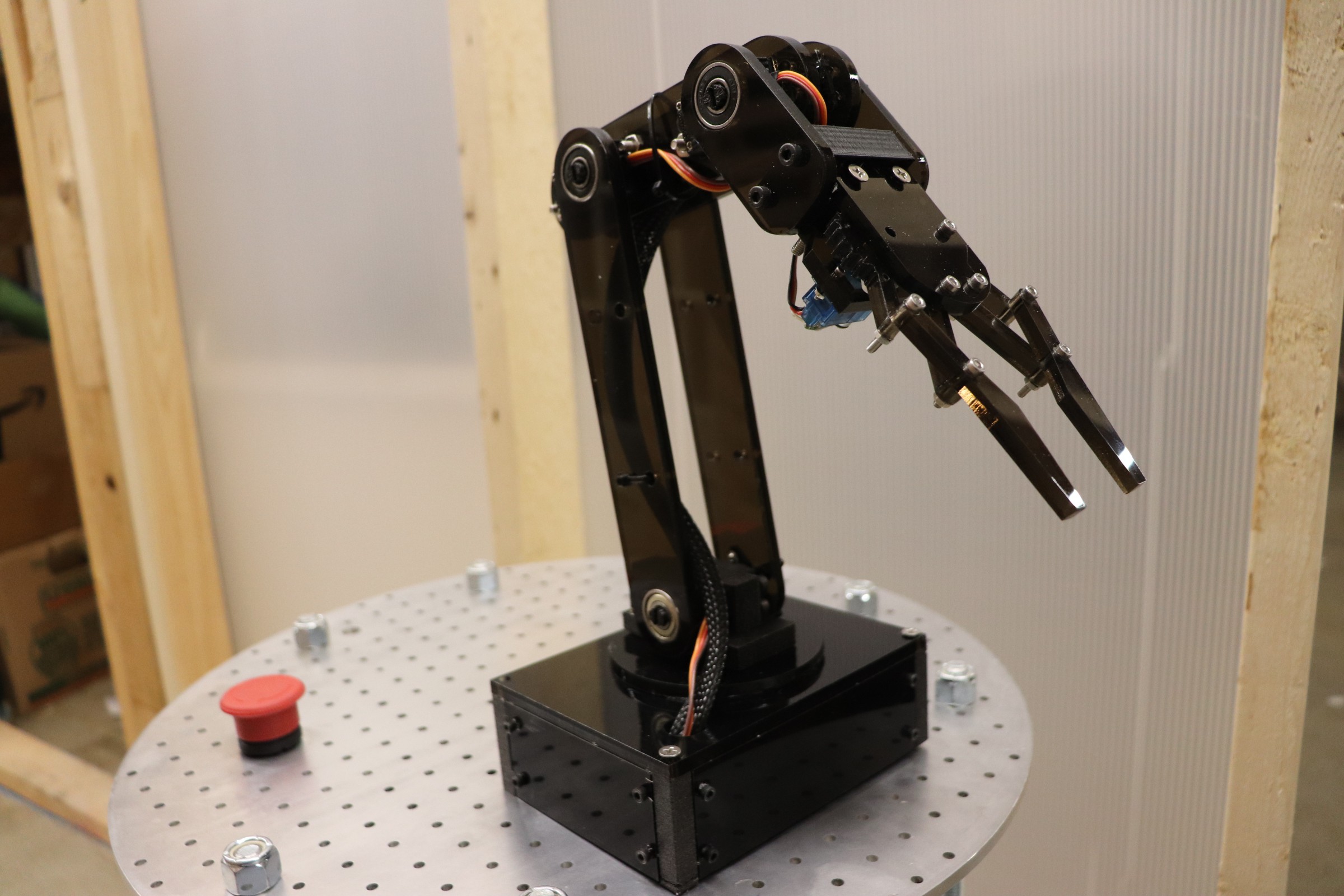

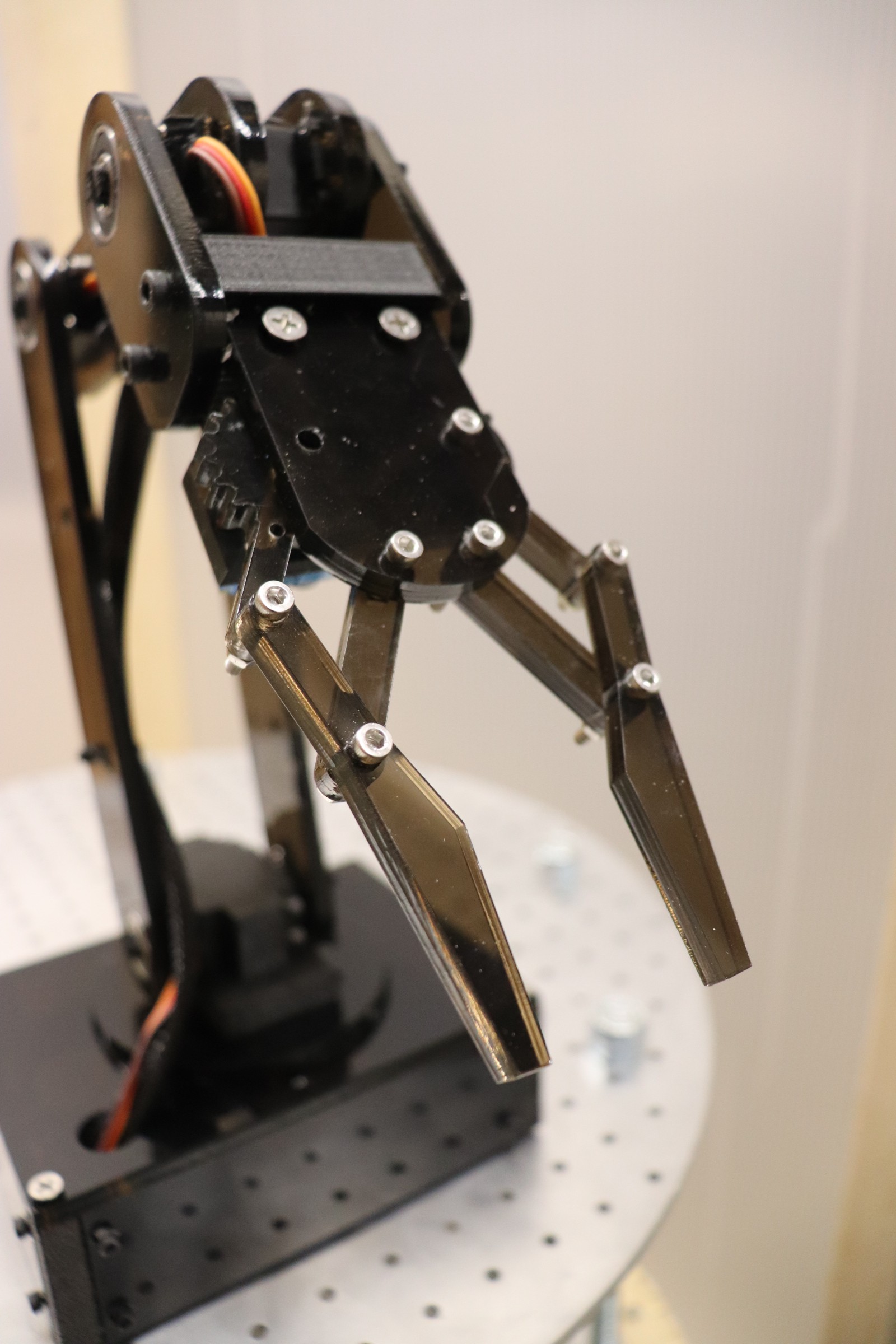

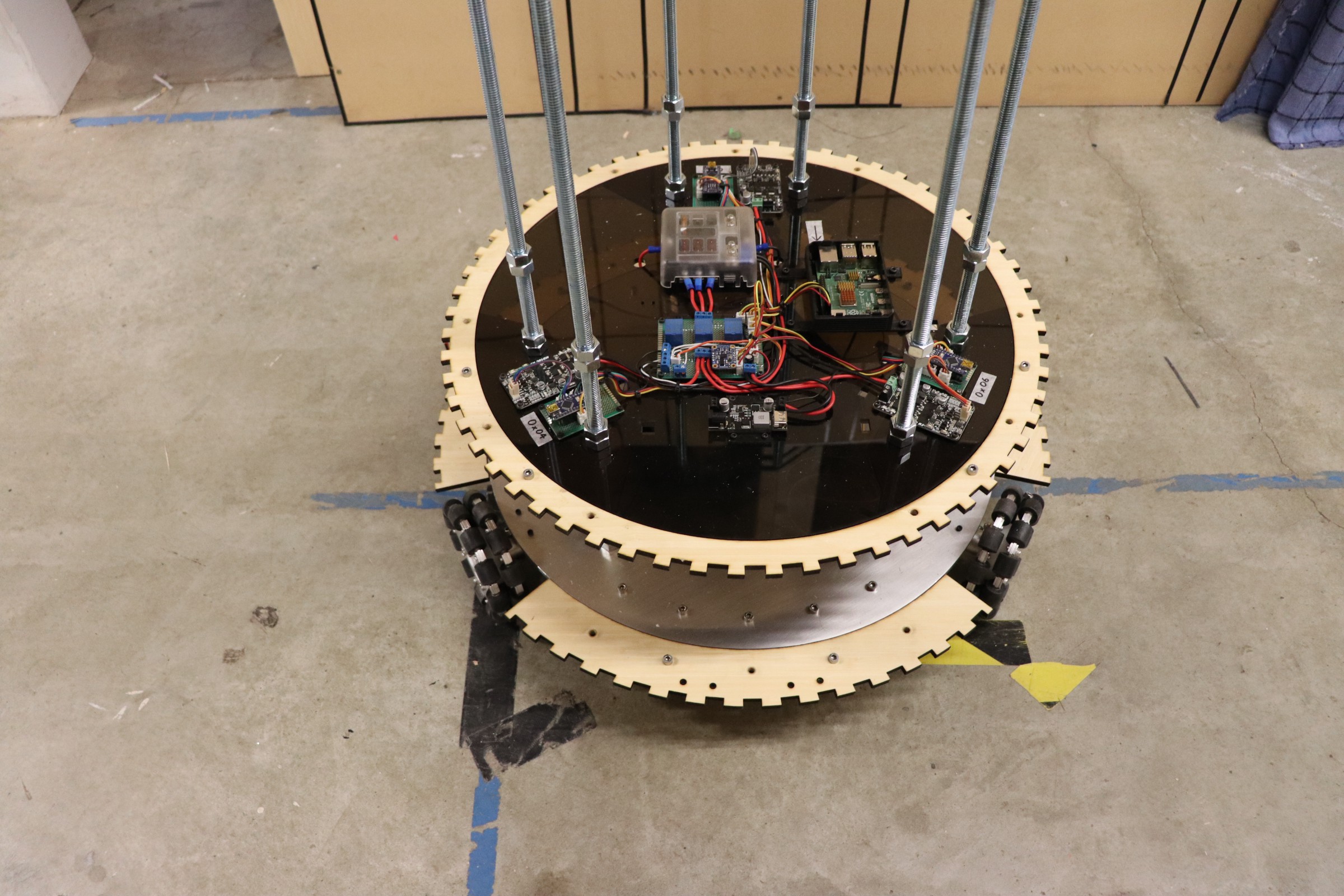

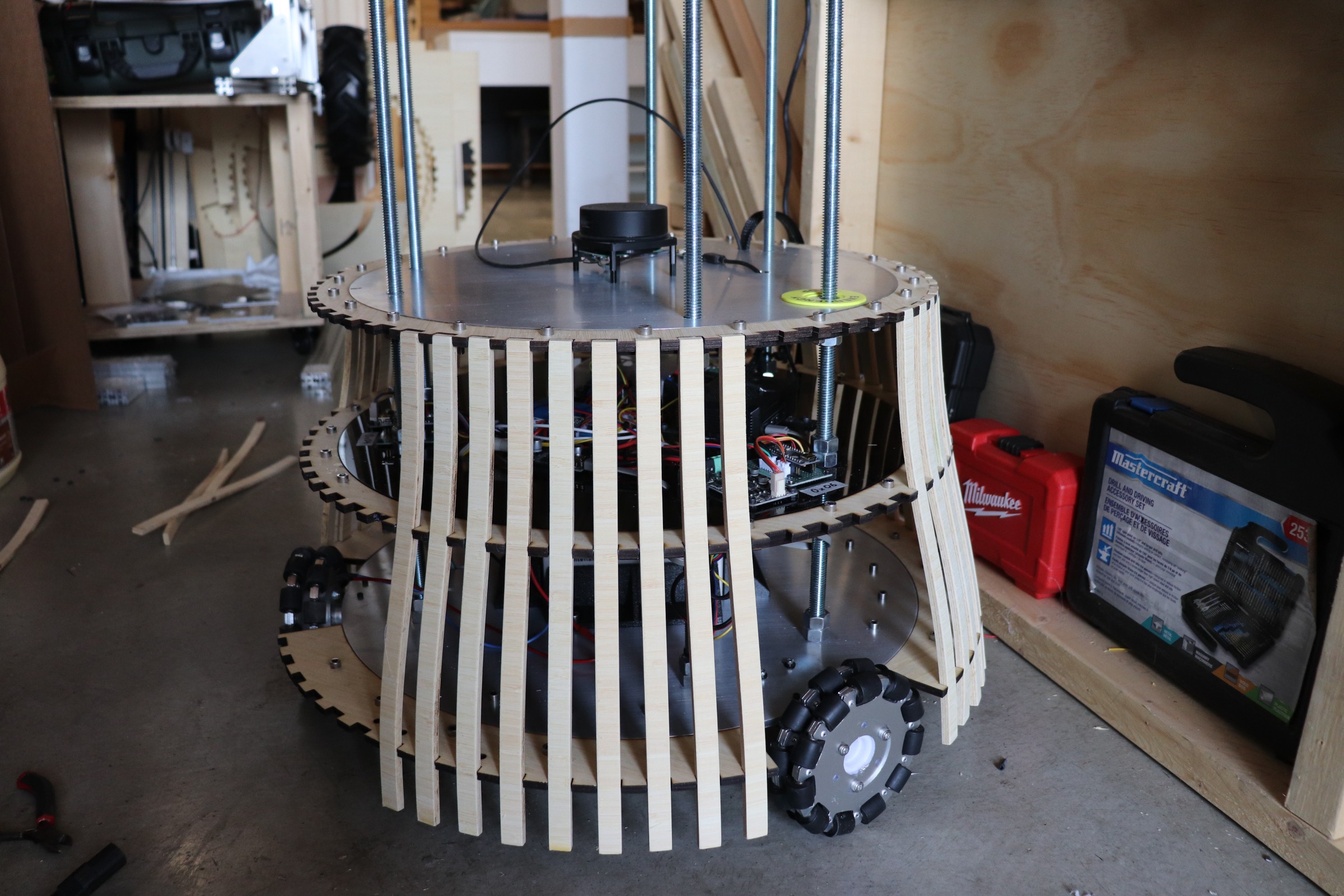

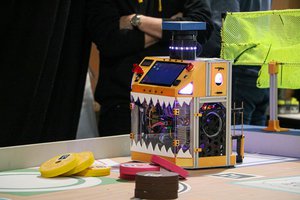

We're releasing the robot! Through the end of 2022, we will be publishing videos documenting the OMNi build process and releasing the CAD, electronics, and software on GitHub. The first video, available below, gives a project overview and lists the design improvements we want to make to OMNi. These improvements will be addressed in the following videos as OMNi is rebuilt from the ground up. Follow along for the journey!

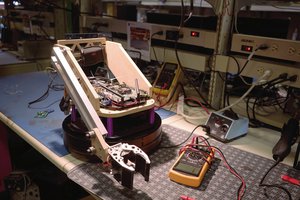

If you want more details right now, check out the past build logs below, which cover the initial prototype development!

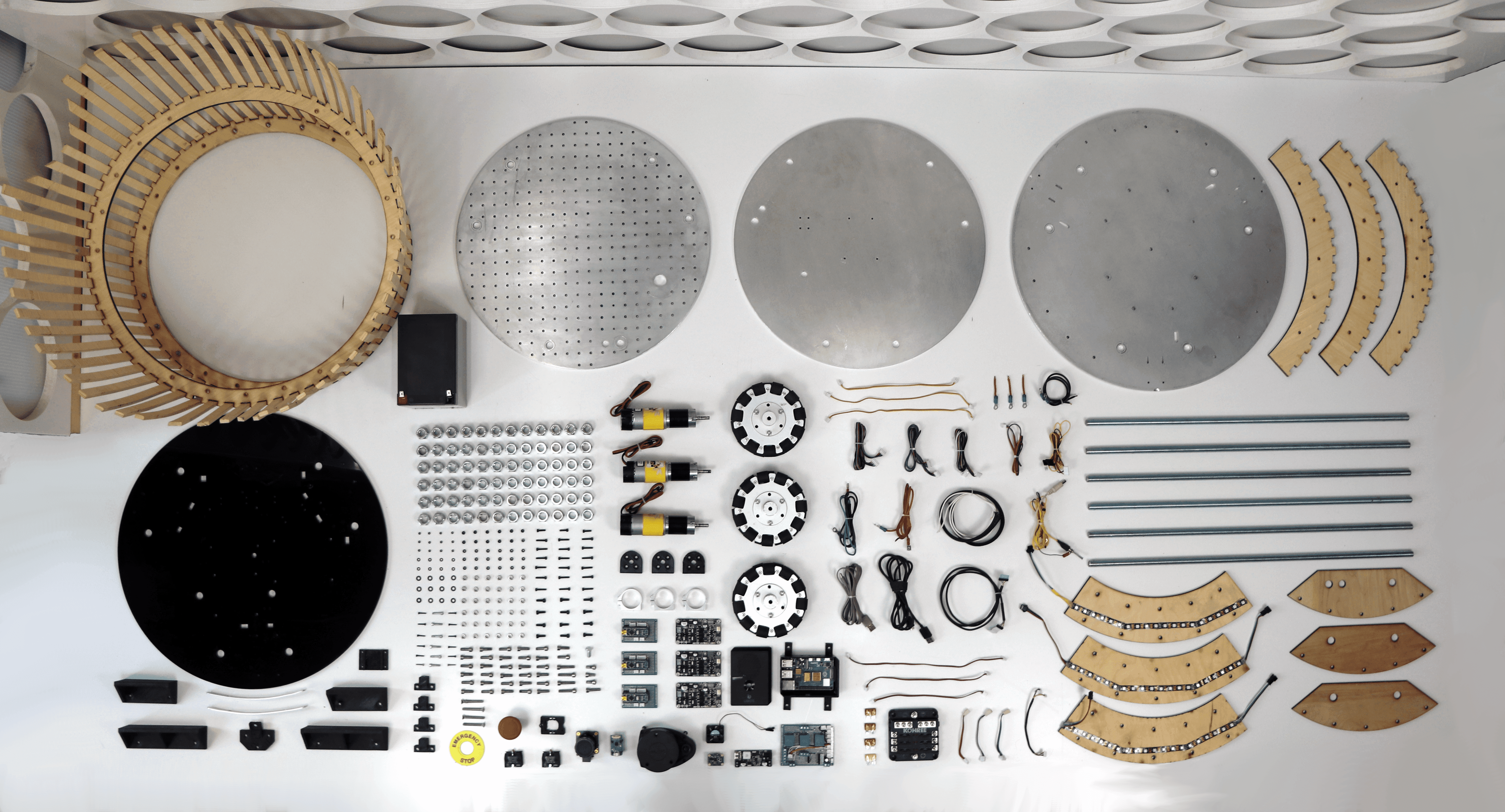

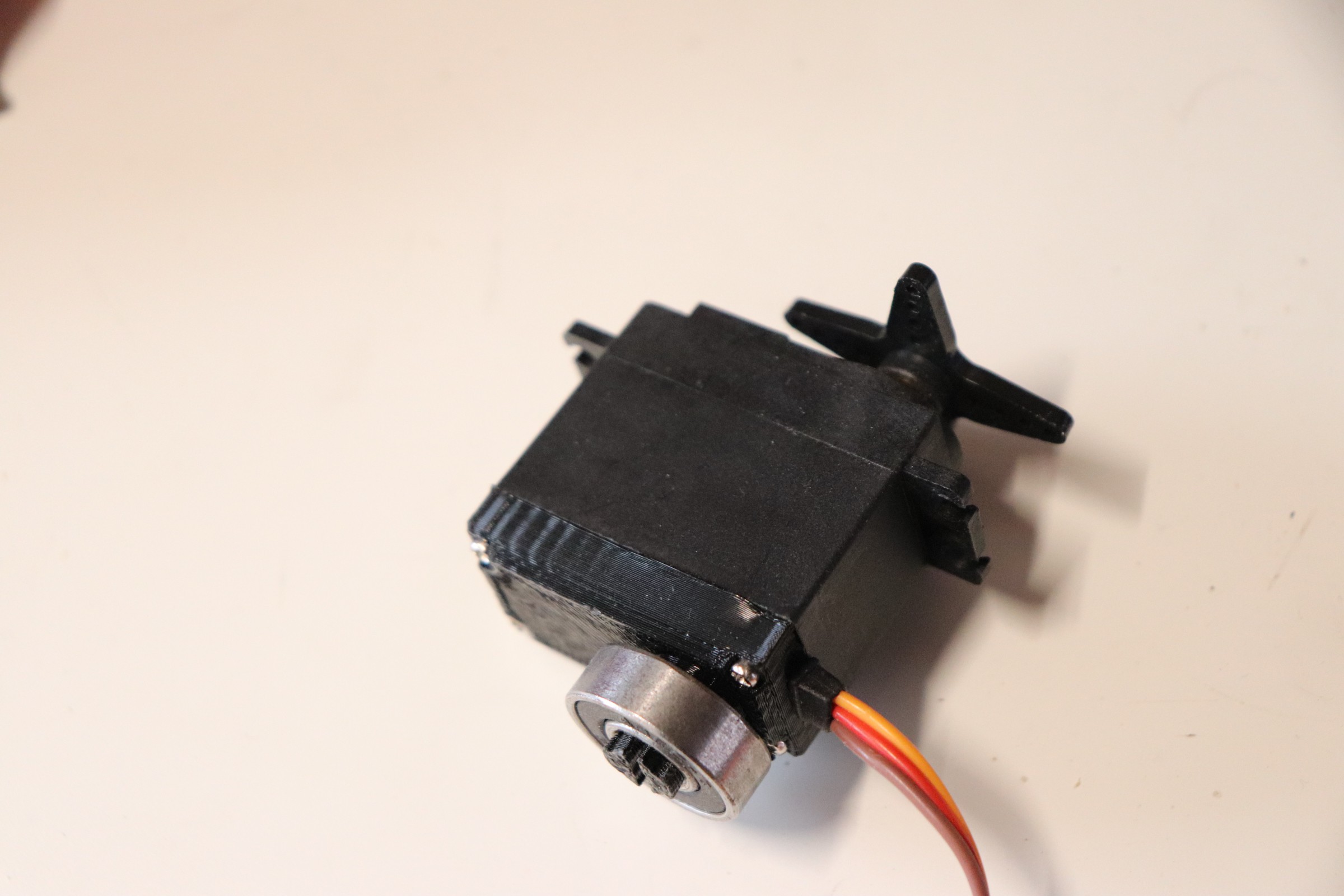

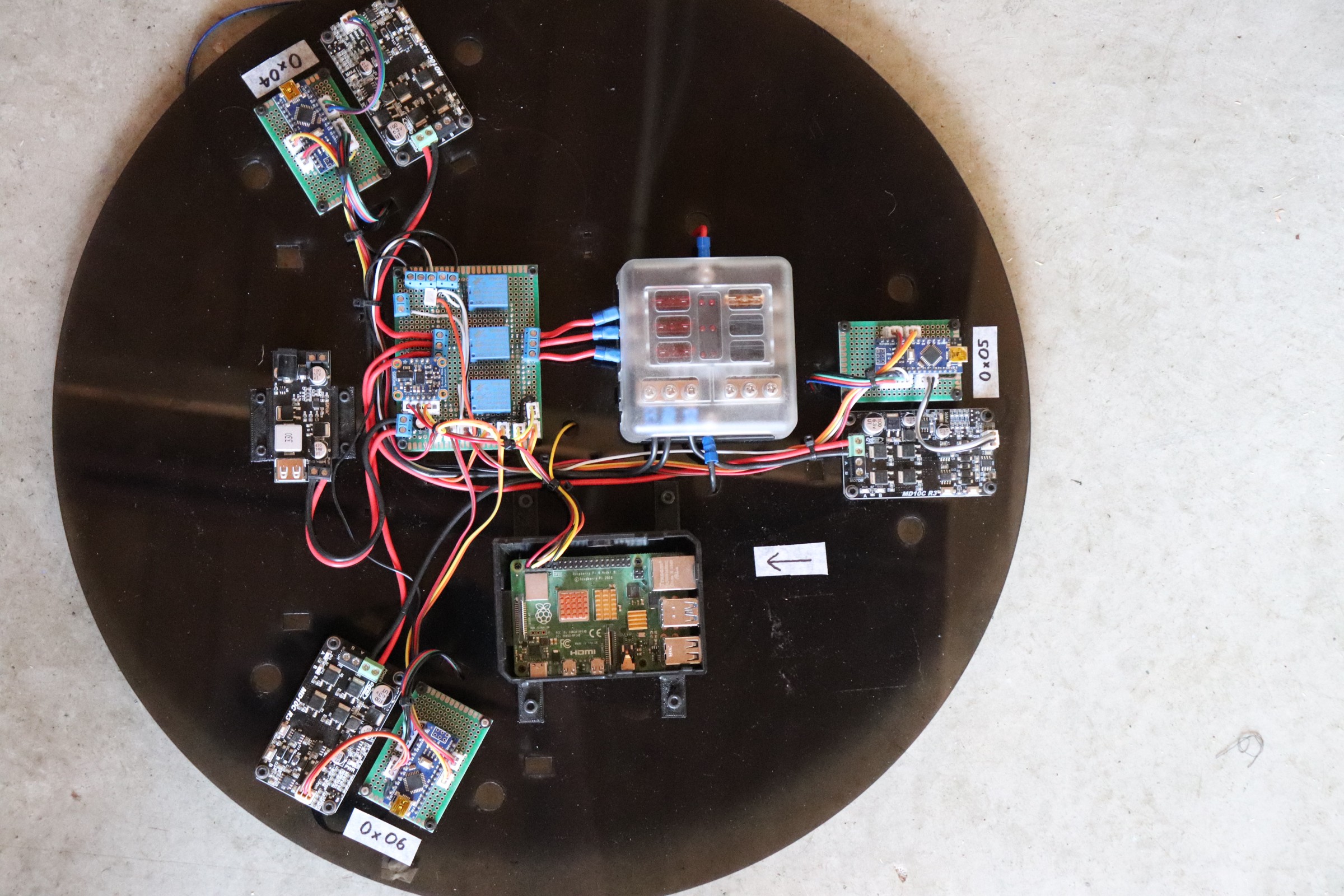

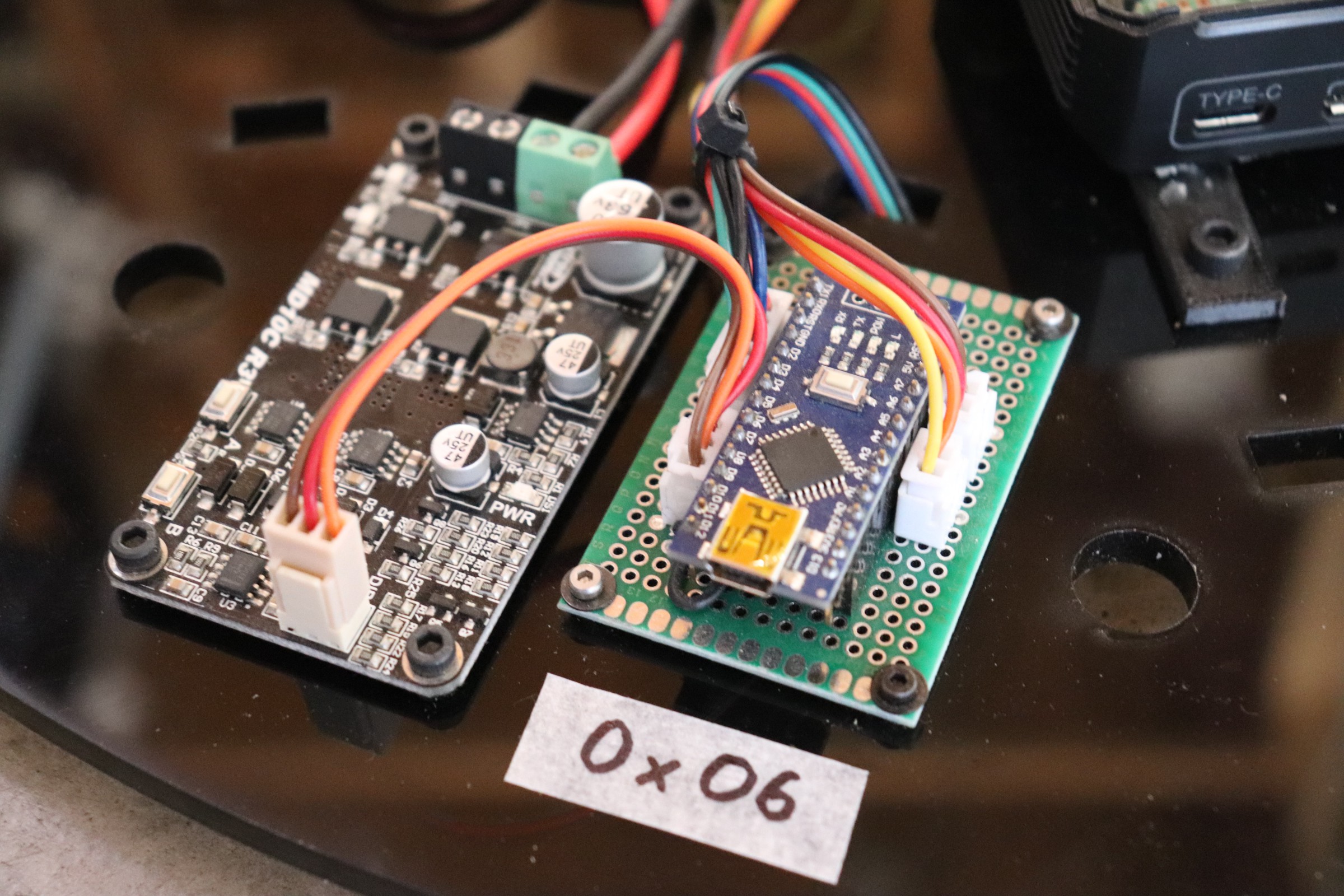

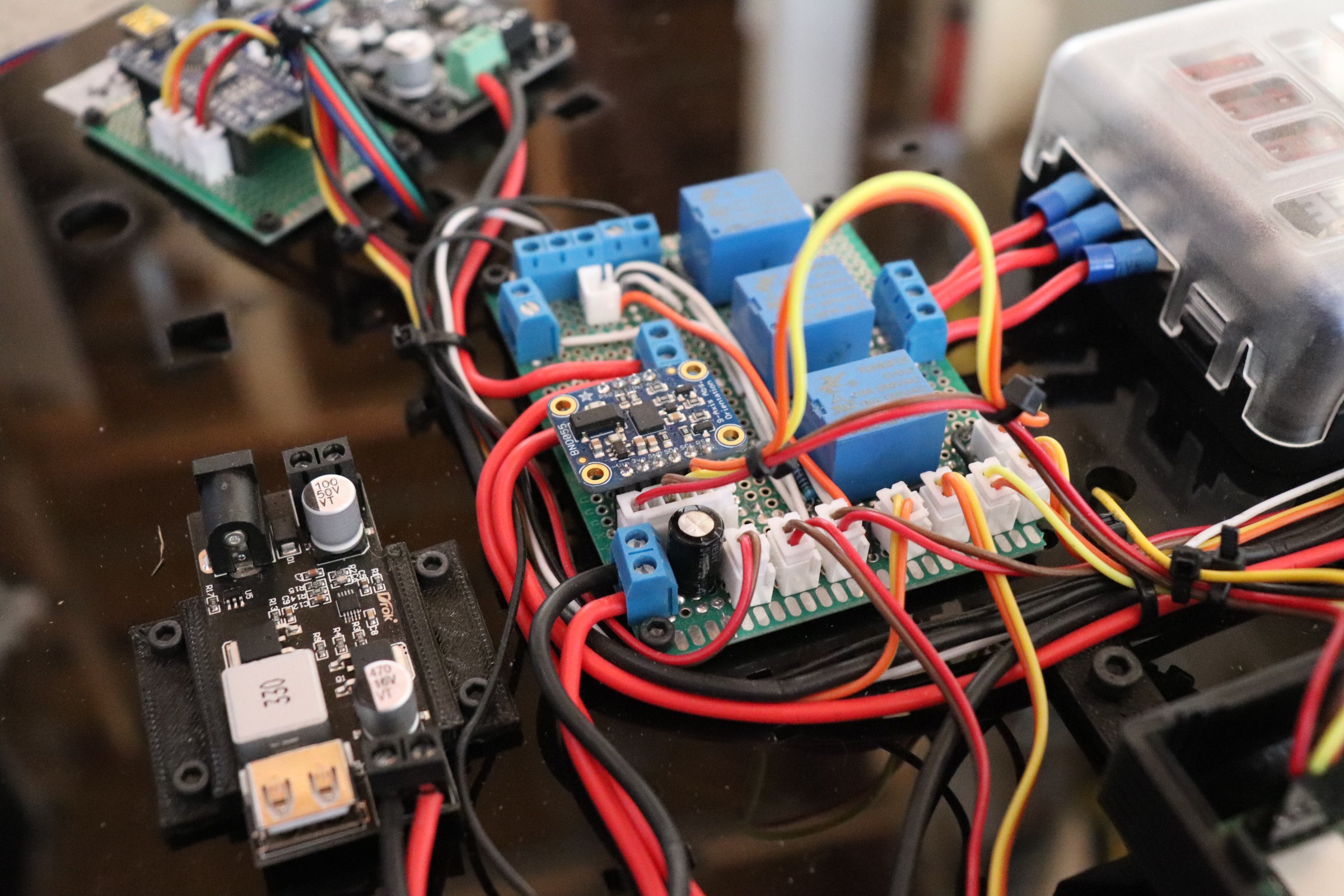

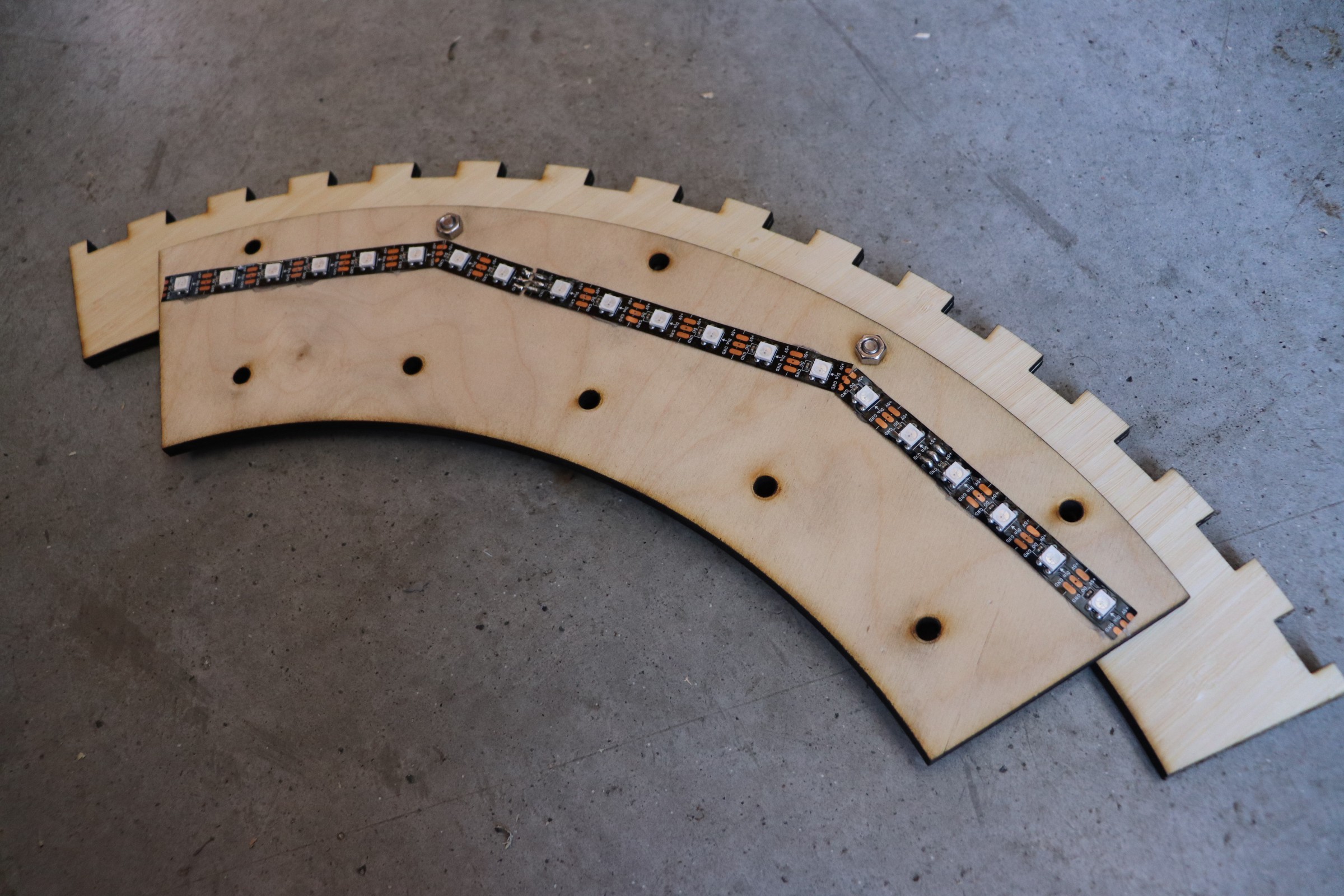

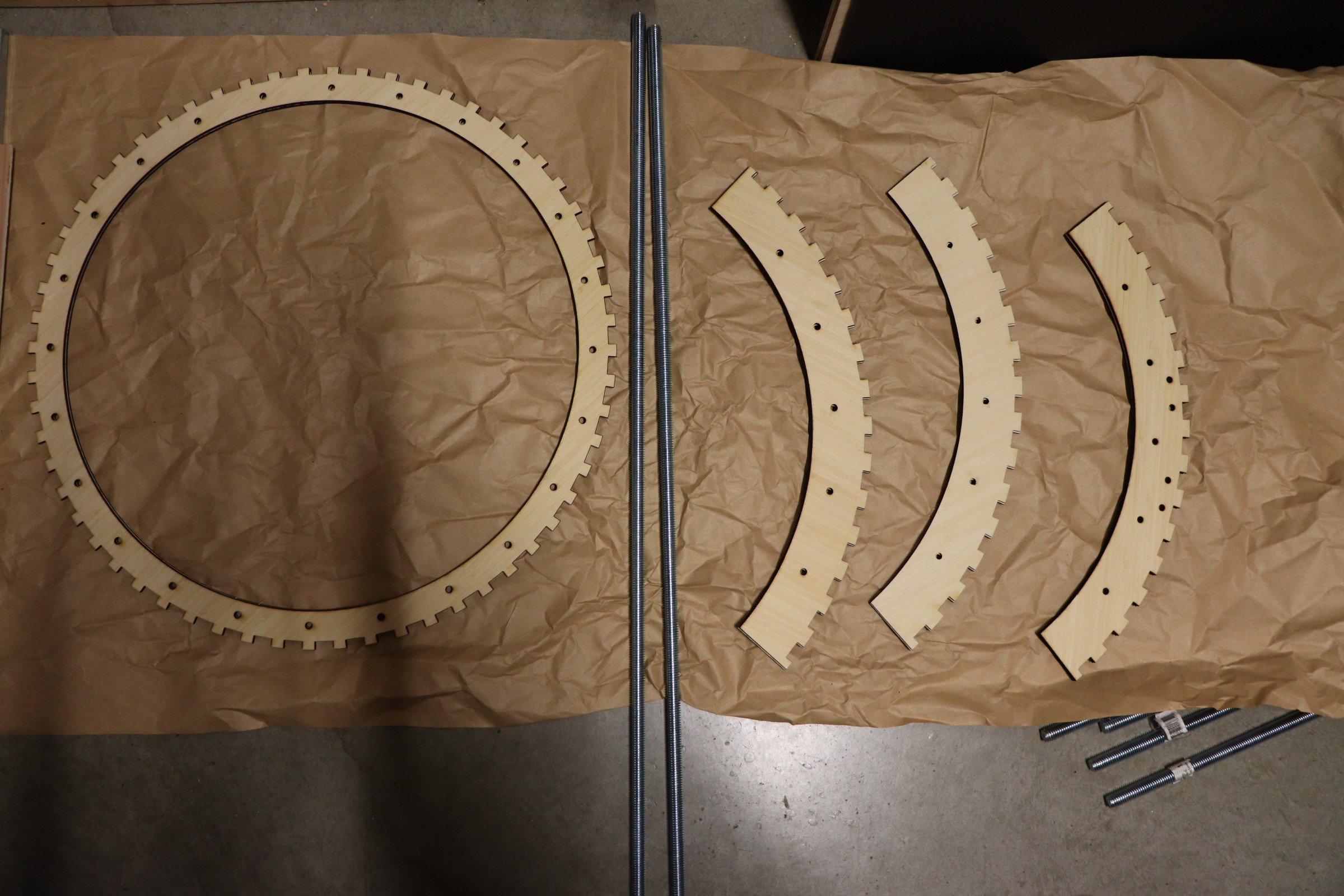

Always be knolling!

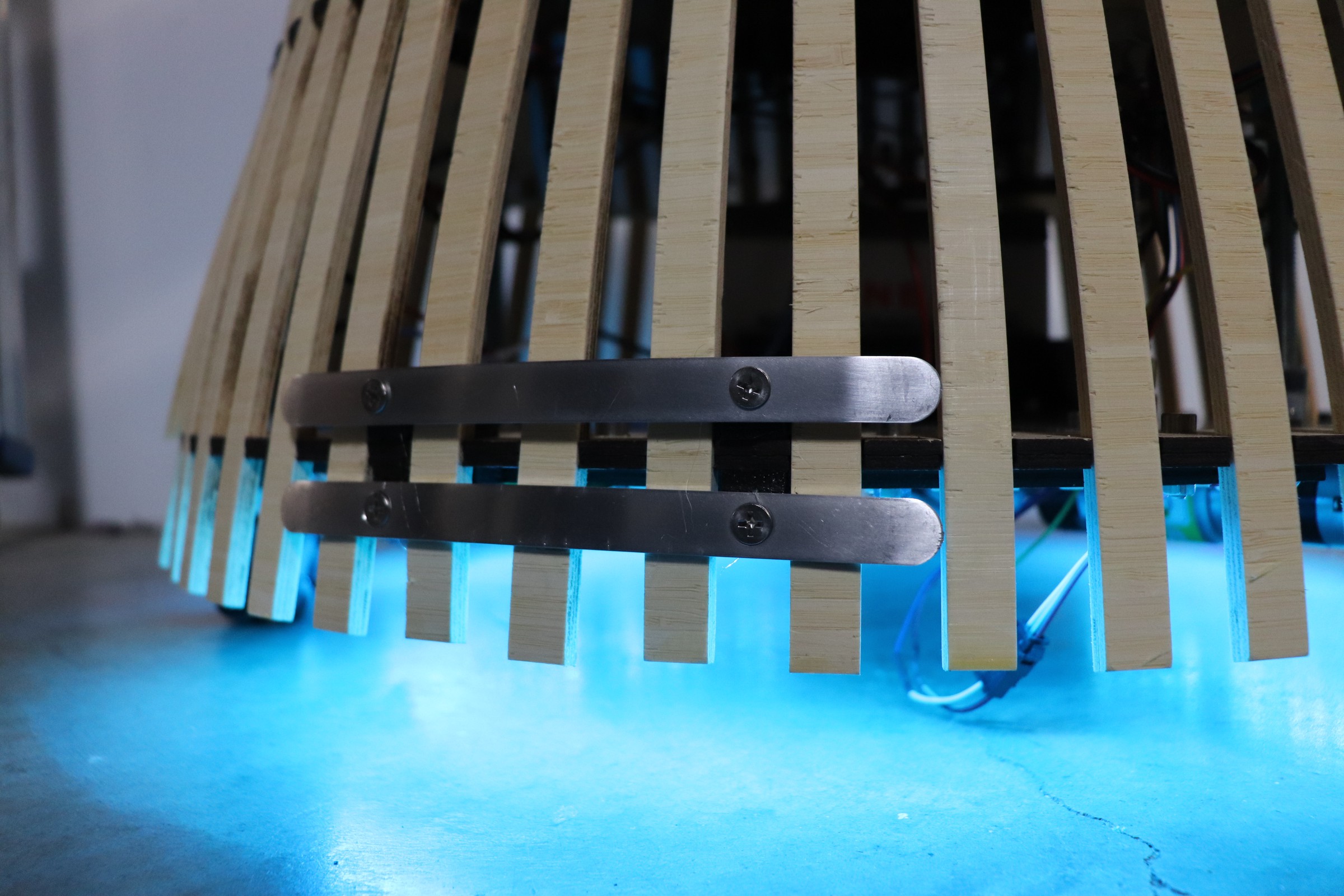

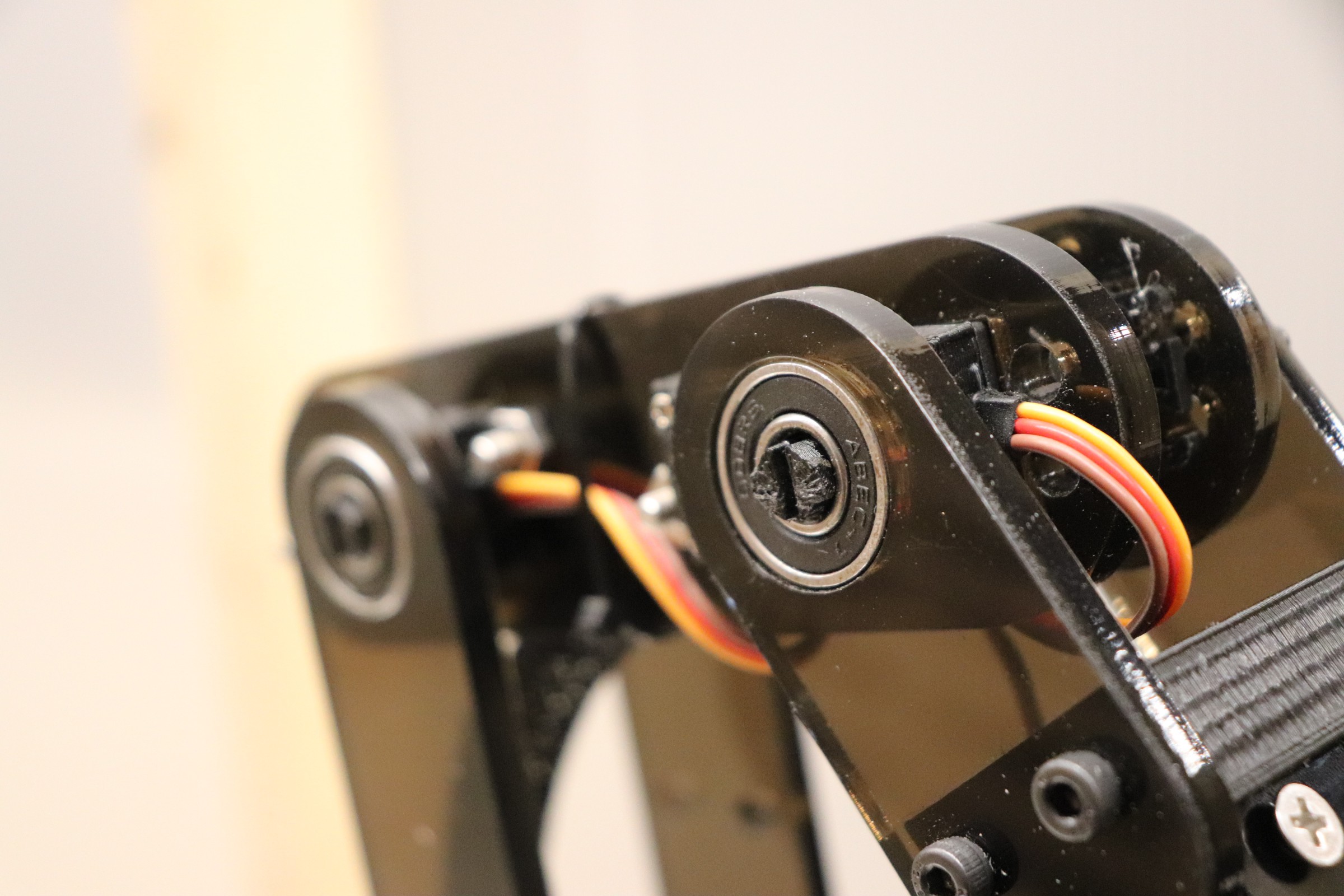

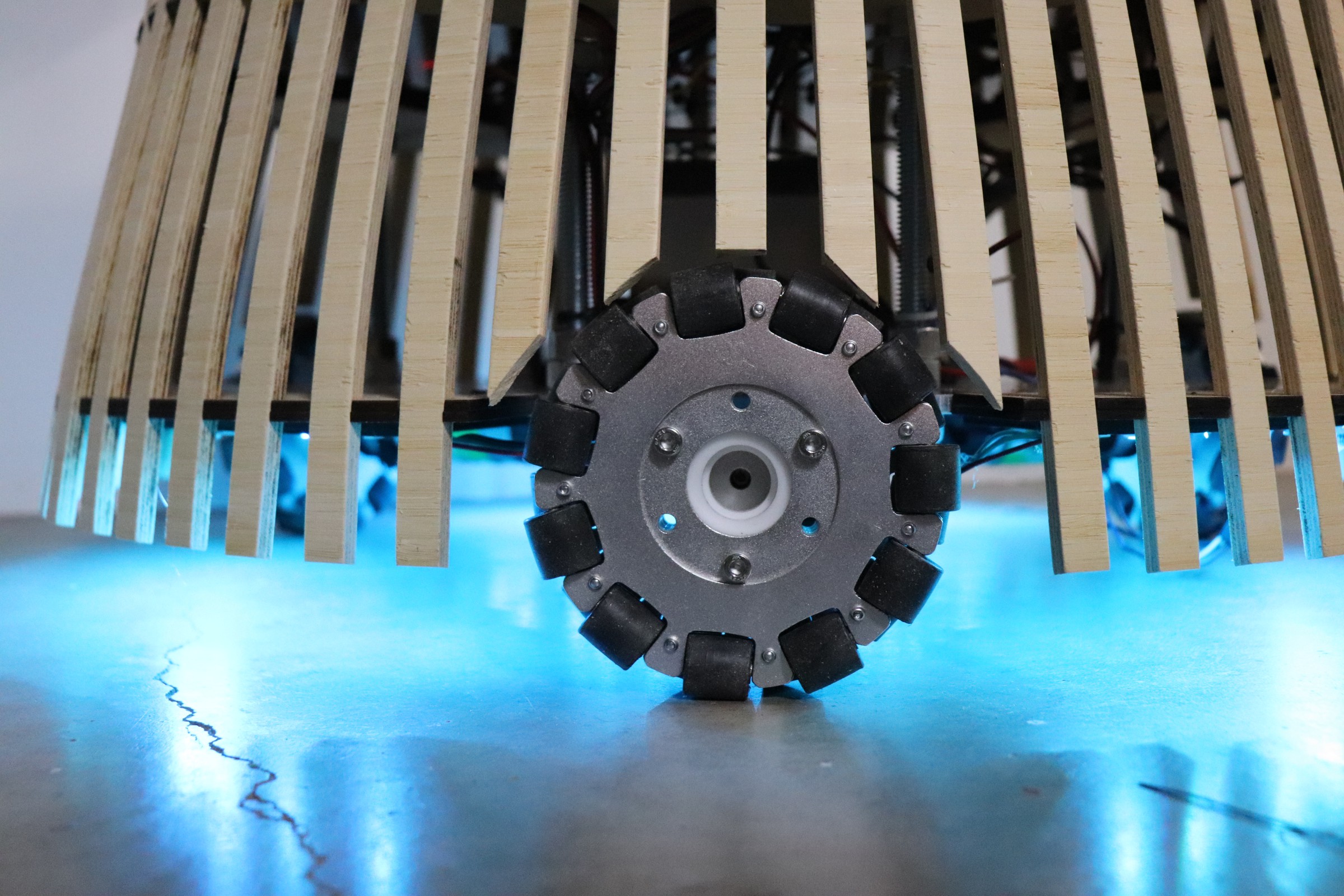

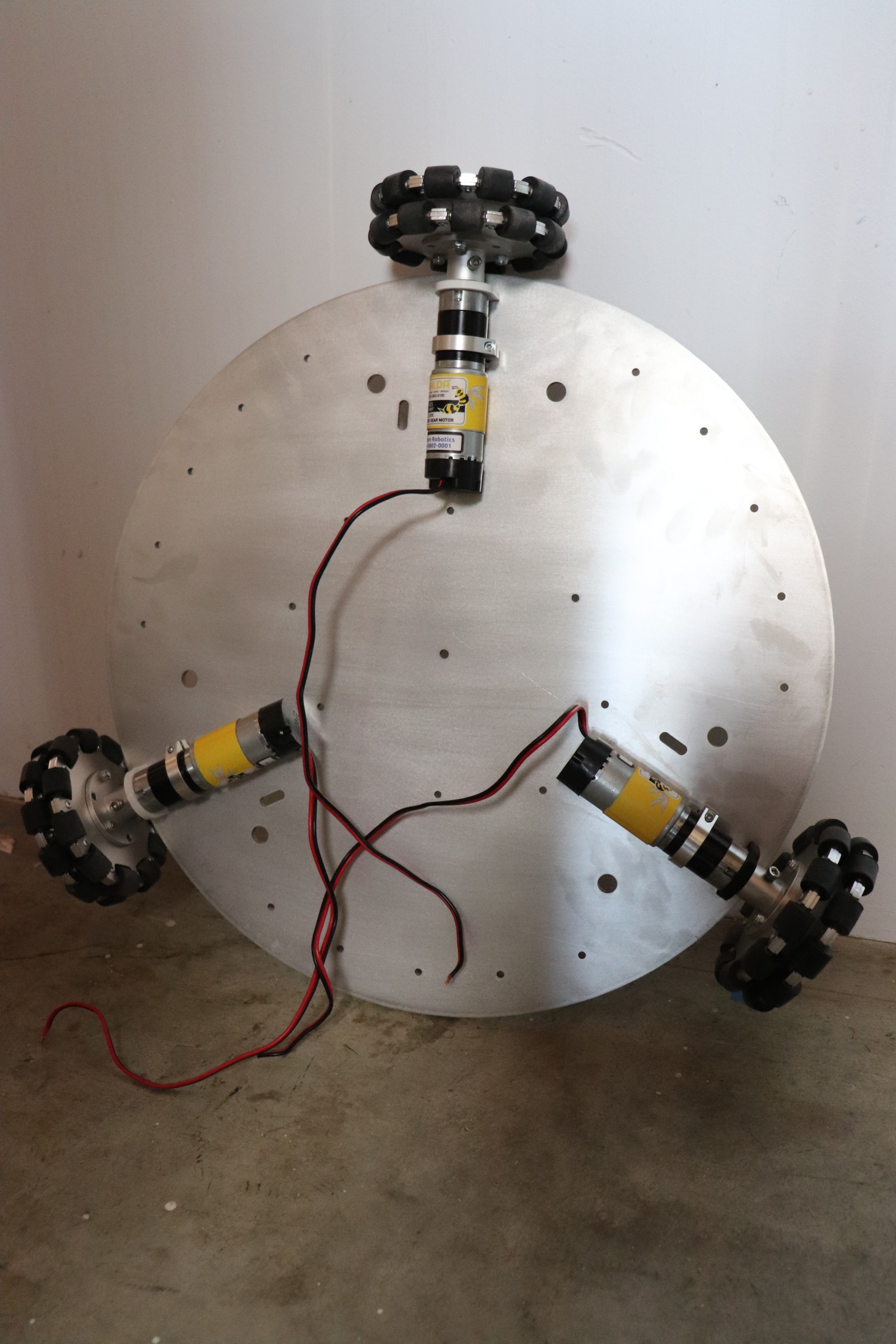

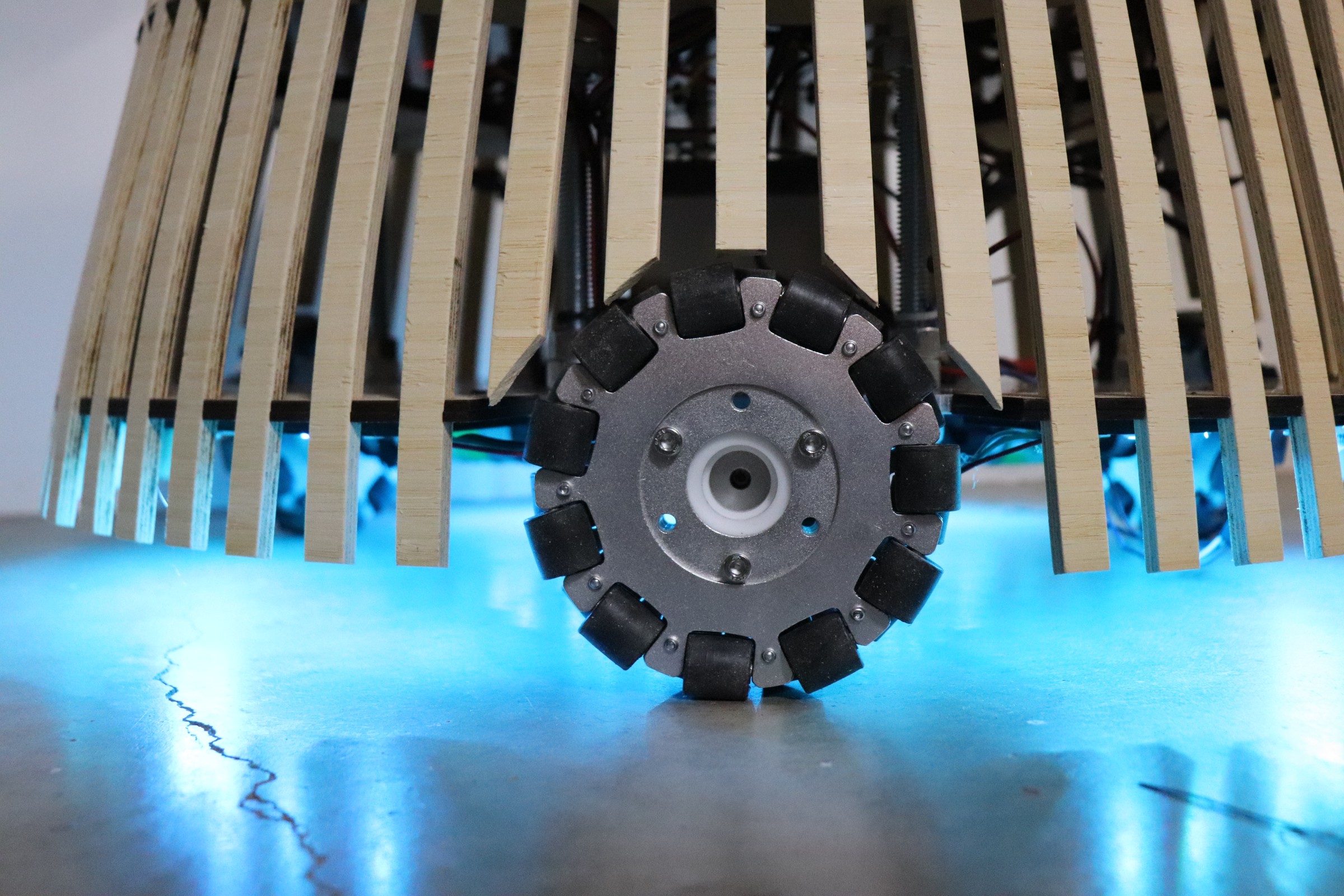

Close-up of the omni wheel drive

The Case for a Museum AV Support Robot

EDM Studio is a Vancouver-based studio that focuses exclusively on the design, development, and support of interactive digital media for cultural museums and science centers. Over the last 15 years, we have worked on all kinds of exhibit projects, from single kiosks in small regional museums to large redevelopments like Calgary’s Telus Spark, the Museum of Australian Democracy in Canberra, and the Canada Science and Technology Museum in Ottawa.

The visitor experiences we’ve worked on are as varied as the locales. We’ve created an immersive mineshaft exploration, a broadcaster exhibit where visitors record color commentary for Olympic events, a touchscreen kiosk exploring the local implications of sea-level rise, an energy conservation interactive that introduces the concept of “vampire current”, and an RFID-mediated experience that allows teams of students to follow a self-directed learning trail, among others.

These experiences leverage technology – computers, touchscreens, projectors, audio, associated physical interfaces – with the goal of providing an intuitive, enjoyable experience for the visitor. Which is all very fine … until something goes wrong. Over the last few years, we have slowly built up a wishlist for an audio-visual support robot. Here are a few use cases:

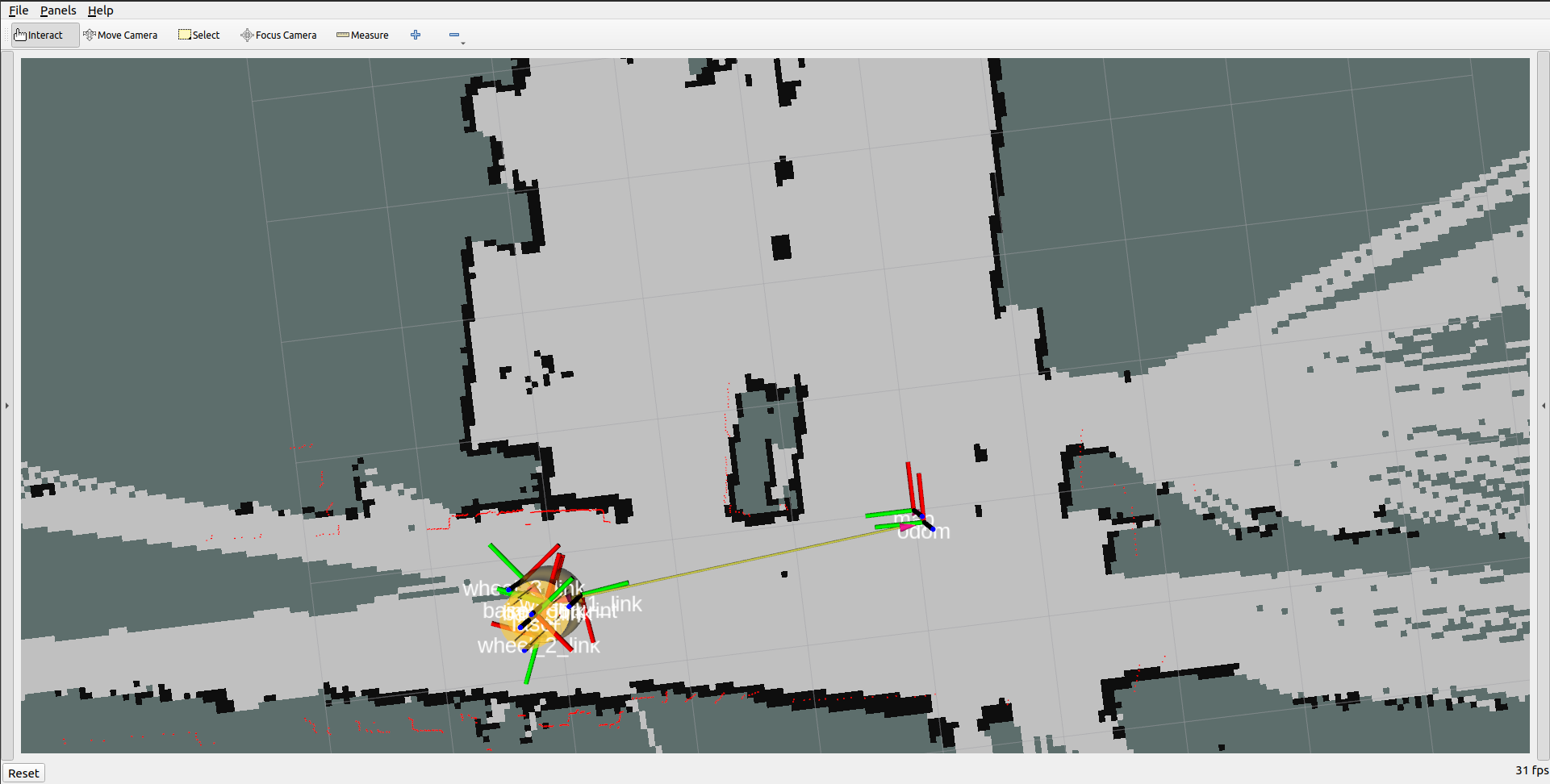

- Rather than relying on a museum staff member doing “morning rounds” to check all equipment, have a robot visit each screen in turn and confirm that it is on and displaying the expected content. For bonus points, physically tap the screen with a stylus and confirm it responds to touch.

- Staff reports an issue with a specific exhibit. All seems to be working when viewed through Remote Desktop. We need a remote set of eyes and hands … so we navigate the robot out to the exhibit, troubleshoot the problem, and report back. For bonus points, we instruct the robot to autonomously go to the exhibit and let us know when it is ready.

- We recently deployed an exhibit that uses a retroreflective ball on a wand as a means of interacting with a virtual wildlife scene. Recalibrating the scene requires someone to physically wave the wand around the space. This is a job for a robot.

While these are (actual) audio-visual use cases, other visitor-centric uses are easy to imagine:

- Telepresence: for example, a remote visitor can explore the museum from afar.

- Wayfinding: a robot that can guide people to specific exhibits.

Museums offer a wonderful environment for the development of assistive robots. OMNi is our team’s attempt to realize this goal. Over the next couple of months, we will be taking our development platform into an actual museum for testing and feedback.

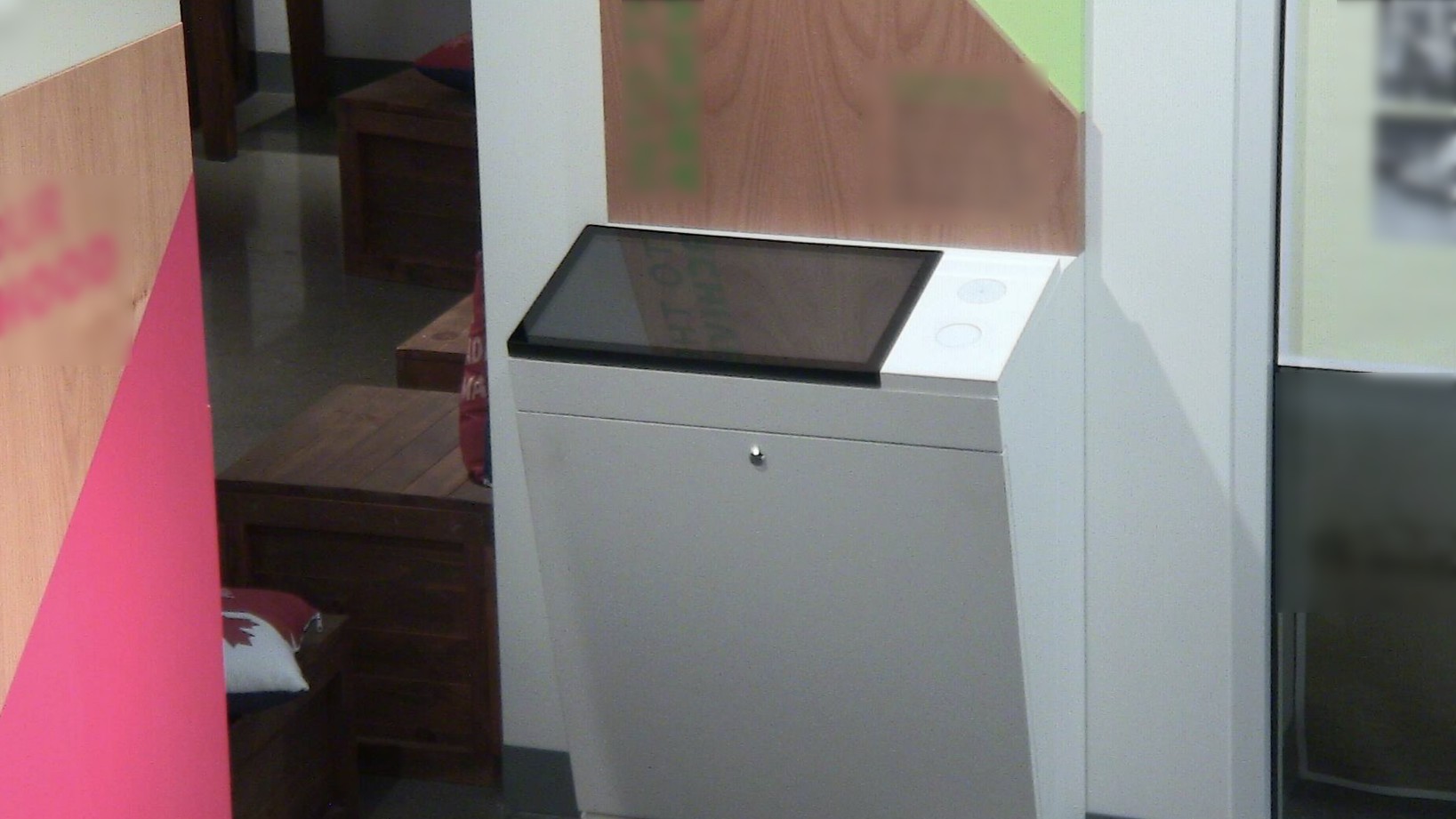

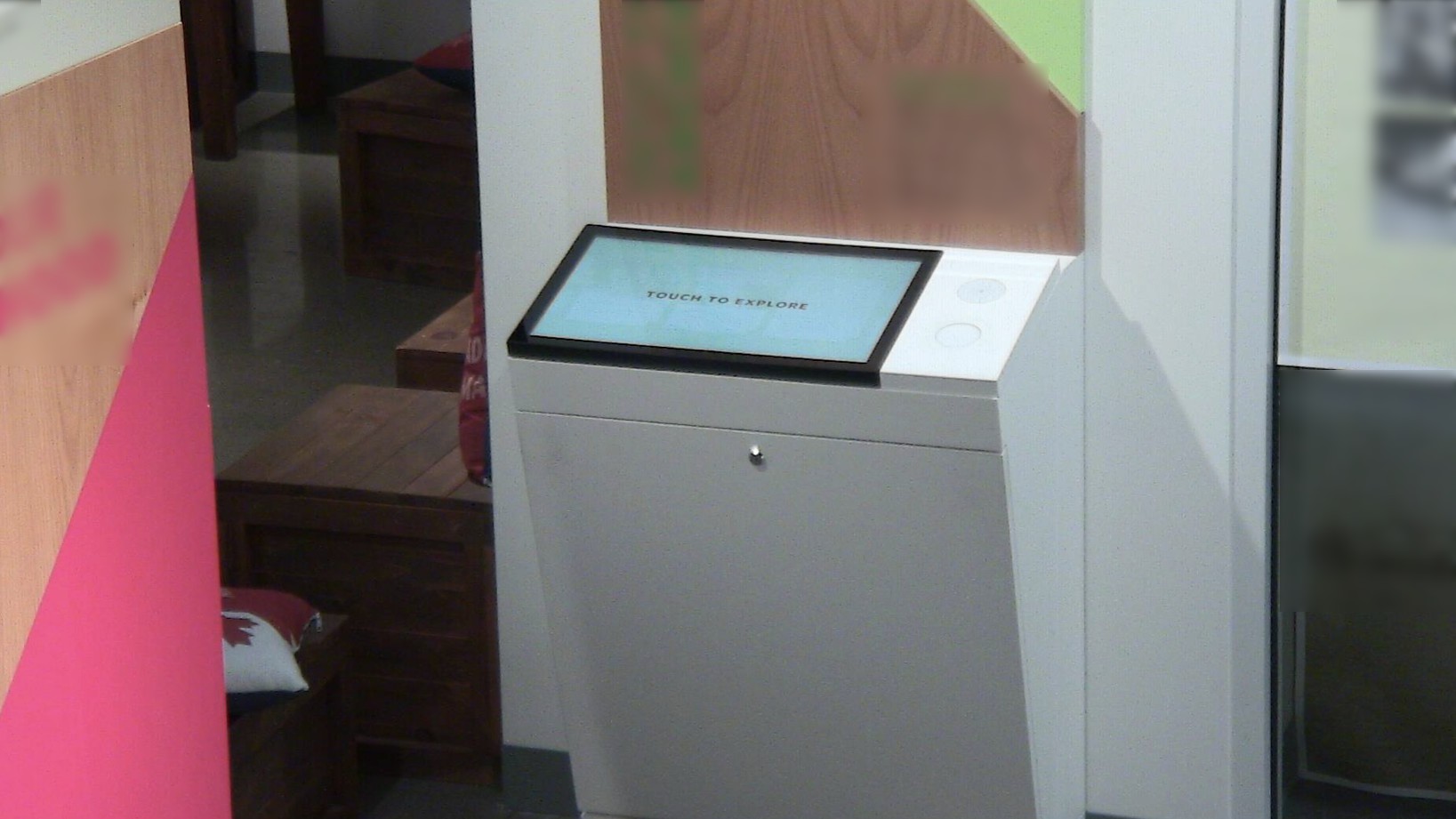

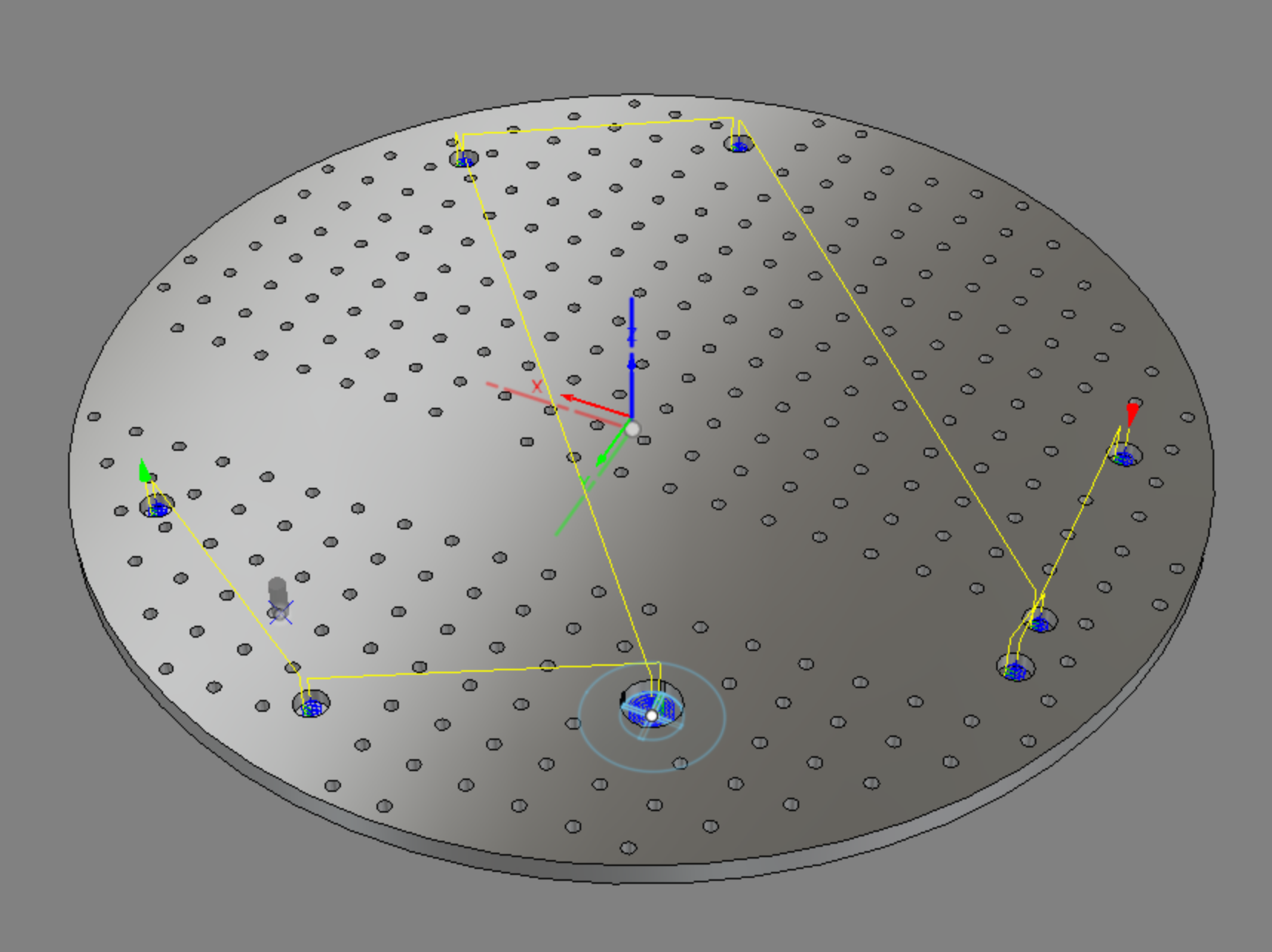

Mockup of OMNi interacting with a touchscreen kiosk at JUMP, Boise.

Will Donaldson

Jack Qiao

Ted Huntington

Gaultier Lecaillon
