The original PNG Request for Comments (RFC 2083) was published in 1997. It is pretty remarkable that almost 25 years later it is still a format we all know and love.

The first thing to know about PNG, and the first thing in the actual file, is the PNG signature. The first 8 bytes of every PNG file are always the same: 137, 80, 78, 71, 13, 10, 26, 10 (the middle three, 80, 78, 71, spell "PNG" in ASCII). This lets a program identify a PNG file early, and it is one of several ways to detect a corrupted image.
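A minimal Python sketch of the signature check is below. The names `PNG_SIGNATURE`, `has_png_signature`, and `file_has_png_signature` are illustrative, not from any library. Since 137 is 0x89 and 26 is 0x1A, the same 8 bytes are often written as `b"\x89PNG\r\n\x1a\n"`.

```python
# 137 is 0x89 (high bit set), 80 78 71 spell "PNG", then CR LF, Ctrl-Z (26), LF
PNG_SIGNATURE = bytes([137, 80, 78, 71, 13, 10, 26, 10])


def has_png_signature(data: bytes) -> bool:
    """Return True if `data` begins with the 8-byte PNG signature."""
    return data[:8] == PNG_SIGNATURE


def file_has_png_signature(path: str) -> bool:
    """Read only the first 8 bytes of a file and compare them to the signature."""
    with open(path, "rb") as f:
        return has_png_signature(f.read(8))
```

The mix of a high-bit byte with CR LF and LF is deliberate: a file that went through a 7-bit channel or a line-ending conversion will no longer match, so the damage is caught before any chunk is parsed.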

After the signature, the PNG file is divided into chunks. Each chunk has four parts: length, type, data, and CRC. The chunk starts with 4 bytes (a big-endian integer) telling you how long the data section is. The length is followed by 4 bytes that tell you what type of chunk you are looking at. The chunk data comes next, and the chunk ends with a 4-byte CRC (cyclic redundancy check) computed over the type and data fields, used as a checksum to make sure your data is sound.
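Here is a minimal sketch of walking those four parts, assuming the whole file is already in memory as a `bytes` object. `iter_chunks` is a hypothetical helper name; it relies on Python's `zlib.crc32`, which computes the same CRC-32 that PNG uses.

```python
import struct
import zlib

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def iter_chunks(data: bytes):
    """Yield (chunk_type, chunk_data) pairs from a complete PNG byte string.

    Layout of each chunk: 4-byte big-endian length, 4-byte type,
    `length` bytes of data, then a 4-byte CRC over type + data.
    """
    if data[:8] != PNG_SIGNATURE:
        raise ValueError("not a PNG file")
    pos = 8
    while pos < len(data):
        (length,) = struct.unpack(">I", data[pos:pos + 4])
        chunk_type = data[pos + 4:pos + 8]
        chunk_data = data[pos + 8:pos + 8 + length]
        (stored_crc,) = struct.unpack(">I", data[pos + 8 + length:pos + 12 + length])
        # The length field is NOT covered by the CRC; only type and data are.
        if zlib.crc32(chunk_type + chunk_data) != stored_crc:
            raise ValueError(f"CRC mismatch in {chunk_type!r} chunk")
        yield chunk_type.decode("ascii"), chunk_data
        pos += 12 + length  # 4 (length) + 4 (type) + data + 4 (CRC)
        if chunk_type == b"IEND":
            break
```

A truncated file makes `struct.unpack` raise `struct.error`, which is a reasonable "this file is broken" signal for a sketch like this.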

There are many types of chunks, but the ones to look out for are IHDR, IDAT, and IEND. Every PNG file starts with an IHDR chunk right after the PNG signature, and it gives you a lot of detail about the image: its width, height, bit depth, color type, compression method, filter method, and interlace method. IDAT chunks contain all of the image data. The data may be split across multiple IDAT chunks, but it can be thought of as one continuous stream: just stick the first byte of each new IDAT chunk right after the last byte of the previous one. IEND signifies the end of the image. Its data field is always empty (a length of 0), but it is still followed by a CRC.
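The IHDR data is always 13 bytes, so it can be unpacked in one step. This is a sketch; `IHDR` and `parse_ihdr` are hypothetical names, and the field order follows the list above.

```python
import struct
from typing import NamedTuple


class IHDR(NamedTuple):
    width: int
    height: int
    bit_depth: int      # bits per sample (or per palette index)
    color_type: int     # 0 gray, 2 RGB, 3 palette, 4 gray+alpha, 6 RGB+alpha
    compression: int    # 0 = zlib/DEFLATE, the only method defined
    filter_method: int  # 0 = the standard set of five per-line filters
    interlace: int      # 0 = none, 1 = Adam7


def parse_ihdr(chunk_data: bytes) -> IHDR:
    """Unpack the 13-byte IHDR payload: two big-endian uint32s, then five bytes."""
    if len(chunk_data) != 13:
        raise ValueError("IHDR data must be exactly 13 bytes")
    return IHDR(*struct.unpack(">IIBBBBB", chunk_data))
```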

After sticking all of the IDAT chunks together, you have your compressed data in the form of a zlib stream.
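In Python the joining and inflating takes only a couple of lines. This sketch assumes the chunks have already been read into `(chunk_type, chunk_data)` pairs such as `("IDAT", b"...")`; `decompress_idat` is a hypothetical name.

```python
import zlib


def decompress_idat(chunks) -> bytes:
    """Join every IDAT payload in file order, then inflate the single zlib stream.

    `chunks` is assumed to be an iterable of (chunk_type, chunk_data) pairs.
    """
    stream = b"".join(data for chunk_type, data in chunks if chunk_type == "IDAT")
    return zlib.decompress(stream)
```

The result is not pixels yet. For a non-interlaced image it is `height` scanlines, each one a filter-type byte followed by that row's bytes, which is where the per-line filters come in.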

zlib uses the DEFLATE algorithm to implement lossless compression. Unlike JPEG, this method of compression does not require any kind of advanced math to understand. It boils down to a combination of LZ77 (a sliding-window cousin of run length encoding) and Huffman coding.
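A zlib stream is a thin wrapper: a 2-byte header, the DEFLATE data, and a 4-byte Adler-32 checksum. The sketch below decodes the header fields and shows repetitive input shrinking; `describe_zlib_stream` is a hypothetical helper, and the bit positions come from the zlib format (RFC 1950).

```python
import zlib


def describe_zlib_stream(stream: bytes) -> dict:
    """Decode the two zlib header bytes (CMF and FLG)."""
    cmf, flg = stream[0], stream[1]
    return {
        "method": cmf & 0x0F,                        # 8 means DEFLATE
        "window_bits": (cmf >> 4) + 8,               # log2 of the LZ77 window size
        "header_check_ok": (cmf * 256 + flg) % 31 == 0,
        "has_preset_dict": bool(flg & 0x20),         # never set in PNG
    }


data = b"ABCABCABCABC" * 100
packed = zlib.compress(data)
info = describe_zlib_stream(packed)
print(len(data), len(packed), info)
```

With Python's default settings the window is 2^15 = 32768 bytes, the maximum DEFLATE allows.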

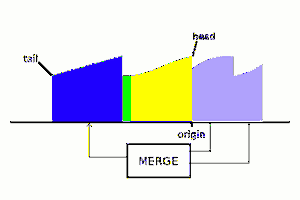

LZ77, often loosely described as run length encoding, works by looking backwards in the data for a pattern that matches the data you want to encode. If a match is found, the encoder replaces that data with a pair of numbers: how far back to go, and, once you get there, how many bytes to copy.
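Here is a toy, greedy version of that back-reference idea. It is a simplified sketch, not zlib's actual matcher (zlib uses hash chains and lazy matching), and `lz77_encode`/`lz77_decode` are hypothetical names. The window of 32768, minimum match of 3, and maximum match of 258 mirror DEFLATE's limits.

```python
def lz77_encode(data: bytes, window: int = 32768, min_len: int = 3, max_len: int = 258):
    """Greedy toy LZ77: emit ("lit", byte) or ("ref", distance, length) tokens."""
    tokens = []
    i = 0
    while i < len(data):
        best_len, best_dist = 0, 0
        for j in range(max(0, i - window), i):
            length = 0
            # A match may run past position i (overlap); that is how runs get encoded.
            while (length < max_len and i + length < len(data)
                   and data[j + length] == data[i + length]):
                length += 1
            if length > best_len:
                best_len, best_dist = length, i - j
        if best_len >= min_len:
            tokens.append(("ref", best_dist, best_len))
            i += best_len
        else:
            tokens.append(("lit", data[i]))
            i += 1
    return tokens


def lz77_decode(tokens) -> bytes:
    out = bytearray()
    for tok in tokens:
        if tok[0] == "lit":
            out.append(tok[1])
        else:
            _, dist, length = tok
            for _ in range(length):
                out.append(out[-dist])  # byte by byte so overlapping copies work
    return bytes(out)
```

For example, `lz77_encode(b"aaaaaaaaaa")` gives one literal `a` followed by `("ref", 1, 9)`: "go back 1 byte and copy 9 bytes", which is exactly a run-length encoding expressed as a back-reference.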

Huffman coding compresses data by replacing commonly occurring symbols with short codes and infrequent symbols with long codes. For example, in English you probably use the letters E and A a lot and the letters Q and Z very rarely. If you are familiar with C, you know that every character takes up 8 bits regardless of how often you use it. With Huffman coding, E could take fewer than 8 bits and Z more than 8, reducing the total size of your data in the end.
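A small sketch of building a Huffman code from letter counts, using Python's `heapq`. `huffman_code` is a hypothetical name. DEFLATE itself uses canonical Huffman codes limited to 15 bits, which this sketch does not attempt.

```python
import heapq
from collections import Counter


def huffman_code(text: str) -> dict:
    """Build a prefix-free code (symbol -> bit string) from symbol frequencies."""
    freq = Counter(text)
    if len(freq) == 1:  # a single distinct symbol still needs a 1-bit code
        return {next(iter(freq)): "0"}
    # Heap entries: (weight, tiebreak counter, {symbol: code so far})
    heap = [(w, i, {sym: ""}) for i, (sym, w) in enumerate(freq.items())]
    heapq.heapify(heap)
    counter = len(heap)
    while len(heap) > 1:
        # Repeatedly merge the two lightest subtrees; rarer symbols end up deeper.
        w1, _, left = heapq.heappop(heap)
        w2, _, right = heapq.heappop(heap)
        merged = {s: "0" + c for s, c in left.items()}
        merged.update({s: "1" + c for s, c in right.items()})
        heapq.heappush(heap, (w1 + w2, counter, merged))
        counter += 1
    return heap[0][2]
```

For the string `"EEEEEEEEAAAAQZ"`, E gets a 1-bit code, A 2 bits, and Q and Z 3 bits each, for 22 bits in total instead of 112 bits at 8 bits per character.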

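PNG makes things easier for zlib by adding a per-line (per-scanline) filter system. Each scanline starts with one byte saying which of the five filters (None, Sub, Up, Average, Paeth) was applied to it. Filtering doesn't remove any data, but it makes the data more compressible: instead of raw byte values, it stores the difference from a predicted value, so smooth color gradients turn into repeated small numbers that the back-reference step and Huffman coding can pick up on.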
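Decoding reverses each filter by adding the prediction back, modulo 256. This sketch follows the five filter definitions for a single scanline; `paeth_predictor` and `unfilter_scanline` are hypothetical names, and `bpp` is assumed to be the number of bytes per complete pixel (at least 1).

```python
def paeth_predictor(a: int, b: int, c: int) -> int:
    """a = byte to the left, b = byte above, c = byte above-left."""
    p = a + b - c
    pa, pb, pc = abs(p - a), abs(p - b), abs(p - c)
    if pa <= pb and pa <= pc:
        return a
    if pb <= pc:
        return b
    return c


def unfilter_scanline(filter_type: int, line: bytes, prev: bytes, bpp: int) -> bytes:
    """Undo one filter. `prev` is the previous reconstructed line (zeros for the first)."""
    out = bytearray(len(line))
    for i, x in enumerate(line):
        a = out[i - bpp] if i >= bpp else 0
        b = prev[i]
        c = prev[i - bpp] if i >= bpp else 0
        if filter_type == 0:    # None
            pred = 0
        elif filter_type == 1:  # Sub
            pred = a
        elif filter_type == 2:  # Up
            pred = b
        elif filter_type == 3:  # Average
            pred = (a + b) // 2
        elif filter_type == 4:  # Paeth
            pred = paeth_predictor(a, b, c)
        else:
            raise ValueError(f"unknown filter type {filter_type}")
        out[i] = (x + pred) & 0xFF
    return bytes(out)
```

As an example, the gradient row 10, 20, 30, 40 is stored under the Sub filter as 10, 10, 10, 10, and `unfilter_scanline(1, bytes([10, 10, 10, 10]), bytes(4), 1)` recovers the original row.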

There are many more ingenious things that the PNG creators thought to include all those years ago: two-dimensional (Adam7) interlacing for slow networks, alpha channels, and palettes. They also built in ways to extend the format for new and changing technologies (new chunk types can be added, and decoders can safely skip ancillary chunks they don't understand), effectively ensuring PNG will be around for the foreseeable future. Sorry, JPEG fans.

Joe Perri