After years of waiting for Nvidia confusers to come under $100, the cost of embedded GPUs instead rose to thousands of doll hairs, putting embedded neural networks on a trajectory of being permanently out of reach. Both goog coral & Nvidia jetsons ceased production in 2021.

Theories vary on why embedded GPUs went the way of smart glasses, but lions suspect it's a repeat of the "RAM shortages" 40 years ago. They might be practical in a $140,000 car, but they're just too expensive to make.

If embedded neural networks ever become a thing again, they'll be on completely different platforms & much more expensive. Suddenly, the lion kingdom's stash of obsolete single board confusers was a lot more appealing.

Having said that, the lion kingdom did once spend $300 on a measly 600MHz gumstix which promptly got destroyed in a crash. Flying machines burned through a lot of cash by default, so losing $300-$500 on avionics wasn't a disaster.

The fastest embedded confuser in the lion kingdom is an Odroid XU4 from 2015. It was $100 in those days, now $50. It was enough for deterministic vision algorithms of the time but not convolutional neural networks.

No-one really knows how a Skydio tracks subjects. No-one reverse engineers anymore. They just accept what they're told. Instead of tracking generic skeletons the way the lion kingdom's studio camera does, a Skydio achieves the magic of tracking a specific person in a crowd. Some unsponsored vijeos show it does resort to GPS if the subject gets too far away, but it doesn't lose track when the subject faces away. It's not using face tracking.

The next theory is it's using a pose tracker to identify all the skeletons. The subject's skeleton defines a region of interest to test against a color histogram. Then it identifies all the skeletons in subsequent frames, uses the histogram to find a best match & possibly recalibrates the histogram. It's the only way the subject could be obstructed & viewed from behind without being lost. The most robust tracker would throw face tracking on top of that. It could prioritize pose tracking, face matching, & histogram matching.
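The histogram half of that theory fits in a few lines. This is a minimal sketch of the lion kingdom's guess, not how a Skydio actually works: it assumes a pose tracker already cut out one list of RGB pixels per skeleton's region of interest, & the names `color_histogram`, `pick_subject`, the 8 bin quantization, histogram intersection as the similarity score & the 0.1 recalibration rate are all assumptions for illustration.

```python
from typing import List, Optional, Sequence, Tuple

Pixel = Tuple[int, int, int]  # (r, g, b), each 0-255


def color_histogram(pixels: Sequence[Pixel], bins: int = 8) -> List[float]:
    """Normalized RGB histogram flattened to bins**3 entries (bins must divide 256)."""
    hist = [0.0] * (bins ** 3)
    step = 256 // bins
    for r, g, b in pixels:
        hist[(r // step) * bins * bins + (g // step) * bins + (b // step)] += 1.0
    total = sum(hist)
    return [h / total for h in hist] if total else hist


def intersection(h1: Sequence[float], h2: Sequence[float]) -> float:
    """Histogram intersection: 1.0 for identical normalized histograms, 0.0 for disjoint."""
    return sum(min(a, b) for a, b in zip(h1, h2))


def pick_subject(reference: List[float], candidates: Sequence[Sequence[Pixel]],
                 min_score: float = 0.5, blend: float = 0.1
                 ) -> Tuple[Optional[int], List[float]]:
    """Pick the skeleton ROI whose colors best match the subject.

    Returns (index of the best candidate or None, updated reference histogram).
    """
    hists = [color_histogram(c) for c in candidates]
    scores = [intersection(reference, h) for h in hists]
    if not scores or max(scores) < min_score:
        return None, reference  # subject lost: keep the old reference
    best = scores.index(max(scores))
    # Recalibrate: slowly blend the matched histogram into the reference
    updated = [(1 - blend) * r + blend * m for r, m in zip(reference, hists[best])]
    return best, updated
```

The slow blend lets the reference follow lighting changes without jumping to a different person after 1 bad frame.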

The pose tracker burns at least 4GB & is very slow. A face tracker & histogram burn under 300MB & are faster. Openpose can be configured to run in 2GB, but it becomes less accurate.

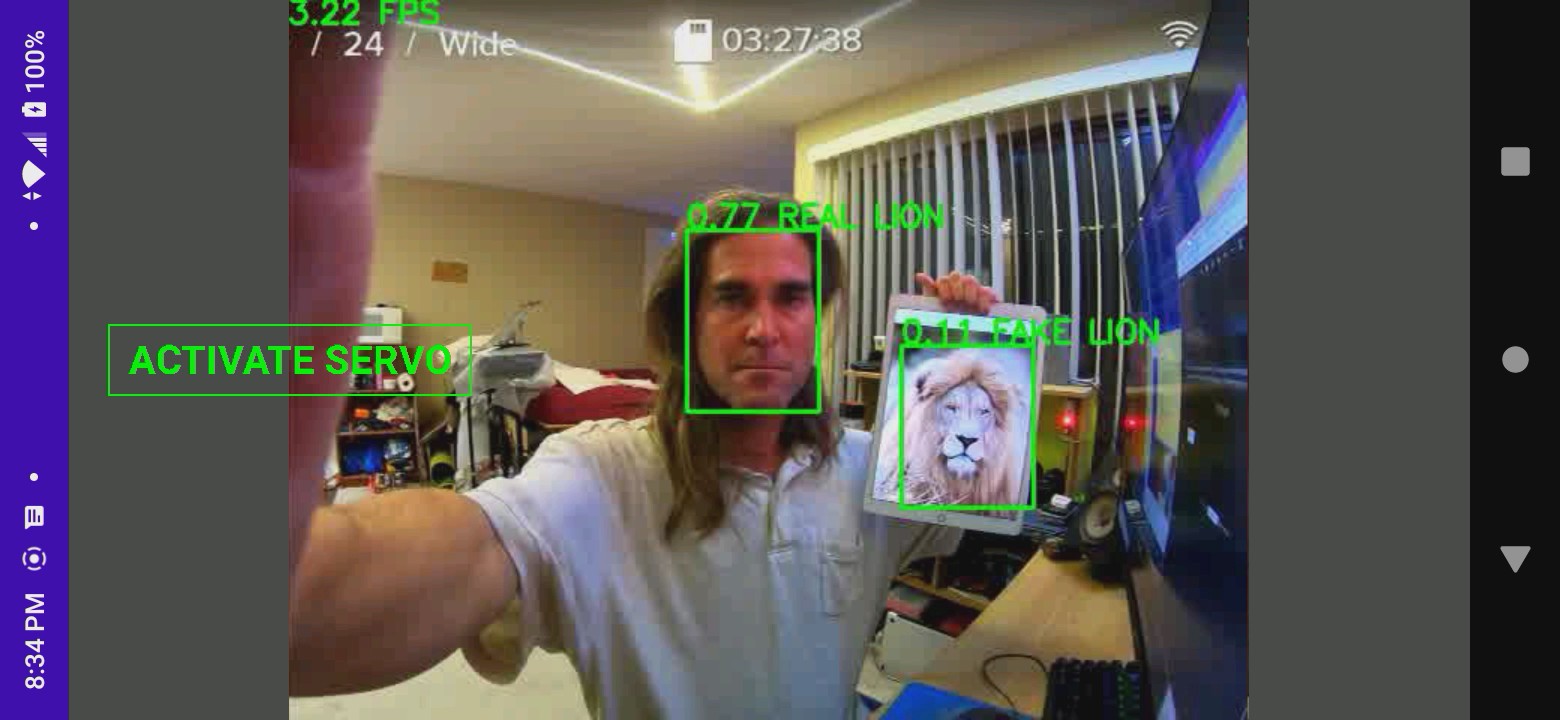

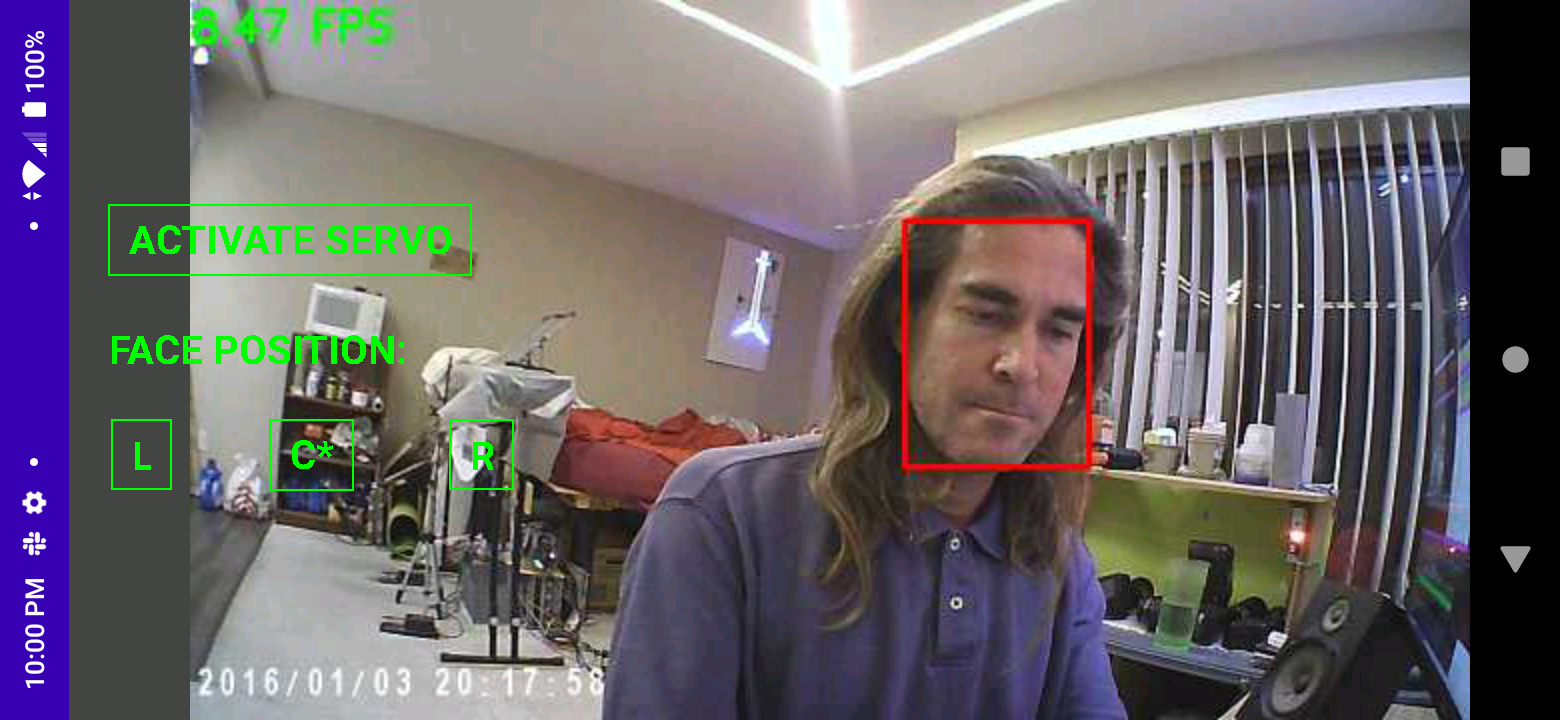

The lion kingdom had been throwing around the idea of face tracking on opencv for a while. Given the use case of a manually driven truck, the face is never going to be obscured from the camera the way it is from an autonomous copter, so a face tracker became the leading idea.

There are some quirks in bringing up the odroid. The lion kingdom used the minimal Ubuntu 20.04 image:

ubuntu-20.04-5.4-minimal-odroid-xu4-20210112.img

There's a hidden page of every odroid image:

https://dn.odroid.com/5422/ODROID-XU3/Ubuntu/

The odroid has a bad habit of spitting out dcache parity errors & crashing. It seems to be a problem with the power supply & the connector. The easiest solution was soldering the 5V leads rather than using a connector.

The odroid has 4 cores at 2GHz & 4 cores at 1.5GHz, compared to the raspberry pi's 4 cores at 1.5GHz. It has only 2GB of RAM compared to the pi's 4GB.

In recent years, ifconfig hasn't proven valuation-boosting enough, so the 'droid requires a new dance to bring up networking:

ip addr add 10.0.0.17/24 dev eth0

ip link set eth0 up

ip route add default via 10.0.0.1 dev eth0

Then disable NetworkManager & dhclient so they don't override the static setup:

mv /usr/sbin/NetworkManager /usr/sbin/NetworkManager.bak

mv /sbin/dhclient /sbin/dhclient.bak

There's a note about installing opencv on the odroid.

https://github.com/nikmart/sketching-with-NN/wiki/ODROID-XU4

The only opencv which supports face tracking is 4.x. The 4.x bits are different from what the note shows:

https://github.com/opencv/opencv/archive/refs/heads/4.x.zip

https://github.com/opencv/opencv_contrib/archive/refs/heads/4.x.zip

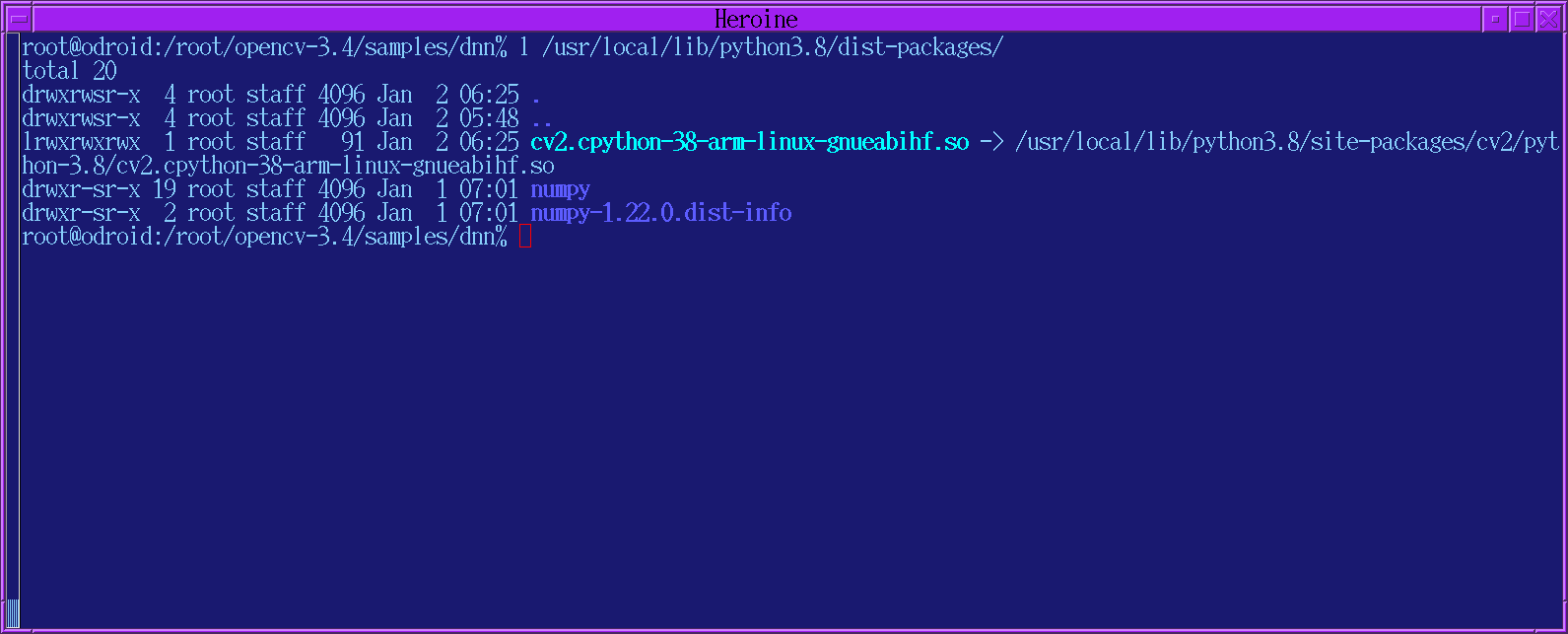

Lions stopped trying to use virtual python environments or containers. It ended up being too many extra steps to get right. This changes the configuration slightly. Opencv won't build any python bindings without numpy. Installing numpy involves:

apt-get install python3-pip

pip3 install numpy

The compilation is a bit different for 4.x:

cd ~/opencv-4.x/

mkdir build

cd build

cmake -D CMAKE_BUILD_TYPE=RELEASE -D CMAKE_INSTALL_PREFIX=/usr/local -D OPENCV_EXTRA_MODULES_PATH=~/opencv_contrib-4.x/modules -D ENABLE_NEON=ON -D ENABLE_VFPV3=ON -D WITH_OPENCL=ON -D WITH_JASPER=OFF -D BUILD_TESTS=OFF -D INSTALL_PYTHON_EXAMPLES=OFF -D BUILD_EXAMPLES=OFF ..

The 'droid didn't have enough memory for make -j8. It only got through make -j4 without crashing. Compiling the perf_tests required make -j1. This finished with 4GB of the SD card used. Opencv has version tags & version branches. The branches continue to get changed after the tags. The lion kingdom compiled the branches.
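The -j limit comes down to RAM rather than cores, so one way to pick it on any board is to cap the job count by available memory. A minimal sketch, assuming roughly 400MB per compiler process for opencv's heavier files; that per-job figure is a guess, not a measurement, & `pick_make_jobs` is a hypothetical helper.

```python
def mem_available_mb(meminfo_text: str) -> int:
    """Parse MemAvailable (reported in kB) out of /proc/meminfo text and return MB."""
    for line in meminfo_text.splitlines():
        if line.startswith("MemAvailable:"):
            return int(line.split()[1]) // 1024
    raise ValueError("MemAvailable not found")


def pick_make_jobs(meminfo_text: str, cores: int, mb_per_job: int = 400) -> int:
    """As many jobs as cores, but never more than available RAM can hold (at least 1)."""
    by_memory = mem_available_mb(meminfo_text) // mb_per_job
    return max(1, min(cores, by_memory))
```

On the board, `pick_make_jobs(open("/proc/meminfo").read(), os.cpu_count())` gives the number for make -j. It won't predict the perf_tests needing -j1; a few of those files apparently need far more than the assumed per-job budget.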

All previous opencv bits in /usr/local had to be deleted before the runtime linker resolved all the symbols.

It took creating a symbolic link for python to import the cv2 module.
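Where the link goes depends on the build & the python version. A minimal sketch, assuming the build left a compiled `cv2*.so` somewhere under the build directory & that the link belongs in the interpreter's site-packages; both locations are assumptions to check first, & `link_cv2` is a hypothetical helper.

```python
import glob
import os


def link_cv2(build_dir: str, target_dir: str) -> str:
    """Symlink the first compiled cv2 extension found under build_dir into target_dir."""
    pattern = os.path.join(build_dir, "**", "cv2*.so")
    matches = sorted(glob.glob(pattern, recursive=True))
    if not matches:
        raise FileNotFoundError(f"no cv2*.so under {build_dir}")
    source = os.path.abspath(matches[0])
    # A plain ".so" suffix is one of the extension suffixes Python accepts on Linux
    link = os.path.join(target_dir, "cv2.so")
    os.makedirs(target_dir, exist_ok=True)
    if os.path.lexists(link):
        os.remove(link)  # replace a stale link from a previous build
    os.symlink(source, link)
    return link
```

Something like `link_cv2(os.path.expanduser("~/opencv-4.x/build/lib"), site.getsitepackages()[0])` would cover the layout above, if the .so landed there.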

Then, the python interpreter could call it up.

root@odroid:/root% python3

>>> import cv2

>>> cv2.__version__

'3.4.1'

>>>

It's been 10 years of compiling opencv on different platforms, but there's never been any info on what any of the sample programs do or how to use them.

samples/dnn/face_detector has bits required for training the face detector.

https://docs.opencv.org/4.x/d0/dd4/tutorial_dnn_face.html has a white paper about the face detector but no steps for using the programs.

The journey begins by downloading the 2 models required by face_detect.py.

Face detection:

https://github.com/ShiqiYu/libfacedetection.train/raw/master/tasks/task1/onnx/yunet.onnx

Face recognition:

https://drive.google.com/file/d/1ClK9WiB492c5OZFKveF3XiHCejoOxINW/view?usp=sharing

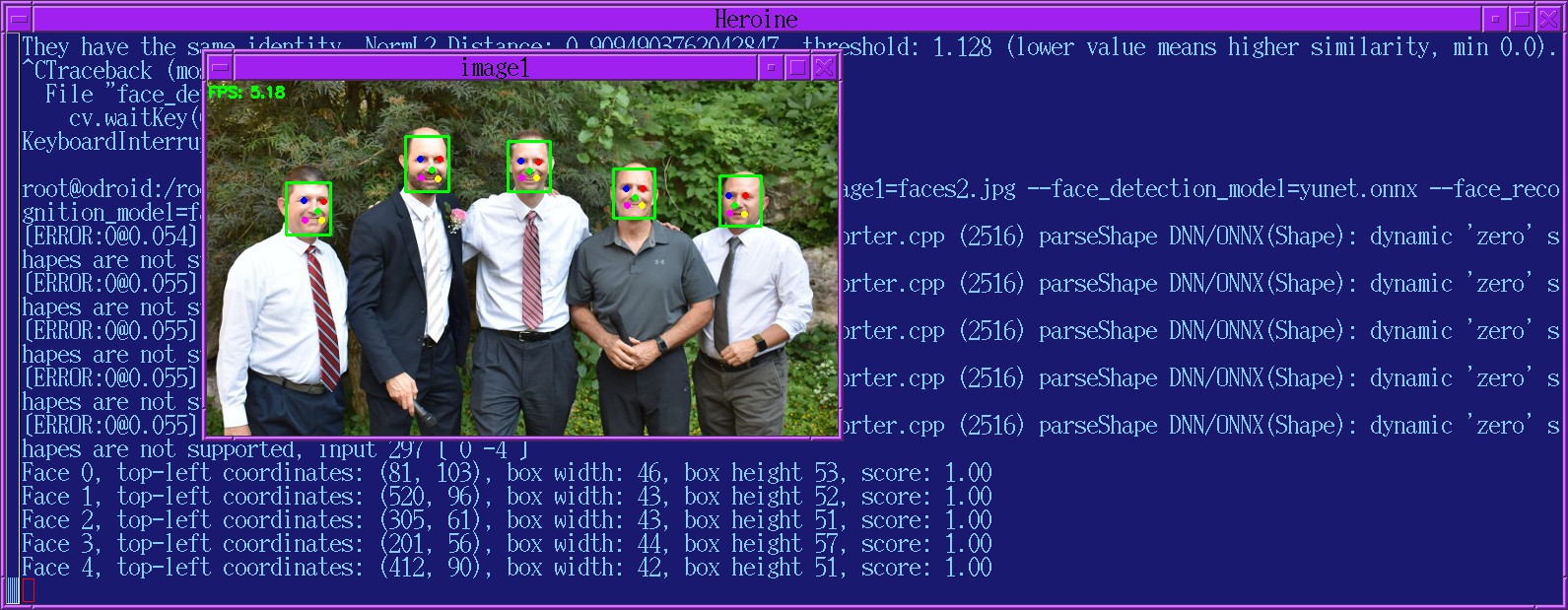

Then in theory, this should find all the faces in an image & show a window with the faces highlighted.

python3 face_detect.py --image1=faces.jpg --face_detection_model=yunet.onnx --face_recognition_model=face_recognizer_fast.onnx

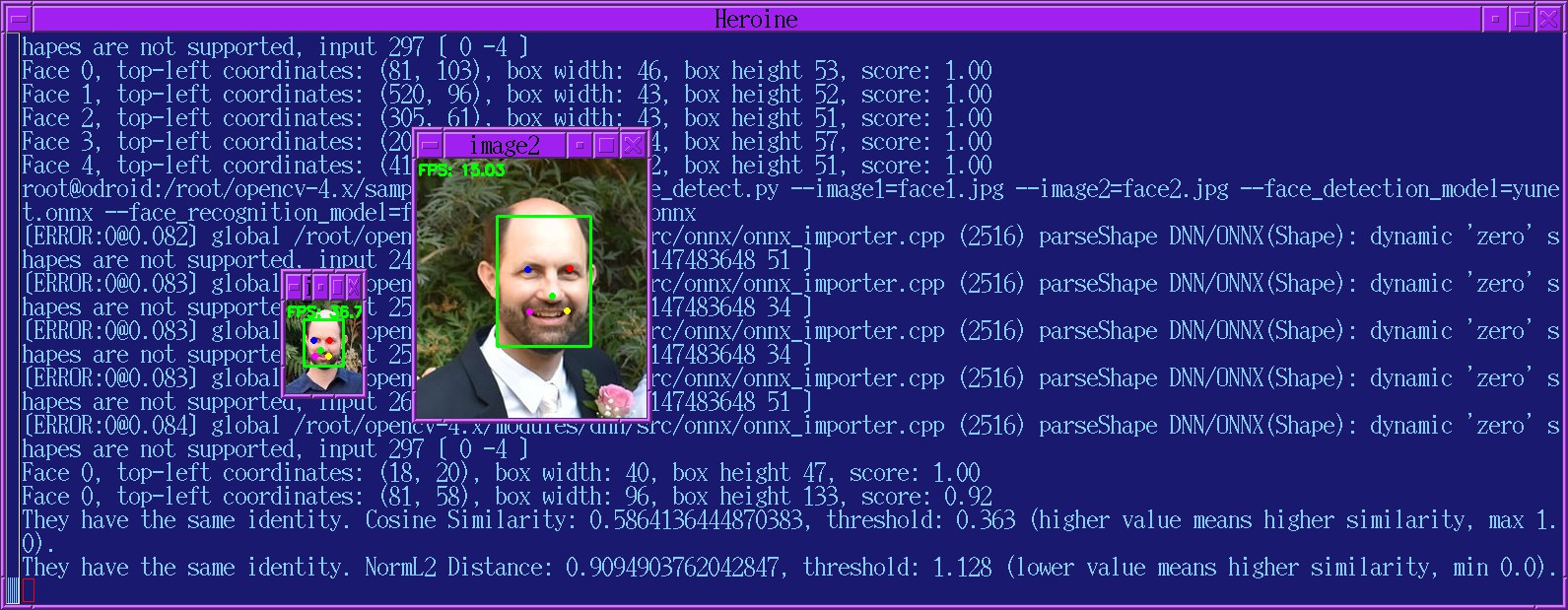

Given 2 images of a single face, this should show if it's the same person.

python3 face_detect.py --image1=face1.jpg --image2=face2.jpg --face_detection_model=yunet.onnx --face_recognition_model=face_recognizer_fast.onnx

Face detection hit 0.8fps on a 1920x1080 image & 5fps on 640x360. All speeds are at 1.5GHz. It gets faster as the image size gets smaller. It only uses the 5 highlighted features, rather than the mane or the beard. Lions know nothing about the science of face matching.

This detects all the faces on the webcam.

python3 face_detect.py --face_detection_model=yunet.onnx --face_recognition_model=face_recognizer_fast.onnx

Passing an image2 doesn't cause it to compare faces from the webcam, but this feature can be added easily.
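Comparing webcam faces against a reference image could look roughly like this. A minimal sketch, assuming an opencv 4.x new enough to have `cv2.FaceDetectorYN` & `cv2.FaceRecognizerSF`, whose `detect` returns (retval, faces) with faces None when nothing is found, & whose `alignCrop`, `feature` & `match` do the recognition. The detector & recognizer are passed in so the logic can be tried without a camera. The 0.363 cosine threshold is the one face_detect.py uses, as far as lions remember, so treat it as a starting point. `reference_feature` & `find_reference_face` are hypothetical helpers.

```python
def reference_feature(detector, recognizer, image):
    """Feature vector of the first face found in a reference image, or None."""
    h, w = image.shape[:2]
    detector.setInputSize((w, h))
    _, faces = detector.detect(image)
    if faces is None or len(faces) == 0:
        return None
    return recognizer.feature(recognizer.alignCrop(image, faces[0]))


def find_reference_face(detector, recognizer, frame, ref_feature,
                        threshold=0.363, dis_type=0):
    """Return (face row, score) of the best match at or above threshold, else (None, 0.0).

    dis_type 0 is meant to be cv2.FaceRecognizerSF_FR_COSINE (higher = more similar).
    """
    h, w = frame.shape[:2]
    detector.setInputSize((w, h))
    _, faces = detector.detect(frame)
    best, best_score = None, 0.0
    if faces is None:
        return best, best_score
    for face in faces:  # one row per face: box, 5 landmarks, score
        feature = recognizer.feature(recognizer.alignCrop(frame, face))
        score = recognizer.match(ref_feature, feature, dis_type)
        if score >= threshold and score > best_score:
            best, best_score = face, score
    return best, best_score
```

With the real models, the objects would come from `cv2.FaceDetectorYN.create("yunet.onnx", "", (320, 320))` & `cv2.FaceRecognizerSF.create("face_recognizer_fast.onnx", "")`, & each webcam frame goes through `find_reference_face`. Every detected face costs a recognizer pass, which matters on the odroid.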

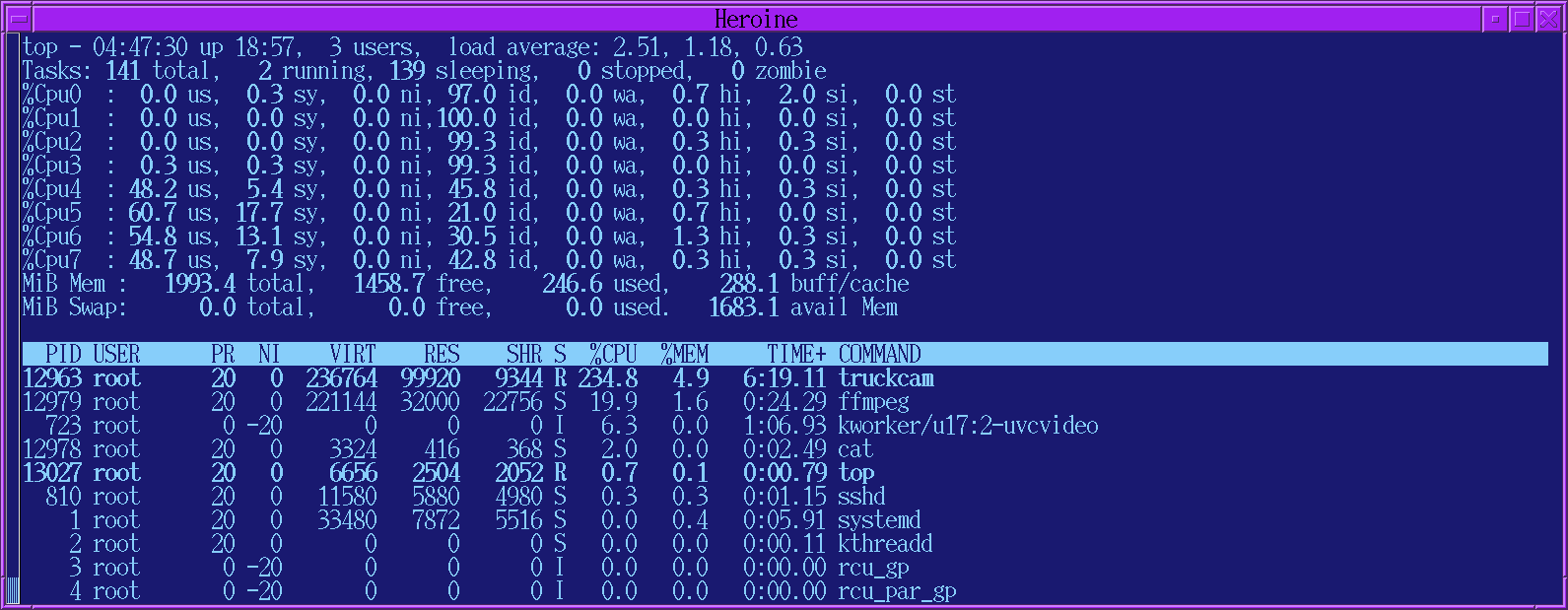

Opencv defaults to 8 cores on the odroid. Adding cv.setNumThreads(4) to face_detect.py was required for faster results. CPU usage was 460% with 8 cores & 300% with 4 cores, while the frame rate was 5fps with 8 cores & 6fps with 4 cores at 1.5GHz. Running 4 cores at 2GHz got it to 7fps with 640x480. It has trouble accessing memory from all 8 cores.
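Finding the best thread count is easier with a small timing harness than by editing face_detect.py over & over. A minimal sketch: `time_fps` is a hypothetical helper, the detection call & thread setter are passed in (on the board they'd be a wrapper around `detector.detect(frame)` & `cv.setNumThreads`), & the optional CPU pinning uses Linux's `os.sched_setaffinity`. On the XU4 the 2GHz A15 cores are usually numbered 4 to 7, but check /proc/cpuinfo before relying on that.

```python
import os
import time


def time_fps(work, set_threads, thread_counts, frames=20, cpus=None):
    """Return {thread_count: frames per second} for work() under each thread count.

    cpus: optional set of CPU ids to pin this process to while timing (Linux only).
    """
    original = None
    if cpus is not None:
        original = os.sched_getaffinity(0)
        os.sched_setaffinity(0, cpus)
    try:
        results = {}
        for n in thread_counts:
            set_threads(n)
            start = time.perf_counter()
            for _ in range(frames):
                work()
            elapsed = time.perf_counter() - start
            results[n] = frames / elapsed if elapsed > 0 else float("inf")
        return results
    finally:
        if original is not None:
            os.sched_setaffinity(0, original)  # undo the pinning
```

For the test above, something like `time_fps(lambda: detector.detect(frame), cv.setNumThreads, [8, 4], cpus={4, 5, 6, 7})` would compare 8 threads against 4 threads pinned to the big cores.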

The next step was ingesting video from the gopro. Capturing from the gopro is a multi-step process. Once HDMI is plugged in, the interface is shown exclusively on HDMI. You have to navigate to the camera icon with the power button & then press record to get a preview over HDMI. You have to press record again to record while getting an HDMI preview. The image is pillarboxed. Since the gopro's own screen goes dark, a wifi display is required.

The need for wifi & the lack of 8 cores justified a test with the raspberry pi.

The lion kingdom used the latest raspberry pi image on a 4B.

2021-10-30-raspios-bullseye-armhf-lite.zip

Compilation was the same as the odroid.

The result was 6fps with 640x480. It was a bee's dick faster with the frequency scaling governor at performance.
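Setting the governor to performance is a sysfs write for every core. A minimal sketch, assuming the standard cpufreq layout under /sys/devices/system/cpu & root privileges; `set_governor` is a hypothetical helper & the `root` parameter only exists so it can be pointed at a scratch directory.

```python
import glob
import os


def set_governor(governor="performance", root="/sys/devices/system/cpu"):
    """Write governor to every cpuN/cpufreq/scaling_governor; return how many were set."""
    pattern = os.path.join(root, "cpu[0-9]*", "cpufreq", "scaling_governor")
    paths = sorted(glob.glob(pattern))
    for path in paths:
        with open(path, "w") as f:
            f.write(governor)
    return len(paths)
```

It doesn't survive a reboot, so it would go in rc.local alongside the networking.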

The very latest raspberry pi at 1.5GHz wasn't as fast as a 7-year-old odroid at 2GHz.

It's a bit frustrating that both these embedded confusers are less powerful than the lion kingdom's phone, which has 3GB RAM & a Mediatek Helio P22 MT6762. The mediatek is the same 8-core deal with 4 cores at 2.3GHz & 4 cores limited to 1.4GHz. It might be marginally faster than the Odroid, at the expense of all the extra hoops. It would require an extra bluetooth board for servo control. An app is required no matter what for viewing HDMI. It would eliminate the access point. It might be thermally limited. Every Stanford undergrad develops using an opencv SDK on android.

Despite the advantages, the problem with an android face tracker is the lion kingdom isn't going to upgrade phones for at least 4 years & the odroid bits are applicable to an embedded GPU. An embedded GPU is highly likely in the next 4 years, rendering all the android bits useless.

Lion-priced phones in the last 10 years have gone from 1GB RAM to 3GB RAM, 1GHz to 2.3GHz CPUs, & 16GB storage to 32GB storage. Lions just can't afford phones which will ever be capable of ideal tracking, while lions see embedded GPUs becoming much cheaper than phones in 10 years. The lack of any future upgrades & the need for a common code base favors the odroid.

Hostapd, rfkill & dnsmasq were all installable by apt on the odroid. The lion kingdom appended a small bit to the end of /etc/dnsmasq.conf:

interface=wlan0

except-interface=lo

bind-interfaces

server=8.8.8.8

domain-needed

bogus-priv

dhcp-range=10.0.3.50,10.0.3.150,12h
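The dhcp-range has to sit inside the subnet wlan0 gets in rc.local (10.0.3.1/24) without including the access point's own address. A quick sanity check with Python's `ipaddress` module, as a sketch; `check_dhcp_range` is a hypothetical helper & only the plain `start,end,lease` form of dhcp-range is handled.

```python
import ipaddress


def check_dhcp_range(dnsmasq_conf: str, interface_cidr: str) -> bool:
    """True if every dhcp-range lies in the interface's subnet and skips its address."""
    iface = ipaddress.ip_interface(interface_cidr)
    found = False
    for line in dnsmasq_conf.splitlines():
        line = line.strip()
        if not line.startswith("dhcp-range="):
            continue
        found = True
        fields = line.split("=", 1)[1].split(",")
        start, end = (ipaddress.ip_address(f) for f in fields[:2])
        if start not in iface.network or end not in iface.network:
            return False  # clients would get addresses outside wlan0's subnet
        if start <= iface.ip <= end:
            return False  # the pool could hand out the access point's own address
    return found
```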

Then the /etc/hostapd/hostapd.conf was:

ctrl_interface=/var/run/hostapd

ctrl_interface_group=0

interface=wlan0

driver=nl80211

ssid=ODROID

hw_mode=g

ieee80211d=1

ieee80211n=1

ieee80211ac=1

channel=6

#channel=36

country_code=US

wmm_enabled=0

macaddr_acl=0

auth_algs=1

wpa=2

wpa_group_rekey=65535

wpa_passphrase=abcdef

wpa_key_mgmt=WPA-PSK

wpa_pairwise=TKIP CCMP

rsn_pairwise=CCMP
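hostapd is picky about a few of these values. A minimal sketch of a pre-flight check, assuming the simple key=value format above: WPA passphrases must be 8 to 63 characters, so a short placeholder like abcdef would need lengthening before hostapd accepts it, & hw_mode=g only covers the 2.4GHz channels 1 to 14. `check_hostapd` is a hypothetical helper, not part of hostapd.

```python
def check_hostapd(conf_text: str) -> list:
    """Return a list of problems found in hostapd.conf text; empty means none found."""
    conf = {}
    for line in conf_text.splitlines():
        line = line.strip()
        if line and not line.startswith("#") and "=" in line:
            key, value = line.split("=", 1)
            conf[key] = value  # later keys override earlier ones
    problems = []
    passphrase = conf.get("wpa_passphrase")
    if passphrase is not None and not 8 <= len(passphrase) <= 63:
        problems.append(f"wpa_passphrase is {len(passphrase)} chars, needs 8-63")
    channel = conf.get("channel", "")
    if conf.get("hw_mode") == "g" and channel.isdigit() and not 1 <= int(channel) <= 14:
        problems.append("hw_mode=g needs a 2.4GHz channel (1-14)")
    return problems
```

Swapping to the commented out channel 36 would also mean switching hw_mode to a, which this check catches.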

The odroid started dnsmasq automatically. The mane wrinkle with bringing up an access point on the odroid was the need to disable /etc/init.d/hostapd & then start hostapd in /etc/rc.local:

rfkill unblock wlan

ip link set wlan0 up

ip addr add 10.0.3.1/24 dev wlan0

sleep 5

start-stop-daemon --start --oknodo --quiet --exec /usr/sbin/hostapd -- /etc/hostapd/hostapd.conf&

The lion kingdom used a 10-year-old RTL8192, which to this day has been the only mass-produced wifi dongle & also has never been fully supported.

The mane problem was that starting hostapd in an init script caused:

Register frame command failed (type=176): ret=-114 (Operation already in progress)

Starting it from a console usually worked.

The only workaround was using the start-stop-daemon command to start it in rc.local, & the order of the network startup also mattered. Iptables & ip forwarding had to be configured after hostapd. Eth0, the default route & resolv.conf had to be configured before hostapd.
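The ordering rule is easier to keep straight when rc.local is generated from 3 named phases. A minimal sketch, assuming rc.local is generated rather than hand edited; the eth0 & wlan0 lines come from above, while `RC_LOCAL_PHASES`, `render_rc_local`, the resolv.conf line & the ip forwarding/iptables commands are hypothetical stand-ins since the exact commands weren't listed.

```python
# Phases are emitted in this order; commands within a phase keep their order.
RC_LOCAL_PHASES = [
    ("before hostapd", [
        "ip addr add 10.0.0.17/24 dev eth0",
        "ip link set eth0 up",
        "ip route add default via 10.0.0.1 dev eth0",
        "echo nameserver 8.8.8.8 > /etc/resolv.conf",  # assumed resolver setup
    ]),
    ("hostapd", [
        "rfkill unblock wlan",
        "ip link set wlan0 up",
        "ip addr add 10.0.3.1/24 dev wlan0",
        "sleep 5",
        "start-stop-daemon --start --oknodo --quiet --exec /usr/sbin/hostapd"
        " -- /etc/hostapd/hostapd.conf &",
    ]),
    ("after hostapd", [
        "sysctl -w net.ipv4.ip_forward=1",  # assumed forwarding setup
        "iptables -t nat -A POSTROUTING -o eth0 -j MASQUERADE",  # assumed NAT rule
    ]),
]


def render_rc_local(phases=RC_LOCAL_PHASES) -> str:
    """Build an rc.local script with a comment header per phase."""
    lines = ["#!/bin/sh"]
    for name, commands in phases:
        lines.append(f"# --- {name} ---")
        lines.extend(commands)
    lines.append("exit 0")
    return "\n".join(lines) + "\n"
```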

Helas, the RTL8192 stuttered more as the frame rate got lower. It seemed to be a power management issue.

The 1st vijeo through the phone interface revealed a much slower frame rate & much higher latency than face_detect.py. The 7fps counted face detection but not face recognition or decoding frames from HDMI. HDMI decode takes 37ms. Sending frames to the phone takes 10ms. Face detection takes 125ms. Face recognition takes 100ms. The face recognizer has to run once for every face in the frame.
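The stage timings add up like this. A small sketch of the arithmetic using only the numbers measured above, assuming the stages run back to back & the recognizer runs once per face; `frame_ms` & `fps` are just illustrative names.

```python
HDMI_DECODE_MS = 37
SEND_TO_PHONE_MS = 10
DETECT_MS = 125
RECOGNIZE_MS = 100  # paid once per face in the frame


def frame_ms(faces: int, recognize: bool = True) -> int:
    """Per-frame latency when every stage runs sequentially."""
    total = HDMI_DECODE_MS + SEND_TO_PHONE_MS + DETECT_MS
    if recognize:
        total += RECOGNIZE_MS * faces
    return total


def fps(ms: float) -> float:
    """Frames per second for a given per-frame latency."""
    return 1000.0 / ms
```

With 1 face that's 272ms, about 3.7fps. Every extra face adds another 100ms, while skipping recognition leaves 172ms, about 5.8fps. The 47ms of HDMI decode & sending is the single threaded part.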

It wasn't using a lot of CPU time. 47 of the 272ms are single threaded. The size of the reference image made no difference. The size of the face detection image made a difference. 400x300 boosts it to 4fps, but faces have to be closer. The camera might need a higher angle to get closer faces or there might be cropping of everything below the horizon. The real tradeoff is useful distance from camera vs frame rate.
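Shrinking only the detection image while keeping the full frame for display means scaling the boxes back up afterwards. A minimal sketch, assuming detection runs on a resized copy of the frame; `detection_size` & `scale_face_row` are hypothetical helpers, & the 15 value row layout (box, 5 landmark points, score) is how opencv's FaceDetectorYN reports each face.

```python
def detection_size(frame_w: int, frame_h: int, target_w: int):
    """Detection image size: target width, same aspect ratio as the frame."""
    return target_w, round(frame_h * target_w / frame_w)


def scale_face_row(row, scale: float):
    """Map a face row found on the shrunken image back to full-frame pixels.

    row: x, y, w, h, then 5 (x, y) landmark pairs, then a confidence score.
    """
    coords = [v * scale for v in row[:14]]
    return coords + list(row[14:])  # the score is not a coordinate
```

A 1920x1080 frame detected at `detection_size(1920, 1080, 640)`, or 640x360, gets its boxes back with `scale_face_row(row, 1920 / 640)`.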

Most of the usage might be with just 1 detected face, in which case it might be possible to ignore face recognition completely or just go for the biggest face. No-one gets between the lion & the camera. There are other ways to recognize faces, like histogram matches.
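Going for the biggest face is a one-liner over the detector output. A minimal sketch, assuming the same row layout as opencv's FaceDetectorYN output (x, y, w, h first, score last); `biggest_face` & the 0.9 score cutoff are hypothetical choices.

```python
def biggest_face(faces, min_score=0.9):
    """Return the face row with the largest box area, ignoring low-confidence rows."""
    if faces is None:
        return None  # the detector reports no faces as None
    confident = [f for f in faces if f[-1] >= min_score]
    return max(confident, key=lambda f: f[2] * f[3], default=None)
```

Skipping the recognizer this way removes the 100ms per face from every frame.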

Unfortunately, the gopro 7 has a 2-second lag on its HDMI output. The lag isn't present in the viewfinder. It's caused by HDMI being fed the stabilized output while the viewfinder is unstabilized. Newer 'pros fixed the lag but of course are bricks. Older 'pros don't lag but don't have stabilization. The decision was made to use a 10-year-old 808 #13 keychain cam for tracking. They just happened to work as webcams with no latency.

With no face recognition & scaling to 640x360, the frame rate went to 8.5fps. Wifi stuttering on the RTL8192 was less at 8.5fps than at 4fps.

Unfortunately, the 808 cam tended to lock up on the odroid & wifi continued to have problems. The lion kingdom bought the very last TP-LINK WN823N, the only wifi dongle cheaper than a raspberry pi. This one used an RTL8192EU instead of the 10-year-old RTL8192CU, but it didn't work at all with the 5.4 kernel driver. It required downloading a hacked driver from https://github.com/Mange/rtl8192eu-linux-driver. This one almost never accepted hostapd, although it didn't stutter. It seems wifi on the RTL8192 will never be fully supported on Linux.

With the problems in the webcam & wifi, the decision was finally made to abandon the odroid & use the raspberry pi 4B. The odroid might have been useful after a long period of dedicated debugging. It will be required if embedded GPUs of the future still don't have wifi.

Frame rate on the odroid while streaming on the phone app:

Frame rate on the raspberry pi while streaming on the phone app:

The slower raspberry pi is good enough, but lions would prefer the maximum possible frame rate.

lion mclionhead
