Made a new set of images with the lion in difficult lighting & at the edges of the frame to try to bake the lens distortion into the model. The trick is to capture training video with tracking off, otherwise the tracker keeps the lion in the center.

It was assumed efficientdet_lite0 is mirror image independent. The lion kingdom assumed distance affects parallax distortion, so the model is not scale independent. The full 360 degrees of a lion must be captured at the edges & center of the frame & from various distances. There were a few images with fake mane hair.

It might be more efficient to defish the lens, but lions so far have preferred to do as much as possible in the model. YOLOv5x6 labeled 1000 images.
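The labeling step hands boxes from one model to the trainer of another, so the box format usually has to change. A minimal sketch, assuming the labels came out as YOLOv5 text lines (`class x_center y_center width height`, all normalized to 0..1) & the trainer wants pixel corner coordinates. The function name `yolo_to_pixel_box` is made up for illustration & is not from the lion's scripts.

```python
def yolo_to_pixel_box(line, img_w, img_h):
    """Convert one YOLO label line to (class_id, xmin, ymin, xmax, ymax) in pixels.

    Assumes the line is 'class xc yc w h' with normalized values.
    A trailing confidence column, if present, is ignored.
    """
    fields = line.split()
    class_id = int(fields[0])
    xc, yc, w, h = (float(v) for v in fields[1:5])

    # Center/size to corners, then scale to pixels.
    xmin = round((xc - w / 2) * img_w)
    ymin = round((yc - h / 2) * img_h)
    xmax = round((xc + w / 2) * img_w)
    ymax = round((yc + h / 2) * img_h)

    # Boxes at the edge of the frame can spill slightly outside it, so clamp.
    xmin = max(0, min(img_w, xmin))
    xmax = max(0, min(img_w, xmax))
    ymin = max(0, min(img_h, ymin))
    ymax = max(0, min(img_h, ymax))
    return class_id, xmin, ymin, xmax, ymax


if __name__ == "__main__":
    print(yolo_to_pixel_box("0 0.5 0.5 0.25 0.5", 640, 480))
```

The clamping matters here because so many of the training images deliberately put the lion at the edge of the frame.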

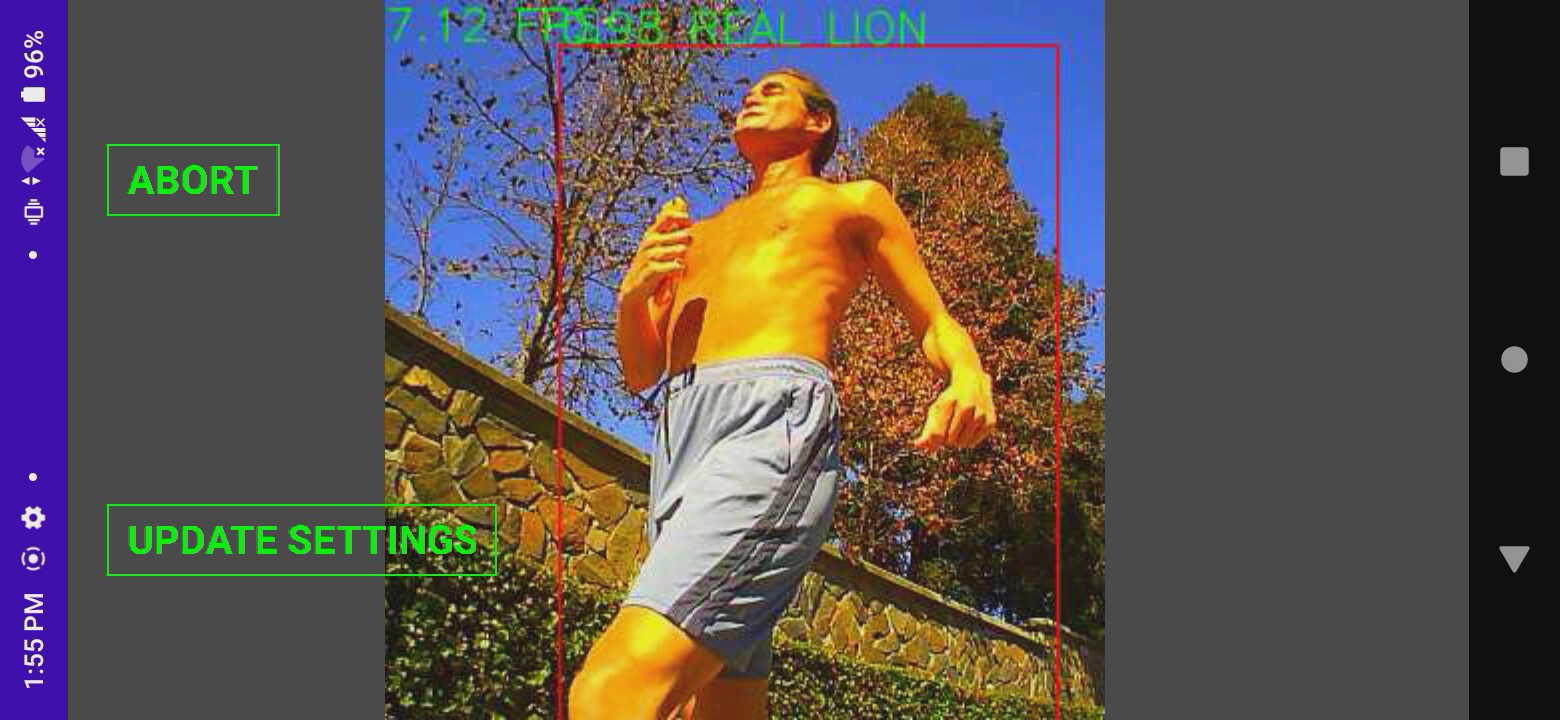

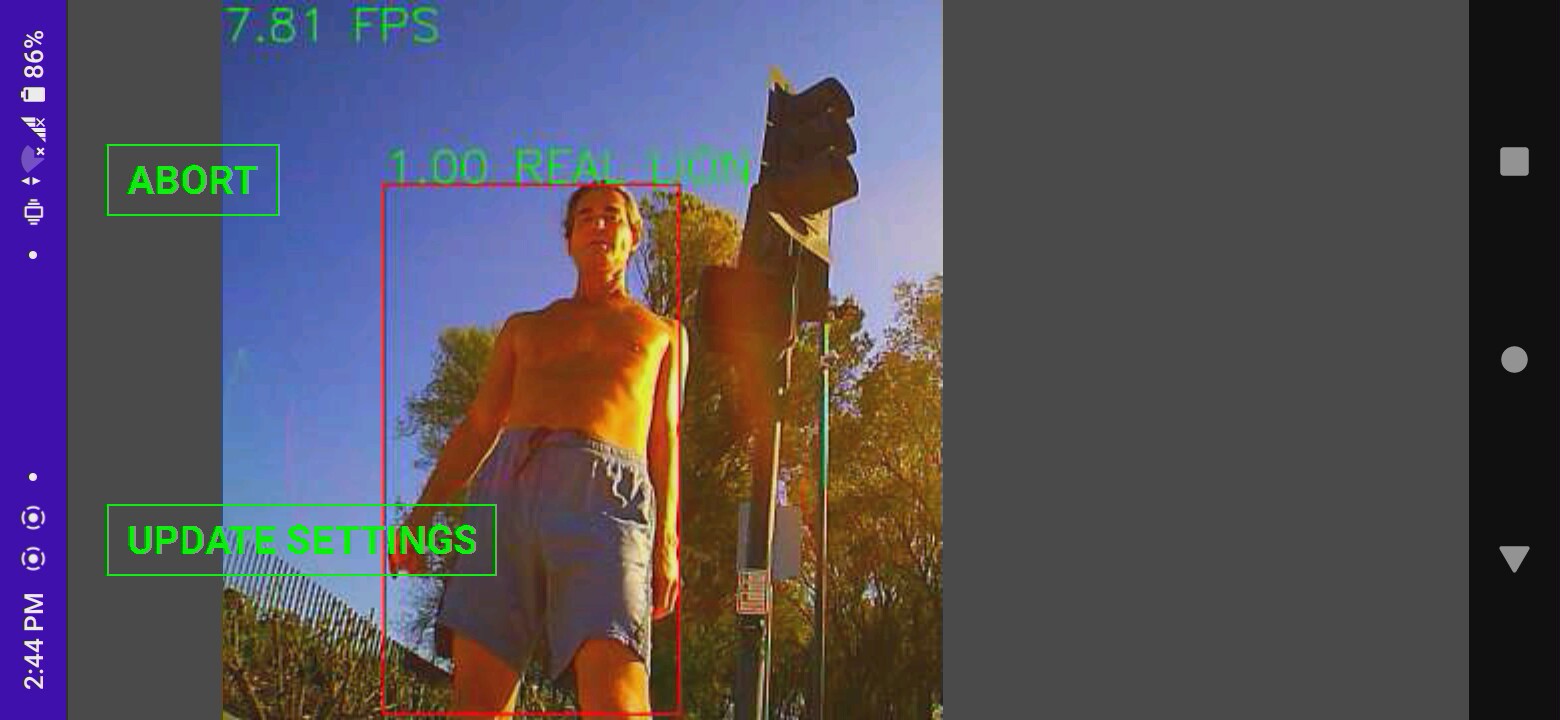

After 100 epochs of training with model_maker.py, another round of tracking with efficientdet_lite0 went a lot better. The tree detection was all gone. It handled difficult lighting about as well as can be & definitely better than face tracking.

Detecting lions in the edges of the frame was still degraded, but just good enough for it to track. It was another point in favor of defishing.
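For reference, defishing amounts to pushing each pixel outward by an amount that grows with its distance from the center. A rough sketch, assuming an ideal equidistant fisheye (radius = f * angle) remapped to a rectilinear projection (radius = f * tan(angle)). The real lens model, the focal length `f` in pixels & the function name `defish_point` are all assumptions, not measured from the lion's camera.

```python
import math


def defish_point(x, y, cx, cy, f):
    """Map a pixel from an assumed equidistant fisheye image to rectilinear coordinates.

    (cx, cy) is the optical center & f is the focal length in pixels.
    """
    dx, dy = x - cx, y - cy
    r = math.hypot(dx, dy)
    if r == 0:
        return float(cx), float(cy)  # the center doesn't move

    theta = r / f  # angle off the optical axis under the equidistant model
    if theta >= math.pi / 2:
        raise ValueError("point is 90 degrees or more off axis, no rectilinear image")

    scale = f * math.tan(theta) / r  # how far the point is pushed outward
    return cx + dx * scale, cy + dy * scale


if __name__ == "__main__":
    print(defish_point(320, 240, 320, 240, 300))  # center of the frame
    print(defish_point(620, 240, 320, 240, 300))  # near the right edge
```

Points near the center barely move while points near the edge get stretched a lot, which is consistent with detection being degraded mainly at the edges.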

Misdetections were extremely rare. Fortunately, only having to detect a running lion is a lot simpler than detecting lions in all poses. Results were definitely better at 100 epochs than at 30 epochs. Overfitting might benefit such a simple detector.

Lessons learned: Android doesn't capture the screen if the power button is pressed, but does capture the screen after the 30 minute timeout. YOLOv5 is a viable way of labeling training data for simpler models. In the old days, embedded GPUs could have run YOLOv5 directly of course & that would have been the most robust tracker of all. There may still be an advantage to training a simpler model so it can be combined with face recognition.

lion mclionhead
