Maybe not true lidar, but the FOV is much smaller (3.6 degrees), which is better for my case.

Edit: actually it's a 2-degree FOV, which is even better.

So for a long time I assumed that the VL53L0X was a lidar sensor. Some sources say it is, most don't. But a 25-degree FOV is not ideal for something that is supposed to be "lidar", i.e. a single-point laser. The TFmini-s is a sensor I already had from another project that I stopped working on.

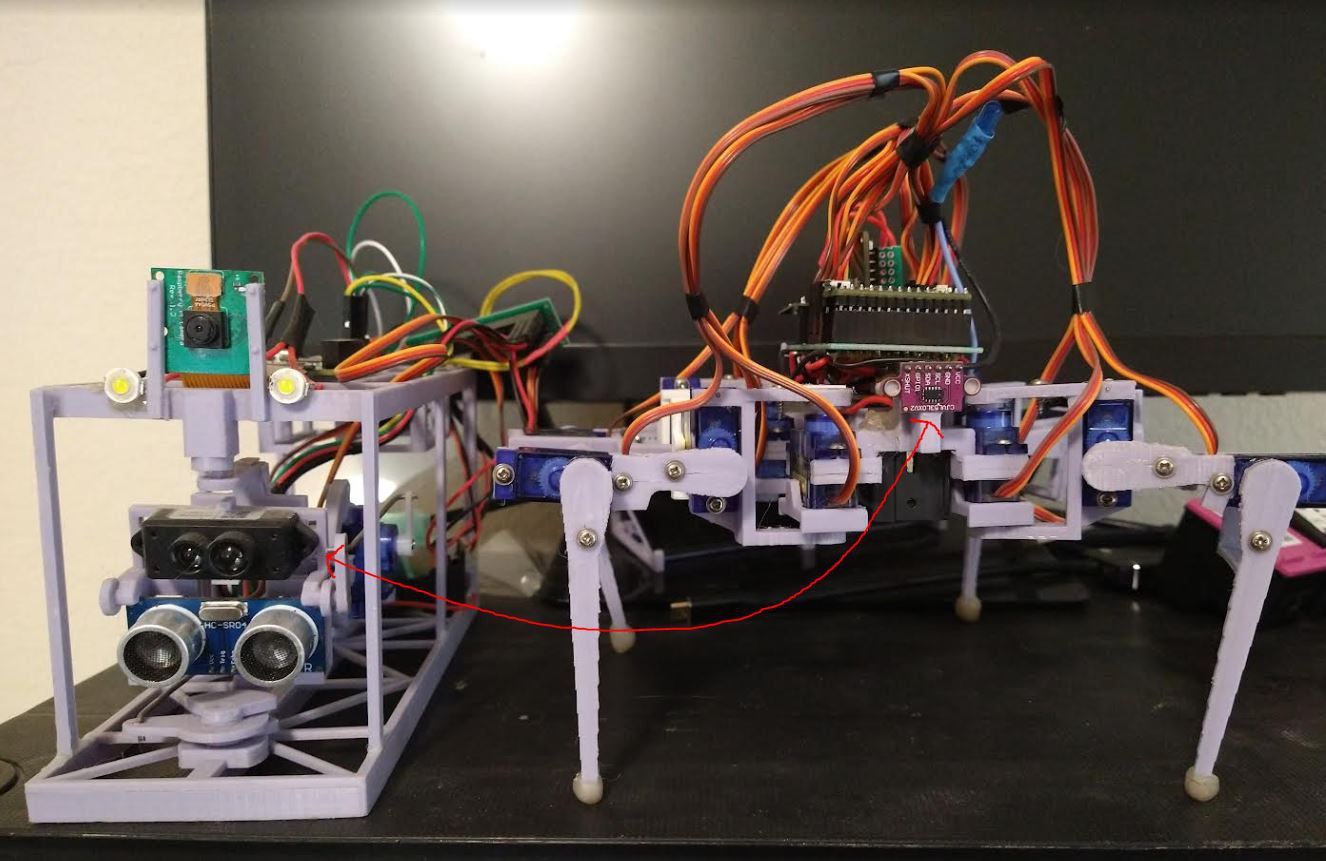

So I stole that sensor and put it on here. I had forgotten about the UART/I2C stuff. On the left side I'm using a Pi to do all the computation, with an Arduino just to control the servos (less problematic on boot). In that particular code base I had found some sample code for the TFmini-s, provided in a raspberrypi.com thread. That code talked to the sensor over serial (UART). Initially (yesterday) I tried to use the Benewake GUI to switch the sensor from UART to I2C using the hex commands mentioned in this thread, but I kept getting "wrong command". Before going too deep, I decided to look at how I had gotten it working on the Raspberry Pi with the sample code mentioned above. So I figured out how to convert that Python serial write/read code to Arduino, and it worked out.
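For reference, the serial read side boils down to pulling fixed 9-byte frames off the UART. Below is a minimal Python sketch of that, not the sample code from the thread: it assumes the TFmini-s is in its default UART output mode, sending the standard Benewake frame (two 0x59 header bytes, distance low/high, signal strength low/high, temperature low/high, then a checksum equal to the low 8 bits of the sum of the first 8 bytes). `TfminiParser` and `parse_stream` are hypothetical names.

```python
# Minimal sketch, assuming the standard TFmini-s 9-byte UART frame:
# 0x59 0x59 Dist_L Dist_H Strength_L Strength_H Temp_L Temp_H Checksum
HEADER = 0x59
FRAME_LEN = 9


class TfminiParser:
    """Incremental parser: feed bytes as they arrive, get readings back."""

    def __init__(self):
        self.buf = bytearray()

    def feed(self, byte):
        """Feed one byte (int 0-255). Returns (distance_cm, strength) once a
        complete, checksum-valid frame has arrived, otherwise None."""
        if len(self.buf) < 2:
            # Sync on the two header bytes; anything else restarts the search.
            if byte == HEADER:
                self.buf.append(byte)
            else:
                self.buf.clear()
            return None
        self.buf.append(byte)
        if len(self.buf) < FRAME_LEN:
            return None
        frame, self.buf = self.buf, bytearray()  # reset for the next frame
        # Checksum: low 8 bits of the sum of the first 8 bytes.
        if sum(frame[:8]) & 0xFF != frame[8]:
            return None  # corrupted frame: drop it and resync on the next header
        distance_cm = frame[2] | (frame[3] << 8)
        strength = frame[4] | (frame[5] << 8)
        return distance_cm, strength


def parse_stream(data):
    """Run a bytes object through the parser and collect every valid reading."""
    parser = TfminiParser()
    readings = []
    for b in data:  # iterating bytes yields ints
        result = parser.feed(b)
        if result is not None:
            readings.append(result)
    return readings
```

On the Pi the bytes would come from pyserial, e.g. `serial.Serial("/dev/serial0", 115200)` (the port name is an assumption; 115200 baud is the sensor's documented default). Because `feed()` handles one byte at a time, the same state machine translates directly to an Arduino `loop()` that reads from a hardware serial port.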

Initially I was going to use the TFmini-s on its own, but I decided to keep both sensors on there since I have the pins and I already know how to interface with both of them. The main reason to keep the VL53L0X is close-range scanning: the TFmini-s is good for 1 ft (30 cm) and beyond, so it can't measure anything closer than 12 inches, whereas the ToF ranging module can measure below 12".
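Since the two sensors cover different ranges, one simple way to combine them at each scan step is to switch on the 30 cm minimum. This is a hypothetical sketch, not a rule from the project; the function name, the cutoff constant, and the fallback behaviour are assumptions.

```python
# Hypothetical fusion rule: trust the VL53L0X below the TFmini-s minimum
# range, and the narrow-beam TFmini-s at and beyond it.
TFMINI_MIN_CM = 30  # minimum range quoted in the text above


def fused_distance_cm(vl53_cm, tfmini_cm):
    """Pick the distance to use for one scan step. Either argument may be
    None (no reading). Returns None if nothing usable is available."""
    if vl53_cm is not None and vl53_cm < TFMINI_MIN_CM:
        return vl53_cm  # close range: only the ToF module is valid here
    if tfmini_cm is not None and tfmini_cm >= TFMINI_MIN_CM:
        return tfmini_cm  # far range: prefer the narrow 2-3.6 degree beam
    # Fallback: the wider-cone VL53L0X reading beyond 30 cm, or None.
    return vl53_cm
```

The fallback returns the VL53L0X reading when the TFmini-s has nothing usable, which may or may not be what you want for mapping, since that reading comes from a much wider cone.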

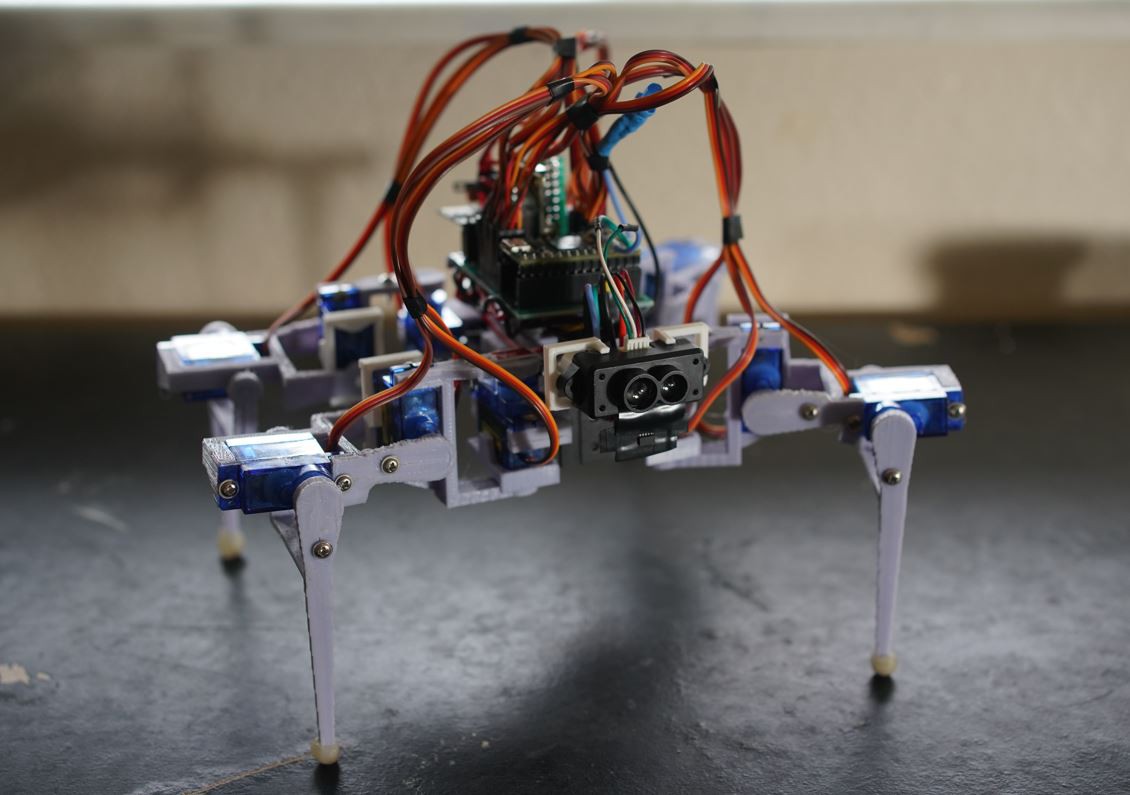

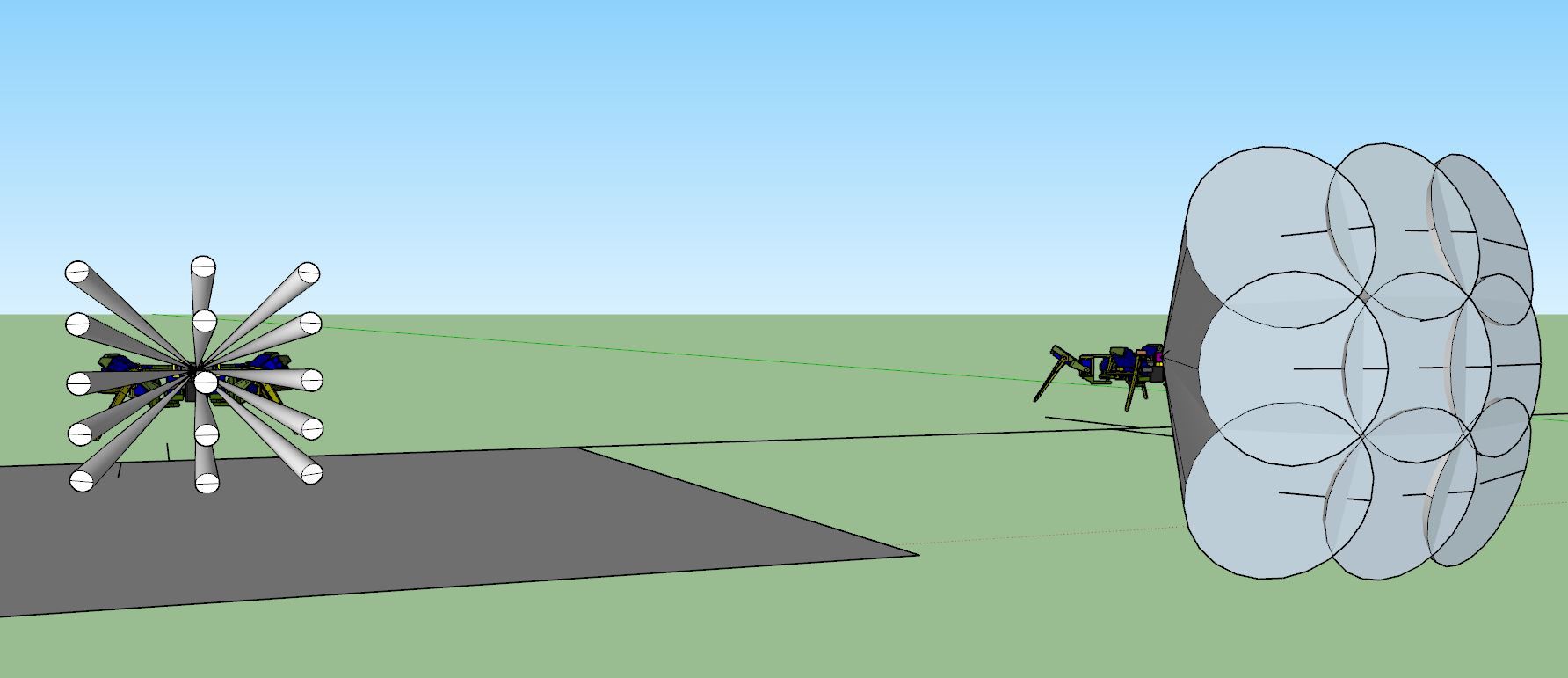

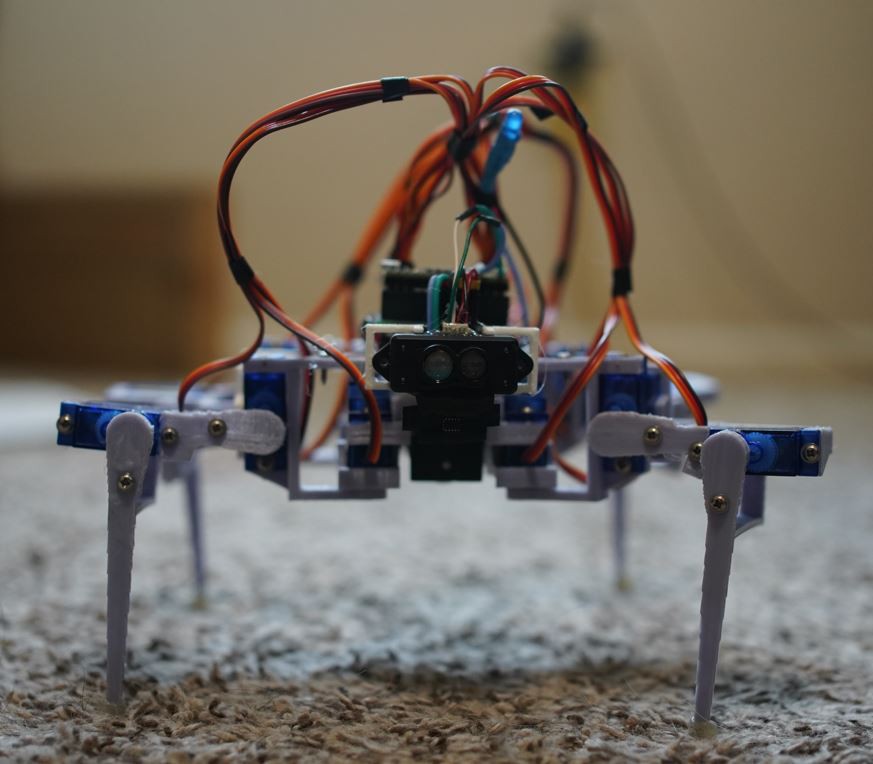

Here you can see a comparison of the scanning areas. The TFmini-s has a FOV of 3.6 degrees vs. 25 degrees for the VL53L0X. I will have to rework all of the code to change the dimensions/scanning process. The robot is also a little heavier now: it weighs 10.5 oz.
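To put numbers on the scanning-area difference: if the FOV is treated as the full cone angle from a point source (a simplification that ignores the size of the emitter and lens), the spot diameter at distance D is 2 · D · tan(FOV / 2). A small sketch of that calculation:

```python
import math


def spot_diameter(distance, fov_deg):
    """Width of the sensing cone at `distance` (same units as distance),
    treating fov_deg as the full cone angle from a point source."""
    return 2.0 * distance * math.tan(math.radians(fov_deg) / 2.0)


if __name__ == "__main__":
    sensors = [("VL53L0X", 25.0), ("TFmini-s (3.6 deg)", 3.6), ("TFmini-s (2 deg)", 2.0)]
    for name, fov in sensors:
        print(f"{name}: {spot_diameter(100.0, fov):.1f} cm wide at 100 cm")
```

At 1 m that works out to roughly 44 cm for the VL53L0X versus about 6.3 cm (3.6°) or 3.5 cm (2°) for the TFmini-s.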

According to the torque diagram from this video, these servos should have 7.8 oz/1.5 in of torque, which is beyond the weight of this robot (per leg); however, I think the servos are still too weak. I was also thinking I would eventually need to incorporate the IMU into the servo motions to keep the robot steady and stop it from wobbling.

The problem is the single-threaded aspect: it's hard to mix different domains together in real time. The Teensy 4.0 is fast (600 MHz), so maybe it won't be noticeable in "real time" even if I ping the IMU for every degree moved to keep the robot stable/self-balancing.
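A single-threaded loop can still interleave the two jobs by doing one small unit of work at a time: move the servo one degree, poll the IMU, correct if needed, repeat. Below is a hypothetical Python sketch of that pattern; `move_servo`, `read_tilt_deg`, `correct`, and the 5-degree threshold are stand-ins, not real servo or IMU APIs.

```python
def sweep_with_imu(start_deg, end_deg, move_servo, read_tilt_deg, correct,
                   max_tilt_deg=5.0):
    """Step a servo one degree at a time from start_deg to end_deg (inclusive).
    After every step, poll the IMU and call correct(tilt) if the measured
    tilt exceeds max_tilt_deg. Returns the number of corrections made.

    move_servo, read_tilt_deg and correct are hypothetical callbacks standing
    in for the real servo and IMU code."""
    step = 1 if end_deg >= start_deg else -1
    corrections = 0
    for angle in range(start_deg, end_deg + step, step):
        move_servo(angle)       # one small unit of motion...
        tilt = read_tilt_deg()  # ...then one IMU poll
        if abs(tilt) > max_tilt_deg:
            correct(tilt)       # apply a correction before the next step
            corrections += 1
    return corrections
```

Whether this feels real time depends on how long one IMU read plus one servo step takes on the actual hardware.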

I really like these "eyes" though, the lidar module lenses. They make it look like a jumping spider. Also, the pins on the VL chip look like little teeth in the right lighting.

I was thinking I could send the measured points and angles to the web and do the plotting there. The websocket is pretty good at sending characters, so it's just a matter of chunking the data and making sure every chunk makes it to the web. I want to make the scanning really good by combining the sensors, testing against physical objects, and knowing what performance I can expect.
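The chunking could look something like this minimal sketch. The `index/total|payload` framing and the function names are hypothetical, not part of any websocket library; the point is that each chunk carries enough information for the browser side to detect a missing piece and reassemble the rest in order.

```python
def encode_points(points):
    """Serialize (angle_deg, distance_cm) pairs as 'angle,distance;...' text."""
    return ";".join(f"{a},{d}" for a, d in points)


def chunk_message(text, max_payload=64):
    """Split text into chunks tagged 'index/total|payload' so the receiver
    can detect missing pieces and put them back in order."""
    pieces = [text[i:i + max_payload] for i in range(0, len(text), max_payload)] or [""]
    total = len(pieces)
    return [f"{i}/{total}|{p}" for i, p in enumerate(pieces)]


def reassemble(chunks):
    """Rebuild the original text from chunks in any order; None if any are missing."""
    parts = {}
    total = None
    for c in chunks:
        header, payload = c.split("|", 1)
        idx, tot = header.split("/")
        parts[int(idx)] = payload
        total = int(tot)
    if total is None or set(parts) != set(range(total)):
        return None
    return "".join(parts[i] for i in range(total))


def decode_points(text):
    """Inverse of encode_points (values come back as floats)."""
    if not text:
        return []
    return [tuple(float(v) for v in item.split(",")) for item in text.split(";")]
```

The browser side would do the same splitting in JavaScript; the Python here just shows the format round-tripping.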

Anyway, it's just more work, and an opportunity to write better code.

Jacob David C Cunningham
