Random Tricks and Musings

Electronics, Physics, Math... Tricks/musings I haven't seen elsewhere.

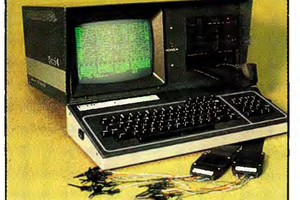

I just saw a few minutes of Adrian's vid wherein he dug out a 386 motherboard with CACHE!

This is one of those long-running unknowns for me... How does cache work? I was pretty certain I recalled that 486's had it, but 386's didn't... which means: for a 386 /to/ have it requires an additional chip... And /chips/ I can usually eventually understand.

So I did some searching to discover Intel's 82385 cache controller and took on some reading, excited to see that, really, there's no reason it couldn't be hacked into other systems I'm more familiar with, like, say, a z80 system. Heck, with a couple/few 74LS574's, I think it could easily be put into service as a memory-paging system. Up to 4GB of 8K pages!

But the more I read of the datasheet, the more I started realizing how, frankly, ridiculous this thing is, for its intended purpose.

I mean, sure, if it was an improvement making a Cray Supercomputer a tiny bit more super when the technology first came out, or a CAD system more responsive to zooming/scrolling, I could see it. But... How it works in a home-system seems, frankly, a bit crazy. A Cash-Grab from computer-holics, who /still/ post questions about adding cache to their 35y/o 386's for gaming. LOL.

Seriously.

Read the description of how it actually functions, and be amazed at how clever they were in implementing it in such a way as to essentially be completely transparent to the system except on the rare occasion it can actually be useful.

Then continue to read, from the datasheet itself, how carefully-worded it is that there's a more sophisticated mode that actually only provides a "slight" improvement over the simpler mode, which provides basically no improvement over no cache, unless the software is basically explicitly designed with cache in mind.

Which, frankly, seems to me would have to be written in inefficient ways that would inherently be slower on cacheless systems, rendering benchmarking completely meaningless.

So, unless I'm mistaken about the progression of this trend--as I've extrapolated from the advancement of the "state of the art" between the era of computers without cache, to the first home computers with cache--here's how it seems to me:

If programmers had kept the skills they learned from coding in the before-times, cache would've been nothing more than a very expensive addition (all those transistors! Static RAMs!) to squeeze a few percent more useable clock-cycles out of top-end systems, for folk using rarely-used softwares which could've been carefully programmed to squeeze even more computations out of a system with cache.

But, because programmers started adopting cache as de-facto, they forced the market who didn't need it /to/ need it. The cacheless systems would've run code not-requiring-of cache roughly as-efficiently as code poorly-written to use cache running on systems with it. Which is to say that already-existent systems lacking cache were hobbled not by what they were capable of, but by programmers' tactics to take advantage of tools that really weren't needed at the time, and, worse, often for little gain other than to use up the newly-available computing resources.

The 486, then, came with a cache-controller built-in, and basically every consumer-grade CPU has required it, since.

Now, I admit this is based purely on my own extrapolation based on what I read of the 82385's datasheet. Maybe things like burst-readahead and write-back, that didn't exist in the 82385, caused *huge* improvements. But, I'm not convinced, from what I've seen.

Think about it like this: In the time of the 386, 1 measly MB of DRAM was rather expensive... so how much, then, would be 32KB of /SRAM/? The 82385 also had nearly 2KB internal SRAM/Registers just to keep track of what was and wasn't cached. And this all in addition to a huge number of gates for address-decoding, etc.
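For a rough feel of where that bookkeeping storage goes, here is a minimal sketch of a generic direct-mapped cache. The sizes (32-bit addresses, 32KB of cache, 32-byte blocks) and every name in it are assumptions picked for illustration; the 82385's actual directory layout differs in its details, so treat this as the general idea only, not as the chip's design.

```python
# Generic direct-mapped cache sketch.
# ASSUMPTION: all sizes below are illustrative, not taken from the 82385 datasheet.
ADDRESS_BITS = 32
CACHE_BYTES = 32 * 1024
BLOCK_BYTES = 32

NUM_SETS = CACHE_BYTES // BLOCK_BYTES               # one tag per block
OFFSET_BITS = (BLOCK_BYTES - 1).bit_length()        # bits selecting a byte in a block
INDEX_BITS = (NUM_SETS - 1).bit_length()            # bits selecting which tag to check
TAG_BITS = ADDRESS_BITS - INDEX_BITS - OFFSET_BITS  # what must be stored per block


def split_address(addr):
    """Split an address into (tag, index, offset)."""
    offset = addr & (BLOCK_BYTES - 1)
    index = (addr >> OFFSET_BITS) & (NUM_SETS - 1)
    tag = addr >> (OFFSET_BITS + INDEX_BITS)
    return tag, index, offset


class DirectMappedCache:
    """Tracks only which block sits in each slot (no data, no write policy)."""

    def __init__(self):
        self.tags = [None] * NUM_SETS
        self.hits = 0
        self.misses = 0

    def access(self, addr):
        """Return True on a hit; on a miss, replace whatever was in that slot."""
        tag, index, _ = split_address(addr)
        if self.tags[index] == tag:
            self.hits += 1
            return True
        self.tags[index] = tag
        self.misses += 1
        return False


def tag_storage_bytes():
    """Bytes needed just to remember which block is in each slot."""
    return NUM_SETS * TAG_BITS / 8
```

With these assumed sizes the tag store alone comes to a couple of kilobytes, before counting any valid bits. Two addresses exactly one cache-size apart land in the same slot and evict each other, which is one way code can end up thrashing a cache like this.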

After all that taken into account, I'm guessing the 82385 and cache chips probably contained *far* more...

This is my own invention... Maybe someone else has done it, maybe it has someone's name attached to it. I dunno. (Maybe not, and I'll finally hear from someone who can get me a patent?)

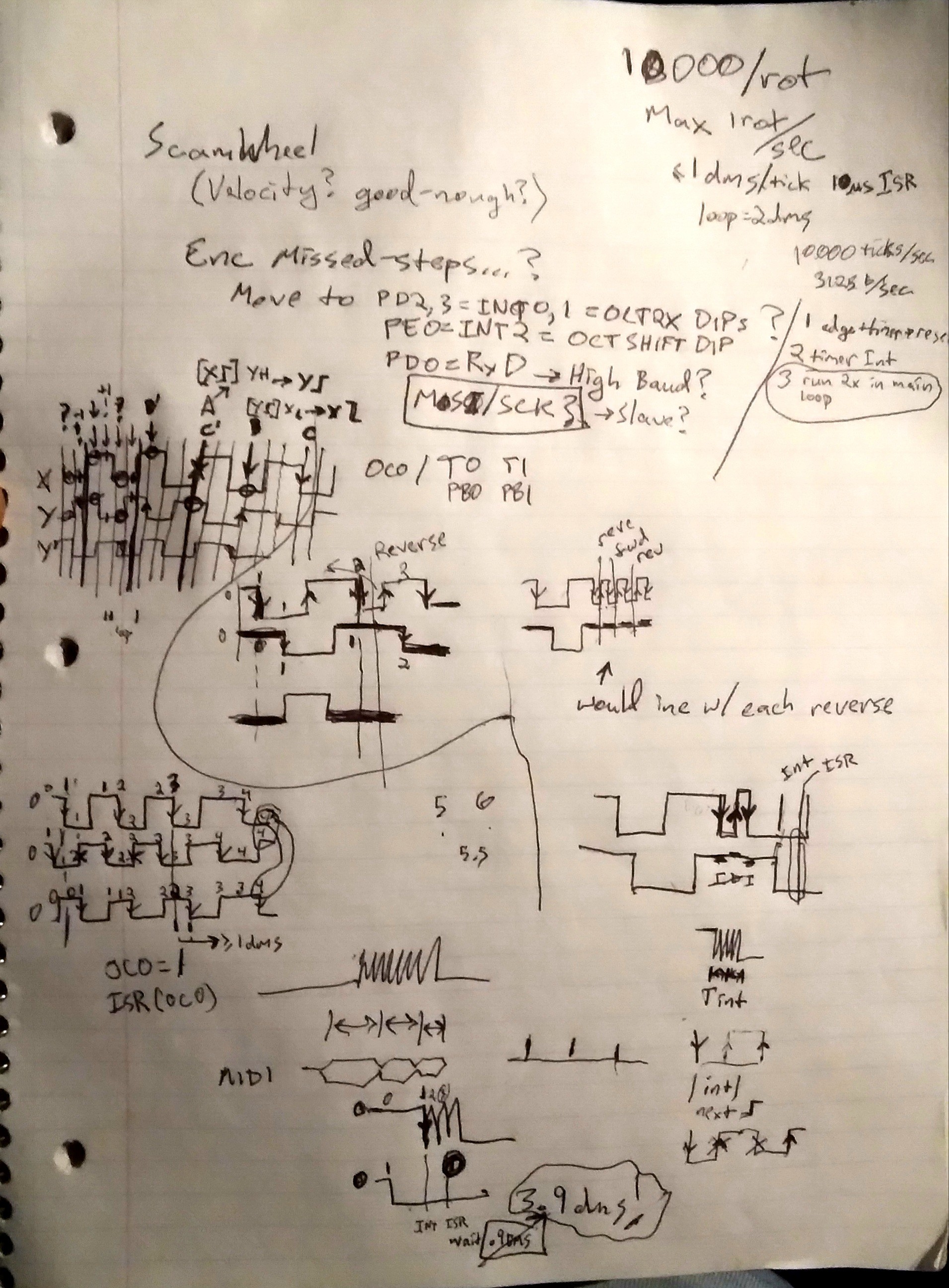

The idea is simple enough... I'll go into detail after the first image loads:

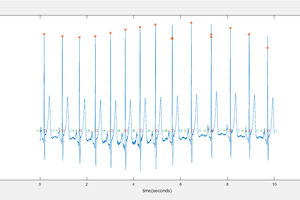

A typical method for decoding a digital quadrature rotary encoder involves looking at each edge of each of the two signals. If One signal "leads" the other, then the shaft is spinning in one direction. If that same signal "lags" the other, then the shaft is spinning in the other direction.

This is very well-established, and really *should* be the way one decodes these signals (looking for, then processing, all the transitions).

This is important because of many factors which we'll see if I have the energy to go into.
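A minimal sketch of the "look at every transition" approach, written as a lookup table over (previous state, current state). The names, the choice of which direction counts as positive, and the decision to ignore illegal two-bit jumps are all assumptions for illustration, not taken from any particular product.

```python
# Full-transition quadrature decoding via a 16-entry lookup table.
# State is encoded as (A << 1) | B.
# ASSUMPTION: "A leads B" is the positive direction: 00 -> 10 -> 11 -> 01 -> 00.
# Illegal jumps (both signals changing at once) are counted as 0 here.
TRANSITION = [
    0, -1, +1, 0,    # previous state 00
    +1, 0, 0, -1,    # previous state 01
    -1, 0, 0, +1,    # previous state 10
    0, +1, -1, 0,    # previous state 11
]


def decode_quadrature(samples):
    """Return the net count over a list of (a, b) samples, each 0 or 1."""
    a0, b0 = samples[0]
    prev = (a0 << 1) | b0
    count = 0
    for a, b in samples[1:]:
        curr = (a << 1) | b
        count += TRANSITION[(prev << 2) | curr]
        prev = curr
    return count


forward_cycle = [(0, 0), (1, 0), (1, 1), (0, 1), (0, 0)]
bouncing_a = [(0, 0), (1, 0), (0, 0), (1, 0), (0, 0), (1, 0), (0, 0)]
```

Because every edge is counted in both directions, an input that chatters back and forth across one edge counts up and then right back down, so the total never wanders more than one count from where the shaft really is.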

...

An UNFORTUNATE "hack" that has entered the mainstream does it differently. It's much easier (but, not really).

The idea, there, is to look for the edge(s) of one of the signals, then when it arrives, look at the *value* of the other signal.

This is kinda like treating one signal as a "step" output, and the other as a "Direction" output. But That Is Not What Quadrature Is.

If the signal was *perfect*, this would work great.

But the real world is not perfect. Switch-Contacts bounce. Optical encoder discs may stop rotating right at the edge of a "slot", a tiny bit of mechanical vibration or a tiny bit of 120Hz fluorescent lighting may leak into the sensor. And obviously electrically-noisy motors can induce spikes in decoding circuits as well as the encoders'.

That "Step" pulse may oscillate quite a bit, despite no change in the "direction".
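To make that failure concrete, here is a small sketch of the "step/direction" shortcut fed a signal that chatters on one edge. The function name and the sample data are made up for illustration; the only thing modeled is "on each rising edge of A, look at the value of B."

```python
# Sketch of the "step/direction" shortcut: treat A as Step and B as Direction.
# ASSUMPTION: names and sample data are hypothetical, for illustration only.


def decode_step_direction(samples):
    """Count +1/-1 on each rising edge of A, using B's level as the direction."""
    count = 0
    prev_a = samples[0][0]
    for a, b in samples[1:]:
        if prev_a == 0 and a == 1:          # rising edge on "Step"
            count += 1 if b == 0 else -1    # "Direction" read as a level
        prev_a = a
    return count


# The shaft sits right on an A edge and chatters; B never changes,
# and the shaft ends up exactly where it started.
bouncing_a = [(0, 0), (1, 0), (0, 0), (1, 0), (0, 0), (1, 0), (0, 0)]
runaway = decode_step_direction(bouncing_a)
```

Every bounce registers as another step in the same direction, because the falling edges that would cancel them are never looked at. The count keeps climbing for as long as the chatter lasts.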

...

My friggin' car's stereo has this glitch. And now that the detents are a little worn, it's very easy to accidentally leave the volume knob atop a detent, where suddenly and without warning a bump in the road causes the volume to jump up, sometimes an alarming amount, even though [actually /because/] the knob itself still sits atop that detent.

This Would Not Happen if they decoded the quadrature knob correctly. That was the whole point of the design behind quadrature encoders, as far as I'm aware.

But then somehow it became commonplace to decode them *wrong*, and I'm willing to bet it's in so many different devices these days that at least a few folk have died as a result. (It's sure given me a few scares at 70MPH!)

"Hah! That's a one in a million chance!" Yeah? But if you plan to sell 10 million... Sounds like ten counts of first-degree manslaughter to me. What's that... Ten 20 year prison sentences? But, yahknow, since "Corporations are people" it means the end of said corporation, and prison sentences, not slaps on wrists, for those who knowingly aided and abetted.

.

.

.

So, let's get this straight:

Quadrature can be decoded correctly.

The "step/direction" method is NOT correct.

.

.

.

If the elite engineers who hold onto trade secrets like this technique are unwilling (or just too tired) to teach you, and there's even the tiniest chance a glitch could hurt someone, then please remember that the internet is full of seemingly-authoritative information from folk who really have no clue what they're talking about, and then look into products designed for the task, like HP/Agilent's HCTL-2000, or similar from U.S. Digital.

.

.

.

Now, As A /hacker/ or /maker/ or DIY sort of person, I often try to solve puzzles like the one I faced tonight: How do I decode my two quadrature signals with only one Interrupt pin and one GPIO pin and no additional circuitry?

So, ONLY BECAUSE it doesn't really matter in this project, I considered the "Step/Direction" decoding approach, which I'd dismissed so long ago that I'd forgotten exactly /why/. And I had to derive, again, the *why*, which I described earlier ("Noise" on "Step" would cause the counter to run away).

.

I spent a few hours trying to think of ways to resolve that, and ultimately went back to...

Imagine you've designed a clock...

Each time the seconds go from 59 to 0, it should update the minutes.

Imagine it takes three seconds to calculate the next minute from the previous.

So, if you have an interrupt at 59 seconds to update the minute-hand, the seconds-hand will fall-behind 3 seconds, by the time the minute-hand is updated.

...

Now, if you knew it takes three seconds to update the minute-hand, you could set up an interrupt at 57 seconds, instead of 59.

Then the minute-hand would move one minute exactly after the end of the previous minute.

BUT the seconds-hand would stop at 57 seconds, because updating the minute uses the entirety of the CPU. If you're clever, the seconds-hand would jump to 0 along with the minute's increment.

....

Now... doesn't this seem crazy?

...

OK, let's say we know it takes 3 seconds of CPU-time to update the minute-hand. But, in the meantime we also want to keep updating the seconds-hand. So we start updating the minutes-hand *six* seconds early... at 54 seconds. That leaves six half-seconds for updating the seconds-hand, in realtime, once-per-second, and three split-up seconds for updating the minutes-hand once.

But, of course, there's overhead in switching tasks, so say this all starts at 50 seconds.

...

At what point do we say, "hey, interrupts are stupid" and instead ask "what if we divided-up the minutes-update task into 60 steps, each occurring alongside the seconds-update?"

What would be the overhead in doing-so?

...

So, sure, it may be that doing it once per minute requires 3 seconds, but it may turn out that the interrupt-overhead is 0.5 times that, due to pushes and pops, and loading variables from SRAM into registers, etc.

And it may well turn out that dividing that 3 seconds across six will require twice as much processing time due to, essentially, loading a byte from SRAM into a register, doing some small calculation, then storing it back to SRAM to perform the same load-process-store procedure again a second later...

But, if divided-up right, one can *both* update the seconds-hand and calculate/update the minutes-hand every second; no lag on the seconds-hand caused by the minutes' calculation.

No lag caused by a slew of push/pops.

...

And if done with just a tiny bit more foresight, no lag caused by the hours-hand, either.

...

Now, somewhere in here is the concept I've been dealing with off-n-on for roughly a decade. REMOVE the interrupts. Use SMALL-stepping State-Machines, with polling. Bump that main-loop up to as-fast-as-possible.
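Here is a minimal sketch of that idea applied to the clock analogy: the "expensive" minutes-update is cut into 60 small steps, and the main loop does one step per tick right alongside the seconds-update. The class and function names are hypothetical, and a "tick" simply stands in for one pass of a polled main loop.

```python
# Polled main loop with a long task cut into small steps (no interrupts).
# ASSUMPTION: names are hypothetical; one loop pass stands in for one second.


class SlicedMinuteUpdate:
    """The minutes-update, split into `slices` small steps."""

    def __init__(self, slices=60):
        self.slices = slices
        self.progress = 0
        self.minute = 0

    def step(self):
        """Do one small piece of work; finish the update on the last piece."""
        self.progress += 1
        if self.progress == self.slices:
            self.progress = 0
            self.minute += 1


def run_clock(ticks):
    """Run the main loop for `ticks` passes; return seconds shown and the minute."""
    task = SlicedMinuteUpdate()
    second = 0
    seconds_shown = []
    for _ in range(ticks):
        second = (second + 1) % 60      # the seconds-hand is serviced every pass
        seconds_shown.append(second)
        task.step()                     # plus one small slice of the minutes work
    return seconds_shown, task.minute


seconds_shown, minute = run_clock(120)
```

The seconds-hand gets serviced on every pass, including 57, 58 and 59, and the minute still rolls over exactly when the seconds hit 0. The cost is the extra load/store of the task's state on each pass, which is the overhead trade-off weighed above.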

With an 8-bit AVR I was once able to sample audio at roughly its max of 10KS/s, store into an SD-Card, sample a *bitbanged* 9600-baud keyboard, write to an SPI-attached LCD, write to EEPROM, and more... with a guaranteed 10,000 loops per second, averaging 14,000. ALL of those operations handled *without* interrupts.

Why? Again, because if, say, I'd used a UART-RX interrupt for the keyboard, it'd've taken far more than 1/10,000th of a second to process it, between all the necessary push/pops, and the processing routine itself (looking up scancodes, etc), which would've interfered with the ADC's 10KS/s sampling, which would've interfered with that sample's being written to the SDCard.

Instead, I knew the keyboard *couldn't* transmit more than 960 bytes/sec, so I could divide-up its processing over 1/960th of a second. Similar with the ADC's 10KS/s, and similar with the SDCard, etc. And, again, in doing-so managed to divide it all up into small pieces that could be handled, altogether, in about 1/10000th of a second. Even though, again, handling any one of those in its entirety, in say, an interrupt, would've taken far more than 1/10000th of a second; throwing everything off, just like the seconds-hand not updating between 57 and 0 seconds in the analogy.
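The budget arithmetic behind that can be sketched in a few lines. The 10 bits per byte is an assumption (start bit, 8 data bits, stop bit), chosen because it matches the 960 bytes/sec figure; the variable names are made up.

```python
# Rough time-budget arithmetic for the polled loop described above.
# ASSUMPTION: 10 bits on the wire per byte, which is what makes
# 9600 baud work out to the 960 bytes/sec quoted in the text.
LOOP_RATE_HZ = 10_000      # guaranteed main-loop passes per second
BAUD = 9600
BITS_PER_BYTE_ON_WIRE = 10

max_bytes_per_sec = BAUD // BITS_PER_BYTE_ON_WIRE
loops_per_byte = LOOP_RATE_HZ / max_bytes_per_sec

print(max_bytes_per_sec)          # 960
print(round(loops_per_byte, 2))   # 10.42
```

So each received byte's handling (scancode lookup and so on) can be spread across roughly ten loop passes, rather than having to fit inside one.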

There used to be special ISA cards that allowed for connecting a VCR to a PC in order to use the VCR as a tape-backup.

I always thought that was "cool" from a technological standpoint, but a bit gimmicky. I mean, sure, you could do the same with a bunch of audio cassettes if you're patient.

...

But, actually, I've done some quick research/math and think it may not have been so ridiculous, after-all.

In fact, it was probably *much* faster than most tape-backup drives at the time, due to its helical heads. And certainly a single VHS tape could store far more data. (nevermind their being cheap, back then).

In fact, the numbers suggest videocassettes were pretty-much on-par with spinning platters from half a decade later in many ways.

https://en.m.wikipedia.org/wiki/VHS

https://en.m.wikipedia.org/wiki/ST506/ST412

I somehow was under the impression the head on a VHS scans one *line* of the picture each time it passes the tape. But, apparently, each pass actually scans an entire field (half of an interlaced frame). At 60Hz!

In Spinning-platter-terms, it'd be the equivalent of a hard disk spinning at 3600RPM. Sure, not blisteringly fast, but not the order-of-magnitude difference I was expecting.

The video bandwidth is 3MHz, which may again seem slow, but again, consider that hard drives from half a decade later were limited to 5MHz, and probably didn't reach that before IDE replaced them...

"The limited bandwidth of the data cable was not an issue at the time and is not the factor that limited the performance of the system."

...

I'm losing steam.

...

But this came up as a result of thinking about how to archive old hard drives' data without having a functioning computer/OS/interface-card to do-so.

"The ST506 interface between the controller and drive was derived from the Shugart Associates SA1000 interface,[5] which was in turn based upon the floppy disk drive interface,[6] thereby making disk controller design relatively easy."

First-off, it wouldn't be too difficult to nearly directly interface two drives of these sorts to each other, with a simple microcontroller (or, frankly, a handful of TTL logic) inbetween to watch index pulses and control stepping, head-selects, and write-gates. After that, the original drive's "read data" output could be wired directly to the destination's "write data" input. Thus copying from an older/smaller drive to a newer/larger one with no host inbetween.

It's got its caveats... E.G. The new drive would only be usable in place of the original, on the original's controller, *as though* it was the original drive.

The new drive has to have equal or more cylinders and heads. It has to spin at exactly the same rate, or slower. Its magnetic coating has to handle the recording density (especially, again, if it spins slower).

BUT: it could be done. Which might be good reason to keep a later-model drive like these around if you're into retro stuff. I'm betting some of the drives from that era can even adjust their spin-rate with a potentiometer.
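The control sequence for such a track-by-track copy might look something like the following sketch. It only generates a list of actions; the action names are hypothetical, there is no real hardware access, and it glosses over spindle synchronization between the two drives and any timing detail of the real interface.

```python
# Sketch of the control sequence for a direct drive-to-drive track copy.
# ASSUMPTION: action names are hypothetical; both drives are stepped and
# head-selected together, and the source's read-data line is wired straight
# to the destination's write-data line. Spindle sync is ignored here.


def copy_plan(cylinders, heads):
    """Yield (action, argument) tuples, one track at a time."""
    for cyl in range(cylinders):
        for head in range(heads):
            yield ("select_head", head)
            yield ("wait_index", None)      # start of a revolution
            yield ("write_gate", True)      # destination records the raw stream
            yield ("wait_index", None)      # one full revolution later
            yield ("write_gate", False)
        if cyl < cylinders - 1:
            yield ("step_in", 1)            # move both drives to the next cylinder


plan = list(copy_plan(cylinders=2, heads=2))
```

Nothing in the sequence needs to understand sectors or encoding, which is the appeal: the controlling logic only watches index pulses and toggles a handful of lines.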

...

Anyhow, again, I've a lot to cover, but I'm really losing steam.

...

Enter the thoughts on using VHS as an only slightly more difficult-to-interface direct-track-copying method... as long as the drive (maybe Shugart or early MFM?) is within the VHS's abilities, which it seems there may very well be such drives in retro-gurus' hands.

So, herein, say you've got a 5MB mini-fridge-sized drive with an AC spindle-motor (which just happens to spin at 3600RPM, hmm). Heh...

Anyhow, I guess it's ridiculous these days; each track could be recorded directly to a PC via a USB logic analyzer, right? (certainly with #sdramThingZero - 133MS/s 32-bit Logic Analyzer)

Or isn't there something like that already for floppies ("something-flux")?

Anyhow, I guess the main point is that storing/transferring the flux-transitions in "analog" [wherein I mean *temporally*] has the benefit of not needing to know details...

When I was a kid, I heard this often...

Lacking in mutually-understood history and details of technicalities, it took me years to try to explain "Well, more like Steve Wozniak." And literally decades to realize how *that* was probably misinterpreted.

So, bear with me as I try to reexplain to several different audiences simultaneously.

First, OK, everyone knows Billy-G. I shouldn't have to explain that one, but I will.

As I Understand (I'm no history-buff):

Billy-G didn't design computers nor electronic circuits; he did software *for* computers. And his real claim to fame was actually software he *bought* (not wrote) from someone else.

Most folk who made the statement about me being "The Next" weren't aware of Stevie-W...

Stevie-W, unlike Billy-G, did electronics design, the actual computers themselves. That's a fundamental difference I was trying to get across, but couldn't convey in terms that really struck a chord.

In their minds, I gathered over many following years, the two were basically one-and-the-same, just from different companies. And the latter, then, was the "runner-up" that few outside the nerddom even know by name.

Not quite.

Billy-G: Software

Stevie-W: Mostly Hardware

Fundamentally different sorts of people. Fundamentally different skills. Fundamentally different aspects of computing. Maybe like comparing a finish-carpenter to a brick-layer.

Both, mind you, can be quite skilled, and the good ones highly revered. But therein lies the next problem in trying to explain to folk not already in-the-know: It seems many, again, associate a statement like that the wrong-way compared to my intent; thinking something like "oh, finish carpenters are concerned with minute *details*, whereas brick-layers are concerned with 'getting er done'" ish... I dunno what-all other people think, but I know I was yet-again misunderstood when I made such comparisons, so let me try to re-explain:

Well, no. My point wasn't some judgement of the skill-level or quality of craftsmanship or even about the utilitarian importance/necessity of what they do. My point was that what they do are both related to construction, but that we generally hire both when we build a house; because one is good at one thing, and the other is good at the other.

Maybe I should've chosen electricians and plumbers as the example, instead. But I'll never finish this if I open that can of worms.

Billy-G: Software that the end-user sees

Stevie-W: Hardware, and software in the background that most folk these days don't even know exists.

Which, probably, goes a ways in explaining why so many folk know the former, since his stuff is in your face, while the latter's stuff is encased in beige boxes.

.

Now, during *years* of trying to figure out how to explain this fundamental difference, without *ever* getting far-enough in the conversation to make my *main* point, the end-takeaway often seemed to be "The Next Steve Wozniak." At which point I was so friggin' exhausted... ugh.

.

So, for years I tried [and obviously failed] to rewire my brain to at least get that fundamental concept across to such folk concisely, *so that* I could maybe finally get across the next point:

....

There were MANY folk, probably *thousands,* doing what Stevie-W was doing before he and his work got "picked."

.

Now, when I say *that*, folk tend to, it seems, think I'm looking to "get picked." And, I suppose I can understand why they might come to such a conclusion (despite the fact we're nowhere near far-enough along in this discussion for conclusions to be jumped to) because I had to try to work on their level, and explain fundamental concepts from a perspective I thought they understood... which... apparently to me, requires names of celebrities to even be bothered to try to understand. Hey, I'm not claiming that *is* the way they are, I'm saying that as someone who has dedicated a huge portion of my life's brainpower to something...

The 8x51 series *has* internal ROM, even the 8051. The 8031/8032 are allegedly "ROMless" versions of the 8x51/52.

What that means to designers/hobbyists is, e.g.:

You can design for an 8031, with an external ROM, then drop-in any old 8x51, even if its (OTP, mask, etc.) ROM was programmed with code from an entirely different product, or buggy, or whatever.

Find some old 8x51 in some old piece of trash, think it's worthless because it's already been programmed...? Tie one pin (EA, tied low, on the 8051 family) to a voltage rail and use it as an 8031 in your own project.

What that probably meant from Intel's perspective:

"Hey, a customer ordered a bunch of preprogrammed 8051's, but discovered a bug before we shipped" and/or "We got a batch of 8051's with flaky ROMs" and "we're sitting on 1000's of otherwise useless 8051's. What should we do with them?" "Remarket them as ROMless 8031's, and let the new customer supply their own ROM chip!"

Forward-thinking, reusability, reduction of eWaste...

And, in this new era of old paper datasheets now scanned and uploaded as pdfs (as opposed to my earlier experience where pdfs only existed for products designed in the pdf-era (THANK YOU to those who take the time and provide that effort!)), I've discovered that many previously zero-search-result ICs on old PCBs scavenged from VCRs, CD players, TVs, Stereo Components, and whatnot, now have full-on datasheets detailed down to instruction-sets and hex operands...

And many of those, similar to the 8051, have a pin which can be tied to a voltage rail to disable the internal mask-ROM, enabling them, like the 8031, to run off an external ROM.

Wow! SO MANY uC designers, of so many various architectures, even 4-bit, considered this worthwhile! And even if it was purely because they wanted to sell otherwise defective/wrongly-programmed uCs, it *still* benefits the likes of the customer and even the alleged us, that are hardware hackers.

I dunno, I thought it was darn-relevant in the "chip shortage era."

[Inspired by the lack of response to my comment at https://hackaday.com/2022/12/01/ask-hackaday-when-it-comes-to-processors-how-far-back-can-you-go/ and the fact it seems to be blocking my adding the above as a clarification-reply.

Fact is, I've been meaning to document my box full of scavenged PCBs with such uCs, and maybe even turn each into a "NOPulator"]

...

Oh, it finally appeared there, twice, numerous hours later. Heh.

I even got a response suggesting looking into Collapse OS: http://collapseos.org/

(Very Intriguing).

...

Lots more thoughts and My Collection, now over at:

I wonder how many folk training AI have never even trained a dog to shake.

What IS The Speed of Light Squared?

What IS Distance Squared Over Time Squared... ?

What is Time Squared?!

Dunno, BUT:

Well, Acceleration is Distance Over Time Squared...

And Distance Squared is Area

So might Speed Squared be The Acceleration Of Area?

...

"The Acceleration of Area Is Constant" ?

If you were to release a ball down a ramp, its acceleration would be constant, (assuming constant gravity and constant slope).

If you drop a rock in a pond, the ripples propagate outward at a constant speed along the radius... But, interestingly, while the circumference of the ripple just grows at a constant rate, the *area* it encloses has a constant "acceleration" (distance squared over time squared).

If you were to create a "ripple" in three-D space, say by exploding some TNT in midair, the sound of the explosion would propagate at a constant speed radially in all directions, at the speed of sound... The surface-area of that 3D "ripple" would have a constant acceleration.
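Worked out quickly, assuming a wavefront whose radius grows at a constant speed v starting from t = 0 (the symbols r, C, A, S are just labels chosen here):

```latex
\begin{align*}
r(t) &= v t \\[4pt]
\text{Pond (2D):}\quad
C(t) &= 2\pi r = 2\pi v t, &
\frac{d^2 C}{dt^2} &= 0 \\
A(t) &= \pi r^2 = \pi v^2 t^2, &
\frac{d^2 A}{dt^2} &= 2\pi v^2 \\[4pt]
\text{Explosion (3D):}\quad
S(t) &= 4\pi r^2 = 4\pi v^2 t^2, &
\frac{d^2 S}{dt^2} &= 8\pi v^2
\end{align*}
```

So the quantities with a constant second derivative are the ones that scale as distance squared: the area enclosed by the pond ripple, and the surface area of the spherical wavefront. In both cases the constant is a multiple of Pi times the speed squared.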

So, if all the energy in some mass were released instantaneously, it would create a spherical wavefront whose surface-area would "accelerate" at a constant rate.

E= MC^2

So, then... Energy is usually electromagnetic, right? And ripples in electromagnetic fields travel at the speed of light...

So E=MC^2 basically says that if you were to instantaneously release all the energy that a mass *can* contain into a burst of electromagnetic energy, then that energy would propagate as an electromagnetic wave-front which has a constant "acceleration of surface area". I.E. a /sphere/ (?)

...

Now, if we think of it this way, and we change our units of time and speed from meters and seconds to something instead related to the propagation of this wavefront, we'll find that the "constant acceleration of surface-area" (i.e. C^2) Is Directly Related to Pi^2, and thus the speed of light (radially) is in fact an integer constant times Pi. (?!)

...

Musings not yet verified.

Note that I later found an article about a relationship between Pi and C, which derives it through the half-period of a pendulum. Unfortunately, I'm not sure the math works-out, due to the fact that a pendulum's period is not /exactly/ as stated, but only /very close/, under some conditions. However, the fact is those /specific/ conditions seem to come /very close/ to equating C to Pi, in a very similar way as my wavefront-propagation theory does.

...

So, what are the implications?

Dunno, haven't had time to really look into it, yet.

However, one path seems to suggest that gravity, too, is directly related to C^2 (and thus Pi^2), which makes sense if considering, again, the idea of the surface-area of a wavefront's propagation.

Imagine a brief "pulse" of gravity as the opposite of an electromagnetic explosion... An implosion(?). Its wavefront (pulled into its center, rather than extending away from it) would have a constant acceleration (of surface-area).

So, now, imagine a constant flow of such energy, much like a constantly-lit lightbulb...

...

I dunno where it goes!

Here's a weird thought just popped-up...

Gravity doesn't /do/ anything, unless there's another object involved. So, until another object comes into the path of its (impulse) "wavefront," that wavefront exists everywhere on the spherical surface. But, when an object comes into its path, does that spherical surface "collapse" onto that object? Much like they suggest a photon may propagate outward in every direction spherically /until/ it hits something? At which point that sphere collapses onto that object, much like an expanding bubble in bubblegum "rubberbands" (spherically!) back to one's face?

...

hmmm...
