// -- Motivation --

I wanted e-Paper wall art, with large-scale displays. After browsing the available sizes and colors, and discovering text-to-image synthesizers like stable-diffusion, the idea popped into my head and wouldn't leave. I knew I had to build it.

// -- Parts and Materials --

* 1 x Waveshare 7.3inch e-Paper, Red/Yellow/Black/White https://www.waveshare.com/product/displays/e-paper/7.3inch-e-paper-hat-g.htm

* 2 x Good Display 5.79inch e-Paper strip, Red/Black/White https://www.good-display.com/product/441.html

* TinyPico https://www.tinypico.com

* 3D printer filament: ProtoPasta Matte Fiber HTPLA "Walnut Wood" https://www.proto-pasta.com/products/matte-fiber-htpla-walnut-wood

* 32GB microSD card and reader module

* E-Paper triptych board, manufactured by OSH Park. I had 2 extras left over from the Carpets project.

* 350mAh LiPo battery from Adafruit. Soon this will be upgraded to 2200mAh!

// -- Design --

This was inspired by the "shadow box" art style, especially when differently-sized frames are arranged to form a larger piece. The e-Paper panels have different aspect ratios and color capabilities. The side panels are slightly recessed, which makes it difficult to focus on the entire piece at once and gives their presence a slightly uncomfortable feel (this was an intentional choice).

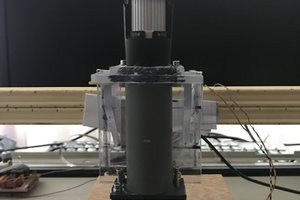

The frame is 3 separate parts, connected by dovetail joints. After experimenting with prototypes, I assembled the frame by squirting a generous amount of industrial hot glue into the joints, fitting the parts together, then cutting off the excess glue blobs after it cooled.

The displays are held in place by S-shaped brackets, with felt adhesive on the display-side, to exert gentle force on the fragile glass panels. Most other components are held in place with zip ties and hope.

Images are stored on the microSD card as PNGs, then loaded, decoded, resized, cropped, palettized, and dithered within the TinyPico's 4MB PSRAM, using an image processing framework. The goal was to create a reusable, high-level framework for microcontrollers; each image operation is executed in one line of C++.
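To give a feel for the last two steps (palettize and dither), here is a minimal sketch. It is not the framework's actual code: the names `Rgb`, `kPalette`, `nearestPaletteIndex`, and `ditherToPalette` are made up for illustration, the palette RGB values are nominal guesses for the 4-color panel, and Floyd-Steinberg error diffusion is an assumed choice of dithering algorithm.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical pixel type; channels are ints so diffused error can be added safely.
struct Rgb { int r, g, b; };

// Assumed nominal colors for a black/white/yellow/red panel (not measured values).
static const std::array<Rgb, 4> kPalette = {{
    {0, 0, 0}, {255, 255, 255}, {255, 255, 0}, {255, 0, 0}
}};

// Returns the index of the palette color closest to c (squared RGB distance).
int nearestPaletteIndex(const Rgb& c) {
    int best = 0;
    int bestDist = -1;
    for (std::size_t i = 0; i < kPalette.size(); ++i) {
        const int dr = c.r - kPalette[i].r;
        const int dg = c.g - kPalette[i].g;
        const int db = c.b - kPalette[i].b;
        const int dist = dr * dr + dg * dg + db * db;
        if (bestDist < 0 || dist < bestDist) {
            bestDist = dist;
            best = static_cast<int>(i);
        }
    }
    return best;
}

// Floyd-Steinberg dithering: quantize each pixel to the palette, then push the
// quantization error onto the unvisited neighbors (7/16, 3/16, 5/16, 1/16).
// Takes the image by value because the working copy is modified in place.
std::vector<std::uint8_t> ditherToPalette(std::vector<Rgb> pixels, int width, int height) {
    assert(width > 0 && height > 0);
    assert(pixels.size() == static_cast<std::size_t>(width) * height);
    std::vector<std::uint8_t> out(pixels.size());

    auto addError = [&](int x, int y, const Rgb& err, int weight) {
        if (x < 0 || x >= width || y >= height) return;  // neighbor is off-image
        Rgb& p = pixels[static_cast<std::size_t>(y) * width + x];
        p.r = std::min(255, std::max(0, p.r + err.r * weight / 16));
        p.g = std::min(255, std::max(0, p.g + err.g * weight / 16));
        p.b = std::min(255, std::max(0, p.b + err.b * weight / 16));
    };

    for (int y = 0; y < height; ++y) {
        for (int x = 0; x < width; ++x) {
            const std::size_t idx = static_cast<std::size_t>(y) * width + x;
            const Rgb old = pixels[idx];
            const int pi = nearestPaletteIndex(old);
            out[idx] = static_cast<std::uint8_t>(pi);
            const Rgb err = {old.r - kPalette[pi].r, old.g - kPalette[pi].g, old.b - kPalette[pi].b};
            addError(x + 1, y,     err, 7);
            addError(x - 1, y + 1, err, 3);
            addError(x,     y + 1, err, 5);
            addError(x + 1, y + 1, err, 1);
        }
    }
    return out;
}
```

The returned vector holds one palette index per pixel, which would still need packing into whatever bit layout the display driver expects. On the real hardware the working buffer would live in PSRAM rather than a `std::vector` on the heap.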

Currently, the TinyPico wakes every 12 hours, redraws, and goes back to sleep. With the 350mAh battery, a full charge lasts for 16 days. A subtle low-battery icon is drawn when the battery voltage dips below 3.3V.
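As a back-of-the-envelope check on those numbers, the sketch below estimates the average current draw and projects the runtime for the larger battery. The function names are hypothetical, and the math assumes the full rated capacity is usable, that runtime scales linearly with capacity, and that self-discharge is negligible, none of which is guaranteed in practice.

```cpp
#include <cassert>

// Average current (mA) implied by draining a battery of the given capacity
// over the given number of days. Assumes the full rated capacity is usable.
double averageCurrentMilliAmps(double capacityMilliAmpHours, double runtimeDays) {
    assert(runtimeDays > 0.0);
    return capacityMilliAmpHours / (runtimeDays * 24.0);
}

// Projected runtime (days) for a battery of the given capacity at a constant
// average current. Assumes linear scaling and ignores self-discharge.
double projectedRuntimeDays(double capacityMilliAmpHours, double averageMilliAmps) {
    assert(averageMilliAmps > 0.0);
    return capacityMilliAmpHours / averageMilliAmps / 24.0;
}

// Low-battery check using the 3.3V threshold mentioned in the text.
bool isBatteryLow(double batteryVolts, double thresholdVolts = 3.3) {
    return batteryVolts < thresholdVolts;
}
```

Plugging in 350mAh over 16 days gives an average draw of roughly 0.91mA, and at that rate a 2200mAh pack would last about 100 days under the same assumptions.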

I wrote a prompt-generation script, which generated endless portraits with randomized characteristics. (So I can't share "the prompt"; they're all different, sorry.) The best, most emotional images were produced by stable-diffusion v1.4, probably because the prompt keywords were tailored for this version. Only about 1 in 20 generated images was usable; the others were rejected for having multiple heads or misaligned eyes, or simply because I didn't like them. Most of the side panel images were generated on the dreamstudio.ai website.
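The general idea of a randomized prompt generator can be sketched as follows. This is not my actual script: the function names, the word lists, and the prompt template are all placeholders invented for illustration, and the real keywords were different.

```cpp
#include <cstddef>
#include <cstdint>
#include <random>
#include <string>
#include <vector>

// Picks one entry uniformly at random from a non-empty list.
static const std::string& pickOne(const std::vector<std::string>& options, std::mt19937& rng) {
    std::uniform_int_distribution<std::size_t> dist(0, options.size() - 1);
    return options[dist(rng)];
}

// Builds one portrait prompt from randomized characteristics.
// All word lists below are placeholder examples, not the real vocabulary.
std::string makePortraitPrompt(std::uint32_t seed) {
    static const std::vector<std::string> ages = {"young", "middle-aged", "elderly"};
    static const std::vector<std::string> moods = {"pensive", "joyful", "weary", "defiant"};
    static const std::vector<std::string> styles = {"woodcut print", "ink wash", "linocut"};

    std::mt19937 rng(seed);  // same seed reproduces the same prompt on a given toolchain
    std::string prompt = "portrait of a ";
    prompt += pickOne(ages, rng);
    prompt += ", ";
    prompt += pickOne(moods, rng);
    prompt += " person, ";
    prompt += pickOne(styles, rng);
    return prompt;
}
```

Calling `makePortraitPrompt` with a fresh seed for every image yields a different combination each time, which is why there is no single prompt to share.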

The center panel has striping artifacts, especially on the right-hand side of yellow pixels. It looks like this could be a brief voltage droop. If you know which bits to flip to fix this, please let me know :)

// -- Future Concerns --

This project began in September 2022, when stable-diffusion seemed fun and innocent. I'm concerned that "A.I."-generated images will soon devalue the craft of living, working human artists, and that the effect will be widespread.

"A.I."-generated images seem to be mere style transfers onto compositions and scenes which are fundamentally boring and lifeless. The faces stare back with dead eyes; elements aren't arranged or spaced in ways that are pleasing to our human senses. You can browse libraries of generated images (e.g., lexica dot art) and after the initial shock of "wow, that style is cool," you may feel a sense of monotony creeping in.

Soon these images will be everywhere, and regarded as normal, even desirable. This is a future I don't want to see. I'm thinking about this problem every day.

Zach Archer
