The jetson was originally envisioned as the minimum required to get useful results. The problem is efficientdet was optimized enough on the raspberry pi 4 that a straight port to the jetson would no longer make any difference. To be worth the trouble, the jetson needs to run another model in addition to animal detection.

In the interest of improvement, the journey begins with face recognition. The 1st goog hit was

https://github.com/nwesem/mtcnn_facenet_cpp_tensorRT

The mane problem with this setup is matching the commands with your version of jetpack. It seems nvidia stopped manetaining jetpack for the jetson nano, since the last version for the nano was 4.6 & jetpack from version 5 onward only supports newer boards like the xavier & orin. 1st, tensorflow isn't preinstalled on the jetson, so it has to be installed from nvidia's package index:

pip3 install --pre --extra-index-url https://developer.download.nvidia.com/compute/redist/jp/v46 tensorflow

2nd, the step01_pb_to_uff.py program gives the dreaded AttributeError: module 'tensorflow' has no attribute 'AttrValue'

The funny thing about github is every repository has hundreds of forks. Some of these forks have fixes for various bugs. Another fork

https://github.com/RainbowLinLin/face_recognition_tensorRT

gets through the step01_pb_to_uff.py conversion. After that 1 step, the instructions in this fork seem to work. You put jpg images of faces in the imgs directory & run build/face_recogition_tensorRT. It then dies expecting the nvargus-daemon to provide video frames. Of course, lions don't have a supported camera.

Helas, a port of the truckcam system to the jetson nano using this source code is very platform specific. The days of POSIX & write once run anywhere are long gone. Maybe it could be more modular.

https://github.com/NVIDIA/TensorRT/tree/main/samples/python/efficientdet

Fitting face recognition, efficientdet, & pose estimation on the jetson would best be done with a 64 gig card. To get it into 32 gig, a lot of junk on the jetson has to be deleted.

/usr/lib/libreoffice

/usr/lib/thunderbird

/usr/lib/chromium-browser

/usr/share/doc

/usr/share/icons/
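Before deleting anything, it helps to know how much each of those directories would actually give back. This is a minimal sketch, assuming a stock image where the paths above exist. The function names tree_size & report are made up for illustration. It only measures & never deletes.

```python
import os

# Directories named in the list above. Missing ones are skipped.
CANDIDATES = [
    "/usr/lib/libreoffice",
    "/usr/lib/thunderbird",
    "/usr/lib/chromium-browser",
    "/usr/share/doc",
    "/usr/share/icons",
]


def tree_size(path):
    """Return the total bytes of regular files under path, ignoring symlinks."""
    total = 0
    for root, _dirs, files in os.walk(path, onerror=lambda err: None):
        for name in files:
            full = os.path.join(root, name)
            try:
                if not os.path.islink(full):
                    total += os.path.getsize(full)
            except OSError:
                pass  # file vanished or is unreadable
    return total


def report(paths=CANDIDATES):
    """Print the directories from largest to smallest & return their sizes."""
    sizes = {p: tree_size(p) for p in paths if os.path.isdir(p)}
    for path, size in sorted(sizes.items(), key=lambda kv: -kv[1]):
        print(f"{size / 2**20:8.1f} MiB  {path}")
    return sizes


if __name__ == "__main__":
    report()
```

Running it as root avoids undercounting directories the normal user can't read.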

There's still some belief a jetson with a screen could be practical, so the X stuff remaned, even though the screen was abandoned when pose tracking proved not good enough for rep counting.

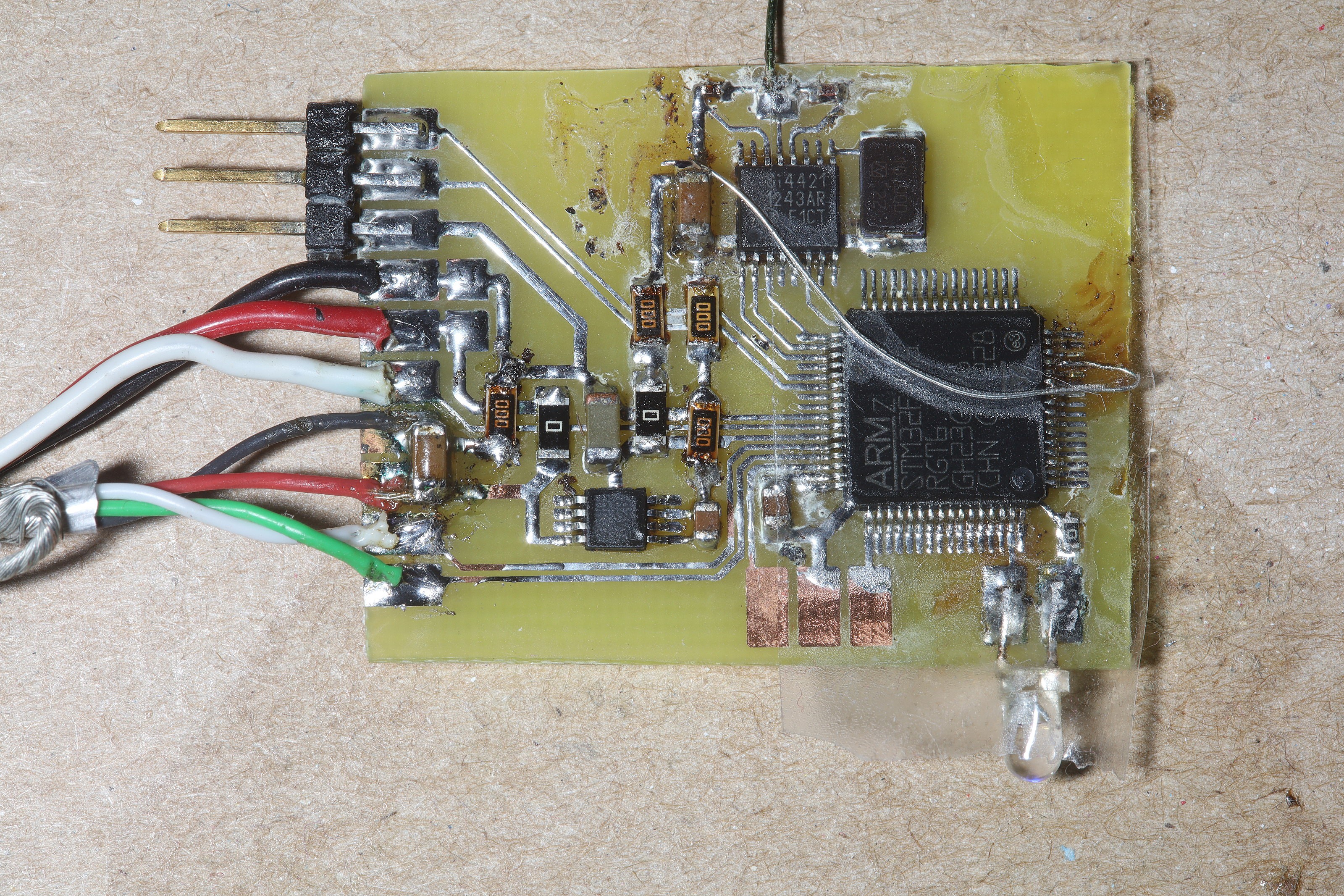

The next decision was the board for controlling the servo from either jetson commands or RF commands.

When the camera panner was on the raspberry pi, it took commands over SPI since it was easy. A single USB cable with servo power & commands would be simpler. The lion kingdom never ported a ttyACM driver to stm32 but did have a raw libusb driver. There were a few ttyACM projects on a PIC. The mane advantages with ttyACM were no need for libusb, simpler initialization & no funky URB callbacks. ttyACM devices are also a lot easier to access in python.

The advantages with libusb were being able to identify the device from its USB ID & having distinct packet boundaries, but that took a lot of code. Multiple ttyACM devices can't be distinguished as easily as under libusb. We're not dealing with multiple devices now.
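Since a ttyACM device is just a byte stream, the packet boundaries libusb gave for free have to be recreated with some kind of framing. What follows is a minimal sketch of one way to do it, not the actual truckcam protocol. The sync code, the 1 byte length & the 8 bit checksum are all assumptions made up for illustration.

```python
SYNC = b"\xff\x2d"  # hypothetical sync code, not from the real firmware


def encode_frame(payload: bytes) -> bytes:
    """Wrap payload as SYNC + length + payload + 8 bit checksum."""
    if len(payload) > 255:
        raise ValueError("payload too long for a 1 byte length")
    body = bytes([len(payload)]) + payload
    checksum = sum(body) & 0xFF
    return SYNC + body + bytes([checksum])


class FrameParser:
    """Reassemble frames from arbitrary chunks of a serial byte stream."""

    def __init__(self):
        self.buf = bytearray()

    def feed(self, data: bytes):
        """Append received bytes & return a list of complete payloads."""
        self.buf += data
        frames = []
        while True:
            start = self.buf.find(SYNC)
            if start < 0:
                # Keep the last byte in case it's half of a sync code.
                del self.buf[:max(0, len(self.buf) - 1)]
                break
            del self.buf[:start]  # drop junk before the sync code
            if len(self.buf) < 3:
                break  # length byte hasn't arrived yet
            length = self.buf[2]
            end = 3 + length + 1  # sync + length + payload + checksum
            if len(self.buf) < end:
                break  # wait for the rest of the frame
            body = self.buf[2:end - 1]
            if (sum(body) & 0xFF) == self.buf[end - 1]:
                frames.append(bytes(body[1:]))
                del self.buf[:end]
            else:
                del self.buf[:1]  # bad checksum: skip this sync & rescan
        return frames
```

On the jetson side, the encoded frame could be written to the device with an ordinary unbuffered binary file opened on something like /dev/ttyACM0. That device name is an assumption & depends on what else is plugged in.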

Kicking off the new servo board was the rediscovery that VDDA is required on the STM32. The redesigned board didn't shrink as much as hoped, so a new jetson nano enclosure is required. The space for the L6234 was filled up by the voltage regulator & all the jumpers.

To use the ttyACM device, /usr/sbin/ModemManager must be disabled or it'll clobber the servo commands. Implementations of a ttyACM device on an STM32 abound. There's an automatic code generator in the IDE which generates a ttyACM device. Nevertheless, it took some debugging to get it to work since the lion kingdom codebase has many artifacts from years of other projects.
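A quick way to confirm ModemManager is really gone before blaming the firmware is to look for it in /proc. This is a minimal sketch for Linux only. The function name find_processes is made up for illustration. On a systemd based image the service can typically be turned off with systemctl disable --now ModemManager, though the unit name is an assumption about the jetson image.

```python
import os


def find_processes(name, proc_root="/proc"):
    """Return the PIDs whose /proc/<pid>/comm matches name."""
    pids = []
    for entry in os.listdir(proc_root):
        if not entry.isdigit():
            continue  # not a process directory
        try:
            with open(os.path.join(proc_root, entry, "comm")) as f:
                if f.read().strip() == name:
                    pids.append(int(entry))
        except OSError:
            continue  # process exited or is unreadable
    return sorted(pids)


if __name__ == "__main__":
    pids = find_processes("ModemManager") if os.path.isdir("/proc") else []
    if pids:
        print("ModemManager is still running, PIDs:", pids)
    else:
        print("ModemManager not found")
```

The kernel truncates comm to 15 characters, which is long enough for ModemManager but not for every process name.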

lion mclionhead