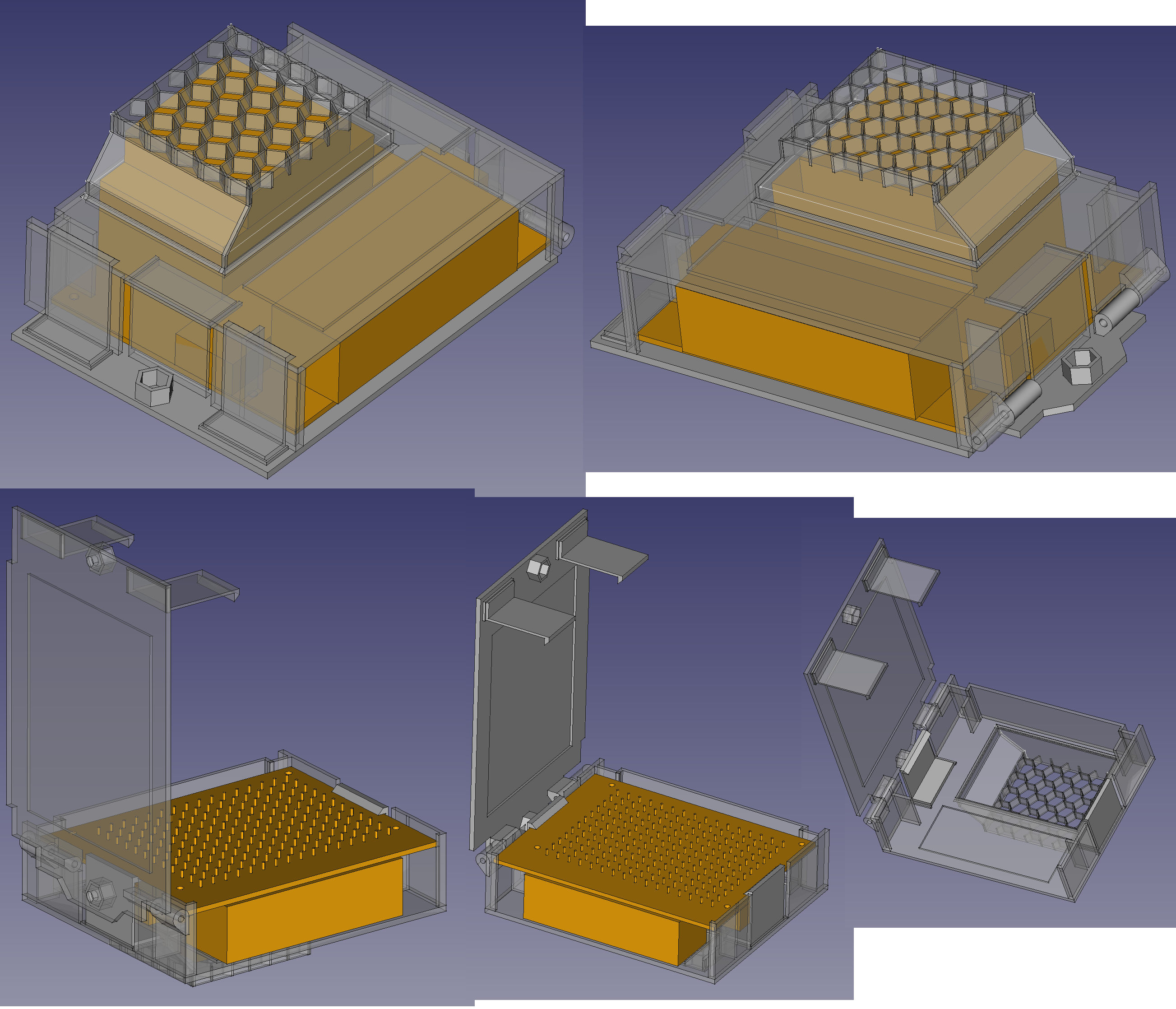

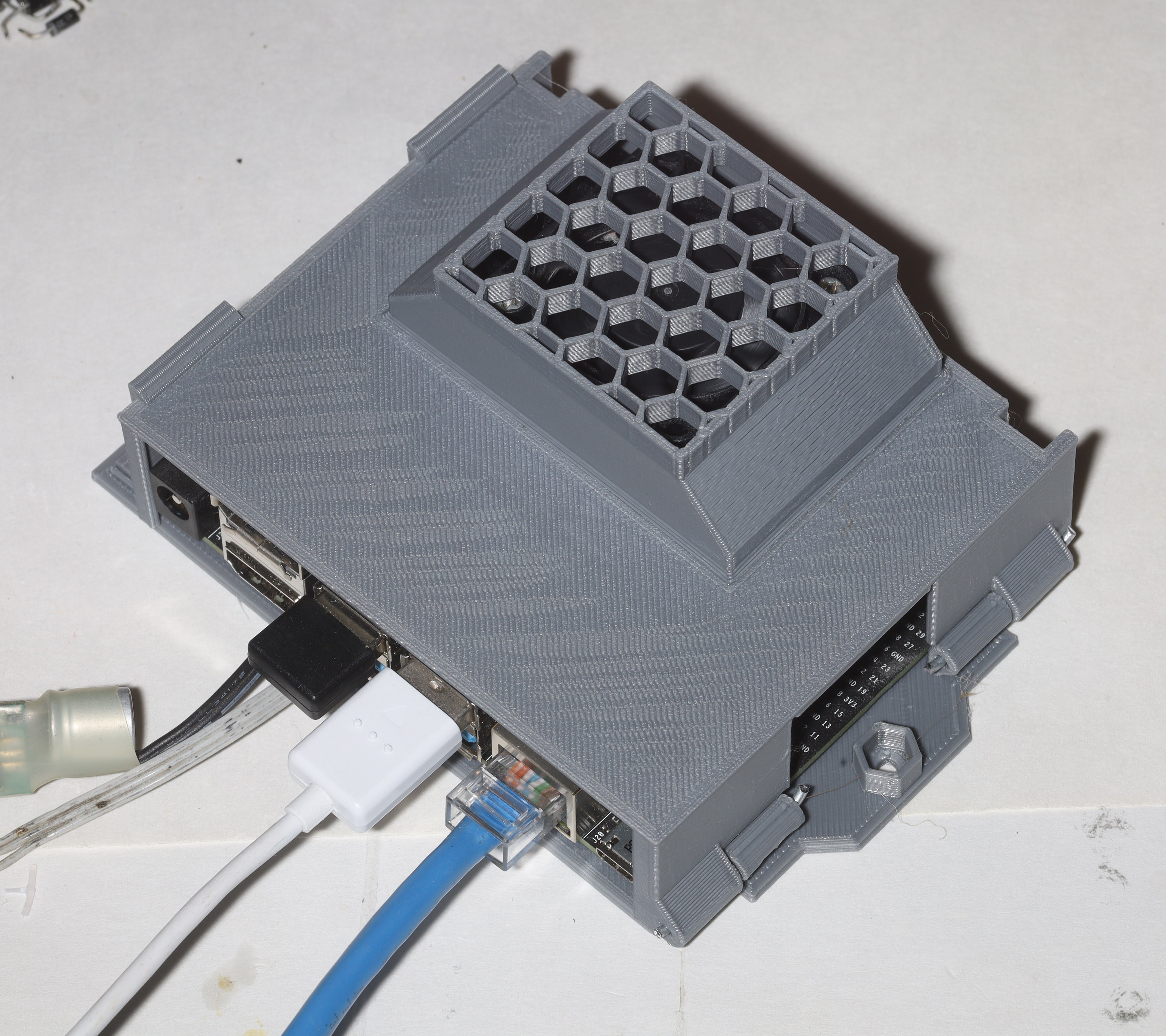

The enclosure was making better progress than the neural network.

It evolved into this double clamp thing where 1 set of clamps holds the jetson in while another set holds the lid closed. There could be 1 more evolution where the lid holds the jetson in on its own. It would require some kind of compressible foam or rubber but it would be tighter. It wouldn't save any space. It could be done without compressible material & just standoffs which were glued in last. The clips being removable make it easy to test both ideas.

This design worked without any inner clips. The jetson wobbles slightly on 1 side. It just needs a blob of hot snot or rubber thing as a standoff on 1 side. The other side is pressed against the power cable. There's enough room inside for the buck converter, but it blocks the airflow. A hole in back could allow the power cable to go out that way & the buck converter to wrap around the back. The hinge wires could use PLA welds.

The air manages to snake around to the side holes. It would be best to have the hinges in the middle & openings in the back. The hinge side could have wide hex grids for more air flow. The clip side could have 1 clip in the middle & wide hex grids where the 2 clips are. There's enough room under the jetson for the clip to go there. This would take more space than the existing side panels.

The existing holes could be widened. A hex grid under the heat sink instead of solid plastic could get rid of the empty space. Another hex grid on top of the USB ports would look cool.

The next move was to try inference on x86 with automl-master/efficientdet/model_inspect.py

python3 model_inspect.py --runmode=infer --model_name=efficientdet-lite0 --ckpt_path=../../efficientdet-lite0-voc/ --hparams=voc_config.yaml --input_image=320.jpg --output_image_dir=.

python3 model_inspect.py --runmode=infer --model_name=efficientdet-lite0 --ckpt_path=../../efficientlion-lite0/ --hparams=../../efficientlion-lite0/config.yaml --input_image=test.jpg --output_image_dir=.

python3 model_inspect.py --runmode=infer --model_name=efficientdet-lite0 --ckpt_path=../../efficientdet-lite0/ --hparams=../../efficientdet-lite0/config.yaml --input_image=320.jpg --output_image_dir=.

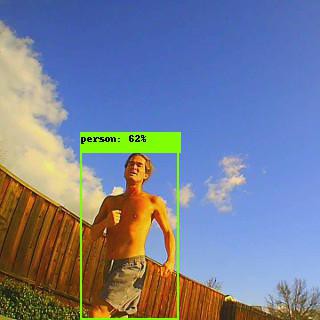

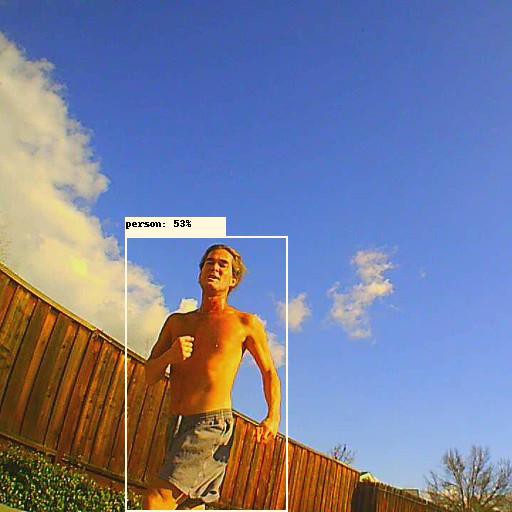

Used a 320x320 input image. None of the home trained models detected anything. Only the pretrained efficientdet-lite0 detected anything.
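The 3 inference runs above differ only in the checkpoint directory, hparams file & input image, so they can be generated from 1 table. A minimal sketch, assuming it's run from the automl-master/efficientdet directory. The helper name `inspect_cmd` is made up for illustration & it only uses the flags already shown above.

```python
import shlex

def inspect_cmd(model_name, ckpt_path, hparams, input_image, output_dir="."):
    """Build the argv for 1 model_inspect.py inference run."""
    return [
        "python3", "model_inspect.py",
        "--runmode=infer",
        f"--model_name={model_name}",
        f"--ckpt_path={ckpt_path}",
        f"--hparams={hparams}",
        f"--input_image={input_image}",
        f"--output_image_dir={output_dir}",
    ]

# (checkpoint dir, hparams file, test image) for the 3 runs above
RUNS = [
    ("../../efficientdet-lite0-voc/", "voc_config.yaml", "320.jpg"),
    ("../../efficientlion-lite0/", "../../efficientlion-lite0/config.yaml", "test.jpg"),
    ("../../efficientdet-lite0/", "../../efficientdet-lite0/config.yaml", "320.jpg"),
]

COMMANDS = [inspect_cmd("efficientdet-lite0", c, h, i) for c, h, i in RUNS]

if __name__ == "__main__":
    for argv in COMMANDS:
        # Only prints the command lines. Pass argv to subprocess.run to execute them.
        print(shlex.join(argv))
```

Keeping the runs in a list makes it harder to test 1 model with the wrong config.yaml by accident.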

Another test with the balky efficientdet-d0 model & train_and_eval mode.

python3 main.py --mode=train_and_eval --train_file_pattern=tfrecord/pascal*.tfrecord --val_file_pattern=tfrecord/pascal*.tfrecord --model_name=efficientdet-d0 --model_dir=../../efficientdet-d0 --ckpt=efficientdet-d0 --train_batch_size=1 --eval_batch_size=1 --num_examples_per_epoch=5717 --num_epochs=50 --hparams=voc_config.yaml

python3 model_inspect.py --runmode=infer --model_name=efficientdet-d0 --ckpt_path=../../efficientdet-d0/ --hparams=../../efficientdet-d0/config.yaml --input_image=lion512.jpg --output_image_dir=.

It detected something, though with lower confidence. The next step was creating the validation dataset for a lion.

PYTHONPATH=. python3 dataset/create_coco_tfrecord.py --image_dir=../../val_lion --object_annotations_file=../../val_lion/instances_val.json --output_file_prefix=../../val_lion/pascal --num_shards=10
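create_coco_tfrecord.py takes its boxes from a COCO style JSON file. A minimal sketch of what an instances_val.json for a single lion class could contain, assuming the standard COCO detection fields. The image name, size & box are invented for illustration & aren't from the real dataset.

```python
import json

def make_coco(images, boxes, category="lion"):
    """images: list of (file_name, width, height).
    boxes: list of (image_index, x, y, w, h) in pixels, COCO style:
    top left corner plus width & height."""
    return {
        "images": [
            {"id": i + 1, "file_name": name, "width": w, "height": h}
            for i, (name, w, h) in enumerate(images)
        ],
        "annotations": [
            {
                "id": j + 1,
                "image_id": img + 1,
                "category_id": 1,
                "bbox": [x, y, w, h],
                "area": w * h,
                "iscrowd": 0,
            }
            for j, (img, x, y, w, h) in enumerate(boxes)
        ],
        "categories": [{"id": 1, "name": category}],
    }

# Hypothetical example: 1 image containing 1 box.
EXAMPLE = make_coco([("lion0001.jpg", 320, 320)], [(0, 40, 60, 120, 200)])

if __name__ == "__main__":
    print(json.dumps(EXAMPLE, indent=2))
```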

Then use the pretrained efficientdet-lite0 as a starting checkpoint.

https://storage.googleapis.com/cloud-tpu-checkpoints/efficientdet/coco/efficientdet-lite0.tgz

Another training command for efficientlion-lite0

python3 main.py --mode=train_and_eval --train_file_pattern=../../train_lion/pascal*.tfrecord --val_file_pattern=../../val_lion/pascal*.tfrecord --model_name=efficientdet-lite0 --model_dir=../../efficientlion-lite0 --ckpt=../../efficientdet-lite0 --train_batch_size=1 --eval_batch_size=1 --num_examples_per_epoch=1000 --eval_samples=100 --num_epochs=300 --hparams=config.yaml

python3 model_inspect.py --runmode=infer --model_name=efficientdet-lite0 --ckpt_path=../../efficientlion-lite0/ --hparams=../../efficientlion-lite0/config.yaml --input_image=320.jpg --output_image_dir=.

Accuracy seemed to improve. Using the pretrained efficientdet-lite0 as the starting checkpoint seems to be required but the validation set shouldn't be. It just takes too long to train on a GTX 970M. The internet recommends the RTX 3060 which would be like going from the lion kingdom's Cyrix 166MHz to its dual 550MHz Celeron.
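To put a number on the training time: with a batch size of 1, every example is 1 step. A back of the envelope sketch, assuming the trainer runs roughly num_examples_per_epoch * num_epochs / train_batch_size steps. The steps per second figure is a placeholder to be replaced by whatever the training log reports, not a measurement.

```python
def total_steps(num_examples_per_epoch, num_epochs, train_batch_size=1):
    """Approximate number of training steps for a run (assumed formula)."""
    return num_examples_per_epoch * num_epochs // train_batch_size

def hours(steps, steps_per_sec):
    """Wall clock hours for a run at a given training speed."""
    return steps / steps_per_sec / 3600

# Flag values from the 2 training commands above.
D0_STEPS = total_steps(5717, 50)     # efficientdet-d0 on the voc dataset
LION_STEPS = total_steps(1000, 300)  # efficientlion-lite0

if __name__ == "__main__":
    PLACEHOLDER_RATE = 2.0  # steps/sec, hypothetical
    for name, steps in (("efficientdet-d0", D0_STEPS),
                        ("efficientlion-lite0", LION_STEPS)):
        print(f"{name}: {steps} steps, "
              f"{hours(steps, PLACEHOLDER_RATE):.1f} hours at {PLACEHOLDER_RATE} steps/sec")
```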

The tensorrt version still didn't detect anything.

The model_inspect.py script also runs on the jetson.

OPENBLAS_CORETYPE=CORTEXA57 python3 model_inspect.py --runmode=infer --model_name=efficientdet-lite0 --ckpt_path=../../efficientlion-lite0/ --hparams=../../efficientlion-lite0/config.yaml --input_image=lion320.jpg --output_image_dir=.

This worked on the jetson, but it required a swap space.
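A quick way to confirm the swap space is really active before starting a run is to read /proc/meminfo. A minimal sketch. The function names are made up for illustration & it only reports what the kernel says, since how much swap model_inspect.py needs wasn't measured.

```python
import os

def parse_meminfo(text):
    """Return {field: kilobytes} from the contents of /proc/meminfo."""
    info = {}
    for line in text.splitlines():
        name, sep, rest = line.partition(":")
        parts = rest.split()
        if sep and parts and parts[0].isdigit():
            info[name.strip()] = int(parts[0])
    return info

def swap_summary(text):
    """Return (total_kb, free_kb) of swap, or (0, 0) if there's none."""
    info = parse_meminfo(text)
    return info.get("SwapTotal", 0), info.get("SwapFree", 0)

if __name__ == "__main__" and os.path.exists("/proc/meminfo"):
    with open("/proc/meminfo") as f:
        total_kb, free_kb = swap_summary(f.read())
    if total_kb == 0:
        print("no swap enabled")
    else:
        print(f"swap: {total_kb // 1024} MB total, {free_kb // 1024} MB free")
```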

The working version seems to use libcudnn but it builds the model procedurally from python source code & the checkpoint weights.

There is a tensorrt demo for python.

cd ~/TensorRT/samples/python/efficientdet

OPENBLAS_CORETYPE=CORTEXA57 python3 infer.py --engine=$HOME/efficentlion-lite0.out/efficientlion-lite0.engine --input=$HOME/lion320.jpg --output=$HOME/0.jpg

Going to the next step & inferring with tensorrt requires cuda bindings for python which were never ported to the jetson nano. pip3 install pycuda just hits a ponderous number of errors. Sadly, pip3 also completely breaks your numpy installation in this process.

Trying to run the onnx model with onnxruntime just fails on the unsupported EfficientNMS operator, which is a tensorrt plugin rather than a standard onnx operator.

The only way to debug might be dumping layers from model_inspect.py

There's still a video of efficientdet running on tensorrt.

Another look at the pretrained efficientdet-lite0 revealed it expected interleaved RGB while truckcam was feeding it planar RGB. truckcam_trt.c was also transposing the output coordinates twice. With those 2 bugs fixed, it started detecting properly, but efficientlion-lite0 & the voc model were still broken.
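For reference, the 2 layouts hold the same bytes in a different order: planar is RRR...GGG...BBB... & interleaved is RGBRGBRGB... truckcam_trt.c is C, but the reshuffle is easier to show in python. A minimal sketch assuming 8 bit RGB. The function name is made up & this isn't the code from truckcam.

```python
def planar_to_interleaved(planar, width, height):
    """Convert an 8 bit planar RGB frame (R plane, G plane, B plane)
    to interleaved RGB (R, G, B for each pixel)."""
    n = width * height
    if len(planar) != 3 * n:
        raise ValueError("expected 3 * width * height bytes")
    out = bytearray(3 * n)
    out[0::3] = planar[0:n]          # red plane -> bytes 0, 3, 6, ...
    out[1::3] = planar[n:2 * n]      # green plane -> bytes 1, 4, 7, ...
    out[2::3] = planar[2 * n:3 * n]  # blue plane -> bytes 2, 5, 8, ...
    return bytes(out)

if __name__ == "__main__":
    # 2x1 test frame: R plane = 1,2  G plane = 3,4  B plane = 5,6
    frame = bytes([1, 2, 3, 4, 5, 6])
    print(list(planar_to_interleaved(frame, 2, 1)))  # [1, 3, 5, 2, 4, 6]
```

Feeding the wrong layout doesn't crash anything. The network just sees a scrambled image, which is why this kind of bug shows up as bad or missing boxes instead of an error.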

Note face detection failed from this camera angle.

lion mclionhead
