It was long believed if model_inspect.py could just test the tensorrt engine, it might narrow down the problem to the input format. Lately started thinking if it could work at all in model_inspect.py with the tensorrt engine, all the inferrence should stay in python & the rest of the program should communicate with shared memory. Python has a shared memory library.

Reviewing model_inspect.py again, all it does it ingest the efficientdet graph & the protobuf files, compile the tensorrt engine on the fly, & benchmark it. The compilation always failed. The way a tensorrt engine is loaded from a file in python is the same way it's done in C++ so it's not going to matter. The problem is the way it's being trained doesn't agree with an operator in tensorrt.

https://github.com/NobuoTsukamoto/benchmarks/blob/main/tensorrt/jetson/detection/README.md

The thing about these benchmarks is they were all done on the pretrained efficientdet checkpoint. It's unlikely anyone has ever gotten a home trained efficientdet to work on jetson nano tensorrt.

Given the difficulty with body_25 detecting trees, it might be worth using efficientdet on libcudnn. It might be possible to run a chroma key on the CPU while efficientdet runs on the GPU. The problem with that is it takes a swap space just to run efficientdet in libcudnn. There's not enough memory to do anything else.

The cheapest improvement might be a bare jetson orin module & plugging it into the nano dock. Helas, the docks are not compatible. The orin's M2 slot is on the opposite side. It doesn't have HDMI. Bolting on 360 cam & doing the tracking offline is going to be vastly cheaper than an orin, but it has to be lower & it needs a lens protector in frame. The weight of 360 cams & the protection for the lenses has always been the limiting factor with those.

The raspberry pi 5 8GB recently started being in stock. That would probably run efficientdet-lite0 just as fast as the jetson nano.

-------------------------------------------------------------------------------------------------------------------------------------------------

Efforts turned to making model_inspect.py some kind of image server & just feeding it frames through a socket. model_inspect.py has an option to export a saved model. Maybe that would start it faster & use less memory.

root@antiope:/root/automl/efficientdet% OPENBLAS_CORETYPE=CORTEXA57 python3 model_inspect.py --runmode=saved_model --model_name=efficientdet-lite0 --ckpt_path=../../efficientlion-lite0.1/ --hparams=../../efficientlion-lite0.1/config.yaml --saved_model_dir=../../efficientlion-lite0.saved

Then perform inference using the saved model

root@antiope:/root/automl/efficientdet% OPENBLAS_CORETYPE=CORTEXA57 python3 model_inspect.py --runmode=saved_model_infer --saved_model_dir=../../efficientlion-lite0.saved --input_image=../../truckcam/lion320.jpg --output_image_dir=.

Helas, no difference in loading time or memory. It takes 3 minutes to start, which isn't practical in the field. Suspect most of that is spent swapping to a USB flash. The tensorRT version of efficientdet takes 20 seconds to load.

There is another inference program which uses a frozen model:

root@antiope:/root/automl/efficientdet/tf2% time OPENBLAS_CORETYPE=CORTEXA57 python3 inspector.py --mode=infer --model_name=efficientdet-lite0 --saved_model_dir=/root/efficientlion-lite0.out/efficientdet-lite0_frozen.pb --input_image=/root/truckcam/lion320c.jpg --output_image_dir=.

This takes 43 seconds to start & burns 2 gigs of RAM but the frame rate was only 10.5fps. body_25 on tensorrt did 6.5fps & efficientdet_lite0 on the raspberry pi 4 did 8.8fps. It could possibly do chroma keying in the CPU while efficientdet ran in the GPU.

------------------------------------------------------------------------------------------------------------------------------------------------------

Had to retrain new models since what was left after 2023 was no longer working. Notes about training:

https://hackaday.io/project/190480/log/221560-death-of-efficientdet-lite

https://hackaday.io/project/190480-jetson-tracking-cam/log/221020-efficientdet-dataset-hack

root@gpu:~/nn/yolov5# source YoloV5_VirEnv/bin/activate

root@gpu:~/nn/yolov5# python3 label.py

create training images:

root@gpu:~/nn/automl-master/efficientdet# PYTHONPATH=. python3 dataset/create_coco_tfrecord.py --image_dir=../../train_lion --object_annotations_file=../../train_lion/instances_train.json --output_file_prefix=../../train_lion/pascal --num_shards=10

create validation images:

root@gpu:~/nn/automl-master/efficientdet# PYTHONPATH=. python3 dataset/create_coco_tfrecord.py --image_dir=../../val_lion --object_annotations_file=../../val_lion/instances_val.json --output_file_prefix=../../val_lion/pascal --num_shards=10

It must be trained with a starting checkpoint or it won't detect anything. Train with a starting checkpoint specified by --ckpt because the model size won't match

root@gpu:~/nn/automl-master/efficientdet# time python3 main.py --mode=train --train_file_pattern=../../train_lion/pascal*.tfrecord --model_name=efficientdet-lite0 --model_dir=../../efficientlion-lite0 --ckpt=../../efficientdet-lite0 --train_batch_size=1 --num_examples_per_epoch=1000 --num_epochs=200 --hparams=config.yaml

Resume training without starting checkpoint:

root@gpu:~/nn/automl-master/efficientdet# time python3 main.py --mode=train --train_file_pattern=../../train_lion/pascal*.tfrecord --model_name=efficientdet-lite0 --model_dir=../../efficientlion-lite0 --train_batch_size=1 --num_examples_per_epoch=1000 --num_epochs=300 --hparams=config.yaml

Test inference on PC:

root@gpu:/root/nn/automl-master/efficientdet% time python3 model_inspect.py --runmode=infer --model_name=efficientdet-lite0 --ckpt_path=../../efficientlion-lite0/ --hparams=../../efficientlion-lite0/config.yaml --input_image=lion320c.jpg --output_image_dir=.

Create frozen model on a PC:

root@gpu:~/nn/automl-master/efficientdet/tf2# PYTHONPATH=.:.. python3 inspector.py --mode=export --model_name=efficientdet-lite0 --model_dir=../../../efficientlion-lite0/ --saved_model_dir=../../../efficientlion-lite0.out --hparams=../../../efficientlion-lite0/config.yaml

Convert to ONNX on jetson nano:

root@antiope:/root/TensorRT/samples/python/efficientdet% time OPENBLAS_CORETYPE=CORTEXA57 python3 create_onnx.py --input_size="320,320" --saved_model=/root/efficientlion-lite0.out --onnx=/root/efficientlion-lite0.out/efficientlion-lite0.onnx

Had bad feelings about this warning but it might only apply to using the onnx runtime.

Warning: Unsupported operator EfficientNMS_TRT. No schema registered for this operator.

Warning: Unsupported operator EfficientNMS_TRT. No schema registered for this operator.

Convert to tensorrt on jetson nano:

time /usr/src/tensorrt/bin/trtexec --fp16 --workspace=2048 --onnx=/root/efficientlion-lite0.out/efficientlion-lite0.onnx --saveEngine=/root/efficientlion-lite0.out/efficientlion-lite0.engine

Test inference on jetson nano:

root@antiope:/root/automl/efficientdet% swapon /dev/sda1

root@antiope:/root/automl/efficientdet% OPENBLAS_CORETYPE=CORTEXA57 python3 model_inspect.py --runmode=infer --model_name=efficientdet-lite0 --ckpt_path=../../efficientlion-lite0/ --hparams=../../efficientlion-lite0/config.yaml --input_image=../../truckcam/lion320c.jpg --output_image_dir=.

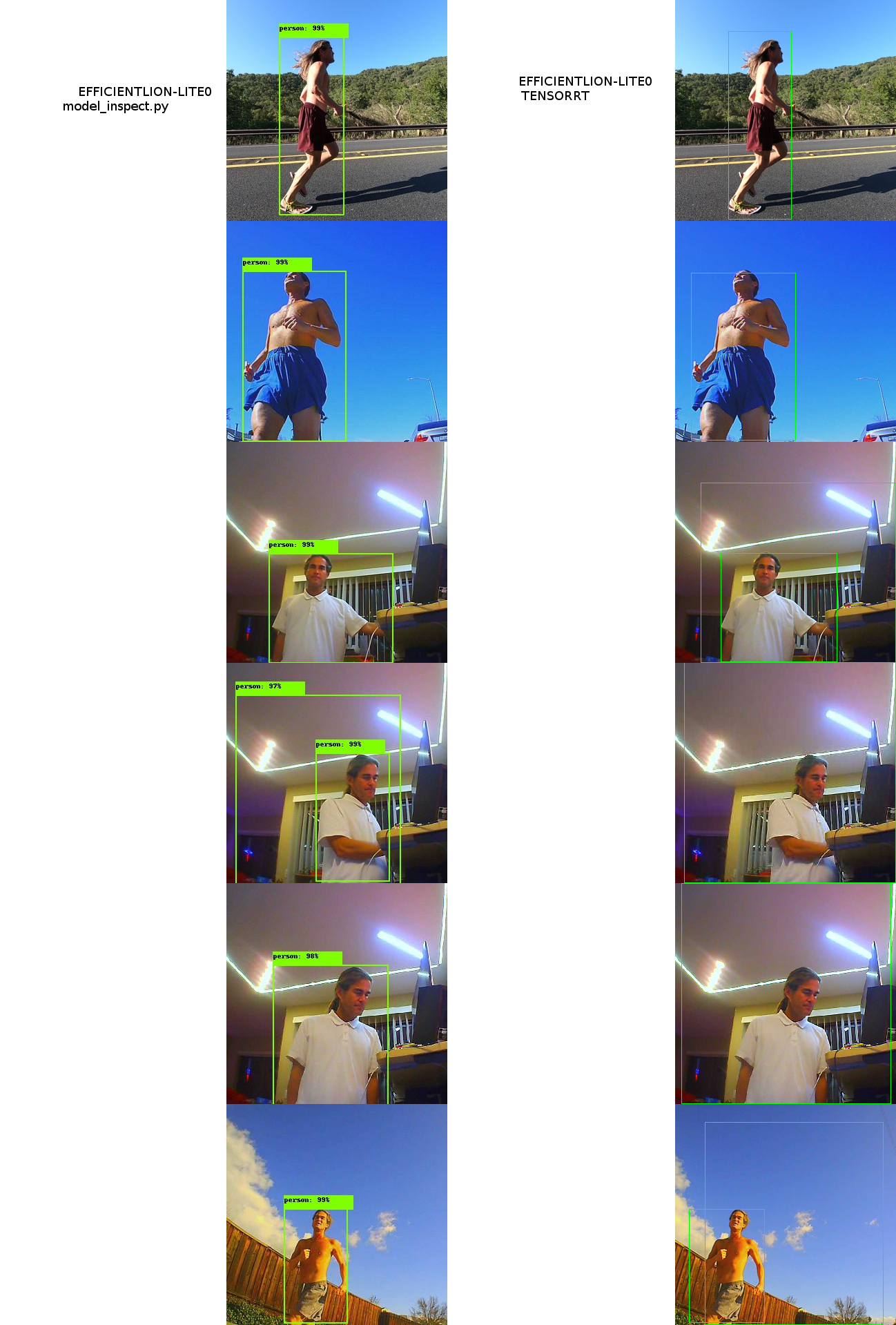

Ran another comparison of efficientdets & still found the cudnn backend working while the tensorrt backend was bad. Fenegling the min & max pixel values got tensorrt pretty close in the outdoor images but still failing on the indoor images. --noTF32 & --fp16 had the same errors.

The smoking gun in this has always been the goog model giving the same result in tensorrt while the lion one does not. Neither of the lion back ends are great indoors because the training data has no indoor images. The lion back ends seem pretty close in outdoor images but the tensorrt version falls apart in indoor images. There are slight improvements when using linear interpolation instead of nearest neighbor. The percentages are different when the goog model is run on tensorrt. 1 thing that might work is taking the overlapping area of all the boxes. Something about the training data is throwing off the lion model.

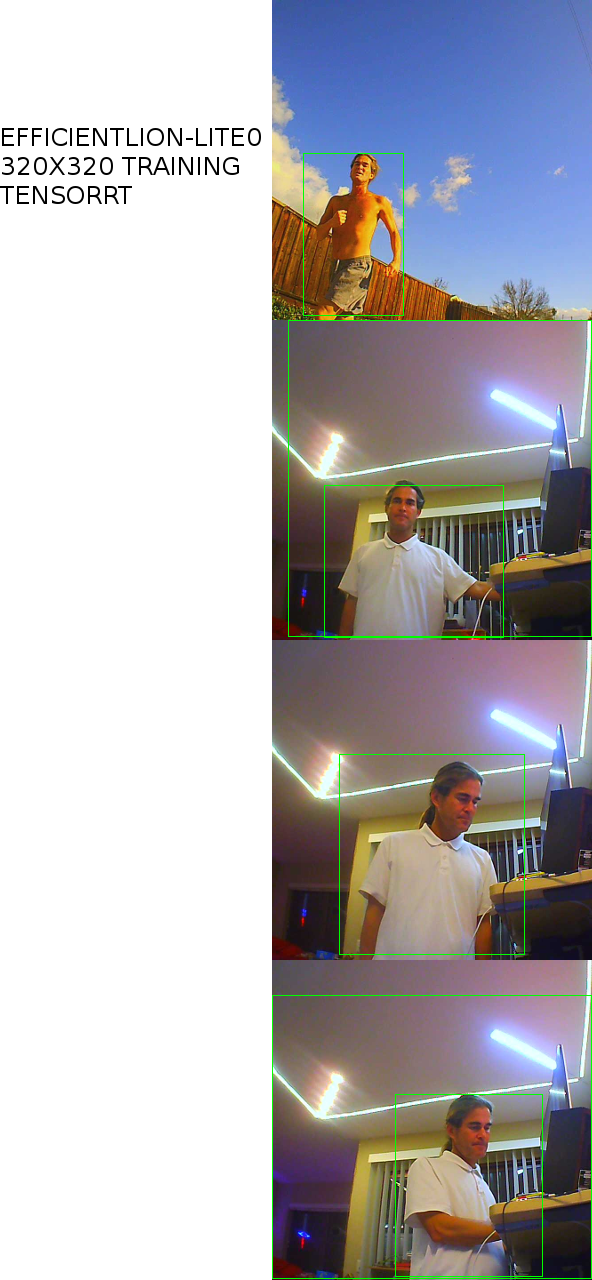

Tried training it with all the training images cropped to 320x320. Young lion didn't perform this test but did a failed test with animorphic aspect ratio.

That got it to detect all the trouble images within a somewhat reasonable error. The glitch boxes could probably be filtered out.

----------------------------------------------------------------------------------------------------------------------------------------------------------------

After all the fuss about tracking software, a much simpler camera system started gaining favor.

A rigid camera on a leash got better & better. It gives the most desirable angle. The mane flaw is it can't do any panning moves. A static camera angle is really boring.

lion mclionhead

lion mclionhead

Discussions

Become a Hackaday.io Member

Create an account to leave a comment. Already have an account? Log In.