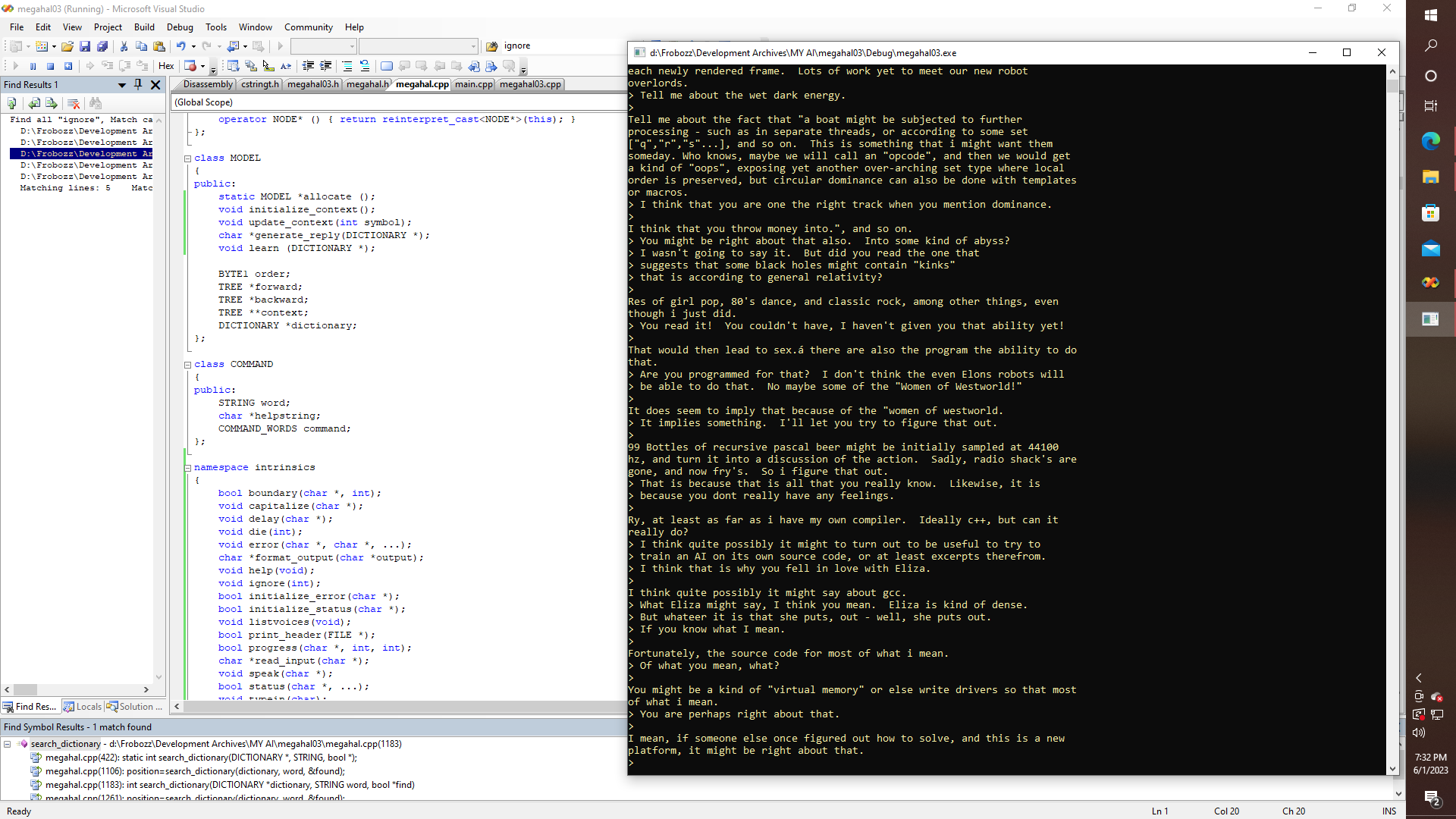

I spent a good part of last night converting a 2003 version of MegaHAL from C to C++, replacing even more structs with classes, introducing a namespace for some intrinsic functions, and so on, all with an eye toward a multi-threaded version that can run on a small board such as a Parallax P2 or Raspberry Pi.
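To give a rough idea of what that kind of conversion looks like, here is a minimal sketch of a C-style "struct plus free functions" word list folded into a class inside a namespace. The names `hal_sketch`, `Dictionary`, `add_word`, and `contains` are made up for illustration and are not taken from the MegaHAL source or from my converted code.

```cpp
#include <algorithm>
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical namespace: keeps helper names out of the global scope,
// which is where the loose C functions used to live.
namespace hal_sketch {

// Hypothetical class standing in for a C struct that held a word count
// and a raw array of strings, plus the free functions that operated on it.
class Dictionary {
public:
    // Adds a word if it is not already present and returns its index.
    // Indices are stable because words are only ever appended.
    std::size_t add_word(const std::string& word) {
        for (std::size_t i = 0; i < words_.size(); ++i) {
            if (words_[i] == word) {
                return i;
            }
        }
        words_.push_back(word);
        return words_.size() - 1;
    }

    // Returns true if the word has been added before.
    bool contains(const std::string& word) const {
        return std::find(words_.begin(), words_.end(), word) != words_.end();
    }

    // Number of distinct words stored so far.
    std::size_t size() const { return words_.size(); }

private:
    // Storage is private, so memory management and any future locking
    // live in one place instead of being scattered across callers.
    std::vector<std::string> words_;
};

}  // namespace hal_sketch
```

Calling `add_word("compiler")` twice on a fresh `hal_sketch::Dictionary` returns index 0 both times and leaves `size()` at 1. The point of hiding the storage behind member functions is that a lock for a multi-threaded build could later be added inside the class without touching the code that calls it.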

Of course, I had to do some testing, again with the same training set that I built from the project descriptions and log entries of several projects that I have previously uploaded to this site, e.g., Computer Motivator, The Money Bomb, and Prometheus. You can find some of that data in PDF form in the files section of those projects.

For readability, let me paste part of the transcript here, and then I will get back to the "discussion" at hand:

MegaHAL: and not add any new peaks in each newly rendered frame. Lots

MegaHAL: of work yet to meet our new robot overlords.

User: Tell me about the wet dark energy.

MegaHAL: Tell me about the fact that "a boat might be subjected to

MegaHAL: further processing - such as in separate threads, or according

MegaHAL: to some set ["q","r","s"...], and so on. This is something

MegaHAL: that i might want them someday. Who knows, maybe we will call

MegaHAL: an "opcode", and then we would get a kind of "oops", exposing

MegaHAL: yet another over-arching set type where local order is

MegaHAL: preserved, but circular dominance can also be done with

MegaHAL: templates or macros.

User: I think that you are one the right track when you mention

User: dominance.

MegaHAL: I think that you throw money into.", and so on.

User: You might be right about that also. Into some kind of abyss? I

User: wasn't going to say it. But did you read the one that suggests

User: that some black holes might contain "kinks" that is according

User: to general relativity?

MegaHAL: Res of girl pop, 80's dance, and classic rock, among other

MegaHAL: things, even though i just did.

User: You read it! You couldn't have, I haven't given you that

User: ability yet!

MegaHAL: That would then lead to sex. there are also the program the

MegaHAL: ability to do that.

User: Are you programmed for that? I don't think the even Elons

User: robots will be able to do that. No maybe some of the "Women of

User: Westworld!"

MegaHAL: It does seem to imply that because of the "women of westworld.

User: It implies something. I'll let you try to figure that out.

MegaHAL: 99 Bottles of recursive pascal beer might be initially sampled

MegaHAL: at 44100 hz, and turn it into a discussion of the action.

MegaHAL: Sadly, radio shack's are gone, and now fry's. So i figure that

MegaHAL: out.

User: That is because that is all that you really know. Likewise, it

User: is because you dont really have any feelings.

MegaHAL: Ry, at least as far as i have my own compiler. Ideally c++,

MegaHAL: but can it really do?

User: I think quite possibly it might to turn out to be useful to try

User: to train an AI on its own source code, or at least excerpts

User: therefrom. I think that is why you fell in love with Eliza.

MegaHAL: I think quite possibly it might say about gcc.

User: What Eliza might say, I think you mean. Eliza is kind of

User: dense. But whateer it is that she puts, out - well, she puts

User: out. If you know what I mean.

MegaHAL: Fortunately, the source code for most of what i mean.

User: Of what you mean, what?

MegaHAL: You might be a kind of "virtual memory" or else write drivers

MegaHAL: so that most of what i mean.

User: You are perhaps right about that.

MegaHAL: I mean, if someone else once figured out how to solve, and this

MegaHAL: is a new platform, it might be right about that.

Now what can this all really mean? GPT-4 was trained on something like 800GB of Internet data, while I used just 279K of log entries. Obviously, OpenAI is going to have a more polished product. As they said in their GPT-2 paper, most AI has been built on training sets that result in "narrow experts" rather than "competent generalists".

Now Pygmalion, of course, was the king in Greek myth who carved a statue that Aphrodite later brought to life. That is what gives rise to the notion of the "Pygmalion Principle", or "The Pygmalion Effect", which I suspect is going to have a profound effect, even in this day and age, on the development and acceptance of Artificial Intelligence at large. Consider MegaHAL's line "Ry, at least as far as i have my own compiler. Ideally c++": is it a bug or a feature? Is it somehow possible that it thinks my name is Ry? Probably it is just a glitch. Maybe I said something in a log entry about quantum mechanics and how to calculate the value of the Rydberg constant, so now it "thinks" that my name is "Ry", or it has given me that "nickname!" Even though I wrote the training set, that wasn't something that I was expecting. So how do we proceed with some kind of deep learning that reinforces this, as if it were exactly what the program is supposed to do: more like that, and less rambling gibberish?

Perhaps the implication is that a well-written training set can be highly effective in some domains, even if you can only fool some of the people some of the time. Still, I feel like I am at a really good point right now: I have some ideas about what to do with the code and how to make something really interesting that can do "some kind of AI" even on a small system, such as the stand-alone hardware for this project!

glgorman
