Shortcuts: Too Much Text, No Time

In case you've got no time, I hope the following two diagrams answer all the "geeky" questions you have in mind.

Overall HW Architecture:

High-Level Operational Features (October 2023):

Project Logs Shortcuts:

- High Level Architecture Design

- Hardware Feature Deeper Dive

- High Level Operation Functionalities

- Operation Passive Mode: a Keyboard

- Operation Generative Mode: Informative

- Operation Generative Mode: Creative

- Operation Generative Mode: Suggestive

- Operation Generative Mode: Illustrative

- Operation Generative Mode: Educative

- Development: GUI

- Development: AI Avatar

- Development: Enclosure

The Rationale

ChatGPT and the whole generative AI scene? Now that's some seriously mind-boggling stuff, isn't it? The buzz around these things is off the charts, and it's no wonder why. I mean, think about it – we're talking about AI that can spin out text and creations like pure magic. It's like having a digital wizard by our side, whipping up ideas and content that we never thought possible.

I'm far from an expert in this field, but ChatGPT, despite its marvels, comes with a handful of limitations. It's like the forgetful friend who can't hold onto a variety of past conversations, lacking that personal touch that comes from remembering details. Contextual awareness is hit-and-miss, leaving us with moments of confusion akin to talking to someone who wasn't quite paying attention. And let's not forget the awkwardness of reading text that doesn't quite capture our unique style – it's like wearing a suit that's one size too big or too small. So, while it's impressive, it's got a way to go before it truly becomes our digital twin.

That's where prompt engineering kicks in – it's our way of telling the AI exactly what we want in a language it gets. We're basically giving it a nudge in the right direction, so it doesn't go off on some wild tangent. It's like we're talking to a friend who's super smart but occasionally gets things hilariously wrong.

"The use of generative AI can altogether succeed or fail based on the prompt that you enter" - Source

So, having said that, my project's main "Research questions" are more like:

- How can we whip up an open-source hardware wizard to help us "prompt through" the imperfections of Generative AI?

- What's the coolest hardware to slot in without messing up our groove?

- Can we make the variable prompts adapt like chameleons to match our intended context?

The Hypothesis

Introducing Generative kAiboard

Wait, hold-on-a-minute, a keyboard? Yes, let me explain.

When you think about it, there is actually a lot of similarity between a generative (text) AI such as ChatGPT and a keyboard. They both generate text! While ChatGPT relies on a pretrained large language model, our keyboard relies on our brain to convert our thoughts from the analog domain into the digital realm. In fact, you are probably holding one as you read this. But of course, the keyboard as we mostly use it today is just an extension of us, a tool, nothing more. What if we could make it more intelligent, make it a complement, make it a part of us as we surf through the age of generative AI?

Just think about it for a sec: if I threw this question at you – who's the real expert when it comes to your writing style, the handpicked words you dig, when you usually kick off your day, and how long you grind on stuff? It's gotta be your trusty keyboard, right? I mean, let's pretend it's all "smart enough" just to play around with the idea. In my book, I'm all in on the notion that our keyboards have some untapped superpowers. And you know what cranks up the excitement? The cool new trend of prompt engineering has cranked this whole concept up a notch.

And here's the kicker: we're talking about a keyboard here, so it won't mess with your groove, if you catch my drift. This ain't an extra gadget you gotta drag around, or some software headache that needs installing and babysitting – it's just a good ol' keyboard. You know, that thing you kinda take for granted, sitting on your work desk at the office or your home setup. The real gem is making this a sidekick instead of just another needy gadget shouting for your precious attention.

The Goal

Before we start getting all nerdy with the technical nitty-gritty, let me say a few things about the goals. And I'm not just talking about the project goals from a tech perspective – I mean, those are important too. But what really takes the spotlight is the personal aim, the push, the reason why I jumped into this project in the first place – to me that's what holds the greatest significance.

I mean, man, can you believe it? Almost ten whole years have zipped by like it was nothing. Feels like just a blink ago when I first ran into Mike Szczys at Maker Faire Bay Area back in 2014. I was hustling on my Kickstarter gig with Phoenard, and there he was, interviewing me like a pro. A few months ago I was quite shocked to realize that the Hackaday Prize is hitting its tenth round now! I can still picture myself at that Hackaday meetup in Munich, back when SatNOGS snagged the crown as the first winner. What a blast it was, meeting the whole Hackaday crew in person and soaking in the energy.

Oh, and speaking of time flying, it's as if I blinked and it was yesterday when I whipped up the Fsociety Keyboard back in 2016. You won't believe it – that keyboard is still going strong, and I use it daily. It's crazy how these moments can blossom into something way bigger than you ever thought possible.

Fast-forward to the present, man, a bunch of stuff has changed, both in my own life and in the big wide world. I've just hit a new decade of age, and somehow that coincides with the Hackaday Prize's 10th edition. It's got me thinking – why not make this milestone really mean something, you know? I want to mark this point in my life with something seriously special. A project that'll be like a reminder, not just of how far I've come, but of all the things that get me pumped and the awesome community that's had my back through it all. Something that, down the road, will quietly remind me of this exact milestone in my life.

Crossing my fingers and all, I'm really hoping the Generative kAiboard project delivers on its promises.

The Ultimate Goal

And you know what? Apart from all that non-techie chatter I threw out there earlier, the ultimate goal is to create something that really sticks. I'm talking about a project that captures today's tech scene, rides the hype wave, and ventures into uncharted territory (at least from what I've seen). Can you believe it? Just a few weeks back, if you punched in "Generative kAiboard" on Google, you'd get absolutely nada. Here's to hoping those words catch on like wildfire sooner rather than later.

Moreover, my main gig isn't just about spreading the word, churning out a bunch of these projects, or making a quick buck. It's all about sparking up a new idea, finding a fresh use-case that hasn't been explored yet. So, all the design files for this project? They're wide open for you to dive into, size up against your own thoughts, give them a good critique, and maybe even throw in your two cents.

Rather than cranking out a bunch of copy-paste physical samples, what I'm really excited about is building an open playground, a platform where folks can grab onto the concept, the hardware bits, the software tricks, and make 'em dance in their own setups.

Now, about the current prototype – to me, it's like the embodiment of the idea in physical form. Stuffed with features that are bound to turn heads, give that "wow" factor, but let's be real, not everything that glitters is gold. It might not be everyone's cup of tea, and that's perfectly cool. So, I'm throwing the whole nitty-gritty of my project right here on hackaday.io. By doing that, I'm crossing my fingers and hoping I'm taking a step closer to nailing down what I'm really aiming for.

The Embodiment

Enough chit-chat. From this point on I'm going to provide more extensive details of the current version of Generative kAiboard. This is the first HW version, so let's call it GKV1. And to be clear, I consider the HW of this first revision complete and finished; however, the software part (the logic, the prompt classification, etc.) is still pretty much a work in progress.

In this project details section, I'm mainly covering "The WHAT" part of the project: what exactly the current features are and what they are for. "The HOW" I'll describe in more detail in the build-log section of this project page. Furthermore, "The WHY" of the project, such as the design decisions and why it is the way it is, should be covered in the project log.

The Video

Perhaps before reading too far into the details, you can watch the video below to see how the GKV1 should work. It is far from ready, but I'm sure it already gives you an idea.

Technical Specification Summary

- Primary uController: STM32F429

- Co-uController: ESP32-WROOM-DA

- Graphics-uController & Display: Nextion NX8048P050-011C

- Network Controller: Wiznet W5300 (10/100-base-T, 16-bit parallel interface)

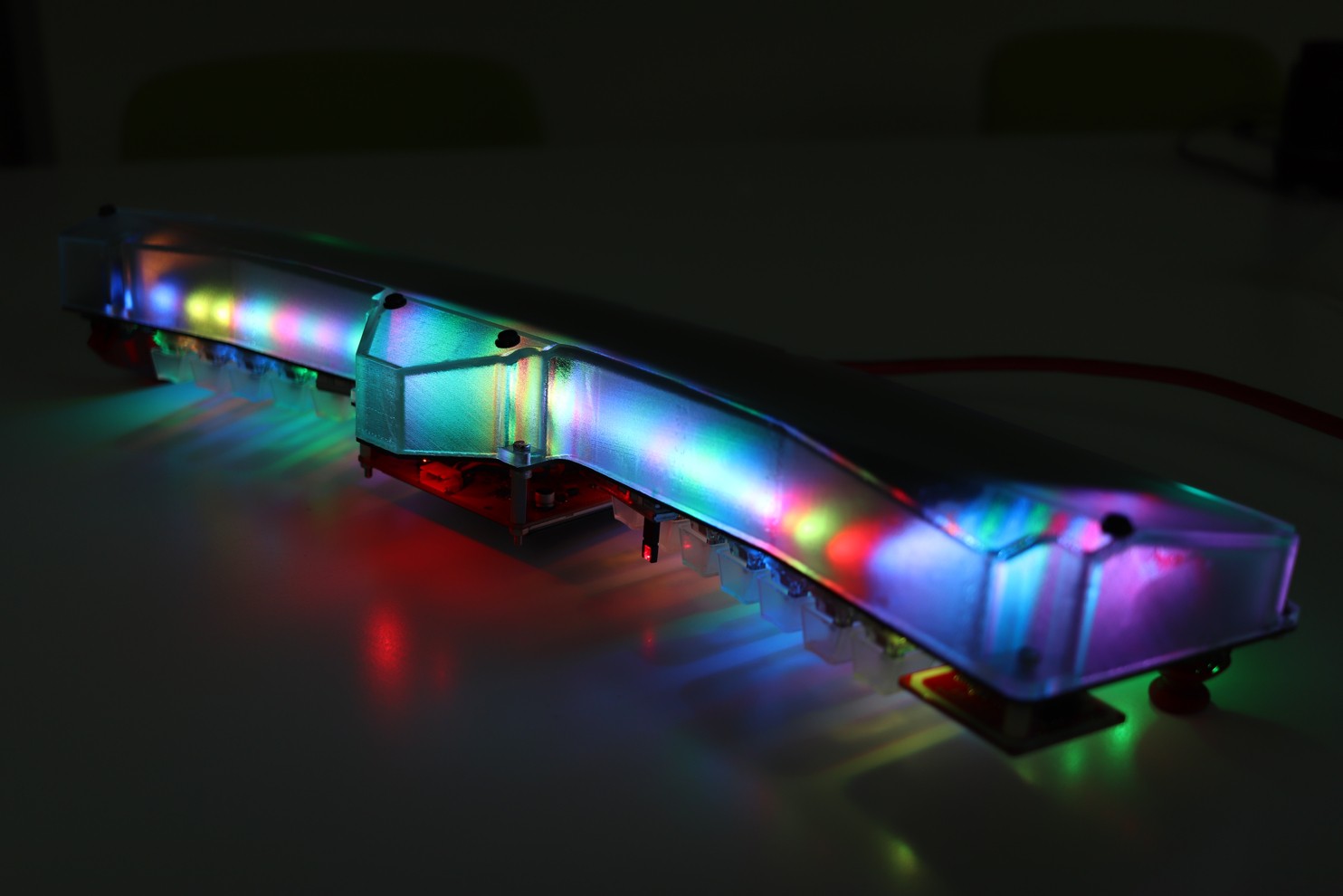

- Keyboard layout: Split symmetrical, 15 degrees angle

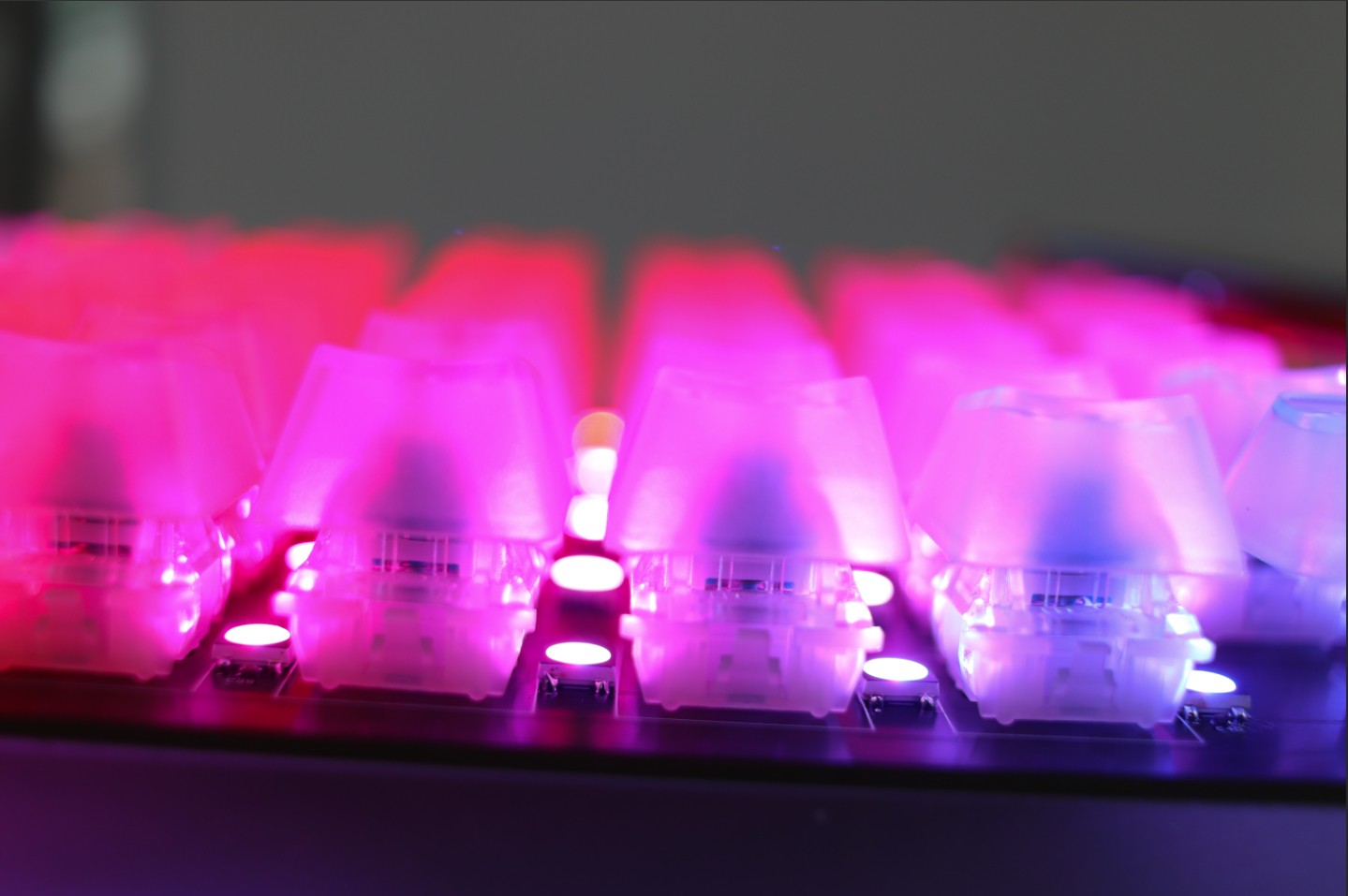

- Keyboard Switch: 70 x Cherry MX (currently a mix of Blue and Brown)

- Power: Power-over-ethernet 802.3at compliant (25W max)

- Display: 5 Inch with capacitive touch screen

- LED: 80 x WS2812b on the top, 60 x WS2812b on the side

- Sensor: 8x8 multi-zone time-of-flight sensor

- EEPROM: External 1Mbit I2C

- Peripherals: 2 x 2-axis joystick + button

- Peripherals: NFC Tag reader PN532

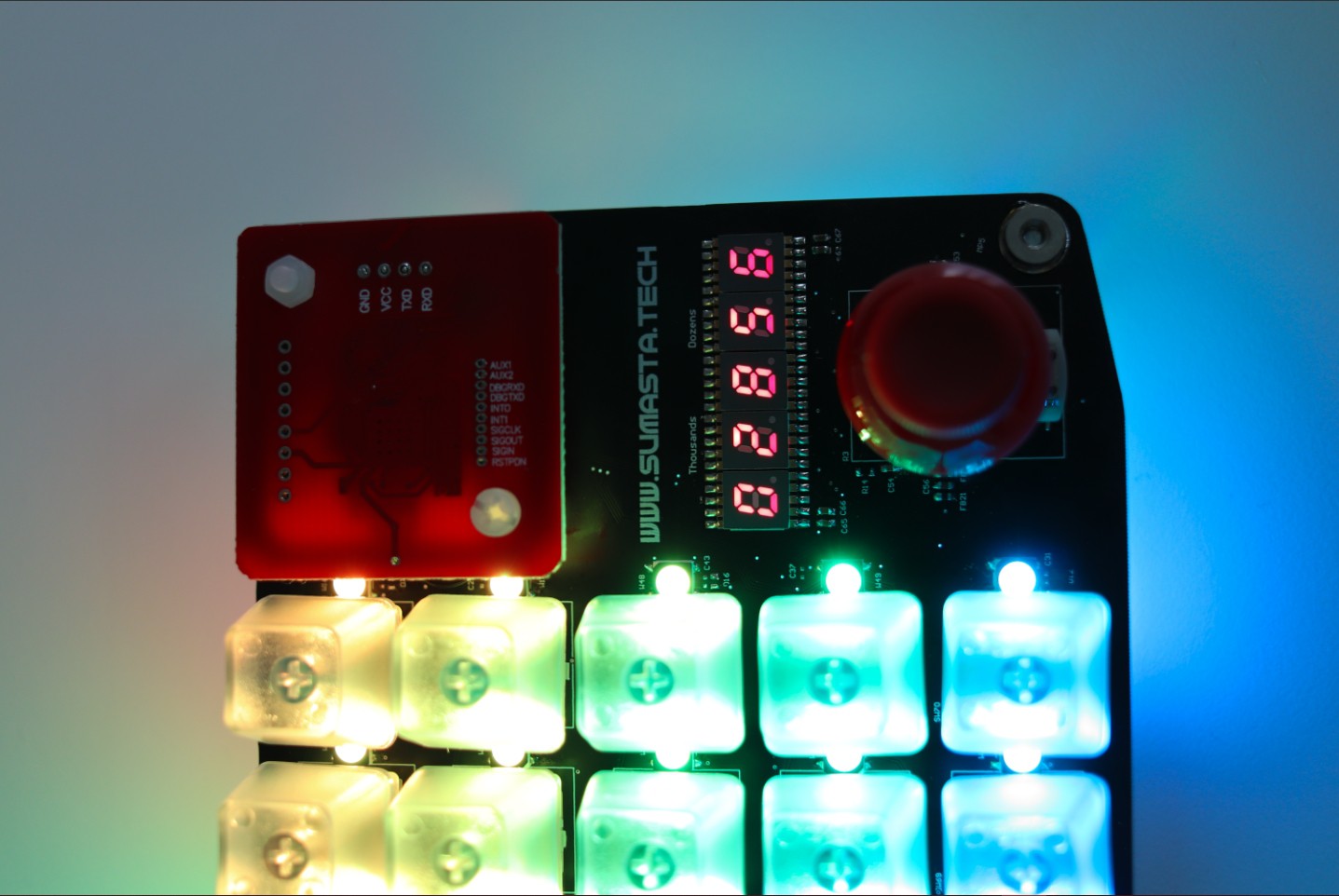

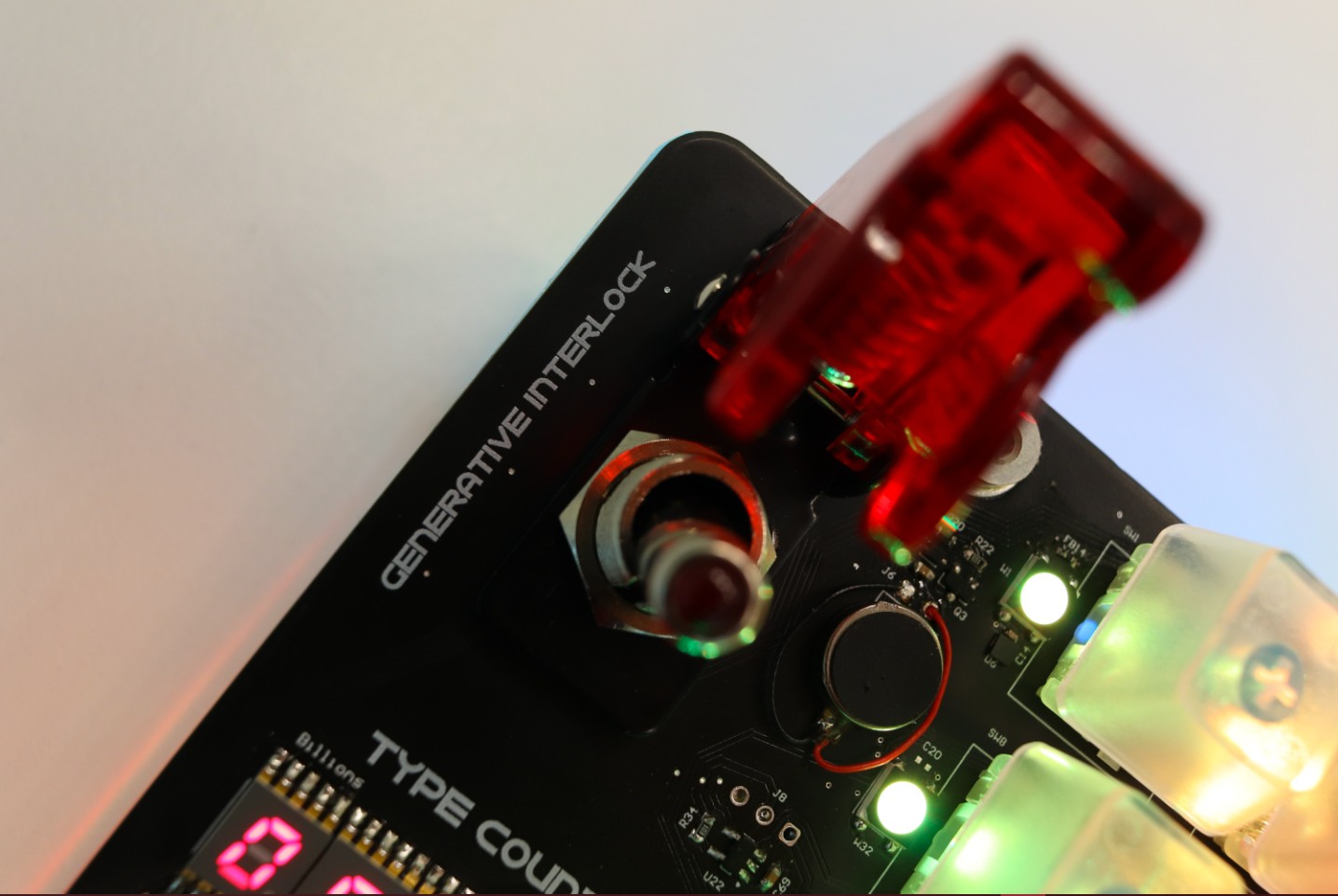

- Peripherals: 10-digit type counter (up to 9.99 billion)

- Peripherals: Physical Interlock Switch

- Peripherals: 2 x Potentiometer Slider

- Peripherals: 1 x Mono speaker

- Peripherals: 2 x Vibration motors on each side.

- Programmability: ST-Link for Primary uController via USB-C

- Programmability: CP2104 USB-to-UART for ESP32 via USB-C

- Dimensions: ~500 x 150mm

- Housing: 3D printed translucent design
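The spec list pairs 140 WS2812b LEDs with a 25W PoE budget, so a quick back-of-the-envelope check is useful. The sketch below is only an estimate: the 5V supply, the 60mA per LED at full white, and the 5W reserved for everything else are assumptions for illustration, not measurements from the GKV1, and converter losses are ignored.

```python
# Rough LED power budget for the GKV1.
# LED counts and the PoE budget come from the spec list above.
# The electrical figures are ASSUMPTIONS, not measured values.
LED_COUNT_TOP = 80          # WS2812b on the top (spec list)
LED_COUNT_SIDE = 60         # WS2812b on the side (spec list)
POE_BUDGET_W = 25.0         # 802.3at budget (spec list)

LED_SUPPLY_V = 5.0          # assumed LED supply voltage
LED_FULL_WHITE_A = 0.060    # assumed worst-case current per LED at full white
OTHER_LOAD_W = 5.0          # assumed placeholder for MCUs, display, speaker, etc.


def led_power_w(count, brightness=1.0):
    """Estimated LED power in watts at a global brightness between 0 and 1."""
    return count * LED_FULL_WHITE_A * LED_SUPPLY_V * brightness


def max_brightness(count, budget_w, other_w):
    """Largest global brightness (0 to 1) that keeps full-white LEDs within budget."""
    available_w = max(budget_w - other_w, 0.0)
    return min(1.0, available_w / led_power_w(count))


if __name__ == "__main__":
    total_leds = LED_COUNT_TOP + LED_COUNT_SIDE
    print(f"All LEDs at full white: {led_power_w(total_leds):.1f} W")
    cap = max_brightness(total_leds, POE_BUDGET_W, OTHER_LOAD_W)
    print(f"Brightness cap under these assumptions: {cap:.0%}")
```

Under these assumptions, all LEDs at full white would draw more than the whole PoE budget, so some kind of global brightness cap in the firmware looks like a sensible precaution.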

The Features

Overall outlook of the GKV1

The primary 5-Inch screen with capacitive touch sensor

Power-over-ethernet port, and 2 x USB-C for programming

Cherry-MX Key switches. 70 of them to be precise.

Left-side peripherals: physical interlock switch, type counter and left joystick. The interlock is a physical switch that prevents GPT messages from being streamed unintentionally from the primary uC to the co-uC. The type counter on the left shows the millions and billions. The left joystick is currently used for media.

Right-side peripherals: NFC tag reader, type counter and right joystick. The NFC tag reader is used for user authentication purposes, password encryption, etc. The type counter on the right shows the dozens and thousands, and the right joystick currently acts as the arrow keys.
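Since the 10-digit type counter is spread over the two sides, here is a minimal sketch of how one keystroke count could be split between them. The 4 + 6 digit split and the name `split_count` are assumptions for illustration; the real firmware may divide the digits differently.

```python
# Split one keystroke count across the left and right counter displays.
# ASSUMPTION: the left display shows the upper 4 digits (billions and millions)
# and the right display shows the lower 6 digits. This split is hypothetical.
LEFT_DIGITS = 4
RIGHT_DIGITS = 6
MAX_COUNT = 10 ** (LEFT_DIGITS + RIGHT_DIGITS) - 1  # largest 10-digit value


def split_count(count):
    """Return (left_text, right_text), zero-padded, clamped to the 10-digit range."""
    clamped = max(0, min(count, MAX_COUNT))
    text = f"{clamped:0{LEFT_DIGITS + RIGHT_DIGITS}d}"
    return text[:LEFT_DIGITS], text[LEFT_DIGITS:]


if __name__ == "__main__":
    left, right = split_count(1234567890)
    print(left, right)
```

Clamping at the maximum keeps the display from wrapping around to zero if the count ever goes past ten digits.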

8x8 multizone time-of-flight sensor from ST. Mainly used for presence detection, gesture detection, power saving etc.
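To give an idea of how presence detection on an 8x8 distance grid can work, here is a minimal sketch. It assumes each frame arrives as 8 rows of 8 distances in millimetres, with `None` for zones that see no target. The thresholds and the name `is_present` are hypothetical, and the actual sensor driver is not shown.

```python
# Presence detection on one 8x8 time-of-flight frame.
# ASSUMPTIONS: frame format, thresholds and function name are illustrative only.
NEAR_MM = 600      # assumed distance below which a zone counts as "someone is close"
MIN_ZONES = 4      # assumed number of close zones needed to report presence


def is_present(frame_mm, near_mm=NEAR_MM, min_zones=MIN_ZONES):
    """Return True if enough zones report a target closer than near_mm."""
    close_zones = sum(
        1
        for row in frame_mm
        for distance in row
        if distance is not None and distance <= near_mm
    )
    return close_zones >= min_zones


if __name__ == "__main__":
    # An empty desk: every zone sees something far away.
    empty = [[2000] * 8 for _ in range(8)]
    # A hand or body covering a 2x2 patch of zones at 30 cm.
    occupied = [row[:] for row in empty]
    for r in (3, 4):
        for c in (3, 4):
            occupied[r][c] = 300
    print(is_present(empty), is_present(occupied))
```

Requiring several close zones rather than one is a simple way to ignore a single noisy reading; a power-saving feature could then dim the display and LEDs after `is_present` stays false for a while.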

Addressable RGB pixel WS2812b. 80 of them placed between keycaps.

2 x slider potentiometers, currently used for controlling the light effects.

ESP32-WROOM-DA co-uC, used primarily as a Bluetooth HID bridge. A cool-looking dual-antenna ESP32 module.

2-step interlock switch and vibration motor.

60 x WS2812b side illumination strips and 3D printed translucent housing.

Lastly the Generative kAiboard logo :)

The Details

As said, in the coming weeks I'm planning to complete this page and provide you with more details about the design decisions, build instructions and the software framework I intend to implement in this project. In a nutshell:

- In the Build Log I will detail step-by-step instructions on how you can adapt changes and build your own, or simply duplicate the GKV1.

- In the Project Log I will share more of the background story behind the design decisions. I'll try to go through each schematic in a bit more detail, and I will surely share the latest updates there too.

- In the GitHub repository, you can find the latest SW that I'm working on, as I try to update it regularly.

- Through my website www.sumasta.tech you can reach me the fastest in case you have urgent questions :)

Pamungkas Sumasta
