So far, I have avoided introducing convolutional layers at the full image resolution of 32x32, because doing so would drive up the SRAM footprint significantly. However, since no convolution takes place at 32x32, the achievable image quality is limited.

Depth-first (tiled) inference of the CNN may help to reduce the memory footprint, so we should not immediately rule out adding more layers.

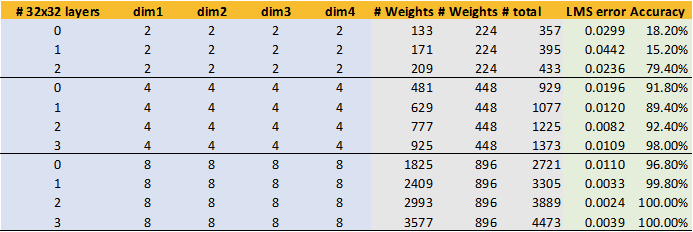

The table above shows results for model variants where I added between one and three convolutional layers at the 32x32 stage. The number of channels at 32x32 is the same as in Dim1. Because 3x3 kernels are used, these layers add only a limited number of weights. Nevertheless, there are drastic improvements when one or two layers are added, and diminishing returns or even degradation with three layers.
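To see why the extra layers are cheap in weights, here is a rough count for a single 3x3 convolution. It is only a sketch: it assumes 8 input and 8 output channels (as in an 8_8_8_8 configuration) and one bias per output channel, and the helper name `conv_weights` is made up for illustration. The actual model may differ, for example in the channel count of the last layer or in whether biases are used.

```python
def conv_weights(c_in, c_out, kernel=3, bias=True):
    """Parameter count of one 2D convolution layer (assumed layout)."""
    n = kernel * kernel * c_in * c_out  # one kernel per input/output channel pair
    if bias:
        n += c_out  # one bias per output channel
    return n


per_layer = conv_weights(8, 8)
for n_layers in (1, 2, 3):
    print(n_layers, "hires layer(s):", n_layers * per_layer, "extra weights")
```

Under these assumptions each added layer costs a few hundred weights, which is small compared to the rest of the model.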

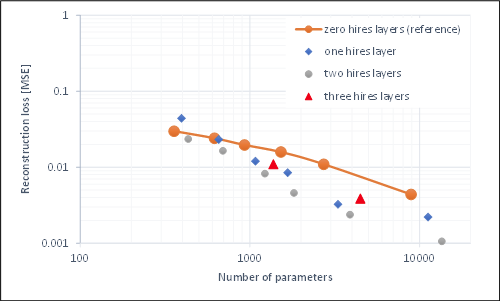

This is even more evident in the plot above. Adding one or two hires layers beats the trend of the old model (line) and gives a better tradeoff between loss and number of weights (however, at the expense of significantly more computation and memory at inference time).

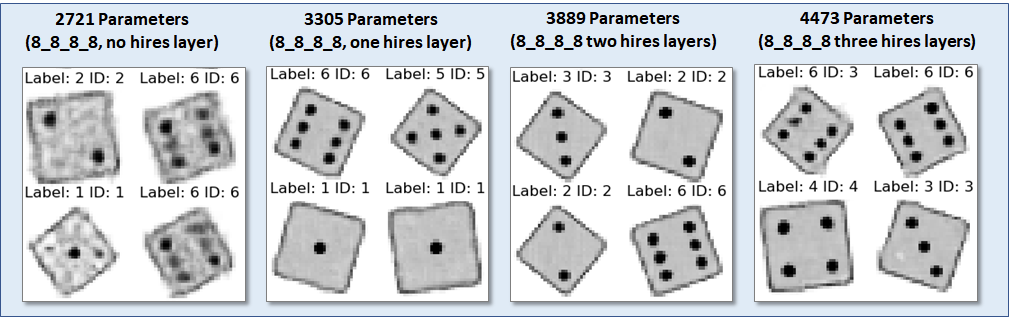

The improvements are even more impressive when looking at the visual quality. The 8_8_8_8 configuration plus two hires layers generates images very close to the original with fewer than 4000 weights. However, at these settings, 16 kB of SRAM would be needed to store the temporary data of the hires layers during inference.
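The 16 kB figure is consistent with a simple back-of-the-envelope estimate. The sketch below assumes 8 channels at 32x32, one byte per activation, and two full feature maps held at once (the input and output of a layer). These are assumptions for illustration, not confirmed details of the implementation. The second function, also hypothetical, estimates what a row-buffered depth-first schedule might need if only three input rows per 3x3 layer are kept; it ignores padding and any output staging.

```python
def full_frame_bytes(width=32, height=32, channels=8, bytes_per_value=1, buffers=2):
    """Memory for holding complete feature maps (assumed ping-pong buffers)."""
    return width * height * channels * bytes_per_value * buffers


def row_buffer_bytes(width=32, channels=8, bytes_per_value=1, kernel=3, layers=2):
    """Memory if each layer keeps only `kernel` rows of its input (rough estimate)."""
    return width * kernel * channels * bytes_per_value * layers


print("full frames:", full_frame_bytes(), "bytes")
print("row buffers:", row_buffer_bytes(), "bytes")
```

If the assumptions hold, the gap between the two numbers indicates how much a depth-first or tiled schedule could save in principle.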

Very encouraging. Let's see what we can do about the memory footprint at inference time.

Tim
