I have yet to do, or even research, any of this myself.

Dataset collection:

⦁ Identify Domains and Use Cases: Identify the use cases that the small system is intended to handle. For example, a home automation system needs commands for controlling lights, temperature, and appliances, while a robotics system needs commands for moving and for performing actions or tasks.

⦁ Data Collection: Collect a wide range of commands that cover the identified domains and use cases. This could involve manual collection, where you or a team of data collectors generate the commands, or it could involve automated collection, where you use a tool or script to scrape relevant commands from online sources.

⦁ Diversity: Ensure diversity in your dataset by including variations of the same command. For example, for a home automation system, "Turn on the lights", "Switch on the lights", and "Lights on" are variations of one command; for a robotics system, "move forward" and "Hey could you maybe move forward a little please?" are variations of another.

⦁ Noise Handling: Include commands with different levels of noise, such as spelling mistakes or grammatical errors, to make your model robust to real-world inputs.
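The diversity and noise points above can be sketched in a few lines of Python. This is only an illustration, not a tested collection pipeline: the `TEMPLATES` table, the intent names, and the helpers `add_typo` and `build_dataset` are all made up for this example, and swapping two adjacent characters is just one simple way to imitate a spelling mistake.

```python
import random

# Hypothetical templates: each intent maps to several phrasings of the same command.
TEMPLATES = {
    "lights_on": ["Turn on the lights", "Switch on the lights", "Lights on"],
    "move_forward": ["move forward", "Hey could you maybe move forward a little please?"],
}


def add_typo(text, rng):
    """Swap two adjacent characters to imitate a simple spelling mistake."""
    if len(text) < 2:
        return text
    i = rng.randrange(len(text) - 1)
    chars = list(text)
    chars[i], chars[i + 1] = chars[i + 1], chars[i]
    return "".join(chars)


def build_dataset(templates, noisy_copies=1, seed=0):
    """Return (text, intent) pairs: every clean phrasing plus noisy copies of it."""
    rng = random.Random(seed)  # seeded so the dataset is reproducible
    rows = []
    for intent, phrasings in templates.items():
        for phrase in phrasings:
            rows.append((phrase, intent))
            for _ in range(noisy_copies):
                rows.append((add_typo(phrase, rng), intent))
    return rows


dataset = build_dataset(TEMPLATES, noisy_copies=2)
```

Each clean phrasing keeps its intent label, so the noisy copies teach the model that a misspelled command still maps to the same action. A real dataset would need many more phrasings and more realistic noise than this.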

Training and deployment of DUM:

⦁ Data Collection: A diverse dataset of commands is collected.

⦁ Preprocessing: The collected command dataset is preprocessed through tokenization, normalization, and data augmentation.

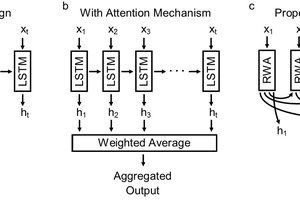

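⦁ Model Selection: A model architecture that balances performance and efficiency is chosen. A small LSTM or a compact BERT variant (for example, a distilled model such as DistilBERT) can be considered. Full-size BERT is comparatively large, so the lighter options are usually a better fit for small-scale deployments.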

⦁ Training and Fine-tuning: The selected model is trained using the preprocessed dataset. Transfer learning and fine-tuning are employed to adapt the model to the specific command recognition tasks.

⦁ Model Optimization: Techniques like quantization, pruning, or knowledge distillation are used to optimize DUM for deployment on resource-constrained systems.

⦁ Integration with Small System: Finally, DUM is integrated into the small system, enabling enhanced command-based interaction. In my case the small system is a robot, and a more complex streamed LLM would be layered on top of DUM.
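As a rough idea of what the preprocessing step in the list above might look like, here is a minimal Python sketch of normalization, tokenization, and encoding commands as fixed-length id sequences. Everything in it is an assumption for illustration: the whitespace tokenizer, the `<pad>` and `<unk>` tokens, the `max_len` of 8, and the function names are not taken from any existing DUM code.

```python
import re
from collections import Counter

PAD, UNK = "<pad>", "<unk>"  # assumed special tokens for padding and unknown words


def normalize(text):
    """Lowercase, replace punctuation with spaces, and collapse whitespace."""
    text = text.lower()
    text = re.sub(r"[^a-z0-9\s]", " ", text)
    return re.sub(r"\s+", " ", text).strip()


def tokenize(text):
    """Split a normalized command on whitespace."""
    return normalize(text).split()


def build_vocab(commands, min_count=1):
    """Map each sufficiently frequent token to an integer id (0 = pad, 1 = unk)."""
    counts = Counter(tok for c in commands for tok in tokenize(c))
    vocab = {PAD: 0, UNK: 1}
    for tok, n in sorted(counts.items()):
        if n >= min_count:
            vocab[tok] = len(vocab)
    return vocab


def encode(text, vocab, max_len=8):
    """Convert a command to a list of ids, truncated or padded to max_len."""
    ids = [vocab.get(tok, vocab[UNK]) for tok in tokenize(text)][:max_len]
    return ids + [vocab[PAD]] * (max_len - len(ids))


commands = ["Turn on the lights", "Switch on the lights", "Lights on", "move forward"]
vocab = build_vocab(commands)
encoded = encode("Turn on the LIGHTS!", vocab)
```

The fixed-length id lists are the kind of input an LSTM classifier would take. A BERT-style model would normally use its own subword tokenizer instead of this whitespace split.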

Lee Otto

alex.miller

Sumit

Jared (jostmey)
