What is it?

lalelu_drums is a system (hardware + software) for live music performances in front of an audience. It consists of a camera recording a live video of the player, a neural network that estimates the player's body pose from each video frame, and algorithms that detect predefined gestures in the stream of pose coordinates and trigger sounds depending on those gestures.

Video 1: Example video showing basic drum pattern

Why?

This type of drumming makes it possible to incorporate elements of dancing into the control of the drum sounds. Also, the drummer is not hidden behind the instrument. Both aspects should promote a closer relationship and more intense interaction between the musician and the audience.

The pose estimation yields coordinates of many different landmarks of the human body (wrists, elbows, knees, nose, eyes, ...), and I envision that there are intriguing ways to create music from gestures using them.

Compared to other forms of modern electronic music control, lalelu_drums can be played with a minimal amount of tech visible to the audience (i.e., only the camera in front of the player). It is therefore especially well suited to being combined with acoustic instruments in a low-tech setting.

With this kind of atmosphere in mind, and in order to foster good contact with the audience, I would like to design the system so that the player has no need to look at any display while playing. For checking basic parameters like illumination or camera positioning, or for troubleshooting, I think a display will be necessary. But it should not be needed for the actual musical performance, so that it can be installed in an unobtrusive way.

An interesting application of lalelu_drums is to augment other instruments with additional percussive elements. In such a hybrid setting, the gestures need to be defined taking into account the normal way of playing the instrument.

Video 2: Acoustic cajon and egg shaker augmented with snare drum and two bells

While it is certainly possible to use the arrangement of lalelu_drums to control other types of instruments apart from percussion (e.g., Theremin-like), I chose percussion for the challenge. If it is possible to design a gesture-controlled percussion system with acceptable latency and temporal resolution, it should be straightforward to extend it to control other types of sounds.

Prior art

There are examples of gesture-controlled drums using the Kinect hardware:

https://www.youtube.com/watch?v=4gSNOuR9pLA

https://www.youtube.com/watch?v=m8EBlWDC4m0

https://www.youtube.com/watch?v=YzLKOC0ulpE

However, the pose estimation path of the Kinect has a frame rate of 30 fps, and I think that this rate is too low to allow for precise music making.

Here is a very early example based on video processing without pose estimation:

https://www.youtube.com/watch?v=-zQ-2kb5nvs&t=9s

However, it needs a blue screen in the background, and since there is no actual pose detection, it cannot react to complex gestures.

There is a TensorFlow.js implementation from 2023 of a pose-estimation-based drumming app, but it seems to target a game-like application in a web browser rather than a musical instrument for live performance:

https://www.youtube.com/watch?v=Wh8iEepF-o8&t=86s

There are various 'air drumming' devices commercially available. However, they either need markers for video tracking (Aerodrums) or they use inertial sensors, so that the drummer still has to move some kind of sticks (Pocket Drum II) or gloves (MiMU Gloves) and cannot use gestures involving elbows, legs or face.

Another interesting commercially available device is the Leap Motion Controller. It uses infrared illumination and dual-camera detection to record high-frame-rate (115 fps) video data of the user's hands from below. The video data is processed by a proprietary algorithm to yield coordinates of the hand and finger joints and tips. Here is a project using the device for making music:

https://www.youtube.com/watch?v=v0zMnNBM0Kg

While the Leap Motion Controller was already available when I started lalelu_drums, I deliberately decided to use whole-body pose estimation instead of hand poses, for the following reason. My idea is that the audience can perceive the connection between the gestures of the performer and the audio generated from them. Therefore, the gestures need to be large enough to be recognizable by an audience of more than just a few people, and I found whole-body gestures more suitable in this regard.

A disadvantage of the Leap Motion Controller seems to be that it requires a Windows or macOS host system, and it is not clear to me how it can be integrated into custom code.

Finally, I would like to mention this 'full body keyboard':

https://hackaday.com/2023/04/01/modern-dance-or-full-body-keyboard-why-not-both/

The user can generate keyboard input to the computer (letters, numbers, special characters like !, ? etc.) by using a modified version of the semaphore alphabet. While the constraints on latency and temporal precision are certainly less strict than for lalelu_drums, the project is a nice example of using human pose estimation data from a live video to detect gestures and eventually trigger predefined actions. I especially like the way the project presentation relates the movements of the player to dancing.

Architecture

In the following I will present a high-level overview of the system. I plan to publish more technical details in the project logs.

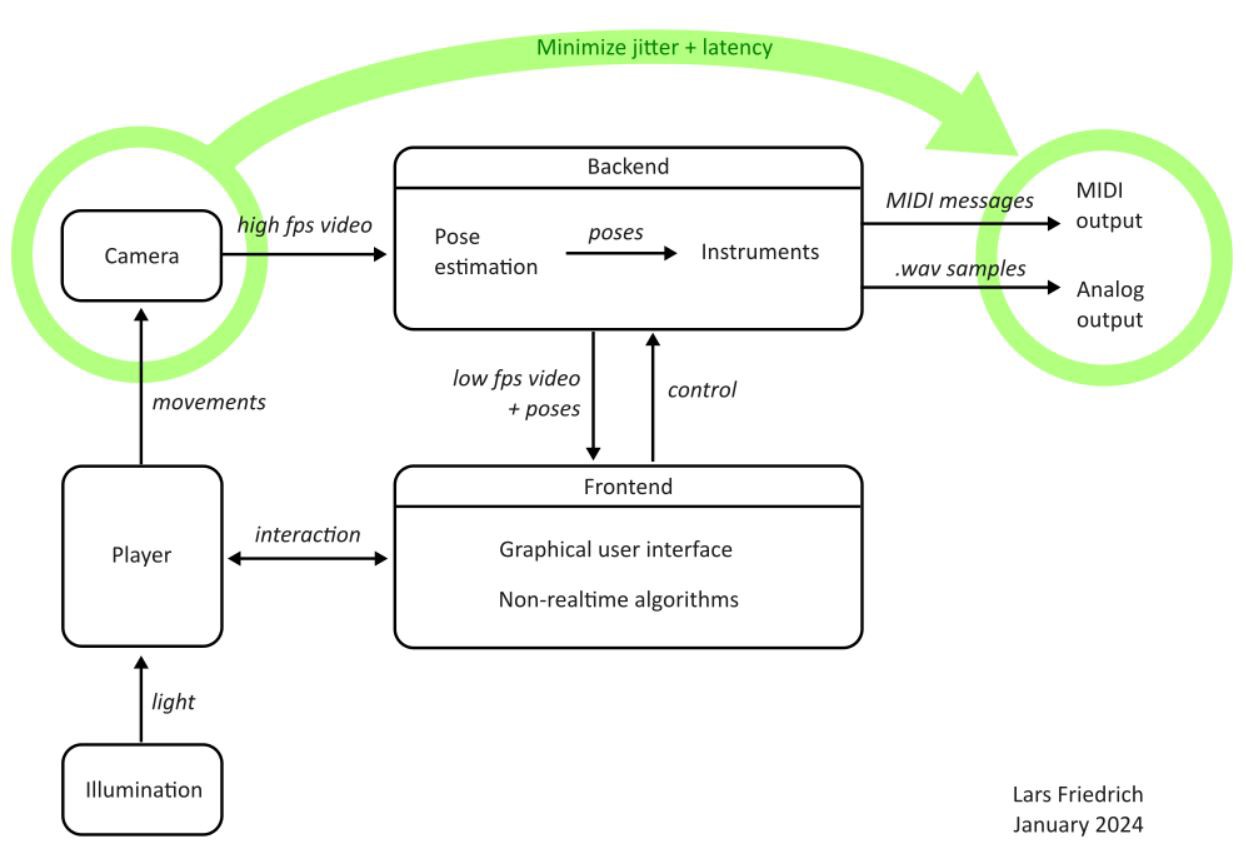

Figure 1: Global architecture

Backend vs Frontend

One of the technical challenges of this project is to minimize the latency and the temporal jitter between the gestures performed by the player and the triggering of a drum sound. The major design decision I made to meet this challenge is to split the electronic system into a backend and a frontend. The backend concentrates on the time-critical tasks while the frontend does everything else.

The backend receives live camera images and applies the body pose estimation network to each of them. The results of the body pose estimations ('poses') are then presented to a customizable list of so-called 'instruments', each instrument being responsible for detecting a specific gesture and deciding whether a sound should be output, and which one. While I did some experiments with single-board computers together with one or two Google Coral AI accelerators, as described in my earlier project highratepose, I currently use a four-core Intel x86 system with a GTX1660 GPU, running Ubuntu Server 22.04, for the backend.

The frontend runs on my Windows dev PC and receives the live camera images (at reduced frame rate) and the detected poses (at full frame rate) from the backend via TCP/IP over a 1 Gbit/s link. It presents the live camera image to the player so that illumination and camera positioning can be checked. It can also create overlays based on the current settings and the detected poses.

Besides providing the graphical user interface, another task of the frontend is to control the configuration of the backend. On a user command, it loads presets from a file and transfers the respective settings to the backend via TCP/IP. Also, some non-realtime algorithms on the pose data can be run by the frontend. For example, it can detect if the player is absent from the video and then mute the output of the backend.
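As an illustration of such a non-realtime check, here is a minimal sketch of an absence detector. It assumes (this is not necessarily how the frontend does it) that the player counts as absent once the mean keypoint confidence stays below a threshold for a number of consecutive frames. The class name `PresenceMonitor` and the parameters `min_score` and `patience` are hypothetical.

```python
class PresenceMonitor:
    """Decide whether the backend output should be muted.

    Assumption: the player is considered absent when the mean keypoint
    confidence is below `min_score` for `patience` consecutive frames.
    """

    def __init__(self, min_score=0.3, patience=30):
        self.min_score = min_score
        self.patience = patience
        self.low_frames = 0   # consecutive low-confidence frames seen so far
        self.muted = False

    def update(self, scores):
        """scores: confidence values of all keypoints of one frame."""
        # An empty list is treated like a frame without a visible player.
        mean = sum(scores) / len(scores) if scores else 0.0
        if mean < self.min_score:
            self.low_frames += 1
        else:
            self.low_frames = 0
        self.muted = self.low_frames >= self.patience
        return self.muted
```

Requiring several consecutive low-confidence frames avoids muting on a single bad frame, and the output is unmuted as soon as one frame with sufficient confidence arrives.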

Camera + pose estimation

As a camera I use the PS3 camera (PlayStation Eye). Please see my earlier project highratepose for my considerations.

For pose estimation, I use the 'singlepose-thunder' variant of Google's MoveNet, provided as a trained TensorFlow model on Kaggle. It accepts a 256x256 RGB image as input and returns XY coordinates and a confidence value for each of 17 keypoints.
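To make the output format concrete: MoveNet's single-pose models return a tensor of shape [1, 1, 17, 3], where each row holds (y, x, score) with coordinates normalized to the range 0 to 1. The following sketch unpacks such an output into named keypoints. The helper names `Keypoint` and `unpack_pose` are made up for illustration, and the input is treated as a plain nested sequence so that the example runs without TensorFlow.

```python
from typing import NamedTuple

# Keypoint order of the MoveNet output (COCO convention).
KEYPOINT_NAMES = [
    "nose", "left_eye", "right_eye", "left_ear", "right_ear",
    "left_shoulder", "right_shoulder", "left_elbow", "right_elbow",
    "left_wrist", "right_wrist", "left_hip", "right_hip",
    "left_knee", "right_knee", "left_ankle", "right_ankle",
]


class Keypoint(NamedTuple):
    x: float      # normalized, 0 = left image border, 1 = right
    y: float      # normalized, 0 = top image border, 1 = bottom
    score: float  # confidence of the estimate


def unpack_pose(output):
    """Convert a [1][1][17][3] nested sequence into a dict of keypoints.

    Each row of the model output is ordered (y, x, score), so x and y
    are swapped here to get the more familiar (x, y) order.
    """
    rows = output[0][0]
    if len(rows) != len(KEYPOINT_NAMES):
        raise ValueError("expected 17 keypoints, got %d" % len(rows))
    return {
        name: Keypoint(x=float(x), y=float(y), score=float(s))
        for name, (y, x, s) in zip(KEYPOINT_NAMES, rows)
    }
```

With a real model output, a call like `unpack_pose(result)["right_wrist"]` would give the wrist position and its confidence for the current frame.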

Instruments

The gesture detectors (instruments) are central to this project. They define at which point in time, depending on the movements of the player, a sound is triggered. My goal is to find one or more algorithms that give the player a natural feeling of drumming.

One important technical aspect of the transition from the time series of poses, generated by the pose estimation network, to the detection of gestures is the following. The pose estimation network treats each camera frame as completely individual and uncorrelated to the previous frames. It has no inner state keeping track of the course of the motion. However, in the case of lalelu_drums, there is typically a strong correlation between the poses of consecutive video frames, since they belong to a continuous motion of the player. It is advantageous to make use of this prior knowledge and apply some kind of temporal filtering to the stream of pose coordinate data. This can be a simple exponential smoothing or a more sophisticated filter such as a Kalman filter. In any case, there is a tradeoff between strong smoothing (leading to high-precision pose data) and low latency.
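The tradeoff can be illustrated with the simplest of these filters. The sketch below applies exponential smoothing to a list of coordinates and counts how many frames the filtered value needs to follow a sudden jump. The names `ExponentialSmoother` and `frames_to_reach` and the values of the smoothing factor `alpha` are chosen for illustration only and are not the settings used in lalelu_drums.

```python
class ExponentialSmoother:
    """Exponential smoothing of a list of pose coordinates.

    alpha close to 1: little smoothing, fast response.
    alpha close to 0: strong smoothing, slow response.
    """

    def __init__(self, alpha):
        if not 0.0 < alpha <= 1.0:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha
        self.state = None

    def update(self, coords):
        if self.state is None:
            # The first frame initializes the filter state.
            self.state = list(coords)
        else:
            a = self.alpha
            self.state = [a * c + (1.0 - a) * s
                          for c, s in zip(coords, self.state)]
        return list(self.state)


def frames_to_reach(alpha, level=0.9):
    """Frames needed until the output covers `level` of a 0 -> 1 step."""
    smoother = ExponentialSmoother(alpha)
    smoother.update([0.0])
    frames = 0
    while True:
        frames += 1
        if smoother.update([1.0])[0] >= level:
            return frames
```

For example, `frames_to_reach(0.5)` gives 4 frames, while the stronger smoothing of `frames_to_reach(0.2)` gives 11 frames. At a given camera frame rate, this number of frames translates directly into additional latency.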

The crucial question is, which feature or combination of features of the filtered time series of poses should trigger a sound. I did some experiments with the following and combinations thereof:

1. Relative position

A region of interest (ROI) is defined relative to the torso coordinates of the player (torso = left and right shoulder + hip coordinates). If a predefined keypoint (e.g., right wrist) enters the ROI, a sound is triggered. If absolute coordinates were used, the player would have to watch the live video display to see where the ROI is in relation to their own body. Using relative positioning makes the ROI move with the player, which makes it possible to play without watching the frontend display.

2. Speed exceeding a given threshold

A direction vector is defined, and the velocity vector of a predefined keypoint (e.g., right wrist) is projected onto this direction. When the projection exceeds a given threshold, a sound is triggered.

3. Speed falling below a given threshold

Similar to (2), but when the speed exceeds the given threshold, the instrument goes to an 'armed' state (no sound is triggered). Only when the speed falls below a second given threshold again is a sound triggered.
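As a rough sketch of how the relative-position trigger and the armed speed trigger could look in code (not the actual lalelu_drums implementation): a pose is assumed to be a dict mapping keypoint names to (x, y) tuples in normalized image coordinates, with y increasing downwards. The class names, the ROI units (multiples of the torso length) and the threshold parameters are hypothetical.

```python
import math


def torso_frame(pose):
    """Return torso centre (cx, cy) and torso length (shoulder to hip)."""
    ls, rs = pose["left_shoulder"], pose["right_shoulder"]
    lh, rh = pose["left_hip"], pose["right_hip"]
    cx = (ls[0] + rs[0] + lh[0] + rh[0]) / 4.0
    cy = (ls[1] + rs[1] + lh[1] + rh[1]) / 4.0
    length = math.hypot((ls[0] + rs[0] - lh[0] - rh[0]) / 2.0,
                        (ls[1] + rs[1] - lh[1] - rh[1]) / 2.0)
    return cx, cy, length


class RelativeRoiTrigger:
    """Fires once when the keypoint enters an ROI defined in torso units."""

    def __init__(self, keypoint, x_min, x_max, y_min, y_max):
        self.keypoint = keypoint
        self.bounds = (x_min, x_max, y_min, y_max)
        self.inside = False

    def update(self, pose):
        cx, cy, length = torso_frame(pose)
        if length == 0.0:
            return False  # degenerate pose, no usable torso reference
        x, y = pose[self.keypoint]
        rx, ry = (x - cx) / length, (y - cy) / length
        x_min, x_max, y_min, y_max = self.bounds
        inside = x_min <= rx <= x_max and y_min <= ry <= y_max
        fired = inside and not self.inside  # trigger only on entering
        self.inside = inside
        return fired


class ArmedSpeedTrigger:
    """Arms above `arm_speed`, fires when speed drops below `fire_speed`."""

    def __init__(self, keypoint, direction, arm_speed, fire_speed, frame_rate):
        norm = math.hypot(direction[0], direction[1])
        self.direction = (direction[0] / norm, direction[1] / norm)
        self.keypoint = keypoint
        self.arm_speed = arm_speed
        self.fire_speed = fire_speed
        self.frame_rate = frame_rate
        self.prev = None
        self.armed = False

    def update(self, pose):
        x, y = pose[self.keypoint]
        if self.prev is None:
            self.prev = (x, y)
            return False
        # Finite-difference velocity, projected onto the chosen direction.
        vx = (x - self.prev[0]) * self.frame_rate
        vy = (y - self.prev[1]) * self.frame_rate
        self.prev = (x, y)
        speed = vx * self.direction[0] + vy * self.direction[1]
        if not self.armed:
            if speed > self.arm_speed:
                self.armed = True
            return False
        if speed < self.fire_speed:
            self.armed = False
            return True
        return False
```

In this sketch, both triggers would be fed the filtered pose of every frame. Keeping the 'inside' and 'armed' states makes sure that each gesture produces exactly one sound rather than one per frame.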

As you can see from the demo videos, I have a working solution. However, I am still not satisfied with it, and I would like to invest more research work in this central topic.

Illumination

With the high frame rates necessary for a good temporal resolution, the exposure times of the camera become short. Accordingly, the player needs to be illuminated with sufficient intensity to provide enough signal. To achieve a homogeneous illumination of a standing player without creating hard shadows, I designed a lamp. The lamp has a height of 1.8 m and a footprint of 30 cm x 35 cm. It features four LED bulbs and a diffuser screen made from greaseproof paper.

Figure 2 + 3: lalelu lamp

It seems that illumination from the side is advantageous, probably because it creates intensity gradients on body parts of the player, giving the pose estimation network contrast to work with. Hard shadows can lead to pose estimation artifacts, because the network can mix up the shadow shape with the actual body.

Unfortunately, these illumination requirements conflict with many stage lighting concepts, which can involve flashing lights or even artificial fog. Currently, any lalelu_drums performance requires steady, moderate illumination.

History of the project + acknowledgements

In 2021 I had the task at my workplace of testing the Google Coral USB accelerator device. I chose the example of human pose estimation for testing, because it was straightforward to create test input data with a camera. The idea for lalelu_drums came about when I realized that with the low inference times of <20 ms, it should be possible to achieve low enough latency and high enough temporal resolution to build a musical instrument.

I want to thank my supervisor at the time, Arnold Giske, for giving me that task and the opportunity to learn about AI image processing and hardware acceleration.

I teamed up early with my brother-in-law, Jonas Völker. He is a well-trained percussionist, and I am grateful that he was willing to test the lalelu_drums system in very early stages, and for many helpful thoughts and discussions throughout the development.

I want to thank my colleague Kai Walter for helpful discussions about the AI related aspects of this project.

I want to thank my colleague Alexander Becker for tips and tricks related to embedded Linux and Linux hardware driver issues.

I want to thank my colleague Fabian Haerle for help with the configuration of the x86 backend.

At some point, a drummer from the neighbourhood, Andreas Rausch, became involved in the project. I want to thank him for various testing sessions and motivating comments and discussions.

Until October 2023, I kept the project rather private, because I was still wondering if any company would be interested in exclusively licensing the development result. Then I actually approached various companies and asked if there was any interest, without receiving any positive feedback. Therefore, I am now publishing it here, on hackaday.io.