Hi. So this is the story:

My interest in and knowledge of embedded stuff has always been centered on 32-bit MCUs, with a little bit of FPGA/CPLD work, mostly in the audio signal processing world. Embedded Linux always felt like something out of my scope.

Back in the day I got an early revision of the BeagleBone Black with only 2 GB of eMMC flash instead of 4 GB, which made it hard to fit the latest distributions. While playing around with this board, my interest leaned towards video streaming applications. Unfortunately, after messing with the ffmpeg library for too long, the best I managed was an MJPEG stream over a USB Wi-Fi dongle with about 1 second of delay and a bad frame rate. So I gave up.

And, after 10 years, here we go again :)

By choosing the BeagleBone AI-64 board I decided (I guess) not to follow the Jetson Nano/Raspberry Pi mainstream. Plus, it was slightly cheaper than the Jetson. But there is one issue: it is not the most popular platform, so the community is small. The number of stupid questions you can ask on the forums is strictly limited, or people simply cannot help you with your specific situation at all.

The Quick Start Guide is a must-read. In my case, messing up the file system and re-flashing it with the latest distros several times was the way to go.

A few caveats related to the hardware:

Mini DisplayPort instead of mini HDMI? That is because the TDA4VM SoC supports the DP interface in hardware. So ONLY an active miniDP to HDMI 4K adapter can be used to get a picture over HDMI.

Power. I'm using a 5 V, 3 A wall adapter with a barrel jack. The Waveshare UPS Module 3S also works for me as an autonomous power supply. According to the UPS metrics, the BeagleBone AI-64 consumes about 5 W when idle, so it is quite a power-hungry device.

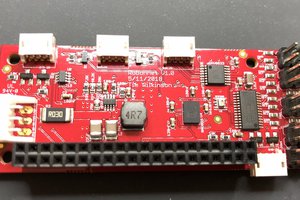

Wi-Fi. The BeagleBone AI-64 has an M.2 E-key PCIe connector for Wi-Fi modules. However, as I found from a couple of forum threads, only the Intel AX200 and AX210 have been tested and are supported out of the box.

So let's get started.

The BeagleBone AI-64 board has two CSI (Camera Serial Interface) connectors. This feature is what attracted me in the first place. But so far the only “supported” camera sensor seems to be the IMX219. The TI Edge AI documentation for their TDA4VM-based evaluation board lists support for several other sensors, but the compatibility of their Device Tree Overlays (DTOs) is questionable.

So I took the Arducam IMX219 Camera Module, which includes a 15-pin to 22-pin camera flex cable. And the struggle began…

It is possible to grab the camera picture with v4l2 from /dev/video* and use it in any Linux program you want. The problem is that the imx219 sensor requires additional image signal processing to control white balance, exposure, etc. TI does this in their custom GStreamer plugins, edgeai-gst-plugins, which use the TDA4VM's hardware-accelerated ISP, called the Vision Preprocessing ACcelerator (VPAC), outside the v4l2 driver. So v4l2-ctl -d /dev/video2 --list-ctrls (list video device controls) shows nothing, and GStreamer is the only way to get a proper picture from the CSI camera. Also, TI's DCC ISP files needed by the tiovxisp plugin are missing from the distro; they can be taken, for example, from the TI J721E SDK and placed in the /opt/imaging folder.

This knowledge came to me after a few weeks of digging through forums and documentation without having any clue what I was looking for.
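Since the missing DCC files are easy to overlook, here is a minimal sketch of a check for them. It assumes the files live under /opt/imaging at the same relative paths used by the pipeline in this post; the function name check_dcc_files is made up for this example and is not part of any TI tool.

```shell
#!/bin/sh
# Report whether the DCC files referenced by the pipeline in this post exist.
# Assumption: the relative paths below match the ones passed to tiovxisp here;
# a different SDK release may lay the files out differently.
check_dcc_files() {
    base="${1:-/opt/imaging}"   # optional argument: base directory to inspect
    missing=0
    for rel in imx219/dcc_viss.bin imx219/dcc1/dcc_2a.bin; do
        if [ -f "$base/$rel" ]; then
            echo "found   $base/$rel"
        else
            echo "missing $base/$rel"
            missing=1
        fi
    done
    # Exit status 0 when both files exist, 1 when at least one is missing.
    return "$missing"
}
```

Something like `check_dcc_files || echo "copy the DCC files from the TI J721E SDK first"` can then be run before trying the pipeline.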

And finally, with a few “magic” lines:

sudo media-ctl -d 0 --set-v4l2 '"imx219 6-0010":0[fmt:SRGGB8_1X8/1920x1080]'

sudo gst-launch-1.0 v4l2src device=/dev/video2 ! video/x-bayer, width=1920, height=1080, format=rggb ! tiovxisp sink_0::device=/dev/v4l-subdev2 sensor-name=SENSOR_SONY_IMX219_RPI dcc-isp-file=/opt/imaging/imx219/dcc_viss.bin sink_0::dcc-2a-file=/opt/imaging/imx219/dcc1/dcc_2a.bin format-msb=7 ! kmssink driver-name=tidss

...the image from the camera is shown on screen!
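To make the two commands above easier to read and tweak, here is a sketch that prints them with the board-specific values pulled into variables and one pipeline element per line. The variable and function names are made up for this example, the values are simply the ones that worked on my board, and the comments reflect my understanding of each element rather than TI's documentation. The script only prints the commands; it does not run them.

```shell
#!/bin/sh
# Values that are likely to differ between setups (assumptions from my board).
WIDTH=1920
HEIGHT=1080
VIDEO_DEV=/dev/video2        # raw Bayer frames from the CSI receiver
SUBDEV=/dev/v4l-subdev2      # sensor sub-device handed to the ISP plugin
DCC_DIR=/opt/imaging/imx219  # where the DCC files were copied

# Step 1: set the sensor output format through the media controller.
sensor_format_cmd() {
    printf '%s\n' "sudo media-ctl -d 0 --set-v4l2 '\"imx219 6-0010\":0[fmt:SRGGB8_1X8/${WIDTH}x${HEIGHT}]'"
}

# Step 2: capture raw Bayer, run it through tiovxisp, show it with kmssink.
pipeline_cmd() {
    cat <<EOF
sudo gst-launch-1.0 v4l2src device=$VIDEO_DEV \\
  ! video/x-bayer, width=$WIDTH, height=$HEIGHT, format=rggb \\
  ! tiovxisp sink_0::device=$SUBDEV sensor-name=SENSOR_SONY_IMX219_RPI \\
      dcc-isp-file=$DCC_DIR/dcc_viss.bin \\
      sink_0::dcc-2a-file=$DCC_DIR/dcc1/dcc_2a.bin format-msb=7 \\
  ! kmssink driver-name=tidss
EOF
}

sensor_format_cmd
pipeline_cmd
```

The printed output can be pasted into a terminal on the board. The resolution given to media-ctl and the width/height in the video/x-bayer caps come from the same variables, so they stay in sync when changed.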

GStreamer command line tools and its philosophy as well as edgeai-gst-apps...
