I decided to describe here my struggles with retrieving and displaying images from the OV7675 camera, as I stumbled across some unexpected events. But that's part of the fun, isn't it?

I wanted to understand well what is retrieved from the camera, play a bit with basic preprocessing (with future inference in mind) and be able to stream the image live to the PC.

With that in mind, I prepared an Arduino sketch, test_camera.ino, and a Python script, camera_cont_display.py. Both are available in my repository.

I looked through the examples provided with the libraries (mentioned in a previous log) as well as the workshop provided by Edge Impulse.

The OV7675 can return images in RGB565 or grayscale format. I played with both, and the Python script can handle both as well. However, I decided to use grayscale to reduce the size. And... that's when the weird things started to happen. The camera libs say that the camera returns 2 bytes per pixel in grayscale (I found nothing about this in the datasheet, though). However, the image I received was either doubled or had some kind of "halo" effect. To my surprise, the lib already filters out the extra byte, while the method Camera.bytesPerPixel() still returns 2. Well, as my university professor told me: never trust any specification!
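To make the two formats concrete, here is a minimal Python sketch of how a raw frame could be decoded on the PC side. It is not the code from camera_cont_display.py: the function names, the `big_endian` and `luma_index` parameters, and the idea of picking the grayscale layout from the buffer length are all my assumptions for illustration.

```python
def rgb565_to_rgb888(frame, big_endian=True):
    """Decode 2-byte RGB565 pixels into a list of (r, g, b) tuples.

    The byte order on the wire is an assumption; flip big_endian if the
    colours look wrong.
    """
    if len(frame) % 2:
        raise ValueError("RGB565 frame must have an even number of bytes")
    pixels = []
    for i in range(0, len(frame), 2):
        first, second = frame[i], frame[i + 1]
        value = (first << 8) | second if big_endian else (second << 8) | first
        r5 = (value >> 11) & 0x1F  # top 5 bits
        g6 = (value >> 5) & 0x3F   # middle 6 bits
        b5 = value & 0x1F          # bottom 5 bits
        # Replicate the high bits into the low bits so full scale maps to 255.
        pixels.append((
            (r5 << 3) | (r5 >> 2),
            (g6 << 2) | (g6 >> 4),
            (b5 << 3) | (b5 >> 2),
        ))
    return pixels


def grayscale_rows(frame, width, height, luma_index=0):
    """Split a raw grayscale frame into `height` rows of `width` bytes.

    Accepts either one byte per pixel or two bytes per pixel. In the
    two-byte case only one byte of each pair is kept; which one carries
    the luminance (luma_index 0 or 1) is an assumption to verify by eye.
    """
    n = width * height
    if len(frame) == n:
        luma = bytes(frame)
    elif len(frame) == 2 * n:
        luma = bytes(frame[luma_index::2])
    else:
        raise ValueError("frame length matches neither 1 nor 2 bytes per pixel")
    return [luma[y * width:(y + 1) * width] for y in range(height)]
```

Checking the received length against width x height, instead of trusting the reported bytes per pixel, is one way to avoid the doubled image described above.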

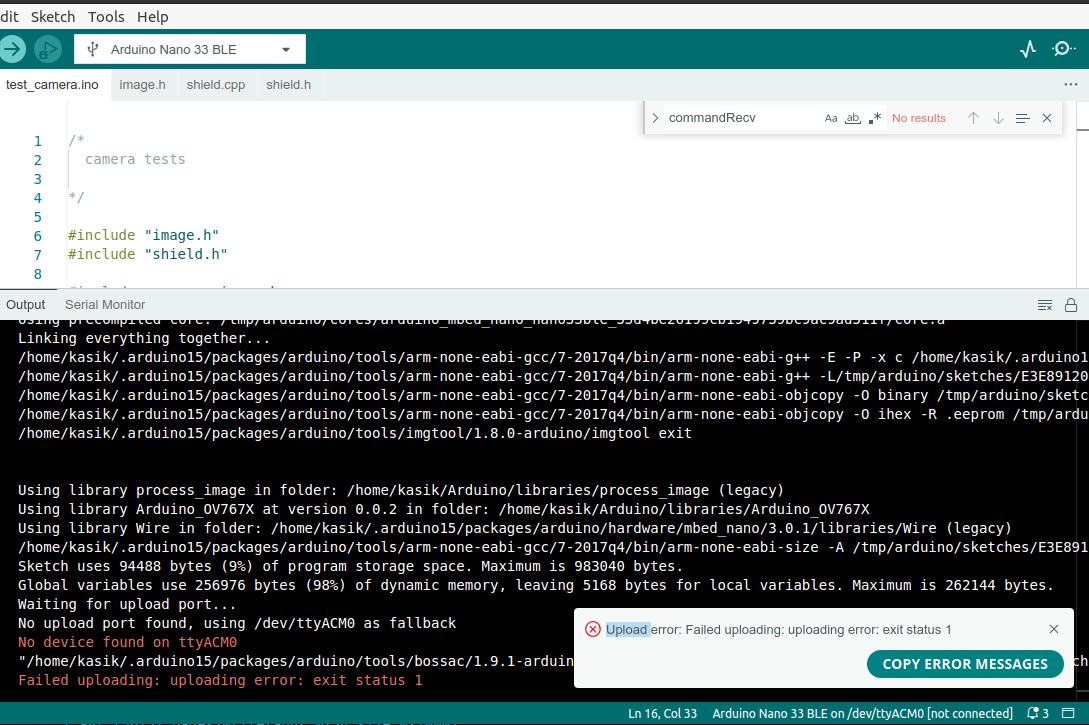

I went on to implement scaling and cropping methods (to be found here: ), inspired by the Edge Impulse example. I was a bit surprised to see that a number of image arrays are allocated dynamically there, but I decided to give it a try. Only 2 arrays need to be allocated at the same time for each operation, so less memory is used than with static allocation. However, it took only a few seconds before the Arduino started to have issues with memory allocation, I presume due to memory fragmentation. Static allocation then! After creating 3 arrays (for the image retrieved from the camera, for the scaled one and for the cropped one) at QVGA resolution, I reached as much as 98% of dynamic memory usage. This put my Arduino into an unexpected state, where the program didn't work and the PC didn't recognize the device anymore.
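The two operations can be sketched in a few lines. The version below is Python rather than the Arduino code, and it assumes one byte per pixel and nearest-neighbour sampling (I am not claiming that is the interpolation used in the sketch or in the Edge Impulse example). Both functions write into a buffer the caller allocates once, which mirrors the static-allocation approach; all names are hypothetical.

```python
def scale_nearest(src, src_w, src_h, dst, dst_w, dst_h):
    """Nearest-neighbour resize of a 1-byte-per-pixel image into dst."""
    for y in range(dst_h):
        sy = y * src_h // dst_h          # source row for this output row
        for x in range(dst_w):
            sx = x * src_w // dst_w      # source column for this output column
            dst[y * dst_w + x] = src[sy * src_w + sx]


def crop(src, src_w, src_h, dst, dst_w, dst_h, x0, y0):
    """Copy a dst_w x dst_h window starting at (x0, y0) from src into dst."""
    if x0 < 0 or y0 < 0 or x0 + dst_w > src_w or y0 + dst_h > src_h:
        raise ValueError("crop window lies outside the source image")
    for y in range(dst_h):
        start = (y0 + y) * src_w + x0
        dst[y * dst_w:(y + 1) * dst_w] = src[start:start + dst_w]


# Buffers are created once and reused for every frame (no per-frame allocation).
frame = bytes(range(16))      # a tiny 4x4 stand-in for a camera frame
scaled = bytearray(2 * 2)
cropped = bytearray(2 * 2)
scale_nearest(frame, 4, 4, scaled, 2, 2)
crop(frame, 4, 4, cropped, 2, 2, 1, 1)
```

Reusing fixed buffers trades a higher constant memory footprint for the absence of repeated allocate/free cycles, which is what I suspect caused the fragmentation.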

TIP: double-clicking the reset button brought it back to life and I could flash the Arduino once more. I searched through the documentation and various fora and failed to find any mention of this. Could anyone point me to an explanation?

Finally, after some trial and error I decided to use QCIF (176x144), scale it to 117x96 and then crop it to 96x96. This takes 35% of dynamic memory (perhaps I should consider in-place operations) and 8 ms to process.
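The numbers above follow from a bit of arithmetic, sketched here in Python. The assumptions are mine: one byte per grayscale pixel, scaling by the height ratio with rounding, and a horizontally centred crop. The byte total covers only the three image buffers, not the rest of the program's memory use.

```python
SRC_W, SRC_H = 176, 144      # QCIF
TARGET = 96                  # final square size

scale = TARGET / SRC_H                   # 96 / 144 = 2/3
scaled_w = round(SRC_W * scale)          # 117.33... rounds to 117
scaled_h = TARGET
x_offset = (scaled_w - TARGET) // 2      # columns dropped on the left side

# Buffer sizes at one byte per pixel (assumption).
buffers = {
    "camera": SRC_W * SRC_H,
    "scaled": scaled_w * scaled_h,
    "cropped": TARGET * TARGET,
}
total_bytes = sum(buffers.values())

print(scaled_w, scaled_h, x_offset, buffers, total_bytes)
```

With these assumptions the three buffers come to 25344 + 11232 + 9216 = 45792 bytes, and the crop skips 10 columns on the left and 11 on the right.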

kasik
