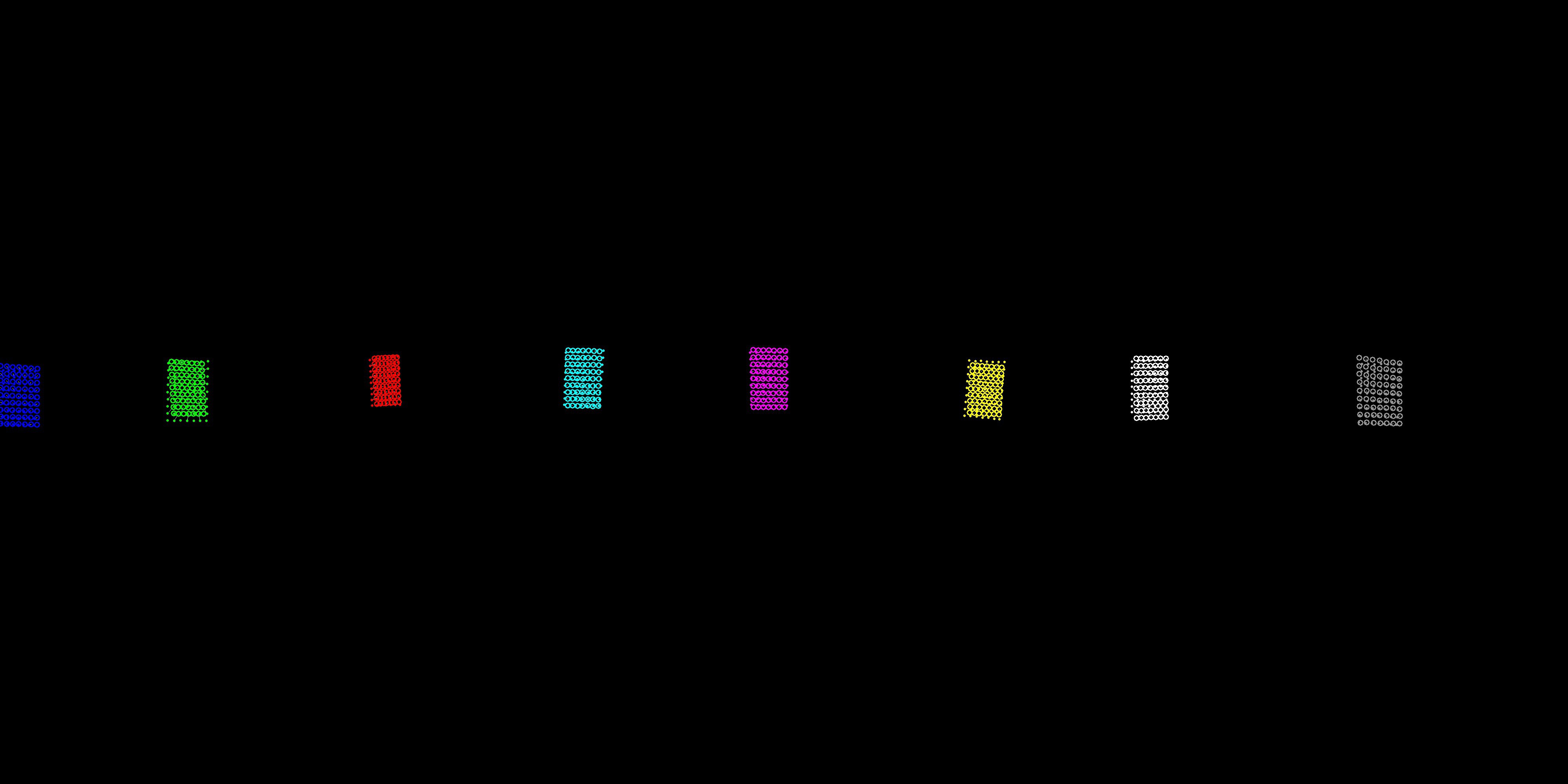

Here's the end of the baseline status. First, here's a nice picture that shows how the system has fused a series of checkerboard patterns it saw and calculated the field of view and attitude of all lenses (yeah, I punted on lens translation for the nonce). The solid dots represent the coordinates as seen in the right field of view of the counterclockwise camera, and the hollow circles show the location of the same coordinate as seen by the clockwise camera in its left field of view. A white line connects the two. The goal is to get the dots inside the circles. The enemy is noise. Lord of the Rings orc horde levels of noise :-)

But, not too shabby - calibration patterns are 68.5 cm away from the camera, local undistortion has been done, and it's not like stuff is scattered to the four winds.
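The "dots inside the circles" goal can be put into numbers as the angular gap between each solid dot and its hollow circle. What follows is only a minimal sketch, not the project's actual code: it assumes each sighting is available as an azimuth/elevation pair in degrees on a common view sphere, and the function names (`to_unit_vector`, `angular_error_deg`, `rms_misalignment_deg`) are made up for illustration.

```python
import math


def to_unit_vector(azimuth_deg, elevation_deg):
    """Convert an azimuth/elevation pair (degrees) to a unit vector on the view sphere."""
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    return (math.cos(el) * math.cos(az),
            math.cos(el) * math.sin(az),
            math.sin(el))


def angular_error_deg(a, b):
    """Angle in degrees between two unit vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    dot = max(-1.0, min(1.0, dot))  # guard acos against rounding just outside [-1, 1]
    return math.degrees(math.acos(dot))


def rms_misalignment_deg(pairs):
    """RMS angular gap over (dot, circle) pairs, each given as (azimuth, elevation) in degrees."""
    errors = [angular_error_deg(to_unit_vector(*dot), to_unit_vector(*circle))
              for dot, circle in pairs]
    return math.sqrt(sum(e * e for e in errors) / len(errors))


if __name__ == "__main__":
    # Hypothetical sightings of two checkerboard corners: (solid dot, hollow circle).
    sightings = [((10.0, 2.0), (10.4, 2.1)),
                 ((12.0, -1.0), (11.7, -0.8))]
    print(f"RMS misalignment: {rms_misalignment_deg(sightings):.3f} degrees")
```

A single RMS figure like this is one candidate for the thing being driven down by incremental error reduction; the length of each white line in the picture corresponds to one per-pair error.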

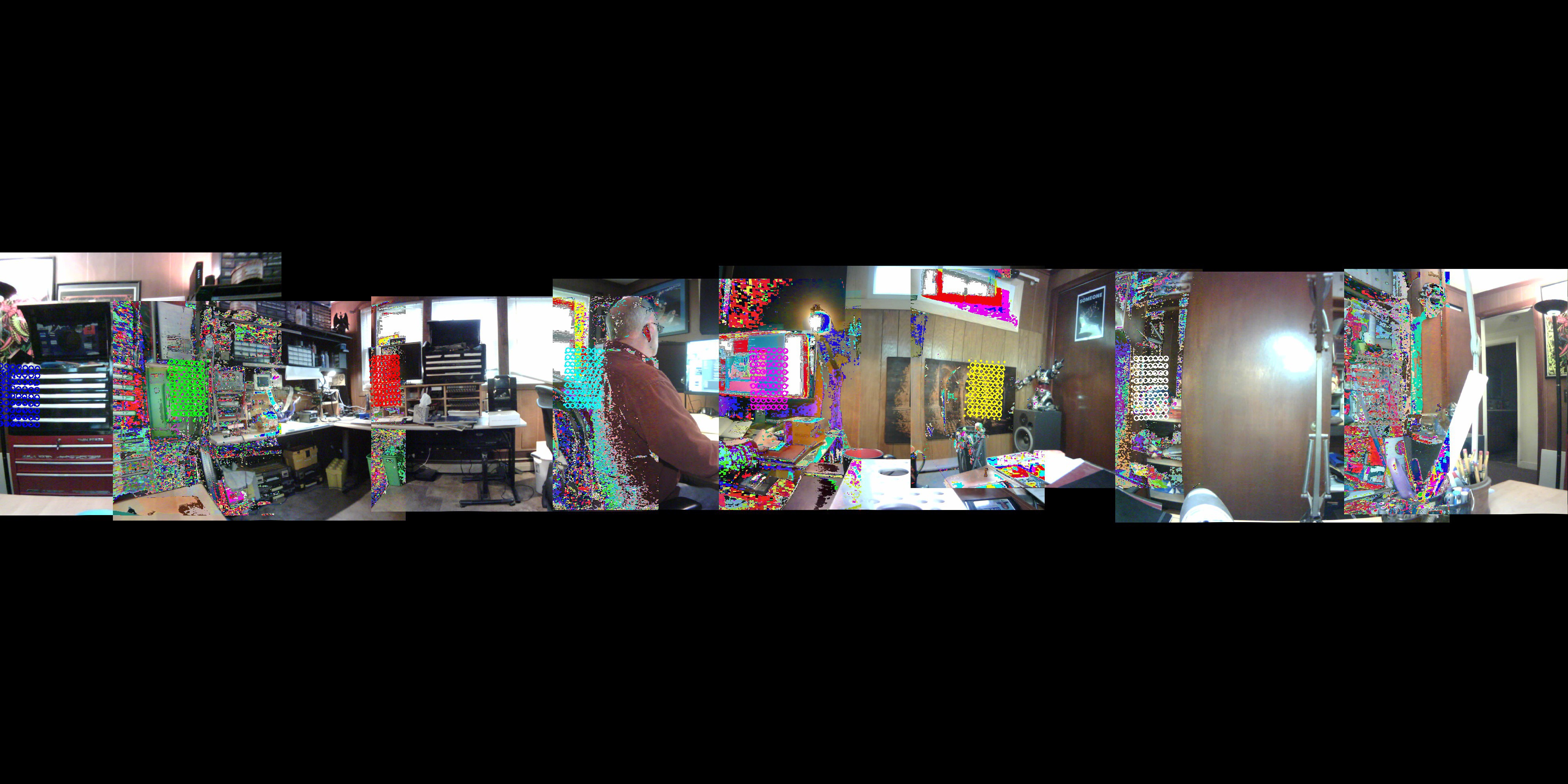

Welcome to machine learning, or, let's solve our problems with a lot of stats and incremental error reduction. The problem is that reality gleefully rolls around in the mud of nonlinear system dynamics, and... if I put the actual images in place, here's what bozo came up with.

In parts it's done quite nicely, in parts it seems deranged; what is clearest, however, is that everything changes everywhere and you really can't trust a damn thing until it gets to be a habit. Now, there's obviously a need to rectify all of the undistorted images, which is going to be a trick since it's a fully circular field of view. However, it won't finish there: two TensorFlow systems now have to be integrated, one to control voting on FOV/attitude adjustments, and a much larger, nastier one that handles nonlinear distortion of the image onto a hypersphere. We have to use a hypersphere for two reasons. First, we have no idea how large the actual space is, but we need to be able to quantify it externally. Space grows as long as a quamera delivers data for it, but a quamera needs space to deliver the data to. Second, and more interestingly, note that while two cameras with overlapping fields of view may align with respect to the intersection of rays on a common visual sphere, they actually have completely different opinions about the angle of incidence, which arises from their separate locations in space. In simple terms, the answer to 'what value lies here' is a function of your point of view.
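The point about angle of incidence can be illustrated with plain 2D geometry. This is a minimal sketch and nothing more: the 6 cm spacing between the two cameras is a made-up number (the real rig's lens translation is exactly what got punted on), the 68.5 cm distance is the calibration distance quoted above, and `bearing_deg` and `parallax_deg` are hypothetical helper names.

```python
import math


def bearing_deg(camera_xy, point_xy):
    """Direction in degrees from a camera position to a point, in a shared 2D frame."""
    dx = point_xy[0] - camera_xy[0]
    dy = point_xy[1] - camera_xy[1]
    return math.degrees(math.atan2(dy, dx))


def parallax_deg(cam_a, cam_b, point):
    """Absolute difference between the two cameras' bearings to the same point."""
    diff = bearing_deg(cam_a, point) - bearing_deg(cam_b, point)
    return abs((diff + 180.0) % 360.0 - 180.0)  # wrap the difference into [-180, 180)


if __name__ == "__main__":
    baseline_cm = 6.0  # ASSUMPTION: hypothetical camera spacing, not from the project
    cam_a = (-baseline_cm / 2.0, 0.0)
    cam_b = (baseline_cm / 2.0, 0.0)

    # The same target, straight ahead, at the calibration distance and much farther out.
    for distance_cm in (68.5, 685.0, 6850.0):
        angle = parallax_deg(cam_a, cam_b, (0.0, distance_cm))
        print(f"{distance_cm:8.1f} cm away: bearings disagree by {angle:.3f} degrees")
```

The disagreement shrinks as the target moves away but never quite reaches zero, so two cameras can agree on where a ray lands on a shared sphere while still disagreeing about the direction it arrived from.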


Cleverly placing the tl;dr at the end: the pictures show what's being done right now, repeatedly, in near real time, and there will now be an intermission while I get a whip and a chair and go play with TensorFlow.

Mark Mullin

