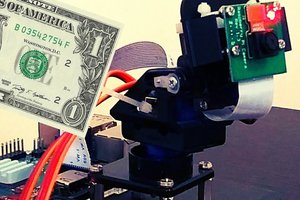

So let's go over some specs. The robot arm is made of:

- 4 Dynamixel AX-12A servos

- 1 Raspberry Pi 2 model B

- 1 Raspberry Pi camera module

- 1 Electromagnet on the top

- Aluminum, wood

- A small circuit for communicating with the servos (see here for more info)

- Colorful ribbon cables

It can search for screws (using image processing with the Raspberry Pi camera module), pick them up, and put them somewhere else. While building it, I tried to optimize two things:

- Making it move smoothly

- Getting it to pick up screws consistently

Making it move smoothly

I wasn't satisfied with how the servos moved when given just a goal position. The starts and stops were too harsh and too sudden, and the robot arm shook after reaching its goal position. I tried to fix this by implementing a software start-stop controller. Given a goal position, it shapes the speed during both the start and the stop like a sine wave. This was supposed to make the arm move more elegantly, more smoothly, and to a certain degree it works. In case you are wondering how the speed control was done: "speed" is one of the servo's parameters, and it can be set over the serial bus. It is as easy as that. There is no need to get some kind of current control going. The servo does it all for you.
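To make the idea concrete, here is a minimal sketch of a sine-shaped speed profile. It is not the controller used on the arm: the function name `sine_speed`, the `ramp` fraction, and the `min_speed` floor are all assumptions made for illustration. The floor is there because a speed value of 0 would never let the move start (and on the AX-12A, as far as I know, a moving speed of 0 means "no speed control" rather than "stop").

```python
import math


def sine_speed(progress, max_speed, min_speed=1, ramp=0.25):
    """Speed setpoint for a move that is `progress` (0.0 to 1.0) of the way done.

    The first and last `ramp` fraction of the move follow a quarter sine wave;
    in between the speed stays at `max_speed`. `ramp` should be in (0, 0.5].
    All names and defaults here are hypothetical.
    """
    progress = min(max(progress, 0.0), 1.0)
    if progress < ramp:
        # Soft start: rises from 0 to 1 along a quarter sine.
        scale = math.sin(0.5 * math.pi * progress / ramp)
    elif progress > 1.0 - ramp:
        # Soft stop: mirror image of the start.
        scale = math.sin(0.5 * math.pi * (1.0 - progress) / ramp)
    else:
        scale = 1.0
    # Never return less than min_speed, so the servo keeps moving.
    return max(min_speed, int(round(max_speed * scale)))


# Sample the profile at a few points of a move.
profile = [sine_speed(p / 8, 200) for p in range(9)]
```

In a control loop, `progress` would come from the servo's present position relative to the start and goal positions, and the returned value would be written to the servo's speed parameter before each update.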

Getting it to pick up screws consistently

The second thing worth mentioning is the image processing. I didn't use OpenCV. The image processing algorithms applied here are all very simple, and I wanted to write them on my own. An important library I used was Python's "picamera", which provides an easy way to get grayscale pixel data from the Raspberry Pi camera module. The pixel data was then put through several algorithms: Edge Detection, Binarization, Pixel Expansion, Labeling and Object Extraction. After that, the robot knows the positions of the objects in front of it (only in the xy plane, though) and their areas in pixels. The area is useful when deciding whether or not to pick up an object: this robot arm ignores things that appear to be too small.
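The first three steps can be sketched in plain Python without any image library. This is only an illustration of the general technique, not the code running on the robot: the neighbour-difference edge filter, the threshold value, and the reading of "Pixel Expansion" as a simple dilation are all assumptions, as are the function names.

```python
def edge_strength(gray):
    """Edge Detection: sum of absolute differences to the right and lower neighbour."""
    h, w = len(gray), len(gray[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h - 1):
        for x in range(w - 1):
            out[y][x] = (abs(gray[y][x + 1] - gray[y][x])
                         + abs(gray[y + 1][x] - gray[y][x]))
    return out


def binarize(img, threshold):
    """Binarization: 1 where the value reaches the threshold, else 0."""
    return [[1 if v >= threshold else 0 for v in row] for row in img]


def expand(binary, radius=1):
    """Pixel Expansion (assumed to be dilation): grow every set pixel by `radius`."""
    h, w = len(binary), len(binary[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            if binary[y][x]:
                for ny in range(max(0, y - radius), min(h, y + radius + 1)):
                    for nx in range(max(0, x - radius), min(w, x + radius + 1)):
                        out[ny][nx] = 1
    return out


# A 6x6 test image: dark background (10) with a bright 2x2 blob (200).
gray = [[10] * 6 for _ in range(6)]
for y in (2, 3):
    for x in (2, 3):
        gray[y][x] = 200

edges = edge_strength(gray)
binary = binarize(edges, threshold=100)
expanded = expand(binary)
```

Edge detection only marks the outline of the blob, so its interior stays empty after binarization. The expansion step closes such gaps, which makes the outline one solid region for the labeling step that follows.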

So let's take a closer look at the image processing. I wrote that I used several algorithms to determine the xy position of the screws. I called the algorithms Edge Detection, Binarization, Pixel Expansion, Labeling and Object Extraction. But what do those algorithms do? To get a better idea, look at the gif below.

Starting with the grayscale image, the data gets processed and passed from one algorithm to the next. In the end, all that's left are 3 points which give the 2-dimensional positions of the objects as seen from the camera. Note how the objects differ in color. Different colors mean the Raspberry Pi is aware that there are multiple objects on the table. Watch the embedded video above to see more image processing pics (they are in the second half of the video).
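The last two steps, Labeling and Object Extraction, can be sketched as a flood fill that gives every connected blob its own number and then reduces each blob to a center point and an area. Again, this is a sketch under assumptions rather than the robot's actual code: 4-connectivity, the dictionary layout, and the names `label_objects` and `pickable` are made up for illustration.

```python
from collections import deque


def label_objects(binary):
    """Label 4-connected blobs; return the label image and one record per blob."""
    h, w = len(binary), len(binary[0])
    labels = [[0] * w for _ in range(h)]
    objects = []
    for y in range(h):
        for x in range(w):
            if binary[y][x] and not labels[y][x]:
                label = len(objects) + 1
                labels[y][x] = label
                queue = deque([(y, x)])
                area = sum_x = sum_y = 0
                while queue:
                    cy, cx = queue.popleft()
                    area += 1
                    sum_x += cx
                    sum_y += cy
                    for ny, nx in ((cy - 1, cx), (cy + 1, cx), (cy, cx - 1), (cy, cx + 1)):
                        if (0 <= ny < h and 0 <= nx < w
                                and binary[ny][nx] and not labels[ny][nx]):
                            labels[ny][nx] = label
                            queue.append((ny, nx))
                # Object Extraction: the centroid (in pixels) and the pixel count.
                objects.append({"label": label, "x": sum_x / area,
                                "y": sum_y / area, "area": area})
    return labels, objects


def pickable(objects, min_area):
    """Ignore blobs that appear too small to be worth picking up."""
    return [o for o in objects if o["area"] >= min_area]


binary = [
    [1, 1, 0, 0, 0],
    [1, 1, 0, 0, 1],
    [0, 0, 0, 0, 0],
    [0, 1, 1, 1, 0],
]
labels, objects = label_objects(binary)
targets = pickable(objects, min_area=3)
```

The label image corresponds to the differently colored objects in the gif: each label is one object the Raspberry Pi knows about. With `min_area=3`, the single stray pixel in the example is dropped and only the two larger blobs remain as targets.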

Moving the Robot Arm to reach the target

What do we have so far? We have an image with some objects on it. We used some simple image processing algorithms to extract the xy position of each object relative to the camera. Notice how the units for these coordinates are literally "pixels". We could determine some constant to convert the position to [cm], [inches], or any other unit of length we desire, but this all means nothing to the Raspberry Pi, so we might as well leave it the way it is. Our unit of length at this point is the [pixel].

What's next? We need a way to move the robot arm in such a way that the electromagnet tip comes close enough to the object so that we can pick it up. There are several ways to do this. Here are two ideas which might pop up.

- Inverse kinematics

- Path teaching

The idea with the former approach is that we tell the program how long all the parts are and how they are connected. This, plus the information about the current rotation of all the joints relative to each other, enables the Raspberry Pi to compute how much and in which direction every joint has...

Tobias Kuhn
