About a week ago, Google released open source code for the amazing artificial neural network research they put out in June. These networks are trained on millions of images, progressively extracting higher-level features of the image until the final output layer comes up with an answer as to what it sees. Much like how our brains work, the network is asked "based on what you've seen or known, what do you think this is?" It is analogous to a person recognising objects in clouds or Rorschach tests.

Now, the network is fed a new input (e.g. me) and tries to recognize this image based on the context of what it already knows. The code encourages the algorithm to generate an image of what it 'thinks' it sees, and feeds that back into the input. This creates a positive feedback loop, with every layer amplifying the biased misinterpretation. If a cloud looks a little bit like a bird, the network will make it look more like a bird, which will make the network recognize the bird even more strongly on the next pass, and so forth.
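To make that feedback loop concrete, here is a toy sketch in plain Python. It is not the released deepdream code and it does not use a trained network: the single `feature` vector is a hypothetical stand-in for "something a layer responds to", and the image is just a flat list of numbers. All function names are my own. Each step nudges the pixels in whatever direction strengthens the response that is already there, so a faint match gets stronger on every pass.

```python
def layer_response(image, feature):
    """Toy 'neuron': dot product of a flattened image with one feature detector."""
    return sum(p * w for p, w in zip(image, feature))


def dream_step(image, feature, step_size=0.1):
    """One gradient-ascent step on the objective 0.5 * response**2.

    The gradient of that objective with respect to the image is
    response * feature, so the pixels move toward whatever the
    detector already (weakly) sees.
    """
    response = layer_response(image, feature)
    grad = [response * w for w in feature]
    # Normalise by the mean absolute gradient so step_size has a stable meaning.
    scale = sum(abs(g) for g in grad) / len(grad)
    if scale == 0:
        return list(image)  # nothing to amplify
    return [p + step_size * g / scale for p, g in zip(image, grad)]


def dream(image, feature, steps=10, step_size=0.1):
    """Feed the output back in as the next input, `steps` times."""
    for _ in range(steps):
        image = dream_step(image, feature, step_size)
    return image


if __name__ == "__main__":
    cloud = [0.1, 0.0, -0.05, 0.2]   # hypothetical 4-pixel "image"
    bird = [1.0, 0.5, 0.0, 1.0]      # hypothetical "bird" detector
    print("before:", layer_response(cloud, bird))
    print("after: ", layer_response(dream(cloud, bird), bird))
```

Running it shows the "bird" response growing with every pass, while the pixel the detector ignores (weight 0.0) is left alone.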

You can visit the Inceptionism Gallery for more hi-res neural net generated images.

I downloaded the deepdream code almost immediately upon its release, and to my relief it was written in Python within an IPython notebook. Holy crap, I was in luck! I was able to get it running the following morning and create my own images that evening! My first test was of Richard Stallman, woah O.o

I made several more, but my main interest is in Virtual Reality implementations. The first test I did in that direction was to take standard format 360 photospheres I took using Google Camera and run them through the network. Here's the original photograph I took:

and here's the output:

I can easily view this in my Rift or GearVR, but I had a few issues with this at first:

- Because the computer running the network is an inexpensive ThinkPad, I was limited in what kind of input I could feed into the network before my kernel would panic.

- The output was low resolution, which would be rather unpleasant to view in VR.

- Viewing a static image does not provide a very exciting and interactive experience with a kickass new medium like Virtual Reality.

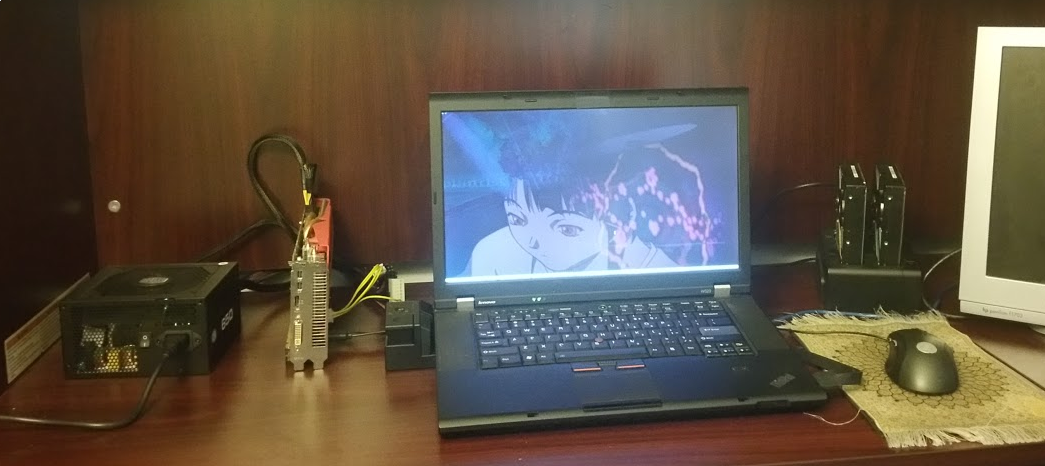

The first issue is something I should be able to handle with an upgrade, which I have already been able to give my workstation laptop via an external GPU adapter:

That is a 7970 connected via PCI Express to my W520 laptop. It needs its own power supply as well. I had to sell that GPU, but I'll be in the market for another card. VR / neural network research requires serious performance.

The second issue was the low resolution. I had previously experimented with waifu2x, which uses deep convolutional neural networks for up-scaling images. Here's an example I made:

This super-resolution algorithm can be subtle, but it's brilliant, and it made my next test a lot more interesting! I have the original side by side with the up-scaled dream version:

Here's some detail of the lower resolution original image:

And here's the output from the image super resolution neural net:

It has taken on some cartoonish features because the network was trained on a dataset consisting mostly of anime (lol), but I find that this makes the results more interesting, since I find reality to be rather dull.

Lastly, the third problem I have with these results is that the experience of seeing these images in VR is still not exciting. I have brainstormed a few ideas for how to make Deep Dream and VR more interesting:

- Composite the frames into a video, fade in and out between raw image and dream world.

- Implement a magic wand feature (like Photoshop / GIMP) to select a part of the world as input to the neural network, then output the result back into the image in VR. I found this: Wand is a ctypes-based simple ImageMagick binding for Python.

- Use Leap Motion hand tracking in VR to cut out the input from inside the 360 picture and output it back as a trippy object in VR, or composite it back into the picture.

- Take a video and have a very subtle deep dream demo with only certain elements [such as the sky] fed as input rather than the whole image (same concept as the previous two points).

- Create my own data sets by scraping the web for images to train a new model with.

- Set up a Janus Server and track head rotation / player position / gaze. The tracking data will be used to interact with deep dream somehow...

- Screen record the VR session and use unwarpVR ffmpeg scripts to undo the distortion, then feed the result into the neural network. More useful when paired with Janus server tracking.

- Record timelapse video with a 360 camera and create deep dream VR content.

- Experiment with Augmented Reality
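Two of the ideas above, fading between the raw image and the dream world and dreaming only on selected regions such as the sky, come down to the same per-pixel blend. Here is a minimal sketch in plain Python, assuming images are flat lists of floats of equal length; the function names are hypothetical, and a real pipeline would more likely use an array or imaging library.

```python
def blend(raw, dream, alpha):
    """Linear crossfade: alpha=0.0 gives the raw image, alpha=1.0 the dream."""
    return [(1.0 - alpha) * r + alpha * d for r, d in zip(raw, dream)]


def fade_alphas(n_frames):
    """Triangle ramp of blend weights: raw -> dream -> raw over n_frames."""
    if n_frames < 2:
        return [0.0] * n_frames
    half = (n_frames - 1) / 2.0
    return [1.0 - abs(i - half) / half for i in range(n_frames)]


def masked_composite(raw, dream, mask):
    """Per-pixel blend: mask 1.0 takes the dream pixel, 0.0 keeps the raw one.

    The mask could come from a magic-wand selection or a sky segmentation;
    how it is produced is outside this sketch.
    """
    return [(1.0 - m) * r + m * d for r, d, m in zip(raw, dream, mask)]


if __name__ == "__main__":
    raw = [0.2, 0.4, 0.6]
    dreamed = [0.8, 0.6, 0.1]
    # One blended frame per alpha; these frames would then be encoded to video.
    frames = [blend(raw, dreamed, a) for a in fade_alphas(5)]
    print(frames)
    # Dream only on the first pixel (e.g. "the sky").
    print(masked_composite(raw, dreamed, [1.0, 0.0, 0.0]))
```

A soft mask (values between 0.0 and 1.0 near the selection edge) would avoid hard seams where the dreamed region meets the untouched photo.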

I'll have a VR room online later today testing various experiments out. To be continued...

alusion
