Texture Synthesis

What is it like to be a computer that makes art? If a computer could originate art, what would it be like from the computer's perspective?

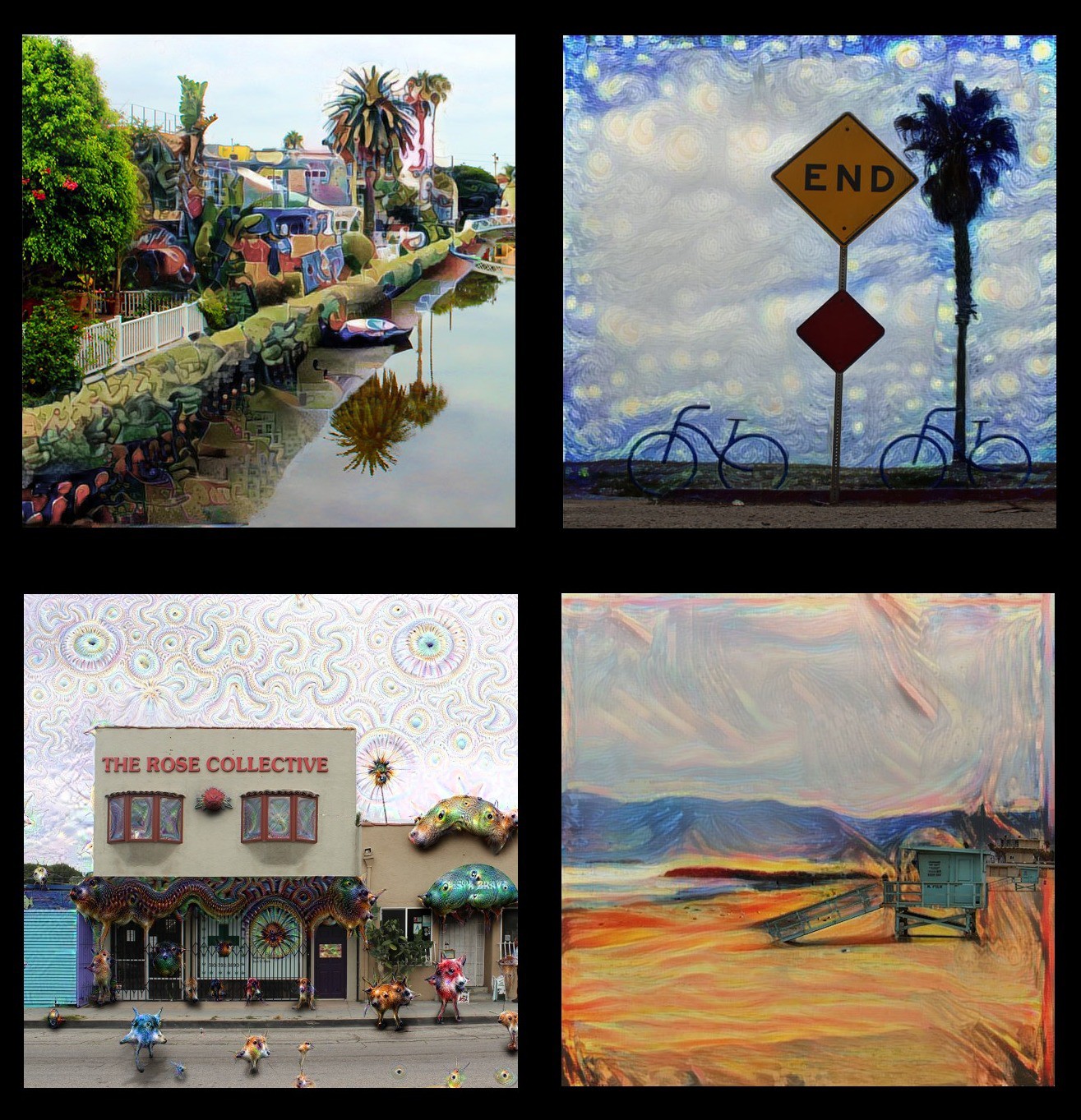

One thing beginner artists are taught to avoid is "schema", a phenomenon in which the artist draws from the mind's eye rather than from what the eye actually sees. Deep Dream is a good example of this kind of neurological indoctrination: cognitive bias is reinforced at every level from the moment the network notices a trend.

The study of complex systems looks at how relationships between parts give rise to the collective behaviours of a system, and how the system interacts and forms relationships with its environment. Examples of complex systems are Earth's global climate, the human brain, social organization, an ecosystem, a living cell, and ultimately the entire universe. In many cases it is useful to represent such a system as a network where the nodes represent the components and the links their interactions. Neural networks have been integral in advancing the study of complex systems. In many ways, the process for generative glitch art is about exploiting chaos within the system.

Chaos theory and science fiction share much in common: tiny alterations to the world that later cause thunderstorms far in the future. There is a deep structure in the apparently random, chaotic behaviour that characterizes all natural and some social phenomena. This structure takes the form of a 'strange attractor', a state towards which a system is drawn. A good science-fiction example is the novel The Difference Engine, in which Charles Babbage perfects his Analytical Engine and the computer age arrives a century ahead of its time.

Generative art refers to art that in whole or in part has been created with the use of an autonomous system. Generative art can be viewed as it develops in real time. Typically such works are never displayed the same way twice. Some generative art also exists as static artifacts produced by previously unseen processes.

G'MIC is a research tool. Many of its commands (900+, all configurable, in a libgmic library file under 5 MB) routinely produce 'images' that are useful data sets but do not span a range of values compatible with standard graphic file formats. ZArt is a stand-alone program, distributed with the G'MIC sources, that applies (almost) real-time image effects to video; multiple webcams, image files and video files are now supported. However, the much-improved command-line interface is undoubtedly the most powerful and elegant means of using G'MIC. It now uses OpenCV by default to load and save image sequences, so we can apply image processing algorithms frame by frame to video files (command -apply_video) or webcam streams (command -apply_camera), or generate video files from still images, for instance. The new commands -video2files and -files2video make it easy to decompose video files into frames and recompose them. Processing video files is almost child's play with G'MIC, making it a powerful companion to FFmpeg and ImageMagick.

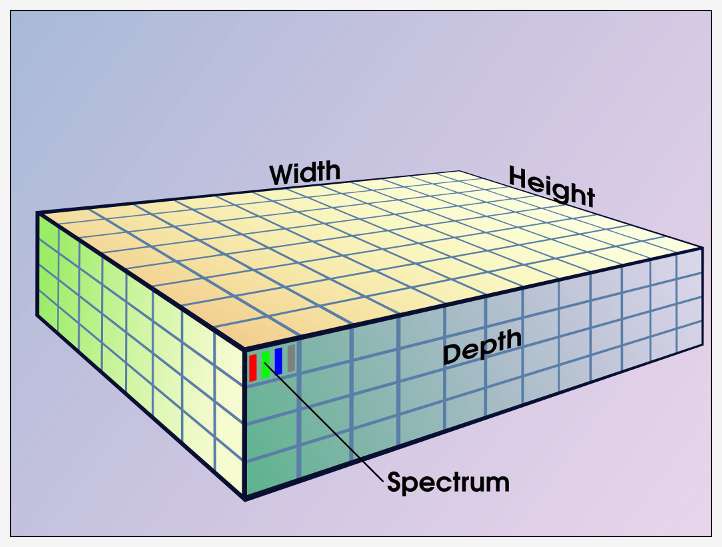

With depth, G'MIC images represent volumes, a construct that readily supports tomographic imaging (i.e., 'CAT scans'). Video footage also constitutes one 'image' on a G'MIC image list, with depth serving as a proxy for time. Depth-aware commands can produce various animation effects. A one-minute NTSC video, 720x480 pixels shot at 29.97 frames per second, becomes a G'MIC image with a depth of 1798 pixels. Increments along the depth axis are often called slices: a slice is a frame in the context of a video, and a two-dimensional section in the context of tomographic imaging.
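The depth figure above is just duration times frame rate. Here is a minimal Python sketch of that arithmetic; the function name `video_depth` is my own and not part of G'MIC, and I am assuming a partial trailing frame is simply dropped.

```python
def video_depth(duration_seconds: float, frames_per_second: float) -> int:
    """Number of whole frames (slices) in a clip of the given length.

    Assumption: a partial trailing frame is discarded.
    """
    return int(duration_seconds * frames_per_second)


# One minute of NTSC footage, as in the example above.
width, height = 720, 480
depth = video_depth(60, 29.97)

# (width, height, depth) of the volume the video would occupy.
print((width, height, depth))
```

Each step along the third axis is one frame of the video, so slicing the volume at a fixed depth gives back a single 720x480 picture.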

G'MIC is found within the most popular GNU/Linux distributions as gmic and gimp-gmic. One useful filter for virtual reality world building is the patch-based algorithm for seamless textures. With the GIMP plugin installed, you can apply this filter via → → .

This filter creates a tileable texture whose edges are blended nicely.

View my other generated neural art seamless textures: http://imgur.com/a/gMUTv

With the G'MIC interpreter, you can easily script an array to view the texture tiling:

# Apply patch-based seamless filter to image

gmic file.jpg -gimp_make_seamless 30

# Tile single image into an array

gmic file.jpg -frame_seamless 1 -array 3,3,2

# All images in directory

mkdir -p test; for i in *.jpg; do gmic "$i" -array 2,2,2 -o "test/$i"; done
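The batch loop above can also be driven from Python, which handles filenames with spaces without any quoting tricks. This is only a sketch: the helper names `tile_command`, `tile_commands` and `run_all` are my own, and the gmic arguments are copied straight from the shell line above rather than checked against every G'MIC version.

```python
import subprocess
from pathlib import Path


def tile_command(image: Path, out_dir: Path, tiles: str = "2,2,2") -> list:
    """Build the gmic argument list for one image, without running it."""
    return ["gmic", str(image), "-array", tiles, "-o", str(out_dir / image.name)]


def tile_commands(src_dir: Path, out_dir: Path) -> list:
    """One argument list per .jpg file in src_dir, in sorted order."""
    return [tile_command(p, out_dir) for p in sorted(src_dir.glob("*.jpg"))]


def run_all(src_dir: Path, out_dir: Path) -> None:
    """Create out_dir and run gmic on every image (requires gmic on PATH)."""
    out_dir.mkdir(parents=True, exist_ok=True)
    for argv in tile_commands(src_dir, out_dir):
        subprocess.run(argv, check=True)


# Usage (not executed here): run_all(Path("."), Path("test"))
```

Building the argument list separately from running it makes it easy to print the commands first and check them before touching a whole directory of textures.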

Stroke Style Synthesis

Non-Photorealistic Rendering: NPR systems simulate artistic behavior that is not mathematically founded and often seems to be unpredictable.

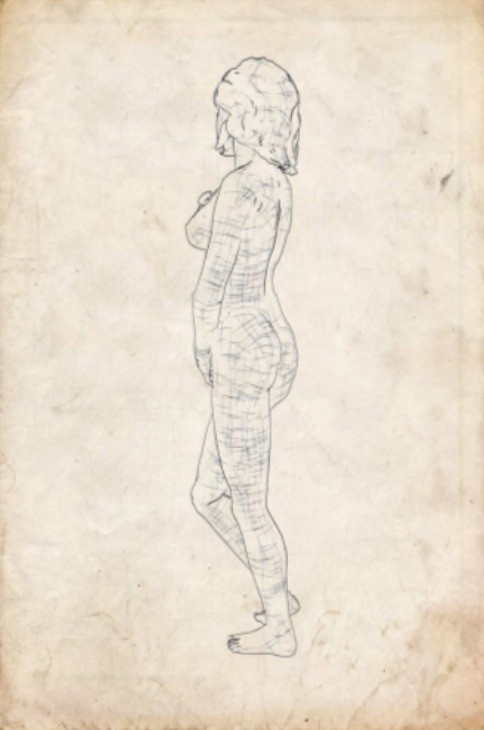

Procedural Generation of Hand-drawn like Line Art: http://sirkan.iit.bme.hu/~szirmay/procgenhand.pdf

Artistic line drawing generation that supports different contour and hatching line renderings.

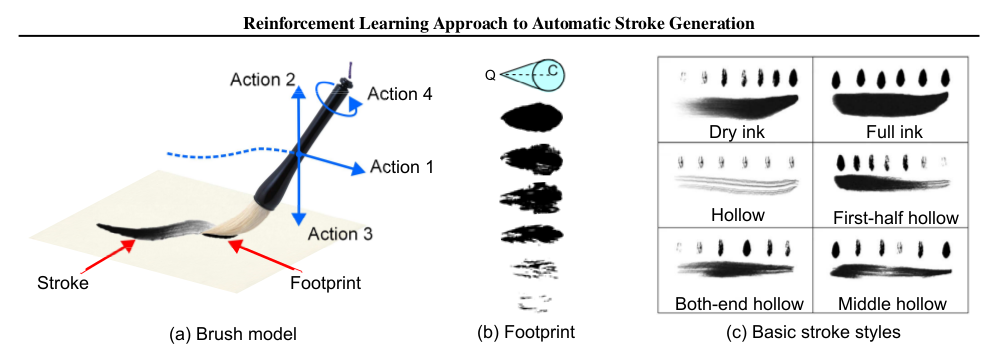

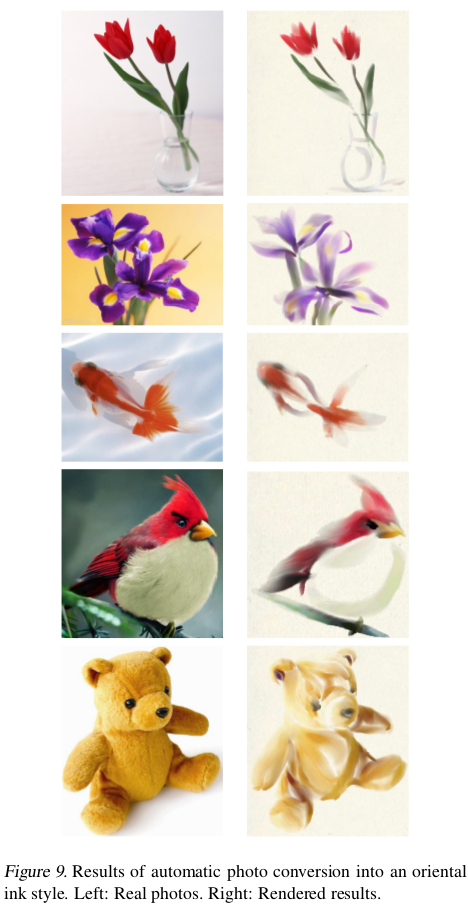

Automatic Stroke Generation in Oriental Ink Painting: http://icml.cc/2012/papers/101.pdf

The agent uses a Markov Decision Process to learn a (locally) optimal drawing policy by being rewarded for obtaining smooth and natural brush strokes in arbitrary shapes.

A Markov chain is a discrete random process with the property that the next state depends only on the current state. A Markov Decision Process (MDP) extends the Markov chain, providing a mathematical framework for modeling decision making in situations where outcomes are partly random and partly under the control of a decision maker. The difference is the addition of actions (allowing choice) and rewards (giving motivation).
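To make the chain-versus-MDP difference concrete, here is a toy Python sketch. Everything in it is made up for illustration: the two stroke states, the two brush speeds, the probabilities and the rewards are my own assumptions, and the solver is plain value iteration, not the learning method used in the paper.

```python
import random

# Markov chain: the next state depends only on the current state.
CHAIN = {
    "smooth": {"smooth": 0.8, "jagged": 0.2},
    "jagged": {"smooth": 0.5, "jagged": 0.5},
}

# MDP: the same idea, plus actions (choice) and rewards (motivation).
# Hypothetical numbers: a fast stroke pays more but is riskier.
MDP = {
    "smooth": {
        "slow": {"next": {"smooth": 0.9, "jagged": 0.1}, "reward": 1.0},
        "fast": {"next": {"smooth": 0.6, "jagged": 0.4}, "reward": 2.0},
    },
    "jagged": {
        "slow": {"next": {"smooth": 0.7, "jagged": 0.3}, "reward": -1.0},
        "fast": {"next": {"smooth": 0.2, "jagged": 0.8}, "reward": -2.0},
    },
}


def chain_step(state, rng):
    """Sample the next state of the Markov chain from the current one."""
    options = list(CHAIN[state])
    weights = [CHAIN[state][s] for s in options]
    return rng.choices(options, weights=weights)[0]


def q_value(mdp, values, state, action, gamma):
    """Expected return of taking `action` in `state`, then following `values`."""
    outcome = mdp[state][action]
    future = sum(p * values[s] for s, p in outcome["next"].items())
    return outcome["reward"] + gamma * future


def value_iteration(mdp, gamma=0.9, sweeps=200):
    """Return (state values, greedy policy) after a fixed number of sweeps."""
    values = {s: 0.0 for s in mdp}
    for _ in range(sweeps):
        values = {
            s: max(q_value(mdp, values, s, a, gamma) for a in mdp[s])
            for s in mdp
        }
    policy = {
        s: max(mdp[s], key=lambda a: q_value(mdp, values, s, a, gamma))
        for s in mdp
    }
    return values, policy


values, policy = value_iteration(MDP)
print(policy)
```

The chain just drifts between states on its own, while the MDP lets the agent pick a brush speed in each state and scores the result, which is what gives it something to optimize.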

The problem with the process right now is the lack of control over rendering, with one dominant style processing the entire frame. To the eyes of a trained artificial network, a picture should be worth a thousand words. The artist should be able to extract and express unique features from a scene. In other words, we should be able to select parts of the scene rather than process everything. This would go a long way towards generating richer assets for our use with mixed reality art.

Manual Feature Extraction

This is the fastest way I have found to segment parts of a scene manually:

gmic image.jpg -x_segment , -output output.png

----------------------------------------------------------------------------------------------------

---- Left mouse button or key 'F' create a new foreground control point (or move an existing one).

---- Right mouse button or key 'B' create a new background control point (or move an existing one).

---- Mouse wheel, or keys 'CTRL+arrows UP/DOWN' zoom view in/out.

---- 'CTRL+mouse wheel', 'SHIFT+mouse wheel' or arrow keys move image in zoomed view.

---- Key 'SPACE' updates the extraction mask.

---- Key 'TAB' toggles background view modes.

---- Key 'M' toggles marker view modes.

---- Key 'BACKSPACE' deletes the last control point added.

---- Key 'PAGE UP' increases background opacity.

---- Key 'PAGE DOWN' decreases background opacity.

---- Keys 'CTRL+D' increase window size.

---- Keys 'CTRL+C' decrease window size.

---- Keys 'CTRL+R' reset window size.

---- Keys 'ESC', 'Q' or 'ENTER' exit the interactive window.

----------------------------------------------------------------------------------------------------

The green and red dots mark the mask control points; the spacebar updates the preview, and Tab flicks between the background view modes. In a VR environment, I think these functions would map to different and more discreet inputs, such as a pointer, eye tracking, or voice. It's about clipping the important bits out of a memory, so it's best to try to imagine how this would ideally work AFK, because c'mon, this is mixed reality.

There'll be plenty of cooler examples of this technique in the future.

Autonomous Format

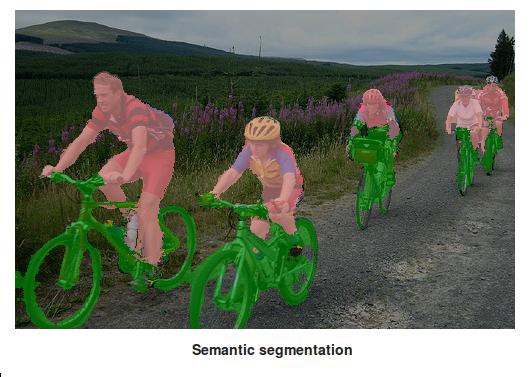

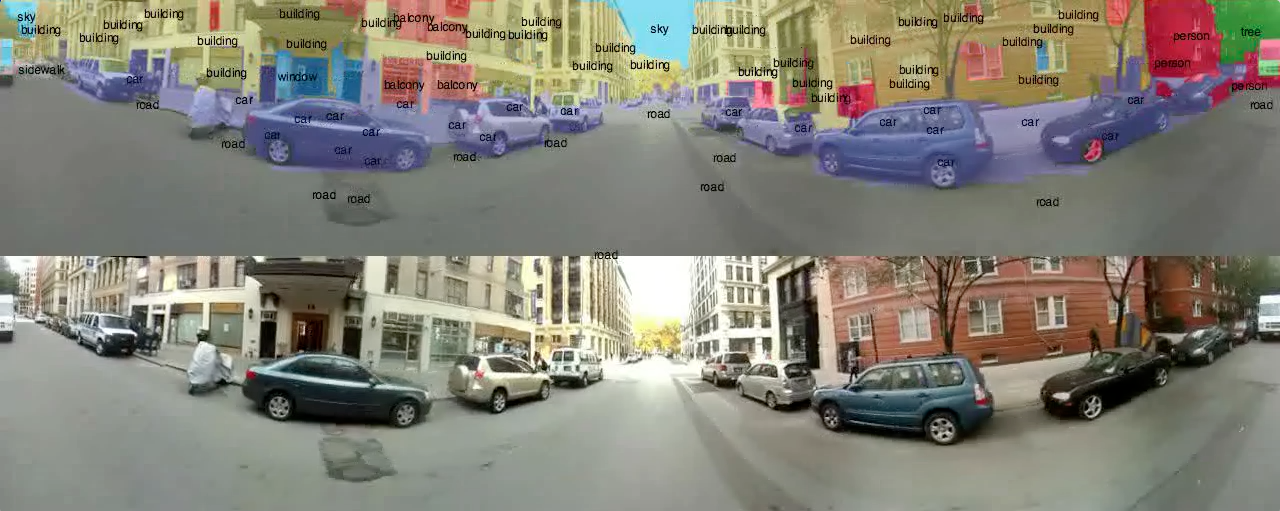

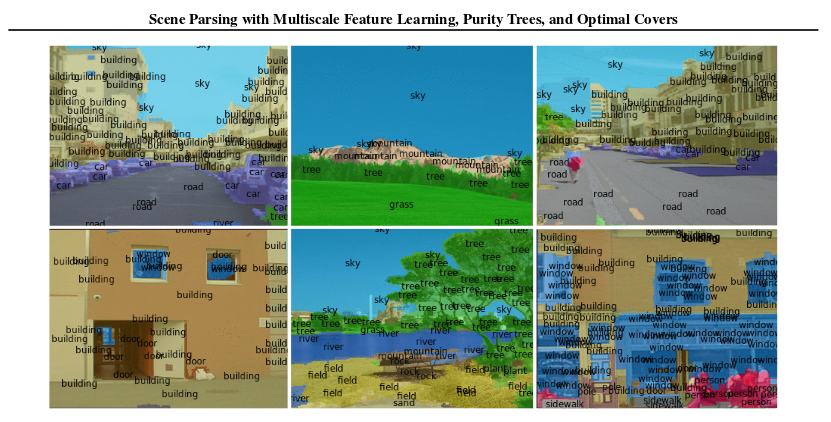

Semantic Segmentation allows computers to recognize objects in images and even recover the 2D outline of the object. Try it out here.

The traditional approach to dealing with complexity is to reduce or constrain it. Typically, this involves compartmentalisation: dividing a large system into separate parts. Organizations, for instance, divide their work into departments that each deal with separate issues. Engineering systems are often designed using modular components. Modular designs do become susceptible to failure when issues arise that bridge the divisions, but at least you can fix or replace the affected part instead of the entire system. In order to guide the process better, the algorithm must first learn to classify through observation. This way it will begin to pay closer attention to details within the composition by expressing the unique objects within the scene.

[The future is there... looking back at us. Trying to make sense of the fiction we will have become.]

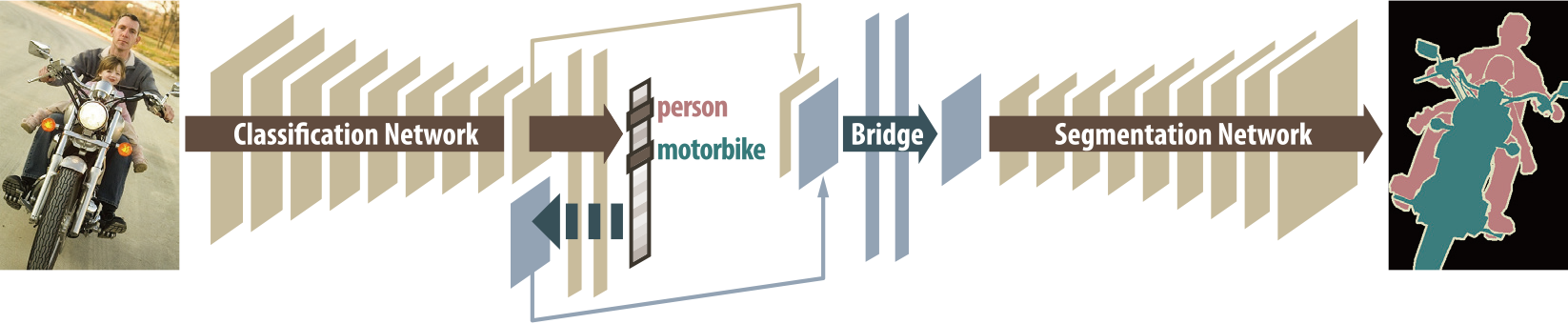

DecoupledNet: Decoupled Deep Neural Network for Semi-supervised Semantic Segmentation

This algorithm learns a separate network for each task: one for classification and one for segmentation.

The goal is to extract features from the world and encode them with more useful metadata, like time and position, in order to build a memory palace that will sink into dreamspace. The metadata will be piped into the system for processing various textures that will be assimilated into generated virtual worlds. Each mask represents a layer of texture that can be extracted and preserved as numerical data. See: http://gmic.eu/tutorial/images-as-datasets.shtml
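As a rough idea of what "a mask as a layer of numerical data" could look like, here is a small Python sketch. The record layout, the function name `extract_layer` and the sample values are all hypothetical; this is not how G'MIC or DecoupledNet store anything.

```python
def extract_layer(image, mask, timestamp, position):
    """Keep only the pixels selected by a binary mask, tagged with metadata.

    image and mask are equally sized lists of rows; a truthy mask value
    means "this pixel belongs to the layer".
    """
    pixels = [
        (x, y, value)
        for y, (image_row, mask_row) in enumerate(zip(image, mask))
        for x, (value, keep) in enumerate(zip(image_row, mask_row))
        if keep
    ]
    return {"time": timestamp, "position": position, "pixels": pixels}


# Tiny 3x2 grayscale "image" and a mask picking out three pixels.
image = [[10, 20, 30],
         [40, 50, 60]]
mask = [[0, 1, 0],
        [1, 1, 0]]

layer = extract_layer(image, mask, timestamp=0.0, position=(0.0, 0.0))
print(layer["pixels"])
```

Storing each pixel with its coordinates means the layer can be dropped back into the right place later, and the time and position fields ride along with it.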

alusion

Full Album http://imgur.com/a/tcmj1
