Team Pegasus created an autonomous vehicle that uses machine vision algorithms and techniques, as well as data from the on-board sensors, to follow street lanes, perform parking maneuvers and overtake obstacles blocking its path. The innovative aspect of this project is, first and foremost, the use of an Android phone as the unit that performs the image processing and decision making. The phone is responsible for wirelessly transmitting instructions to an Arduino, which controls the physical aspects of the vehicle. Secondly, the various hardware components (e.g. sensors, motors) are handled programmatically in an object-oriented way, using a custom-made Arduino library that enables developers without a background in embedded systems to accomplish their tasks easily, without having to care about lower-level implementation details.
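The article does not show the team's library or the message format used between the phone and the Arduino, so the following is only a hypothetical sketch of the two ideas described above: hardware components wrapped in objects that hide pin-level details, and a short text instruction from the phone being dispatched to those objects. The class names (`Actuator`, `Throttle`, `Steering`), the `handleCommand` function, the `S:<speed>` / `A:<angle>` command format and the value ranges are all assumptions made for illustration, not the actual library API or wire protocol. It is written in standard C++ (the language Arduino sketches are based on) so it compiles on a desktop.

```cpp
#include <algorithm>
#include <climits>
#include <cstdlib>
#include <string>

// Hypothetical interface: callers work with meaningful units (percent,
// degrees) and never touch pins or PWM registers directly.
class Actuator {
public:
    virtual ~Actuator() = default;
    virtual void set(int value) = 0;  // implementations clamp to a safe range
    virtual int get() const = 0;
};

// Hypothetical throttle: speed in percent, -100 (full reverse) to 100.
class Throttle : public Actuator {
public:
    void set(int value) override { speed_ = std::clamp(value, -100, 100); }
    int get() const override { return speed_; }
private:
    int speed_ = 0;
};

// Hypothetical steering servo: angle in degrees, assumed range -45 to 45.
class Steering : public Actuator {
public:
    void set(int value) override { angle_ = std::clamp(value, -45, 45); }
    int get() const override { return angle_; }
private:
    int angle_ = 0;
};

// Parses one hypothetical text command received over the wireless link,
// e.g. "S:60" (speed) or "A:-20" (steering angle), and forwards it to the
// matching actuator. Returns false for malformed or unknown commands.
bool handleCommand(const std::string& cmd, Actuator& throttle, Actuator& steering) {
    if (cmd.size() < 3 || cmd[1] != ':') return false;
    const char* start = cmd.c_str() + 2;
    char* end = nullptr;
    long value = std::strtol(start, &end, 10);
    if (end == start || *end != '\0') return false;       // no digits or trailing junk
    if (value < INT_MIN || value > INT_MAX) return false;  // does not fit in an int
    switch (cmd[0]) {
        case 'S': throttle.set(static_cast<int>(value)); return true;
        case 'A': steering.set(static_cast<int>(value)); return true;
        default:  return false;
    }
}
```

For example, `handleCommand("A:-90", throttle, steering)` returns `true` and leaves the steering at -45, because the `Steering` object clamps the request to its assumed safe range. On a real Arduino the `set()` bodies would drive the motor controller and servo; here they only store the clamped value so the dispatch logic can be tried off-device. Because the command handler depends only on the `Actuator` interface, swapping the hardware behind an object does not change the code that reacts to the phone's instructions.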

The use of a common mobile phone instead of specialized devices (e.g. a Linux single-board computer) offers much higher deployability, user-friendliness and scalability. We could not find Android-based autonomous vehicles that could be deployed on the road in the literature; therefore, the team behind this work believes it can constitute the basis for further research on autonomous vehicles controlled by consumer handheld mobile devices.

This article covers the development and the implemented features of the first Android autonomous vehicle. It was created by Team Pegasus over the last couple of months, within the context of the DIT168 course at the University of Gothenburg. The members of Team Pegasus who worked on this project, and whom I would like to thank very much, were (in alphabetical order): Yilmaz Caglar, Aurélien Hontabat, David Jensen, Simeon Ivanov, Ibtissam Karouach, Jiaxin Li, Dimitrios Platis, Petroula Theodoridou.

Related repositories

Driving logic (for OpenDaVinci simulation)

Background story

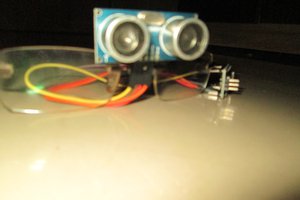

In the DIT168 course, taught by Professor Christian Berger, the students were divided into groups of eight and tasked with creating self-driving vehicles that could follow street lanes, overtake obstacles and perform parking maneuvers. To achieve that, they were supplied with an RC car, a single-board Linux computer (ODROID-U3), a web camera (to provide machine vision capabilities to the system) as well as a plethora of sensors and microcontrollers. Over approximately three months, they had to first implement the various features in a virtual simulation environment and then deploy and integrate them on the actual vehicle. The course administration suggested the OpenDaVinci middleware as both the testing and the deployment platform.

This was an overview of the default course setting, which has worked well over the years the course has been taught. However, “well” was not good enough for us. :-)

The problem

To begin with, we did not like how the previous end products looked. In particular, all of them featured a tower-like structure on which the webcam was mounted. Because of that, a hole had to be carved out of the vehicle's default enclosure. This plastic enclosure merely serves aesthetic purposes, but since it makes the vehicle look like... a vehicle, we considered it important to maintain its integrity.

Furthermore, the OpenDaVinci platform seemed rather excessive for what we were trying to do. Since OpenDaVinci is designed as a distributed, platform-independent solution that even includes a simulation environment, it inevitably comes with a certain degree of complexity, especially in deployment and use. Do not get me wrong, OpenDaVinci seems to be software with a lot of potential; however, we believed we could make do without it. Last but not least, all these years everyone has been doing essentially the same thing: using a Linux single-board computer, connected to a camera, some kind of microcontroller and sensors, to perform the...

Read more » platis.solutions
