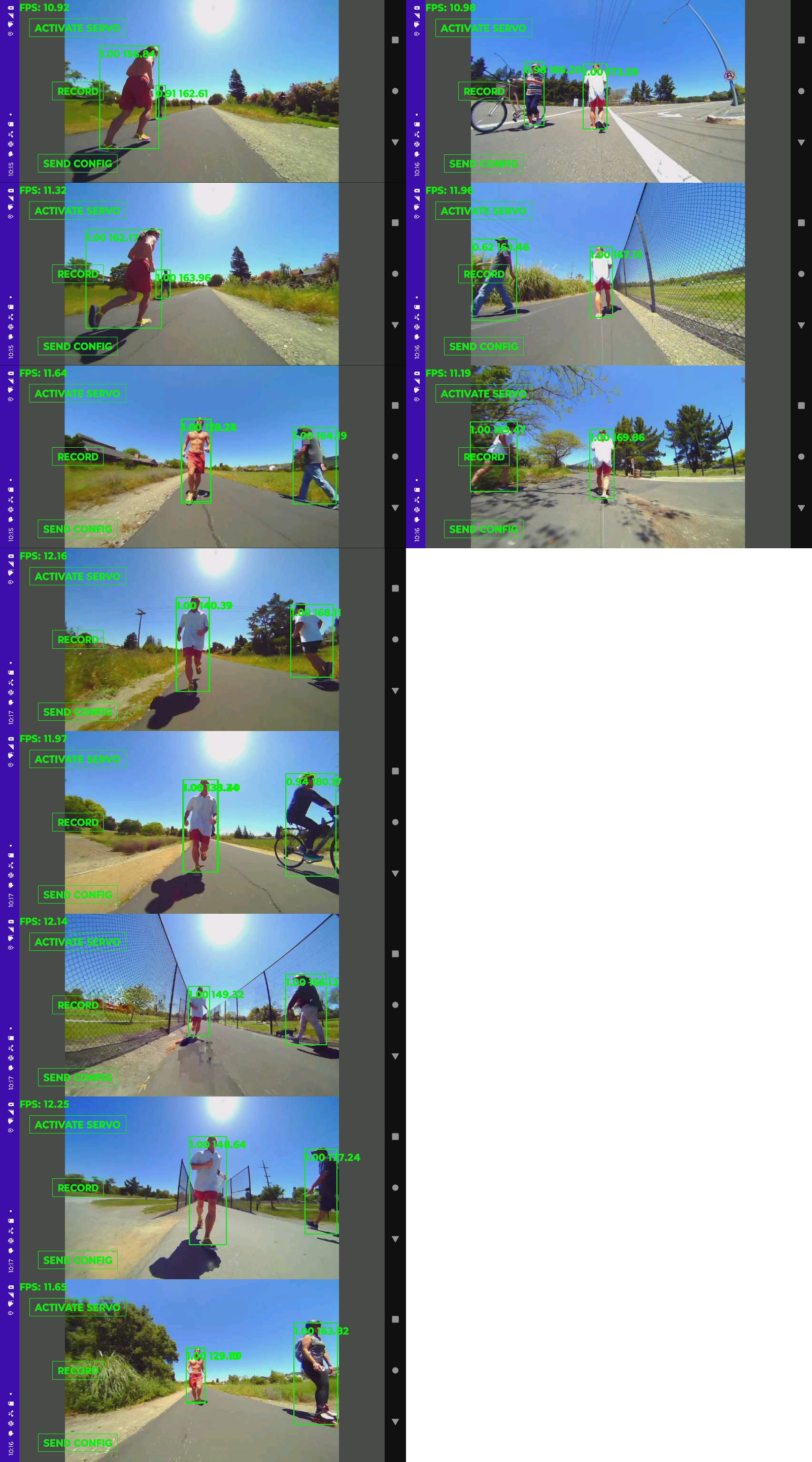

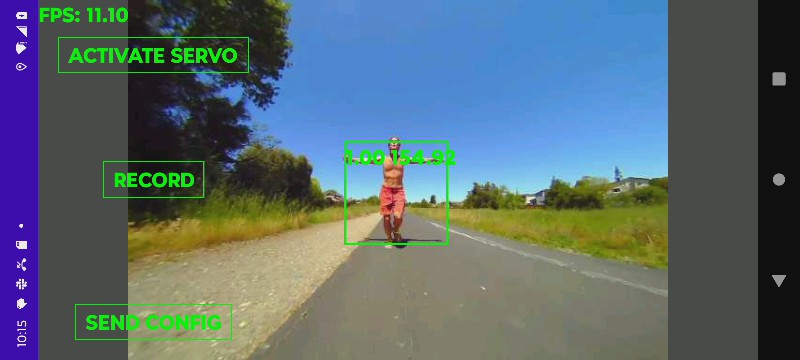

The latest efficientlion-lite1 model worked perfectly once the color channel order was swapped: imwrite seems to expect BGR while efficientlion expects RGB. It's a very robust model which doesn't detect trees & works indoors as well as it does outside. It seems to handle the current lens distortion. It definitely works better with the fisheye cam than the keychain cam, since the fisheye cam captures the legs & gives a sharper image.
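Assuming a frame is a tightly packed buffer of 8-bit, 3-channel pixels with no row padding, converting between BGR and RGB just exchanges the 1st and 3rd byte of every pixel. A minimal pure-Python sketch (`swap_rb` is a hypothetical helper, not part of any library):

```python
def swap_rb(buf: bytes) -> bytes:
    """Swap the 1st and 3rd byte of every 3-byte pixel (BGR <-> RGB).

    Assumes a packed 8-bit, 3-channel buffer with no row padding.
    """
    if len(buf) % 3:
        raise ValueError("buffer length must be a multiple of 3")
    out = bytearray(buf)
    out[0::3] = buf[2::3]  # new 1st channel <- old 3rd channel
    out[2::3] = buf[0::3]  # new 3rd channel <- old 1st channel
    return bytes(out)
```

The swap is its own inverse, so the same helper converts in either direction. With OpenCV & numpy, `cv2.cvtColor(img, cv2.COLOR_BGR2RGB)` does the same thing on an image array.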

The pendulum swung back to color histogram feature extraction for recognition. The latest idea was to update the target color whenever there was just 1 hit, based on that 1 hit. It would use a lowpass filter so the hit could be the wrong object for a while without corrupting the target color. It might also average many frames to try to wash out the background.
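A minimal sketch of the 1-hit lowpass update, assuming each hit box is available as a list of RGB pixels and using an exponential moving average as the lowpass filter. `update_target`, `average_color`, and the `alpha` value are hypothetical, not the project's actual code:

```python
def average_color(pixels):
    """Mean (r, g, b) of a non-empty list of RGB pixel tuples."""
    n = len(pixels)
    return tuple(sum(p[c] for p in pixels) / n for c in range(3))


def update_target(target, hits, alpha=0.1):
    """Lowpass the target color toward the single hit's average color.

    target: current (r, g, b) target color, or None before the first update
    hits:   hit boxes for this frame, each a list of RGB pixels
    alpha:  hypothetical filter coefficient; a small alpha means a wrong
            single hit only nudges the target slightly per frame
    """
    if len(hits) != 1:
        return target  # 0 or several hits: ambiguous, keep the old target
    color = average_color(hits[0])
    if target is None:
        return color  # first sighting initializes the target
    return tuple((1 - alpha) * t + alpha * c for t, c in zip(target, color))
```

Frames with 0 or several hits leave the target alone, so only unambiguous frames feed the filter.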

The chi-squared method is used to reduce the difference between 2 histograms to a single score. Semantic segmentation might give better hit boxes than efficientdet. It goes at 7fps on the Raspberry Pi 4. The highest end models that could be used for auto labeling might be:

https://github.com/NVIDIA/DeepLearningExamples/tree/master/PyTorch/Segmentation/MaskRCNN

https://github.com/NVlabs/SegFormer
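The chi-squared histogram score can be sketched in plain Python. Which variant is meant here is an assumption; this is a common symmetric form, while OpenCV's `cv2.compareHist` offers `HISTCMP_CHISQR` (which divides by the first histogram only) and `HISTCMP_CHISQR_ALT`:

```python
def chi_squared(h1, h2, eps=1e-10):
    """Symmetric chi-squared distance between two equal-length histograms.

    0 means identical; larger means more different. eps avoids dividing
    by zero for bins that are empty in both histograms.
    """
    if len(h1) != len(h2):
        raise ValueError("histograms must have the same number of bins")
    return sum((a - b) ** 2 / (a + b + eps) for a, b in zip(h1, h2))
```

Dividing by the bin total keeps a few heavily populated bins from dominating the score.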

---------------------------------------------------------------------------------------------------------------------------------------------------------------------------

Meanwhile, in the instance segmentation department, the latest YOLO model is YOLOv9. YOLOv5 has been good enough as the lion kingdom's auto labeler for over 2 years. YOLOv9 is probably what Tesla uses.

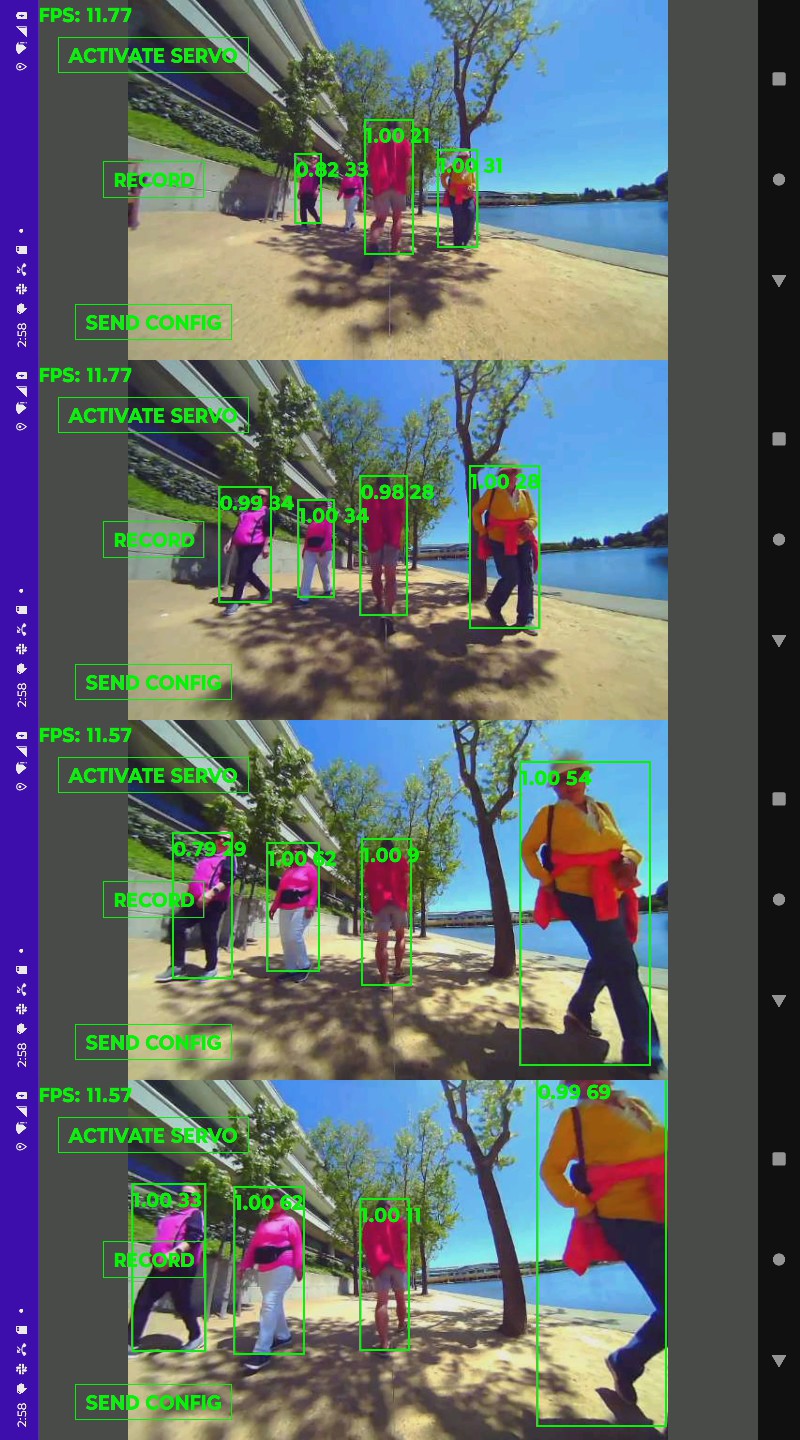

Lions feel the most robust method is going to be manually picking a color on the phone & choosing the hit box whose average color is closest to that color. This would only be used when there's more than 1 hit box. It wouldn't automatically compute a new color for the user.
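A sketch of the pick-the-closest-box rule, assuming each hit box is a list of RGB pixels, the phone-picked color is an (r, g, b) tuple, and Euclidean RGB distance is the color metric. The metric and the `pick_box` helper are assumptions:

```python
import math


def average_color(pixels):
    """Mean (r, g, b) of a non-empty list of RGB pixel tuples."""
    n = len(pixels)
    return tuple(sum(p[c] for p in pixels) / n for c in range(3))


def pick_box(boxes, picked_color):
    """Index of the box whose average color is closest to picked_color.

    boxes:        hit boxes, each a non-empty list of (r, g, b) pixels
    picked_color: (r, g, b) color chosen manually on the phone
    """
    if not boxes:
        return None
    if len(boxes) == 1:
        return 0  # only 1 hit: no color matching needed
    distances = [math.dist(average_color(b), picked_color) for b in boxes]
    return min(range(len(boxes)), key=distances.__getitem__)
```

The picked color never changes on its own, so the tracker can't drift, but the user has to pick again if the lighting changes a lot.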

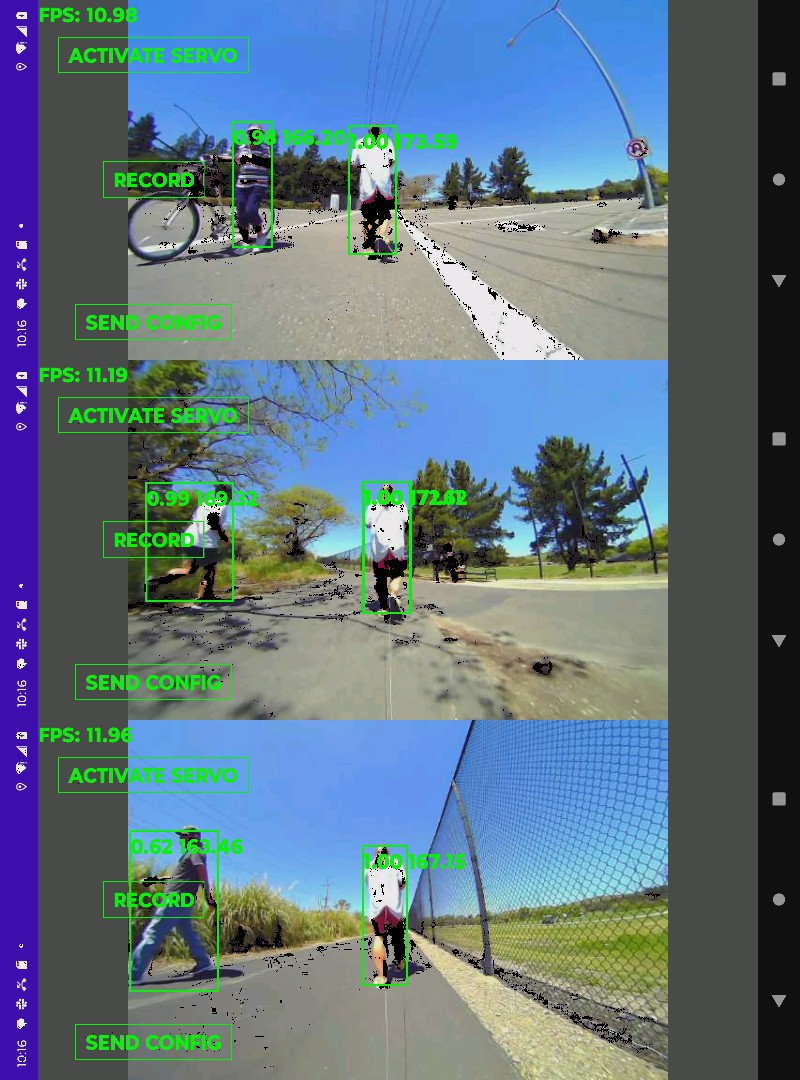

Average color difference would only eliminate 2/3 of the hits in this case.

Average color distance varies greatly when we're dealing with boxes.

Straight hue matching doesn't do well either.
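Hue is an angle, so a hue match has to wrap around at red. A minimal sketch using Python's `colorsys` (the helper names are hypothetical) also shows one plausible weakness: greys have no meaningful hue, and `colorsys.rgb_to_hsv` reports 0.0 for them, the same as pure red:

```python
import colorsys


def hue(rgb):
    """Hue of an 8-bit (r, g, b) color as a fraction of a full turn, in [0, 1)."""
    r, g, b = (c / 255.0 for c in rgb)
    return colorsys.rgb_to_hsv(r, g, b)[0]


def hue_distance(rgb1, rgb2):
    """Circular hue distance in [0, 0.5]; 0.5 means opposite hues."""
    d = abs(hue(rgb1) - hue(rgb2))
    return min(d, 1.0 - d)  # wrap around at red
```

Here `hue_distance((255, 0, 0), (255, 0, 10))` is about 0.0065 thanks to the wraparound, while `hue_distance((128, 128, 128), (255, 0, 0))` is 0.0, so a grey background would match a red shirt perfectly.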

Since most of the video is of a solo lion with short blips of other animals, recomputing a new target color in every frame with 1 hit might work, but it needs to cancel overlapping boxes.
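One possible reading of canceling overlapping boxes, sketched under assumptions: boxes are (x1, y1, x2, y2) tuples, and any box overlapping another box by more than an IoU threshold is dropped before counting hits, so a box mixing 2 animals' colors never updates the target color. `iou`, `drop_overlapping`, and the threshold are hypothetical:

```python
def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0, ix2 - ix1) * max(0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def drop_overlapping(boxes, thresh=0.0):
    """Keep only boxes whose IoU with every other box is <= thresh."""
    keep = []
    for i, a in enumerate(boxes):
        if all(iou(a, b) <= thresh for j, b in enumerate(boxes) if j != i):
            keep.append(a)
    return keep
```

The target color would then only be recomputed when exactly 1 box survives the filter.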

This algorithm fixed all the test cases which would have caused the camera to track the wrong animal. It definitely fixed all the cases where the 2022 algorithm would have failed, since that tracked the largest box.

The only failures would not have caused the camera to track the wrong animal. When it failed, the other animal was always converging with the lion & there was a very small box. Small boxes create a very high variation of colors. It should use the defished resolution for color calculations. Another idea is to recompute the target color when multiple boxes are present.

The big failure modes: if the lion is in a very small box & another animal is in a big box, the color variation could be enough for it to track the wrong animal. If the tracker doesn't detect the lion, or if the lion is momentarily behind another animal, it would replace its target color with the other animal's color. A moving average could defeat dropped lion boxes, but this adds a failure mode where the target color doesn't keep up with the lion. In a crowd, the target color is going to drift & it's going to lose the subject.

This might be the algorithm DJI uses, but with semantic segmentation. No one ever shows DJI in a crowd.

Another test in more demanding environments with more wild animals still overwhelmingly worked. A few near misses & more difficult scenes were shown.

1 trouble spot was a wild animal with similarly colored clothing. It had a lot of false detections. A forest environment with constantly changing shadows made the target color very erratic, but also created differentiating lighting for different subjects. It was computing a new target color from the best match in every frame but averaging 15 frames of target color, so the failures might have been recoverable if the lion hadn't gone out of frame.
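A sketch of the 15-frame averaging, assuming the best match's average color is pushed in once per frame & the target color is the plain mean of the last 15 entries. `TargetColor` is a hypothetical helper:

```python
from collections import deque


class TargetColor:
    """Running mean of the last `window` per-frame target colors."""

    def __init__(self, window=15):
        self.history = deque(maxlen=window)  # oldest colors fall off automatically

    def update(self, best_match_color):
        """Add this frame's best-match (r, g, b) color and return the new mean."""
        self.history.append(best_match_color)
        return self.value()

    def value(self):
        """Current target color, or None before the first update."""
        n = len(self.history)
        if n == 0:
            return None
        return tuple(sum(c[i] for c in self.history) / n for i in range(3))
```

Each frame, the best match would be picked against `value()` & then passed to `update()`, so a single wrong match only carries 1/15 of the weight in the average.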

A red shirt is your biggest ally in this algorithm, until

It somehow missed just 1 frame in this one.

Typical results have large margins. In the heat of battle, the results are never typical though. It's surprising how this algorithm makes whatever it sees 1st become what it follows most of the time, like an animal which identifies its 1st companion as its parent.

Semantic segmentation might improve the results, but it would be a lot of work. It's possible that the extra background information in the hit boxes helps it differentiate objects. A grey shirt might show how important the background information is. Another option is matching the nearest histogram instead of the nearest average color.
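A sketch of nearest-histogram matching, assuming hit boxes are lists of 8-bit RGB pixels, a coarse joint RGB histogram with 4 bins per channel, & the symmetric chi-squared distance. The bin count & helper names are hypothetical:

```python
def color_histogram(pixels, bins=4):
    """Normalized joint RGB histogram with bins**3 cells (8-bit channels)."""
    hist = [0.0] * (bins ** 3)
    for r, g, b in pixels:
        # c * bins // 256 maps 0..255 onto 0..bins-1
        idx = (r * bins // 256) * bins * bins + (g * bins // 256) * bins + (b * bins // 256)
        hist[idx] += 1.0
    n = len(pixels)
    return [h / n for h in hist] if n else hist


def chi_squared(h1, h2, eps=1e-10):
    """Symmetric chi-squared distance between two equal-length histograms."""
    return sum((a - b) ** 2 / (a + b + eps) for a, b in zip(h1, h2))


def nearest_histogram(boxes, target_hist, bins=4):
    """Index of the box whose histogram is closest to target_hist, or None."""
    if not boxes:
        return None
    scores = [chi_squared(color_histogram(box, bins), target_hist) for box in boxes]
    return min(range(len(scores)), key=scores.__getitem__)
```

Unlike an average color, a histogram keeps a box that's half red shirt & half background distinct from a box that's uniformly the average of those 2 colors.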

lion mclionhead
