The aim is to produce a low-cost, easy-to-use system for monitoring limb movements.

I intend that the ideas, hardware, software and associated documentation developed as part of this project be available for anyone to replicate and use in the spirit of the MIT software license.

By that I mean: if you want to build, implement, experiment with, use, market, make money from, donate expertise in, sell expertise in or modify a system based on this work, you should be free to do so, provided you appropriately acknowledge its source and respect the above intention.

This is the second incarnation of the project. I made an initial version using MPU9150 sensors wired to a Raspberry Pi based controller that sent its output to a web user interface. The system worked but was clumsy to set up and restricted in application, primarily because all the sensors were wired and all the raw sensor data was processed by a single microprocessor, which potentially limited its expansion to large assemblies of sensors.

Video examples of the earlier system in operation:

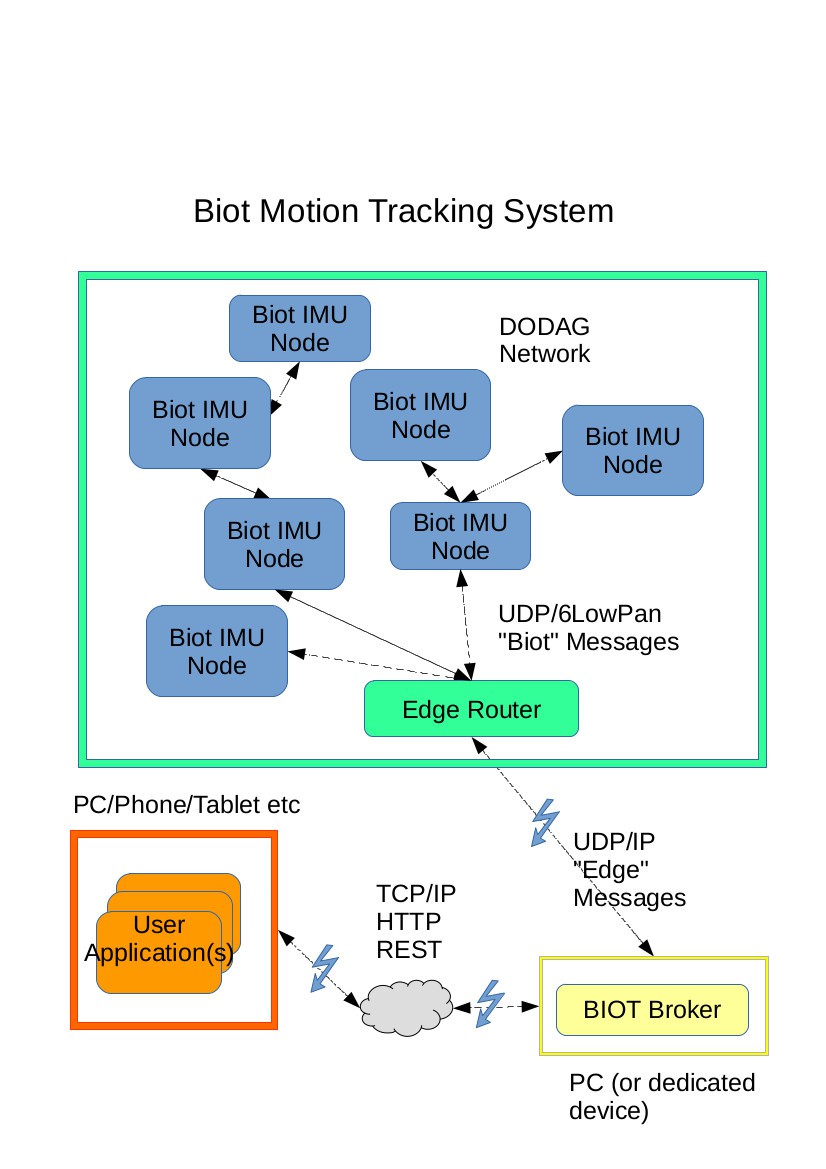

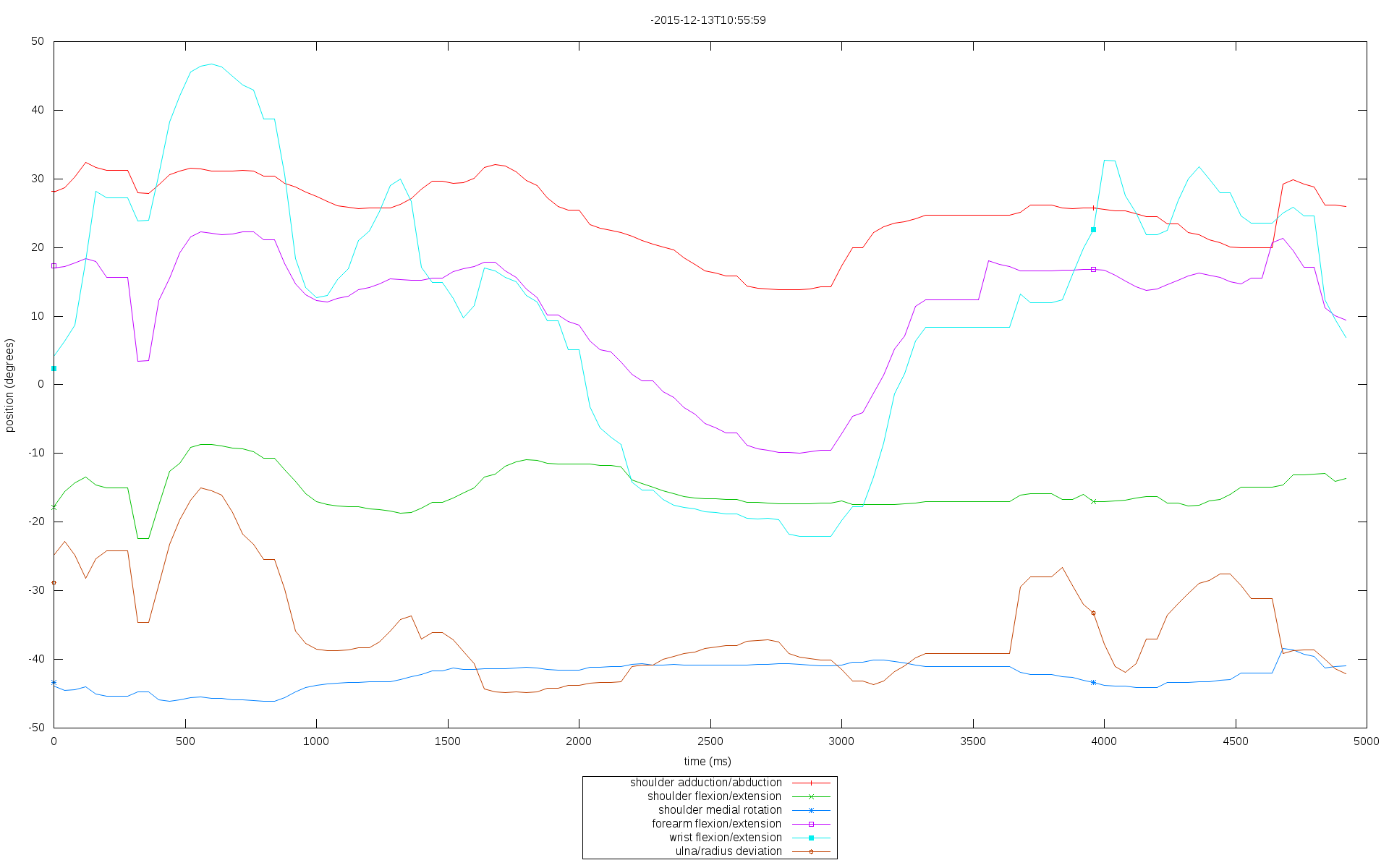

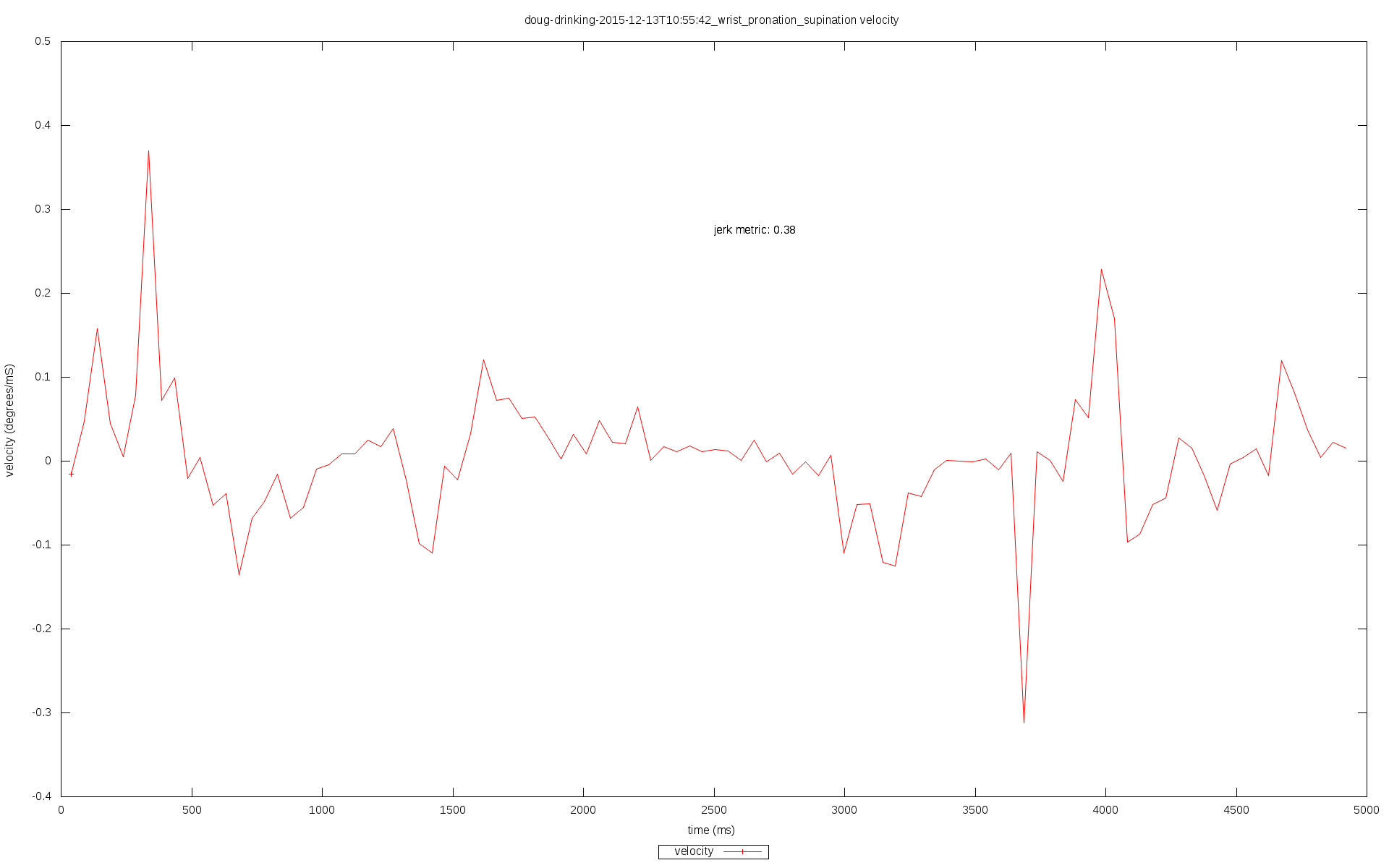

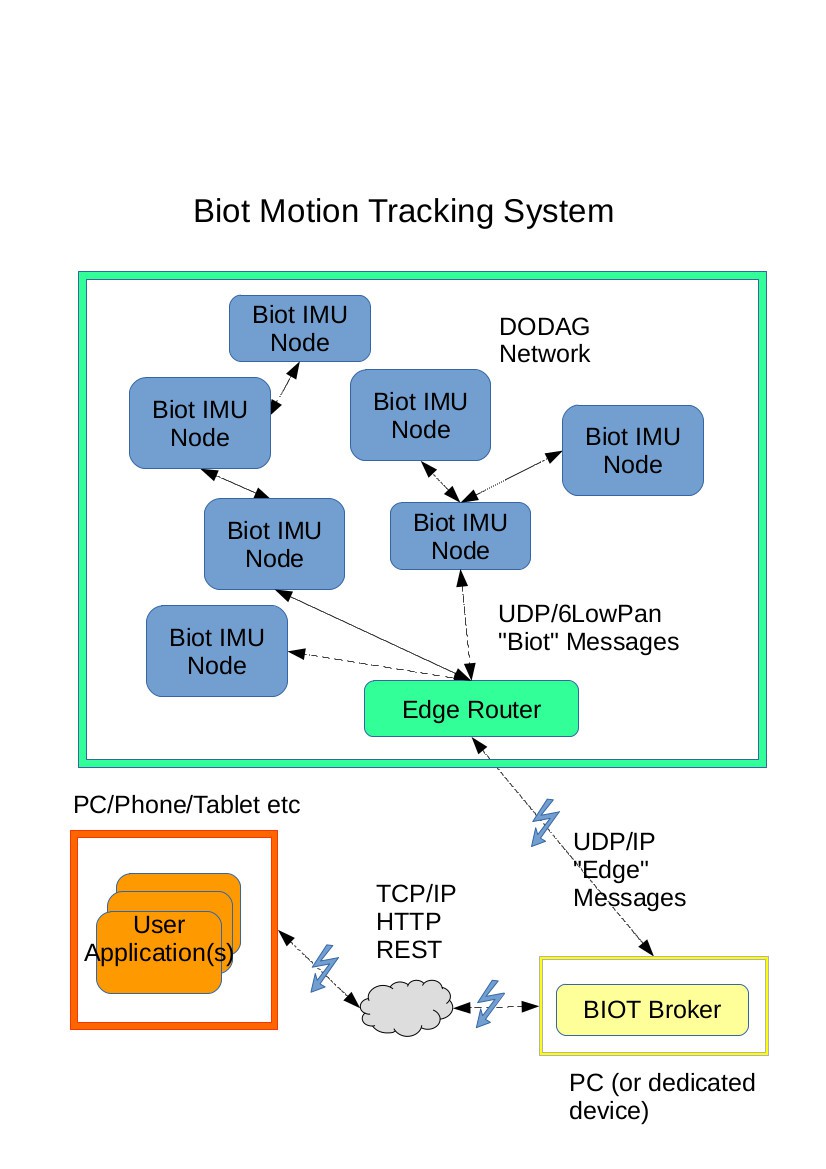

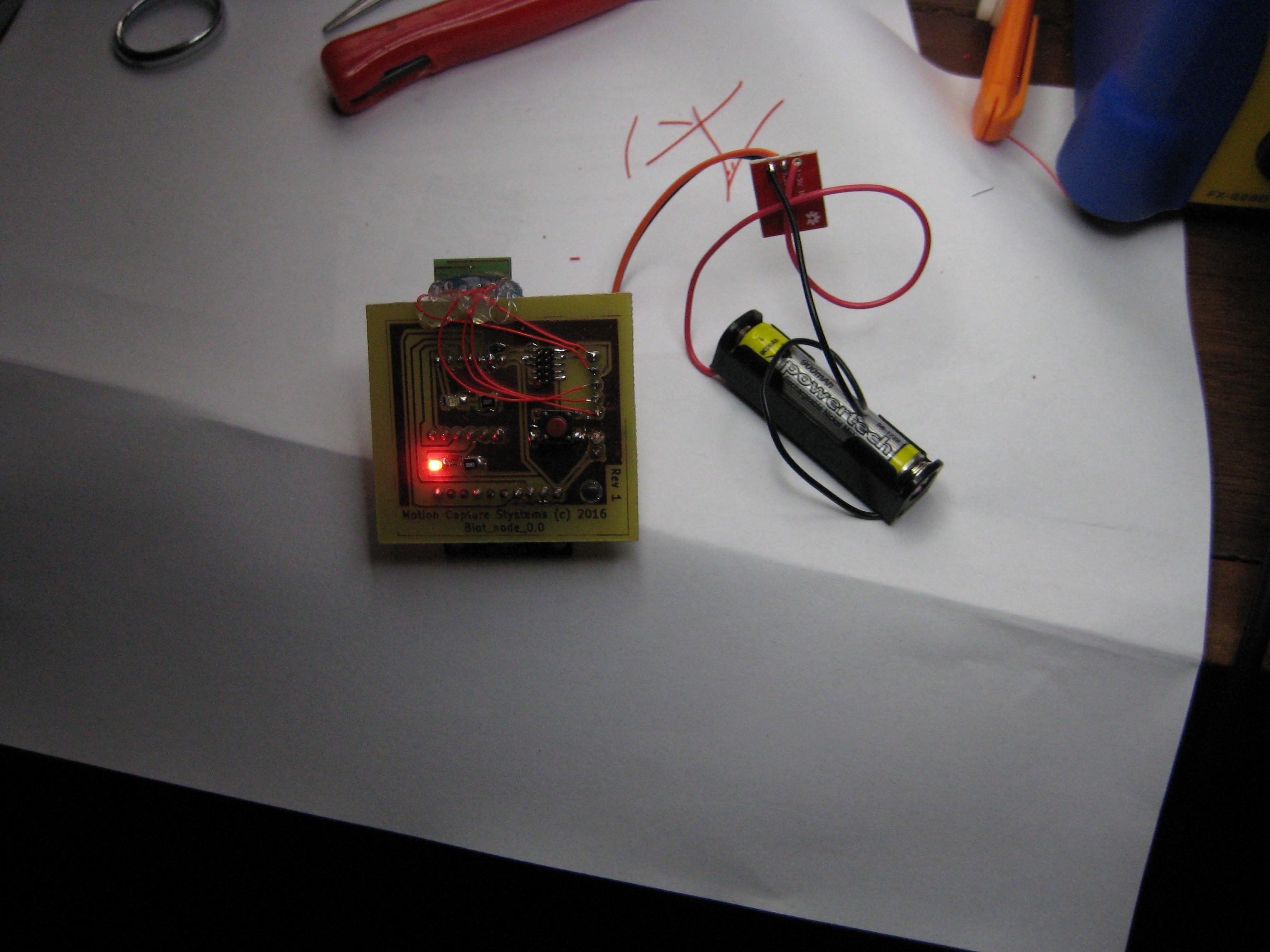

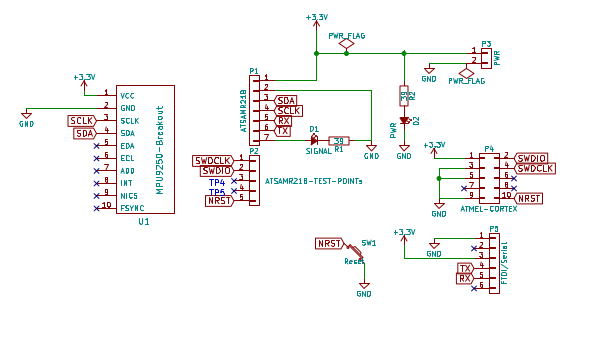

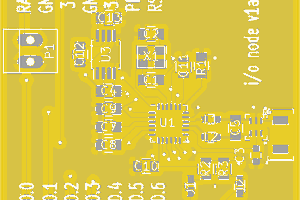

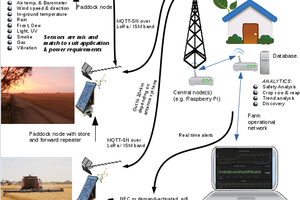

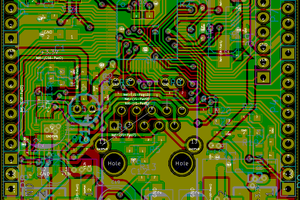

The current system uses MPU9250 IMU sensors, each paired with an ATSAMR21 microcontroller to form a sensor node. Each node processes its own orientation data. The nodes communicate wirelessly (6LoWPAN) with an edge router. The edge router (currently also an ATSAMR21 based board) interfaces between the 6LoWPAN network of sensors and a standard TCP/IP network.
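As an illustration of what a node might send to the edge router, here is a minimal sketch of a compact orientation message. The layout (node id, timestamp, unit quaternion) and the names `ORIENTATION_FORMAT`, `pack_orientation` and `unpack_orientation` are my own assumptions for the example, not the format the Biotz firmware actually uses. A small fixed-size payload is attractive here because 6LoWPAN frames are short.

```python
import struct

# Hypothetical wire format (an assumption, not the project's actual protocol):
# node id (uint16), timestamp in ms (uint32), quaternion w, x, y, z (4 x float32),
# all in network (big-endian) byte order with no padding.
ORIENTATION_FORMAT = "!HI4f"
ORIENTATION_SIZE = struct.calcsize(ORIENTATION_FORMAT)  # 2 + 4 + 16 = 22 bytes


def pack_orientation(node_id, timestamp_ms, quaternion):
    """Serialize one orientation sample into a fixed-size payload."""
    w, x, y, z = quaternion
    return struct.pack(ORIENTATION_FORMAT, node_id, timestamp_ms, w, x, y, z)


def unpack_orientation(payload):
    """Parse a payload produced by pack_orientation."""
    node_id, timestamp_ms, w, x, y, z = struct.unpack(ORIENTATION_FORMAT, payload)
    return node_id, timestamp_ms, (w, x, y, z)
```

On the receiving side, something like `unpack_orientation(payload)` would turn each datagram back into a node id, a timestamp and a quaternion before it is handed on to the TCP/IP side.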

The system has a simple REST web service API hosted on a PC that communicates with the edge router and provides the ability to read data from and send control data to the IMU nodes.
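To make the idea of reading data from and sending control data to the nodes more concrete, here is a rough sketch of such a service and a matching client using only the Python standard library. The URL paths (`/nodes/<id>/orientation`, `/nodes/<id>/control`), the JSON fields and every name in the code are hypothetical and chosen for illustration; they are not the actual Biotz API, and the in-memory dictionaries stand in for the link to the edge router.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

# In-memory stand-ins for data that would really come from / go to the edge router.
latest_orientation = {}  # node id -> quaternion [w, x, y, z]
pending_commands = {}    # node id -> last control message received


class BiotzHandler(BaseHTTPRequestHandler):
    def _send_json(self, status, body):
        data = json.dumps(body).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)

    def _node_id(self, suffix):
        # Accept only paths of the form /nodes/<id>/<suffix>.
        parts = self.path.strip("/").split("/")
        if len(parts) == 3 and parts[0] == "nodes" and parts[2] == suffix:
            return parts[1]
        return None

    def do_GET(self):
        node_id = self._node_id("orientation")
        if node_id in latest_orientation:
            self._send_json(200, {"node": node_id,
                                  "quaternion": latest_orientation[node_id]})
        else:
            self._send_json(404, {"error": "unknown node or path"})

    def do_PUT(self):
        node_id = self._node_id("control")
        if node_id is None:
            self._send_json(404, {"error": "unknown path"})
            return
        length = int(self.headers.get("Content-Length", 0))
        pending_commands[node_id] = json.loads(self.rfile.read(length))
        self._send_json(200, {"node": node_id, "queued": True})

    def log_message(self, format, *args):
        pass  # keep the example quiet


def start_server(port=0):
    """Start the service on a background thread; port 0 picks a free port."""
    server = ThreadingHTTPServer(("127.0.0.1", port), BiotzHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def read_orientation(base_url, node_id):
    """Client side: fetch the latest orientation of one node."""
    with urllib.request.urlopen(f"{base_url}/nodes/{node_id}/orientation") as resp:
        return json.load(resp)


def send_control(base_url, node_id, command):
    """Client side: send a control message (a JSON object) to one node."""
    req = urllib.request.Request(
        f"{base_url}/nodes/{node_id}/control",
        data=json.dumps(command).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="PUT",
    )
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)
```

A browser front end would make the same two kinds of request: a GET to poll orientations and a PUT to change a node's settings.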

Finally, a browser-based user interface uses the web service API to record and display the movements and to let the user control the system.

I am calling the nodes "Biotz" - little biological sensing internet of things thingys :)

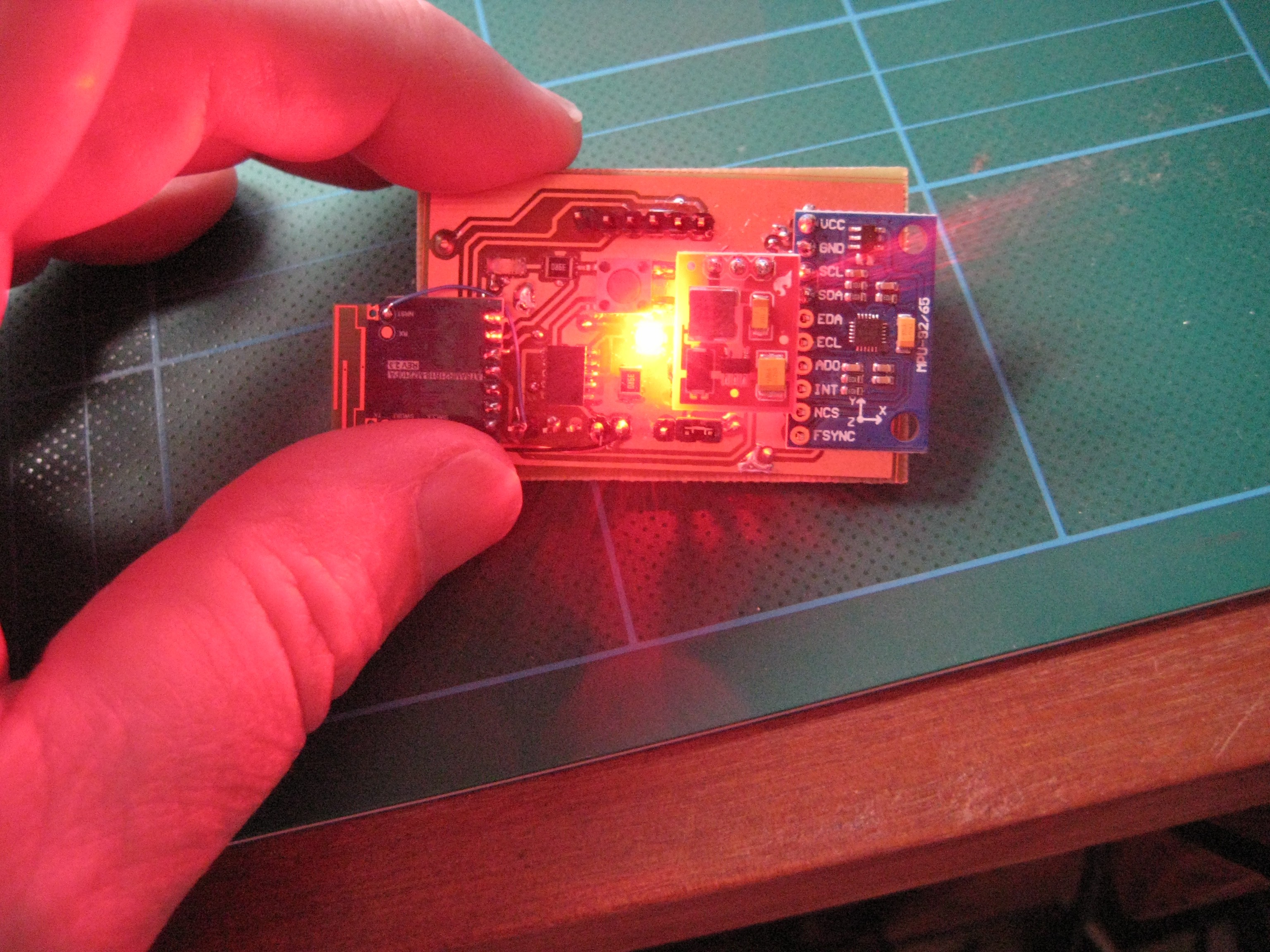

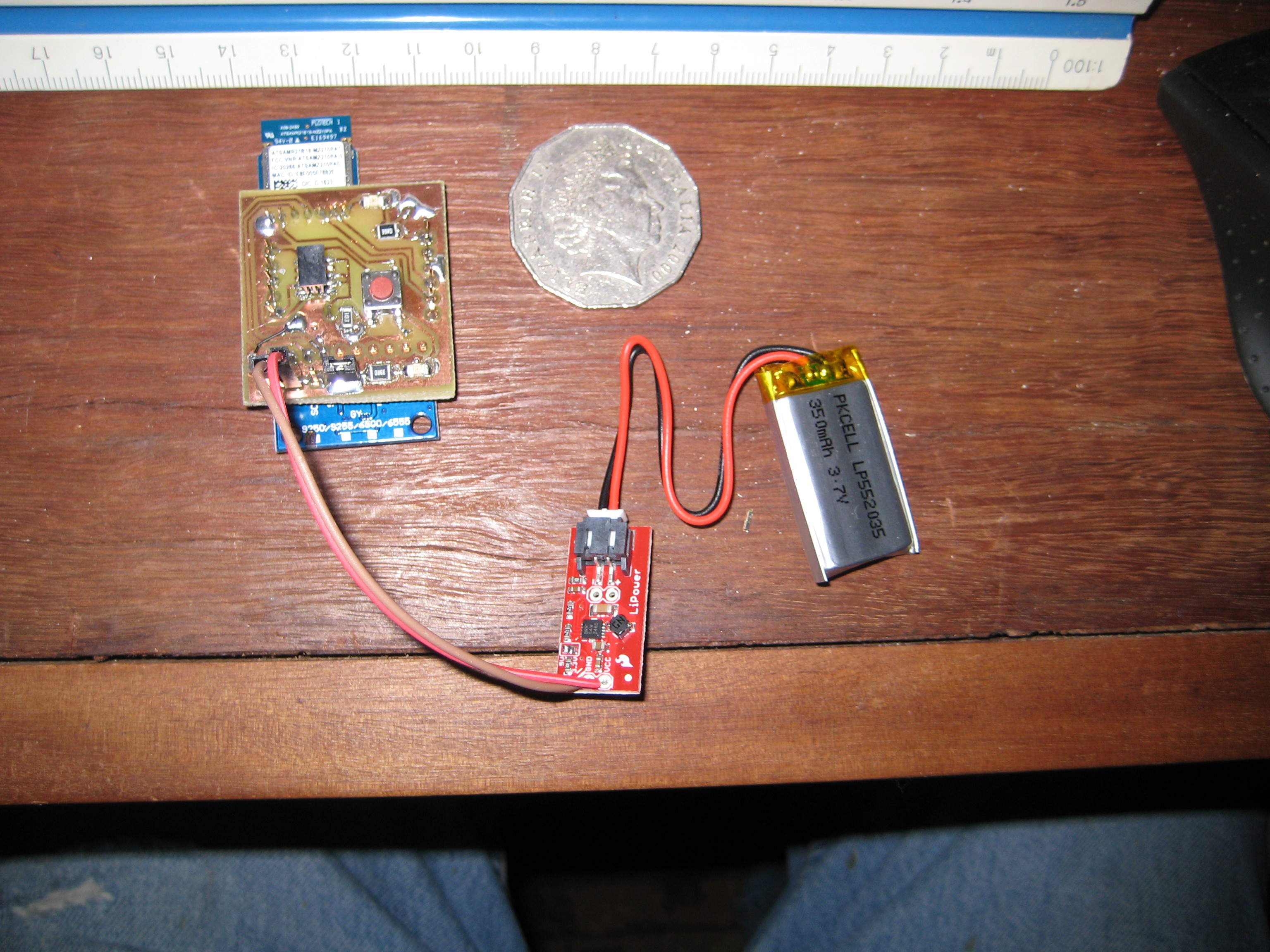

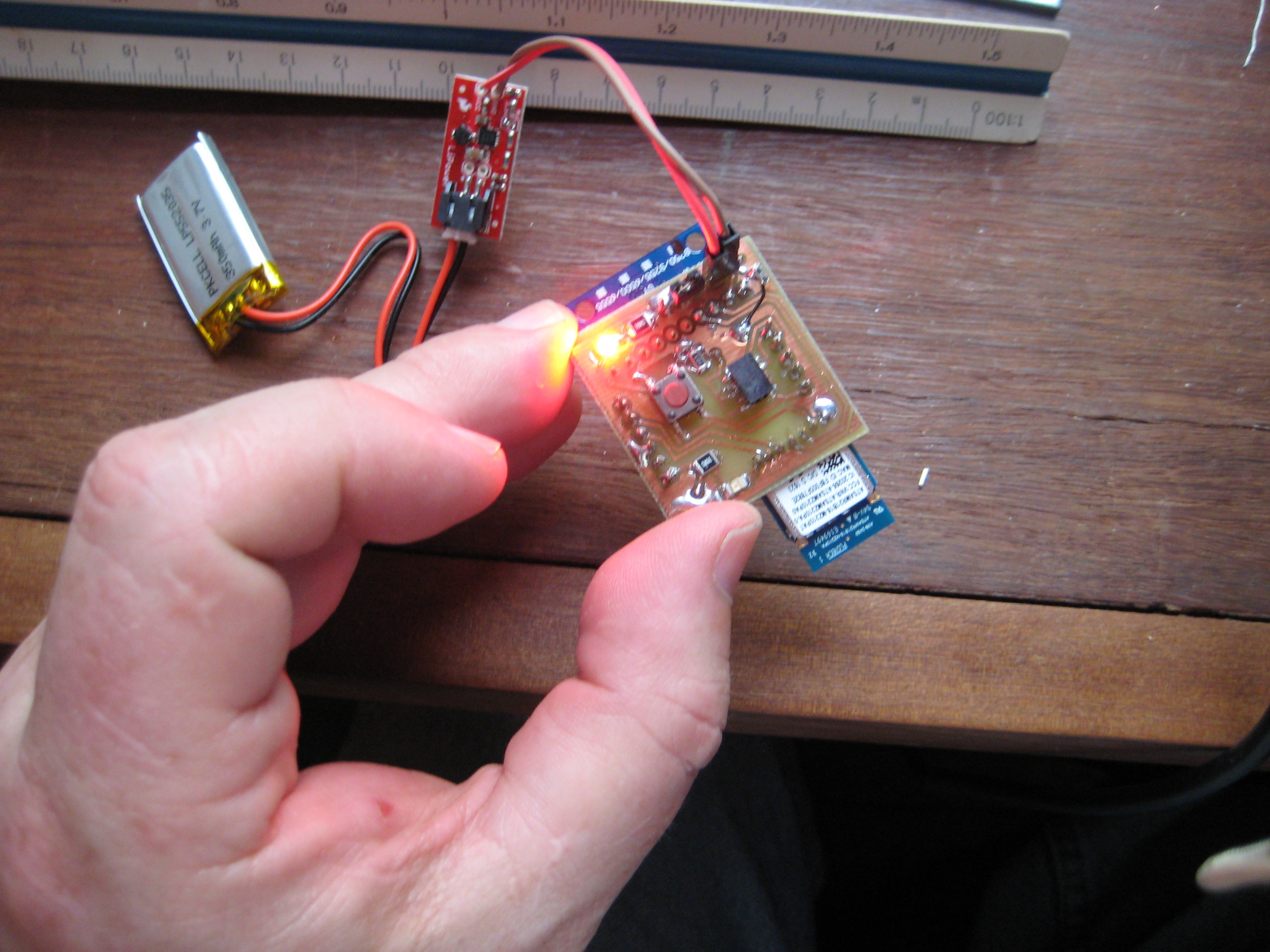

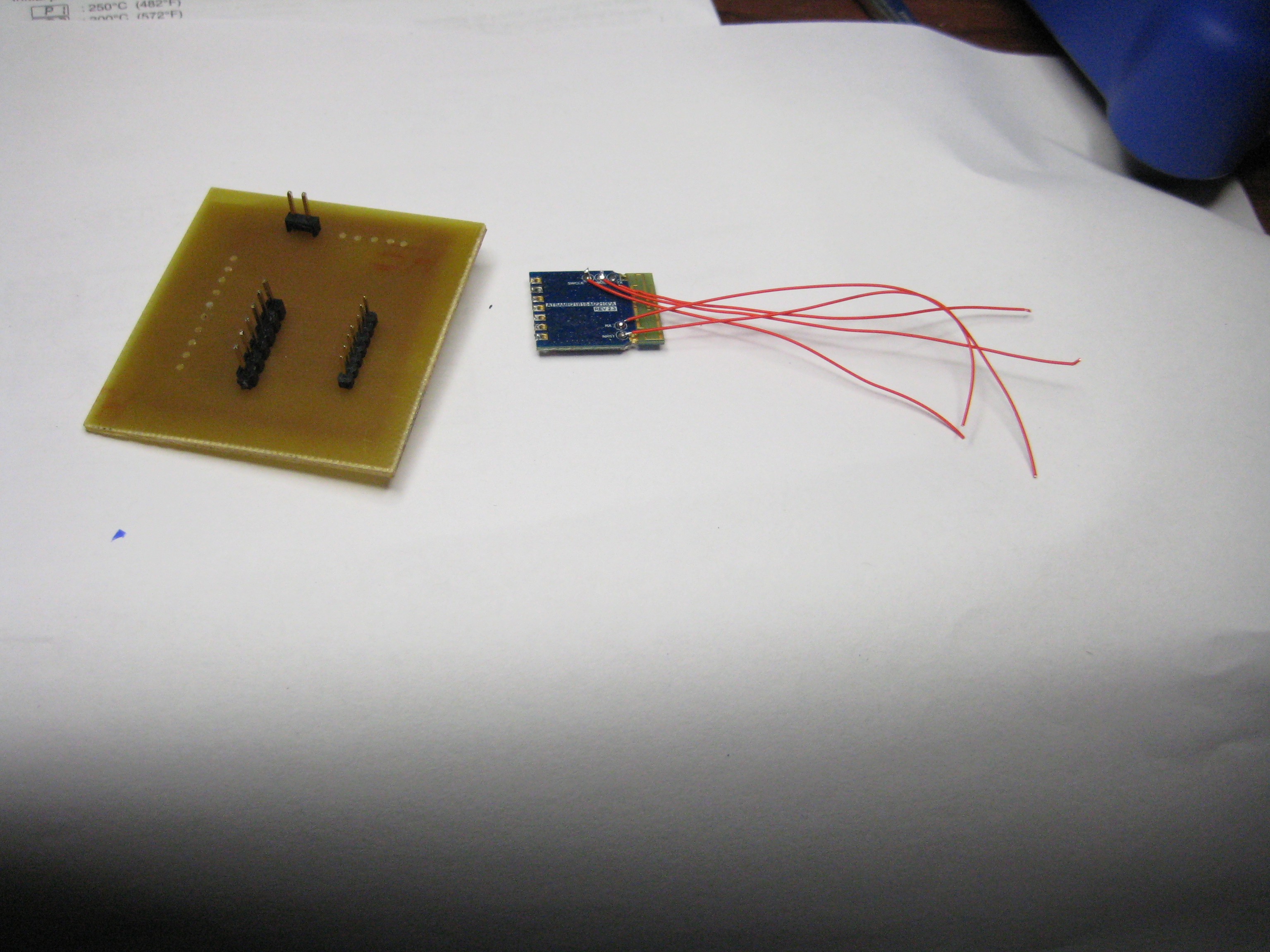

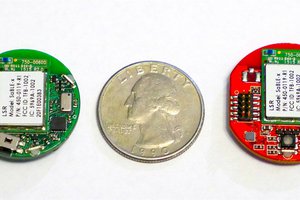

One of the current "proof of concept" wireless IMU sensor nodes - I need to reduce these in size:

The current system does not (yet) have the functionality of the earlier "wired" version, but it should soon, and it should also be much more robust and easier to use. It should also scale better to situations requiring many sensors.

The code, documentation and files describing my hardware design should be available from this project page or my GitHub repository (if you think anything is missing please ask).

NB: I am strictly an amateur when it comes to hardware, so I am happy for people with more ability in that area to offer criticism or suggestions.

Jonathan Kelly

Justin

pastcompute

Trey German

Thank you and sure, happy to talk. I am currently working on turning the sensors into wireless units, each consisting of an IMU sensor and a combined wireless/processor chip (currently experimenting with Atmel's ATSAMR21G, e.g. see http://www.atmel.com/Images/Atmel-42486-ATSAMR21B18-MZ210PA_Datasheet.pdf). The idea is that the sensors will be standalone units a bit bigger than a large coin.

This will remove the need both for wires from the sensors to the body-worn control unit and for the control unit itself. Each sensor now has the computational power to do its own motion processing of the IMU output and to send its derived orientation directly by wireless to whatever interfaces or applications are monitoring the combined motions.
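As a small example of what an application monitoring the combined motions could do with per-node orientations, the sketch below estimates the angle between two limb segments from the orientations of two nodes. It assumes each node reports a unit quaternion in (w, x, y, z) order relative to a shared reference frame; that representation and the function names are my assumptions for illustration, not something the project has fixed.

```python
import math


def quat_conjugate(q):
    """Conjugate of a quaternion; for a unit quaternion this is its inverse."""
    w, x, y, z = q
    return (w, -x, -y, -z)


def quat_multiply(a, b):
    """Hamilton product a * b of two quaternions in (w, x, y, z) order."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (
        aw * bw - ax * bx - ay * by - az * bz,
        aw * bx + ax * bw + ay * bz - az * by,
        aw * by - ax * bz + ay * bw + az * bx,
        aw * bz + ax * by - ay * bx + az * bw,
    )


def joint_angle_deg(q_upper, q_lower):
    """Angle of the rotation that takes the upper segment's frame to the lower one."""
    rel = quat_multiply(quat_conjugate(q_upper), q_lower)
    # abs() treats q and -q as the same rotation; min() guards against rounding.
    w = min(1.0, abs(rel[0]))
    return math.degrees(2.0 * math.acos(w))
```

For instance, with one node on the upper arm and one on the forearm, `joint_angle_deg(q_upper_arm, q_forearm)` would give a single total rotation angle between the two segments. Splitting that into anatomical components such as flexion and rotation would need a calibration step that this sketch leaves out.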