Inspiration

Concussions suck. We're both high school students, and we've had many friends struggle through the aftermath of a concussion received through a school sport or an unfortunate accident. In a sense, though, those friends were lucky: they figured out that they had concussions and were able to get them treated. Almost half of all athletes don't report feeling symptoms after receiving a concussive blow, and the CDC estimates that 1.6 to 3.8 million concussions occur in the United States every year.

To address the major problem of undiagnosed and unreported concussions in youth sports, adult sports, and the mayhem of everyday life, we realized that a better system was needed: one that is close at hand and accessible, affordable for every team, and easy to use.

What it does

HeadsUp uses a common diagnostic methodology: tracking a patient's eye movements in response to stimuli. What's the difference between HeadsUp and the cheapest hospital equipment? Cost. Commercial hospital equipment costs at least $5,000 and can run up to $25,000, which makes HeadsUp hundreds of times cheaper.
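
The writeup doesn't say exactly how HeadsUp scores eye movements, so the following is only a rough illustration of what "tracking eye movements in response to stimuli" can look like. It assumes a smooth-pursuit style test: a target swings side to side, the cameras produce a gaze trace, and the two traces are compared. The names `sinusoidal_target` and `pursuit_metrics`, the 60 Hz sample rate, and the choice of RMS error and gain as summary numbers are all hypothetical, not the project's method and not a clinical criterion.

```python
import math
from dataclasses import dataclass


@dataclass
class PursuitMetrics:
    rms_error: float  # root-mean-square gaze-minus-target distance, same units as the inputs
    gain: float       # least-squares ratio of gaze excursion to target excursion (1.0 = perfect tracking)


def sinusoidal_target(amplitude: float, freq_hz: float, rate_hz: float, n: int) -> list[float]:
    """Hypothetical stimulus: horizontal position of a target swinging side to side, sampled at rate_hz."""
    return [amplitude * math.sin(2 * math.pi * freq_hz * k / rate_hz) for k in range(n)]


def pursuit_metrics(target: list[float], gaze: list[float]) -> PursuitMetrics:
    """Summarize how closely a gaze trace followed the stimulus it was meant to track."""
    if not target or len(target) != len(gaze):
        raise ValueError("traces must be non-empty and the same length")
    n = len(target)
    rms = math.sqrt(sum((g - t) ** 2 for t, g in zip(target, gaze)) / n)
    # Gain is the slope of a least-squares line through the origin: gaze ~ gain * target.
    denom = sum(t * t for t in target)
    if denom == 0:
        raise ValueError("target trace has no movement")
    gain = sum(t * g for t, g in zip(target, gaze)) / denom
    return PursuitMetrics(rms_error=rms, gain=gain)


if __name__ == "__main__":
    # Hypothetical trial: 10-degree swings at 0.5 Hz, sampled at 60 Hz for 2 seconds.
    target = sinusoidal_target(amplitude=10.0, freq_hz=0.5, rate_hz=60.0, n=120)
    # Simulated gaze that only reaches 80% of each swing.
    gaze = [0.8 * t for t in target]
    m = pursuit_metrics(target, gaze)
    print(f"gain={m.gain:.2f} rms_error={m.rms_error:.3f}")  # gain=0.80 rms_error=1.414
```

Gain and RMS error are used here simply because they are easy to compute from two equal-length traces; a real device would also need calibration and a validated way to interpret these numbers.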

Mihir Garimella

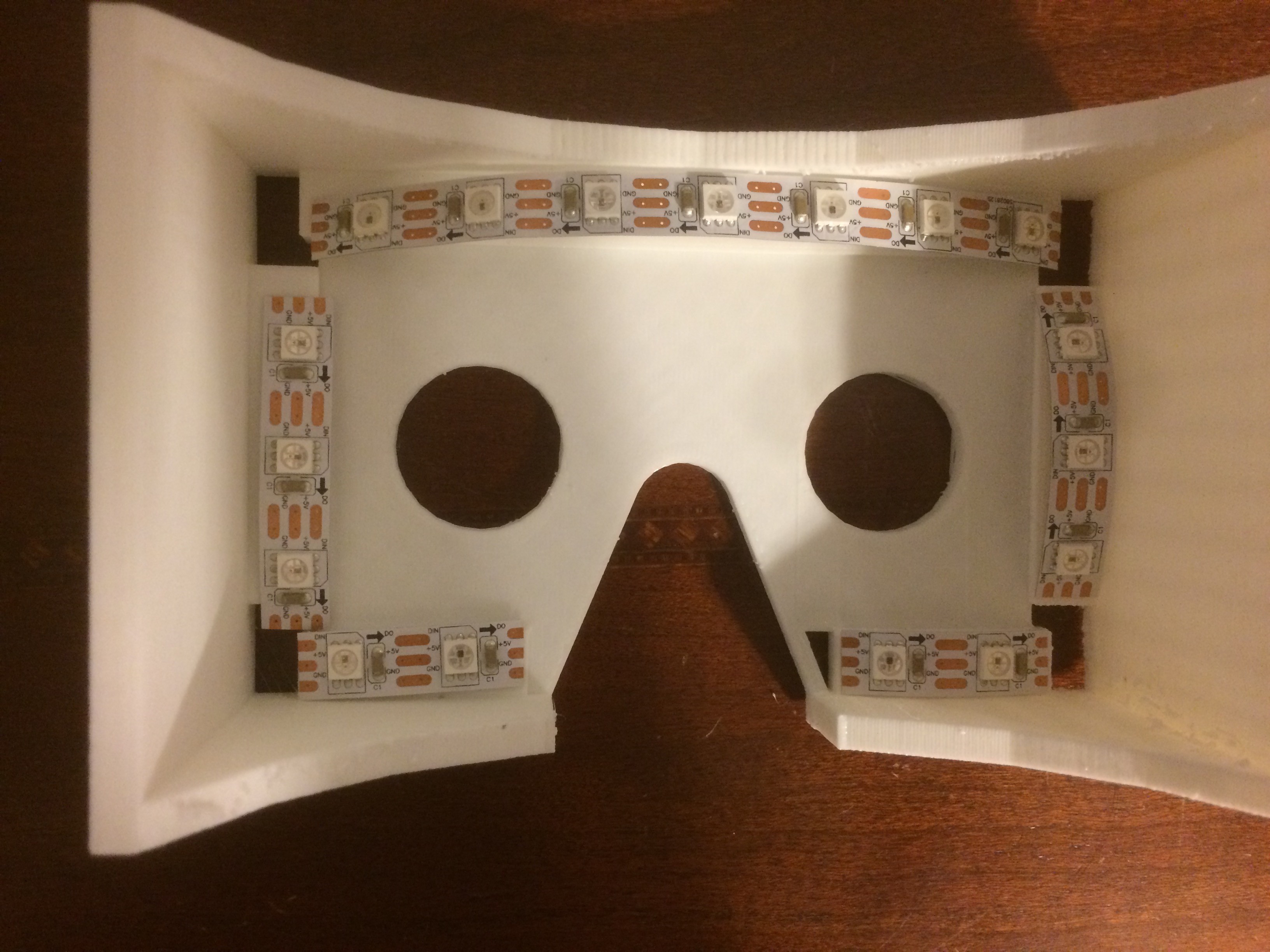

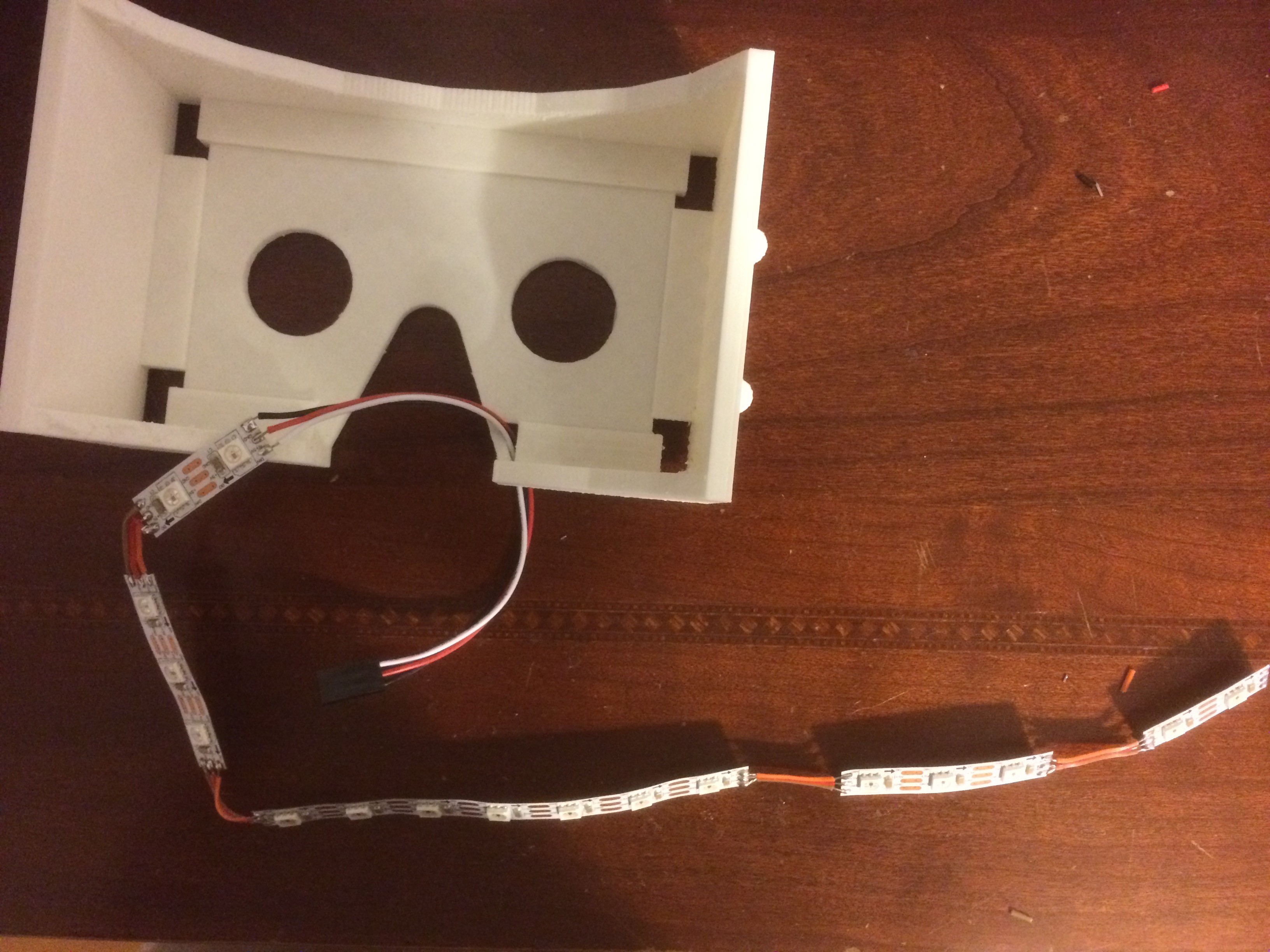

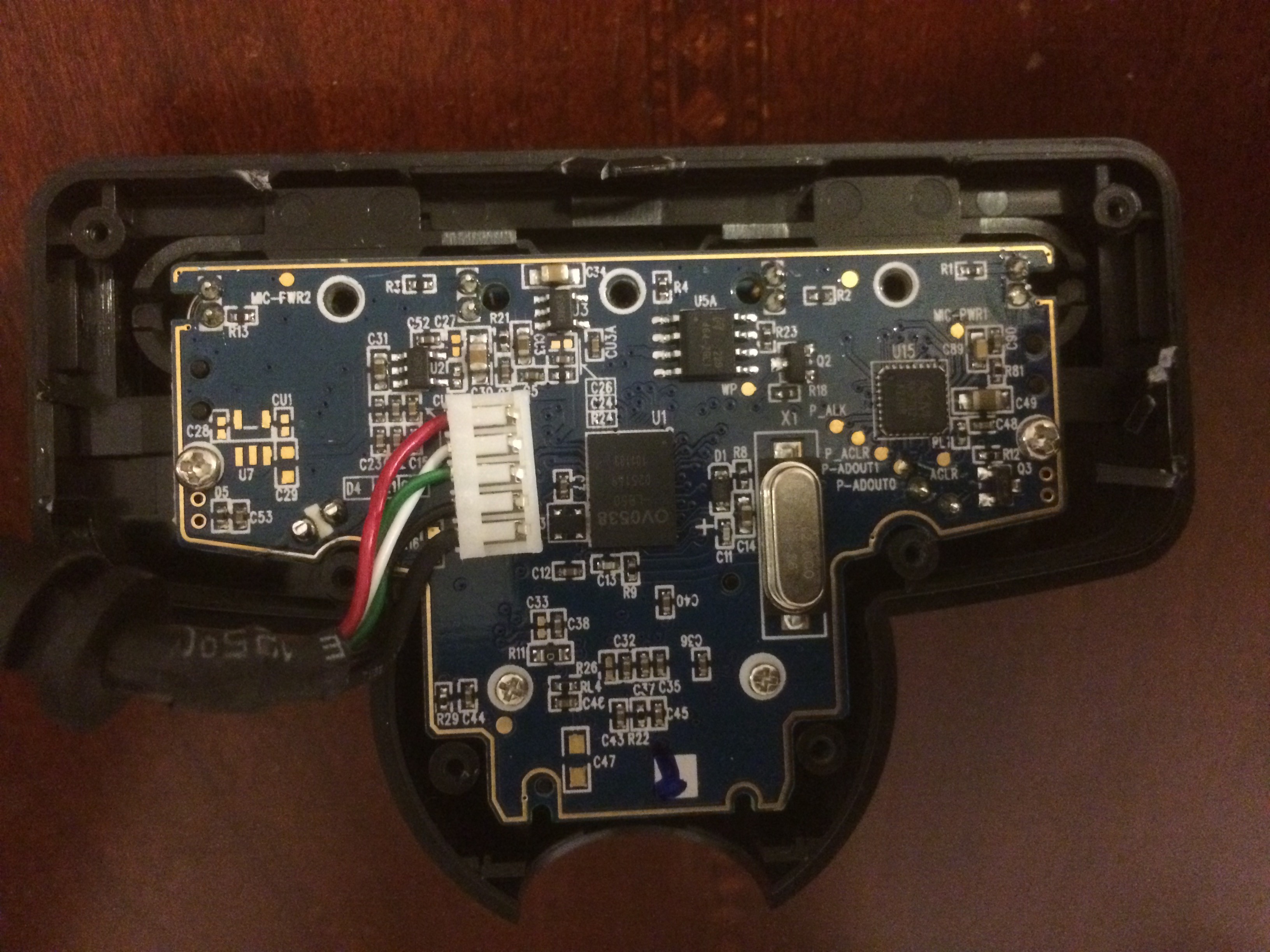

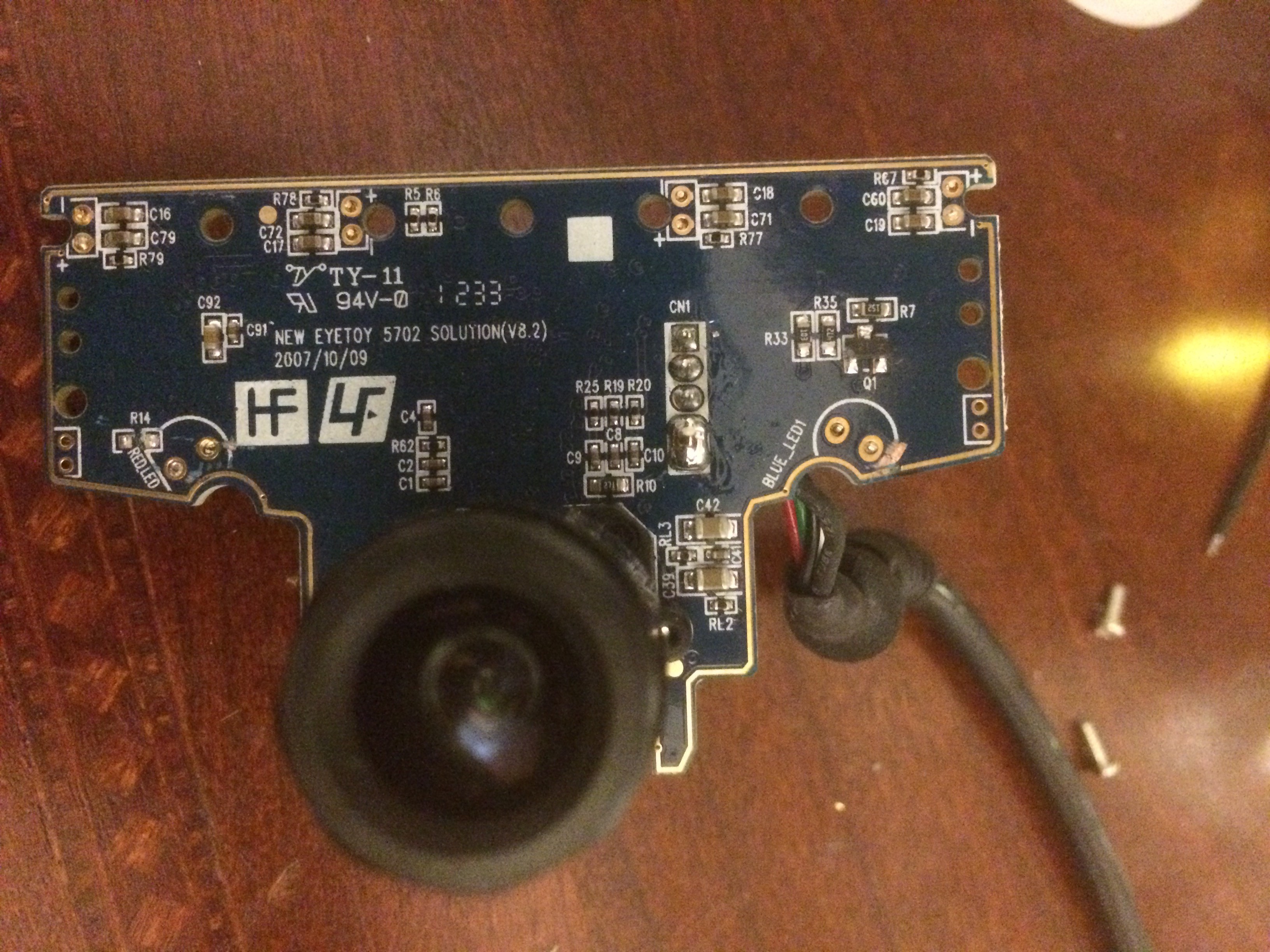

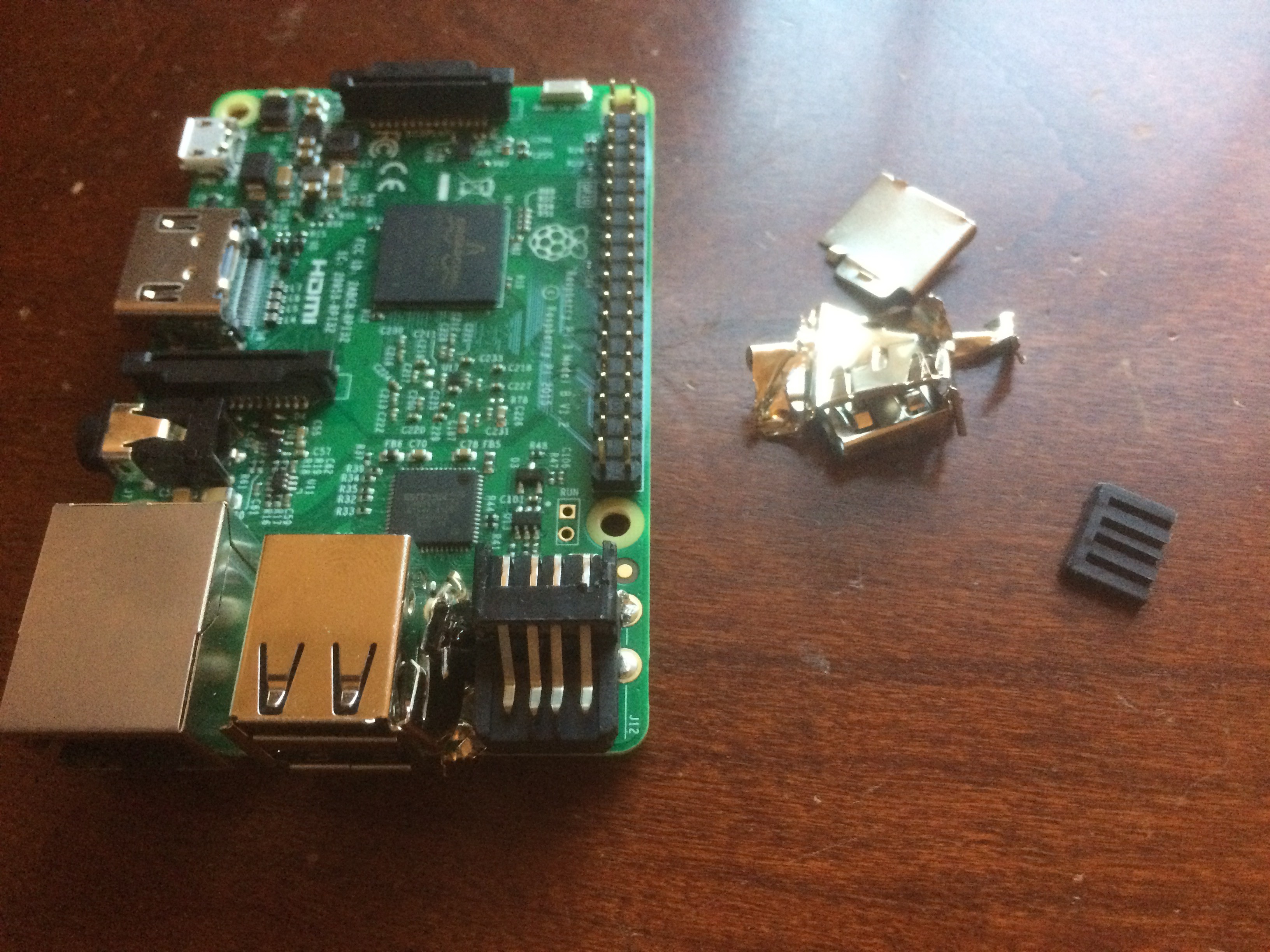

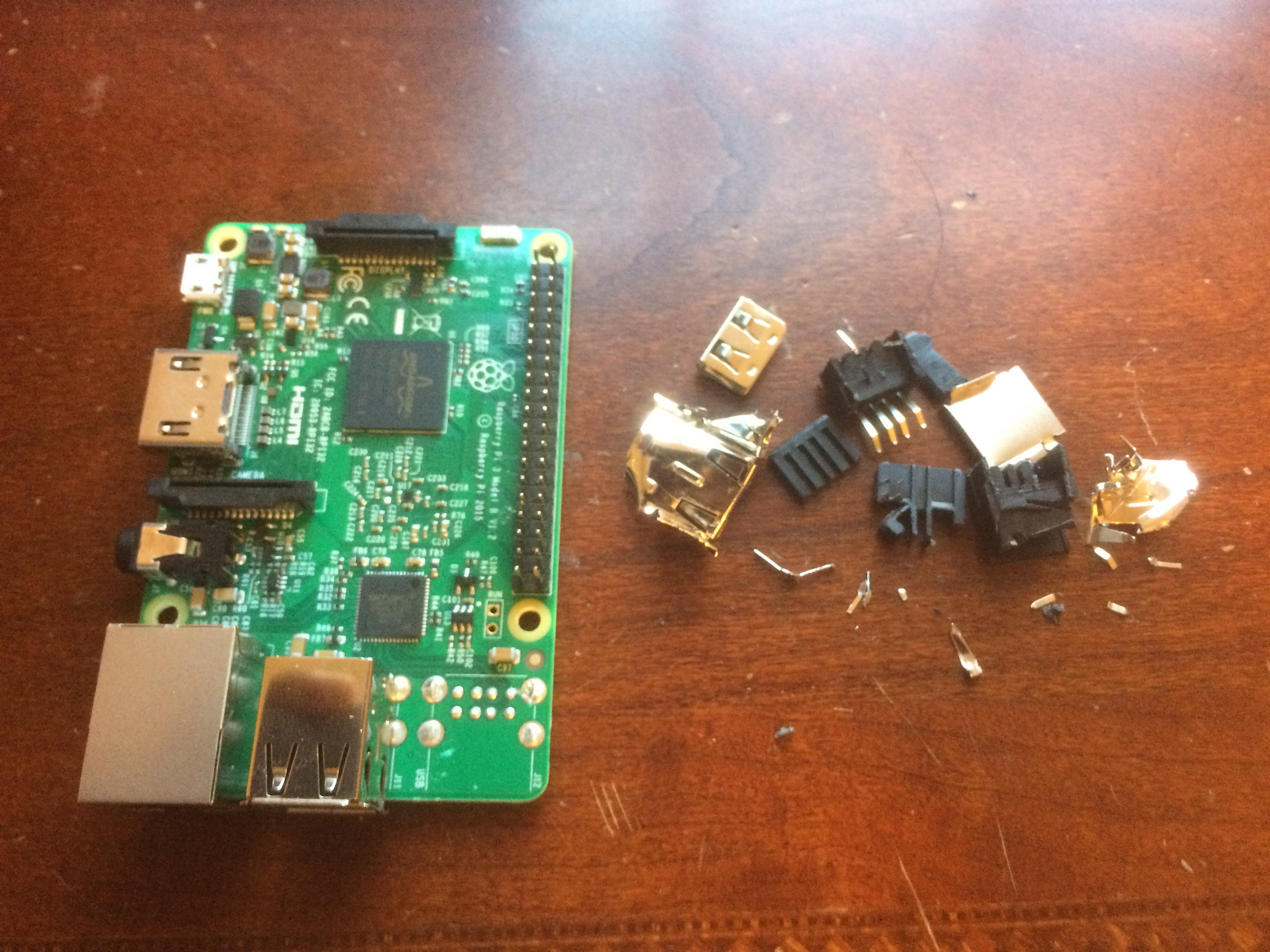

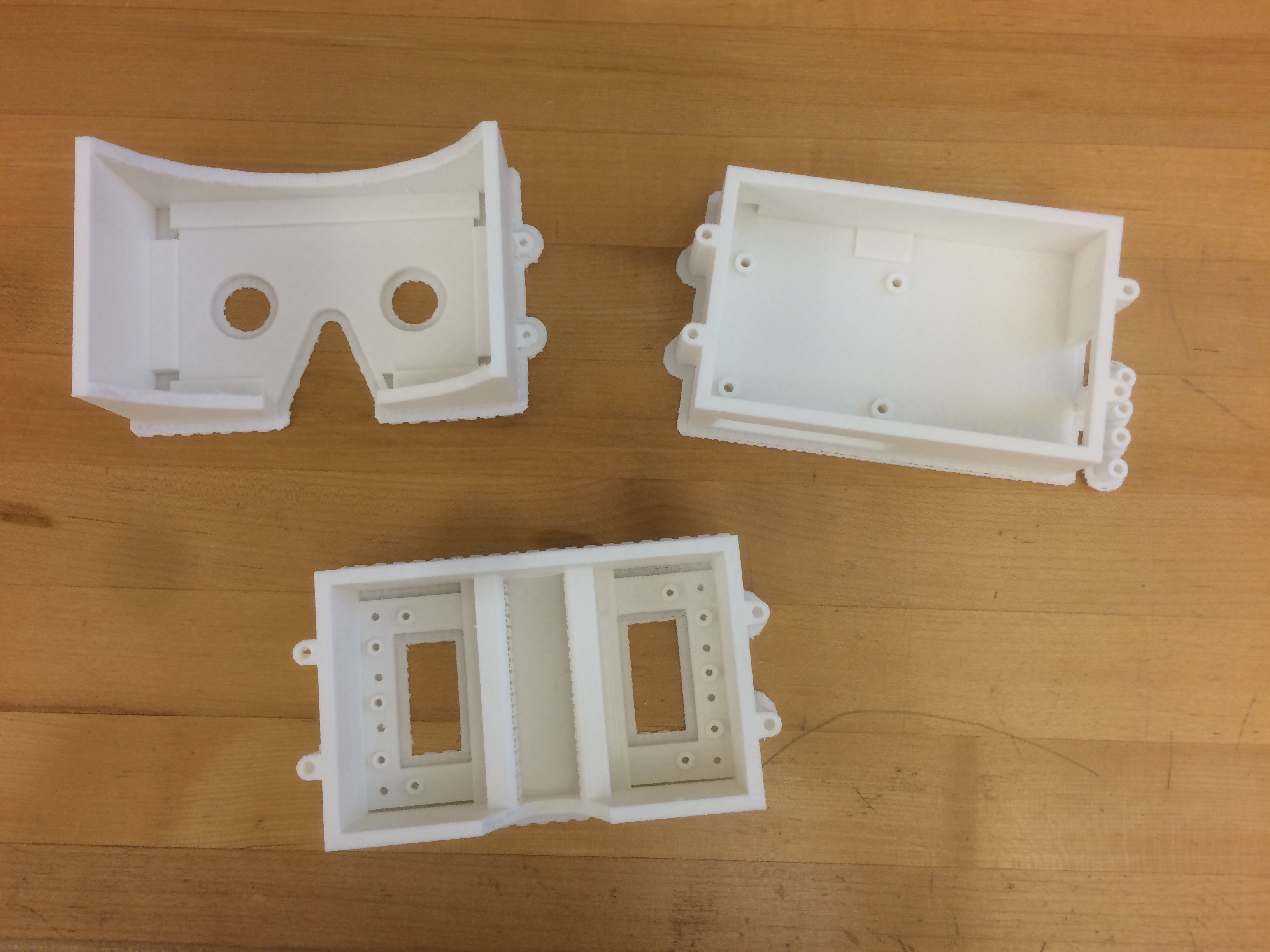

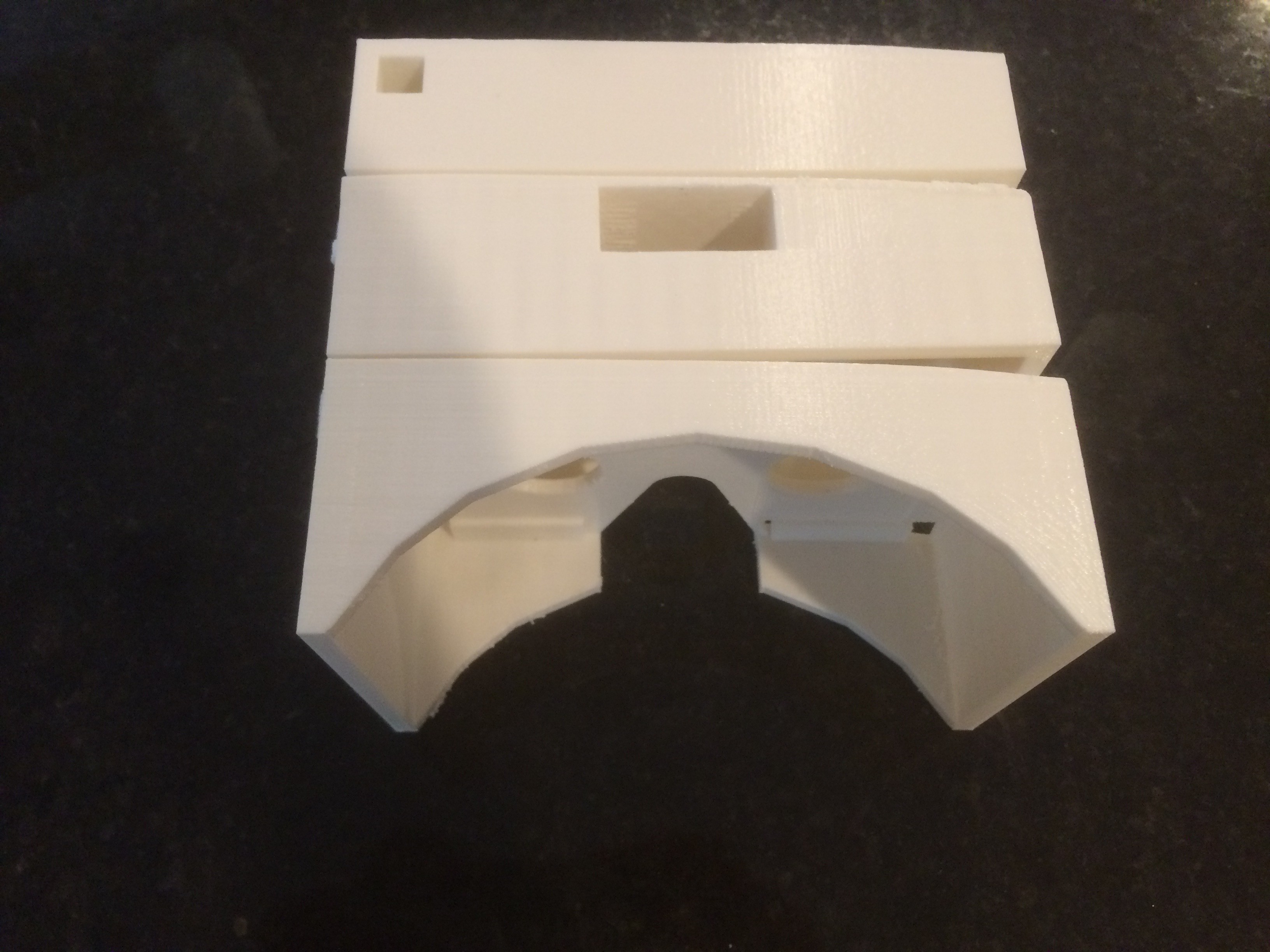

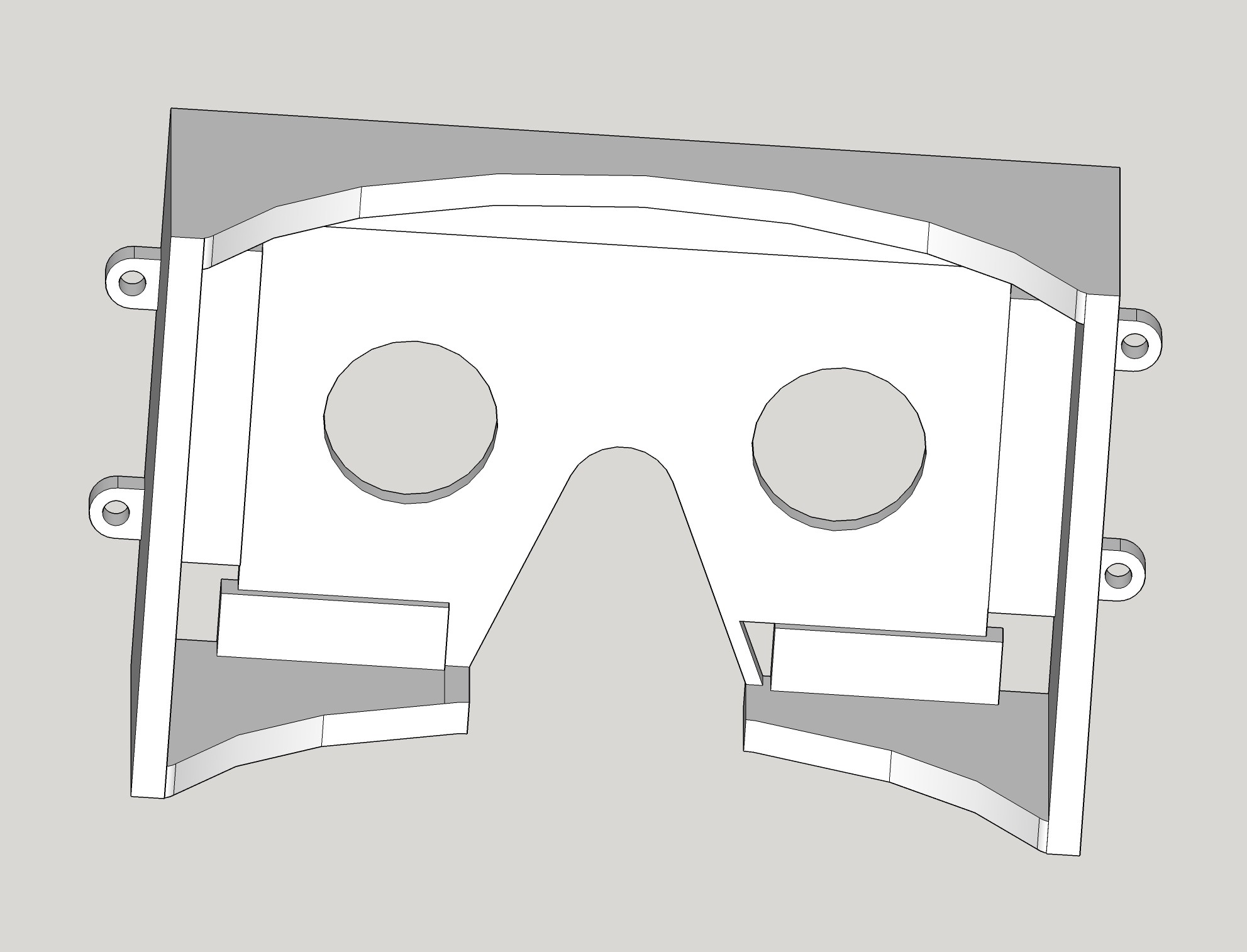

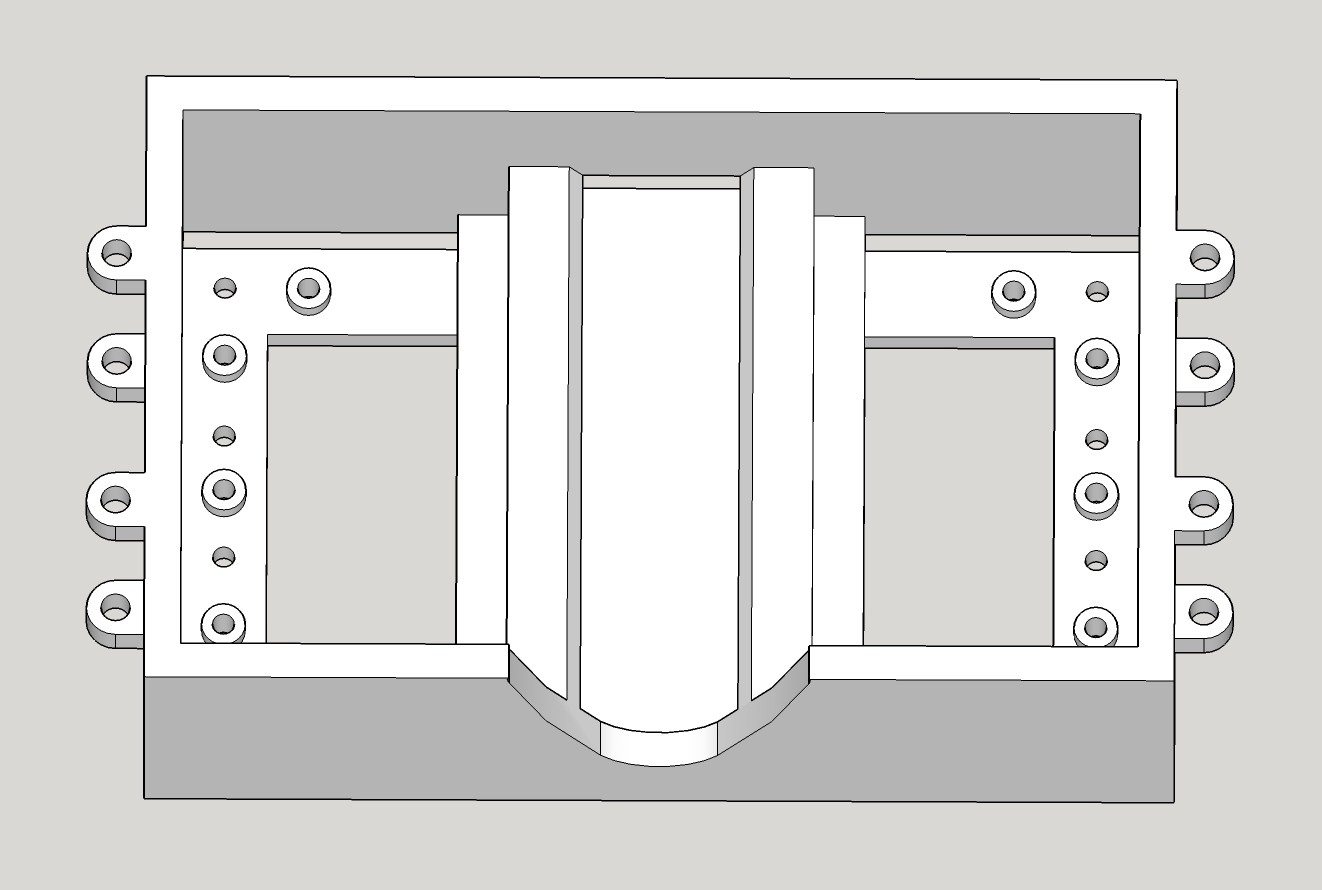

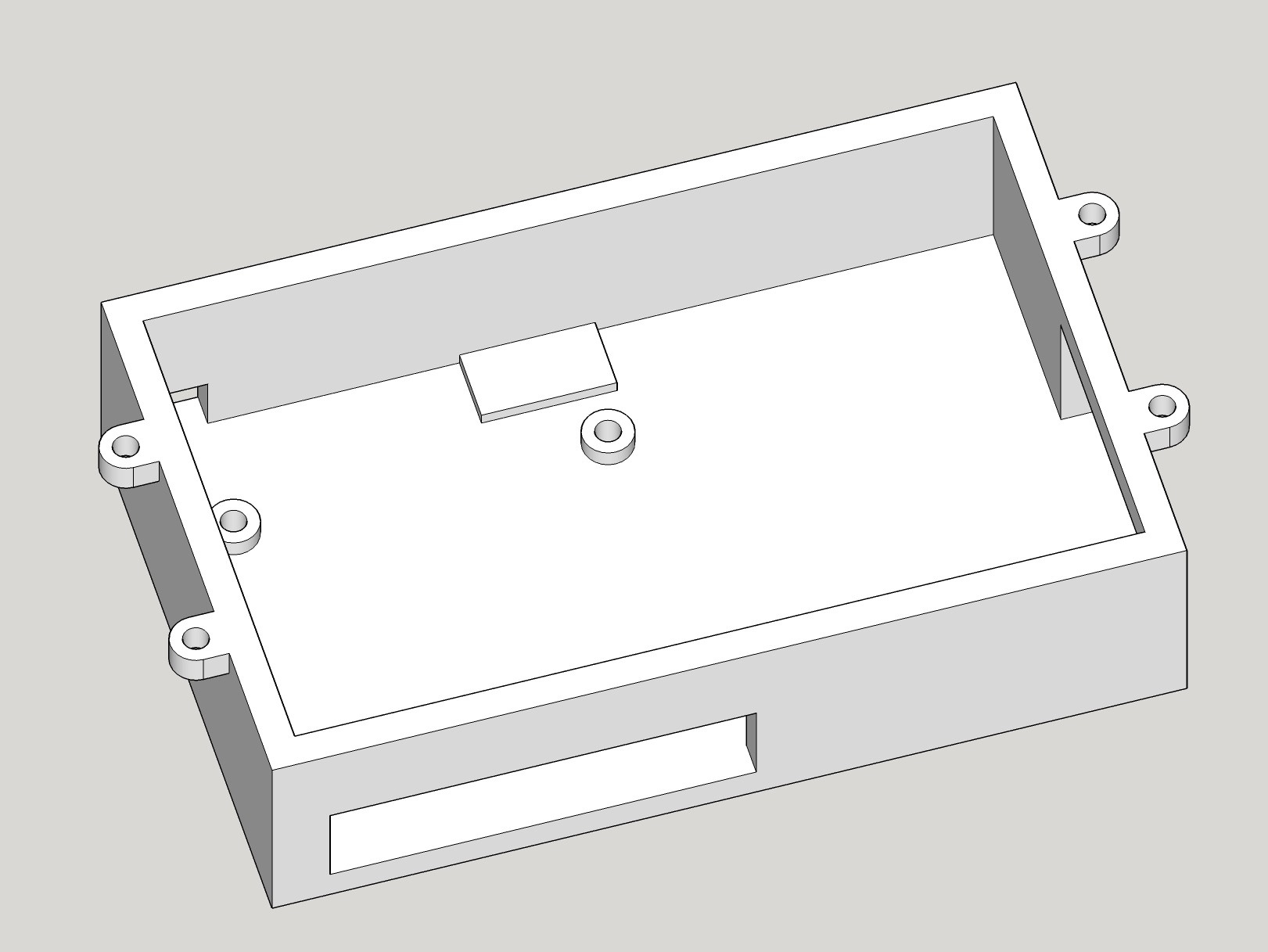

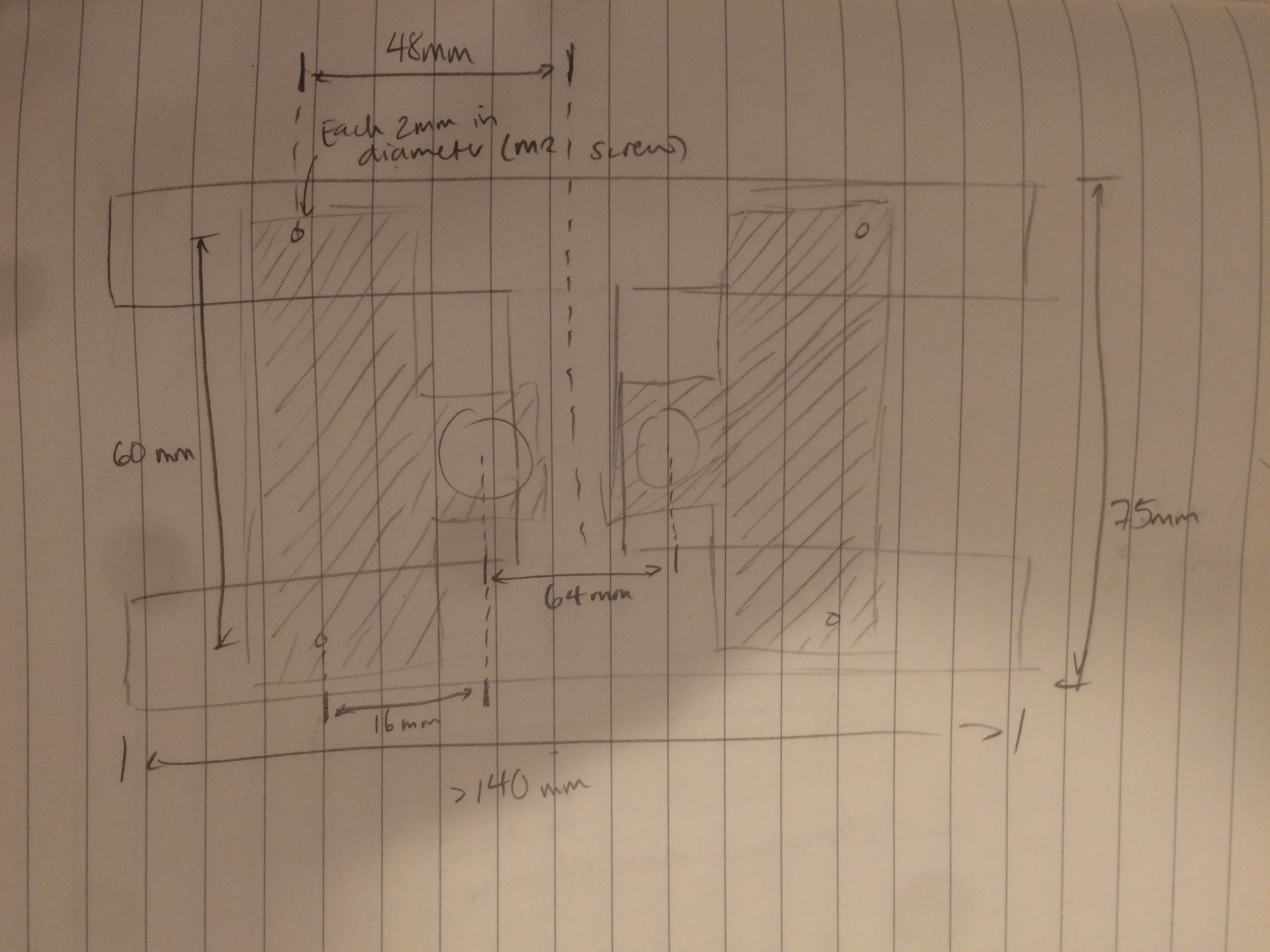

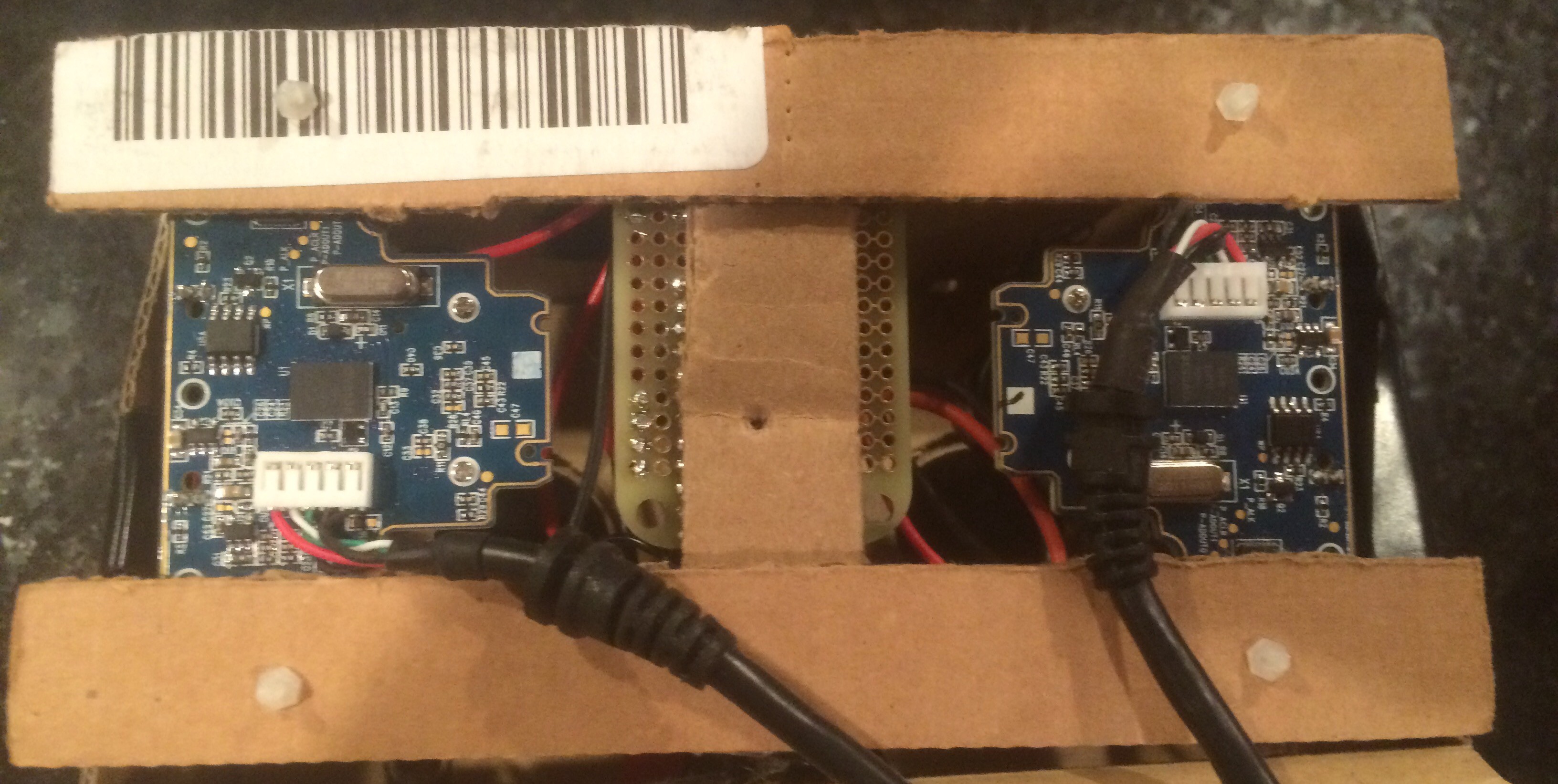

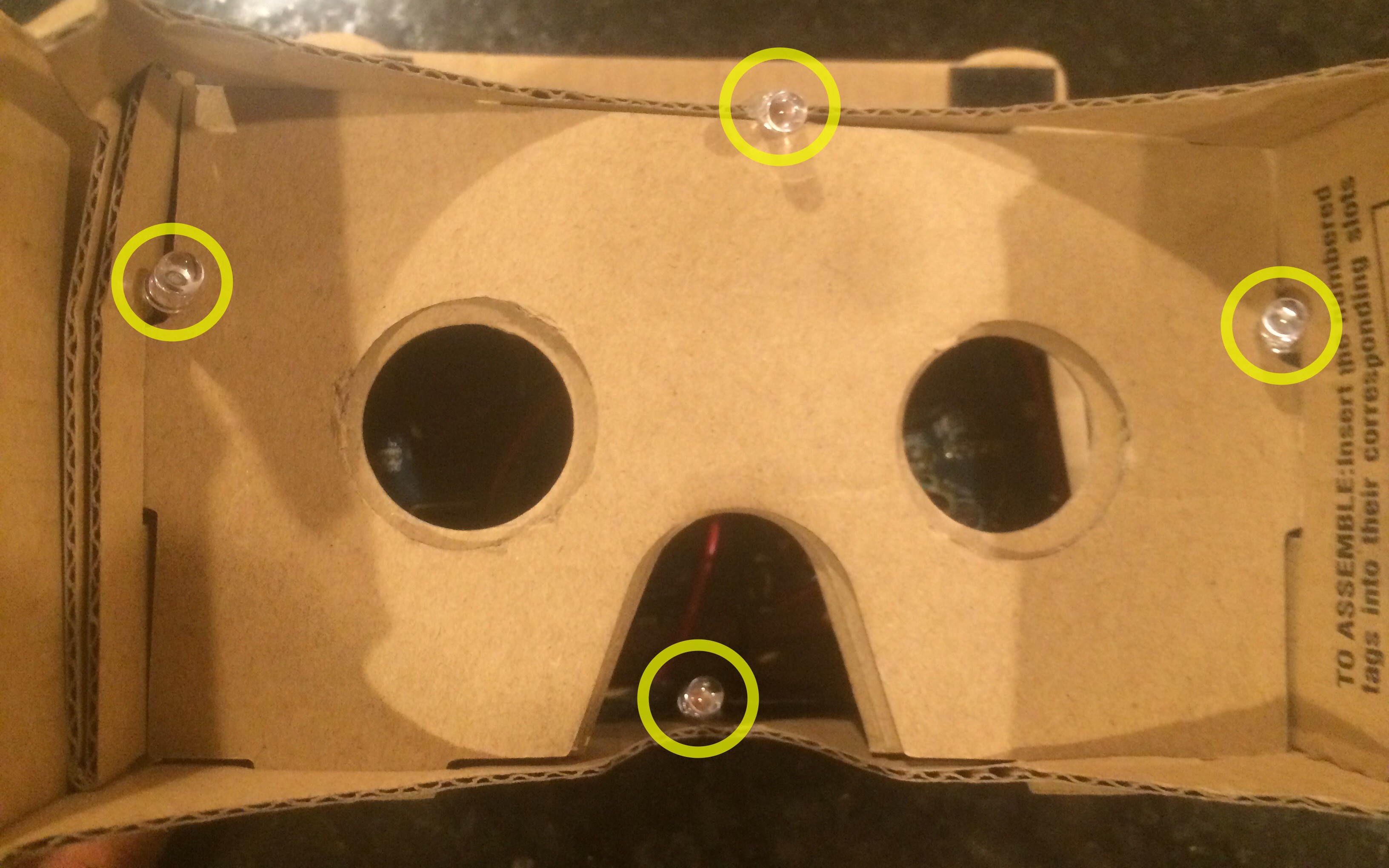

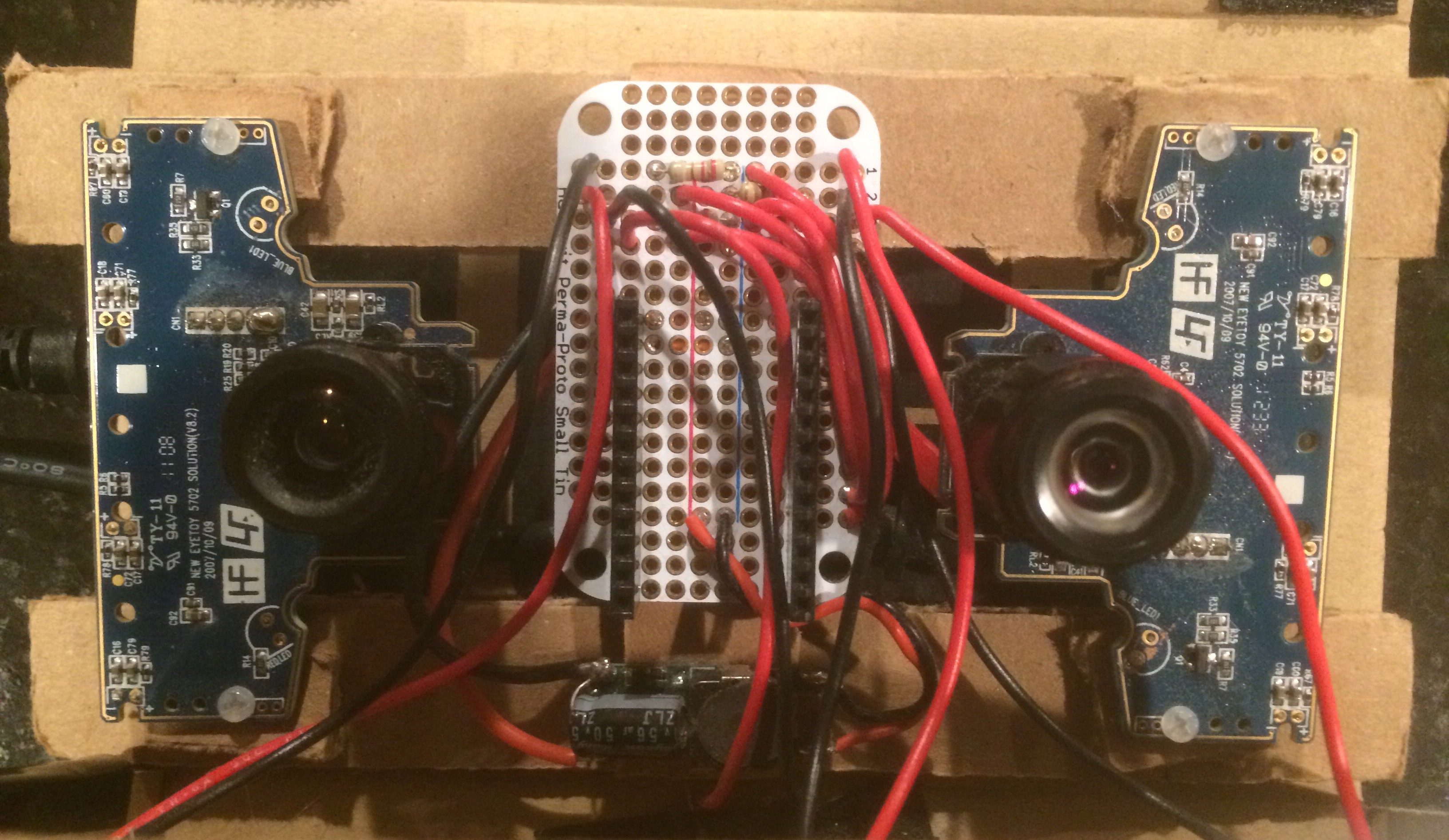

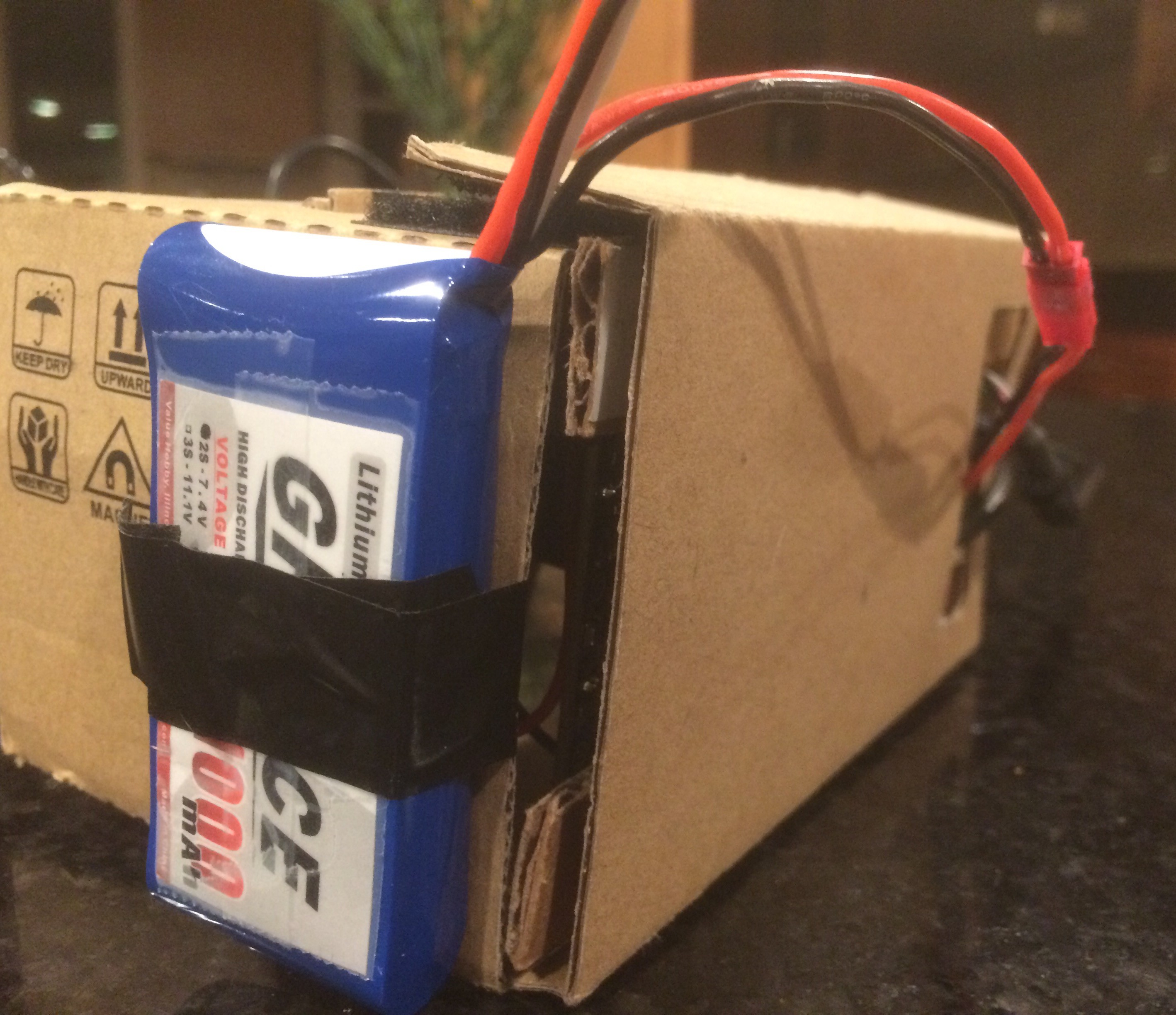

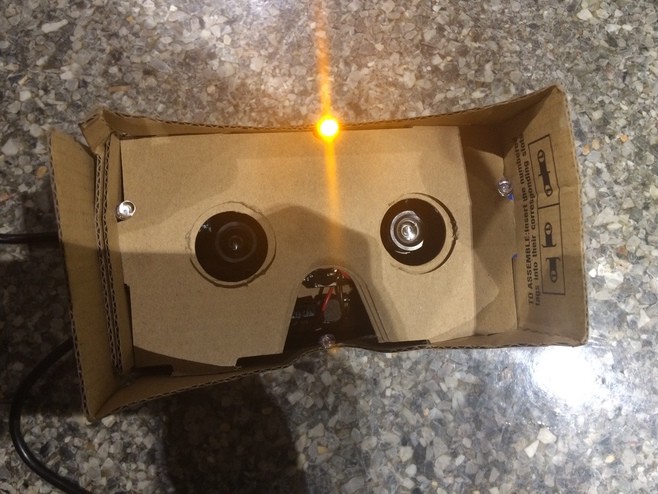

Our prototype was finished! If you look closely at the picture of the completed device, you can see the PlayStation cameras and some of the circuitry inside the Cardboard.

Audrey Robinel

Arcadia Labs

jdelbe