We all know that bees are under threat from modern farming practices, but did you know about the Asian hornet, slowly spreading north through the united states of Europe, closer and closer to the Land of Dragons (where I live)? The Asian hornet is a voracious predator and will 'hawk' outside bee hives, swooping down on bees in flight as they return home to feed their brood. Then, as the hive becomes weaker and weaker due to diminishing bee numbers, the hornets will enter the hive and gobble up all the innocent youngsters inside.

So what do we do, give up without a fight? Hell no! We should fight back against this monstrous predator with everything that we've got! In this case we'll be using so-called 'artificial intelligence' to 'infer' the location of the hornet and attempt to destroy it, preferably as dramatically as possible.

Since the hornets have not actually arrived here yet, I'll be attempting to train my machine to detect the common European wasp, Vespula vulgaris, which also preys on the larvae of honey bees but is not quite the threat that the Asian hornet is. Below is an Asian hornet hawking a honey bee:

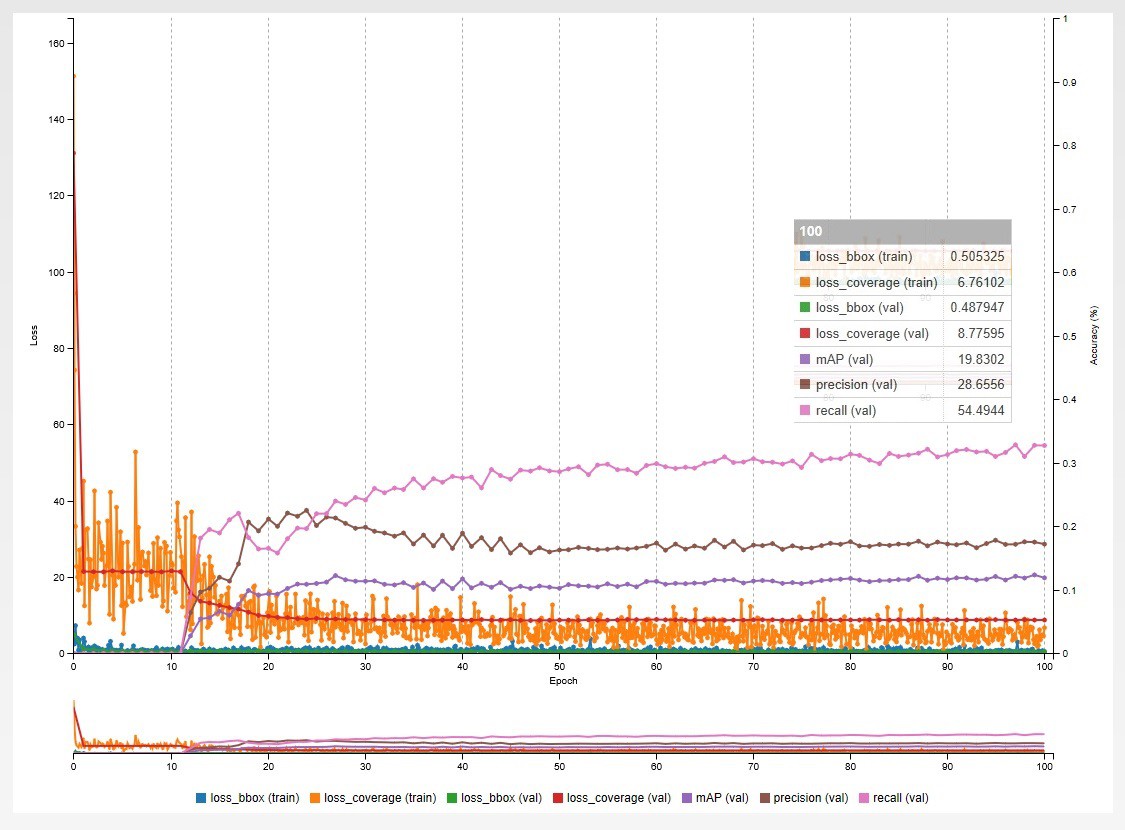

One of the great things about this project is that anybody can get involved, and no equipment is required other than your regular PC connected to the internet. This is because most of the work is data preparation, such as processing images and creating labels. The data can then be uploaded to Amazon Web Services (AWS) for processing, and nice-looking graphs can be produced, such as the one below:

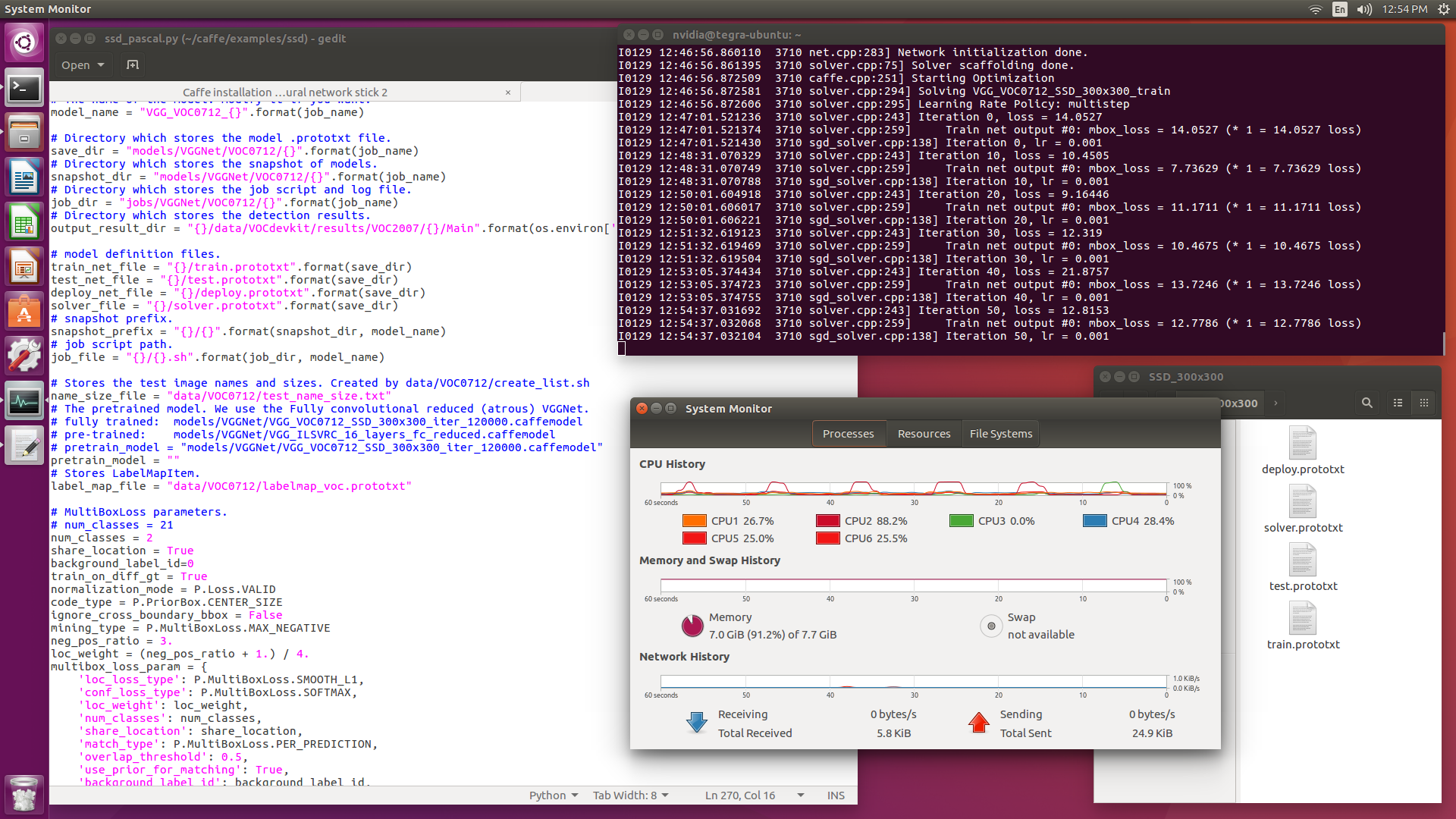

In fact, some companies and individuals run online competitions with quite substantial prizes for doing this kind of work with other image sets, such as photos of whales, where you'd need to adjust the neural network itself to get a chance of winning.

Full instructions for using AWS and Nvidia DIGITS are given in the logs. NB: AWS do charge a range of fees for their service, but currently (2018) I can train networks in about 1.5 hours at $3.10 an hour with no flat-rate charges, which is IMO cheap considering that the equipment used to do this costs many thousands of dollars to buy.
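As a rough worked example of the AWS figures quoted above (about 1.5 hours per training run at $3.10 an hour), the sketch below estimates what a run costs. The function name and the ten-run figure are hypothetical, and it assumes the hourly rate is the only charge, which ignores the other AWS fees such as storage and data transfer.

```python
def training_cost(hours: float, rate_per_hour: float, runs: int = 1) -> float:
    """Estimated cost in dollars, assuming only the hourly instance fee applies."""
    return round(hours * rate_per_hour * runs, 2)


# Figures quoted in the text: about 1.5 hours at $3.10 an hour.
single_run = training_cost(1.5, 3.10)
# Hypothetical: ten training runs while tuning the data set.
ten_runs = training_cost(1.5, 3.10, runs=10)

print(f"One run:  ${single_run:.2f}")
print(f"Ten runs: ${ten_runs:.2f}")
```

So a single training run comes to under five dollars at the quoted rate.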

Capt. Flatus O'Flaherty ☠

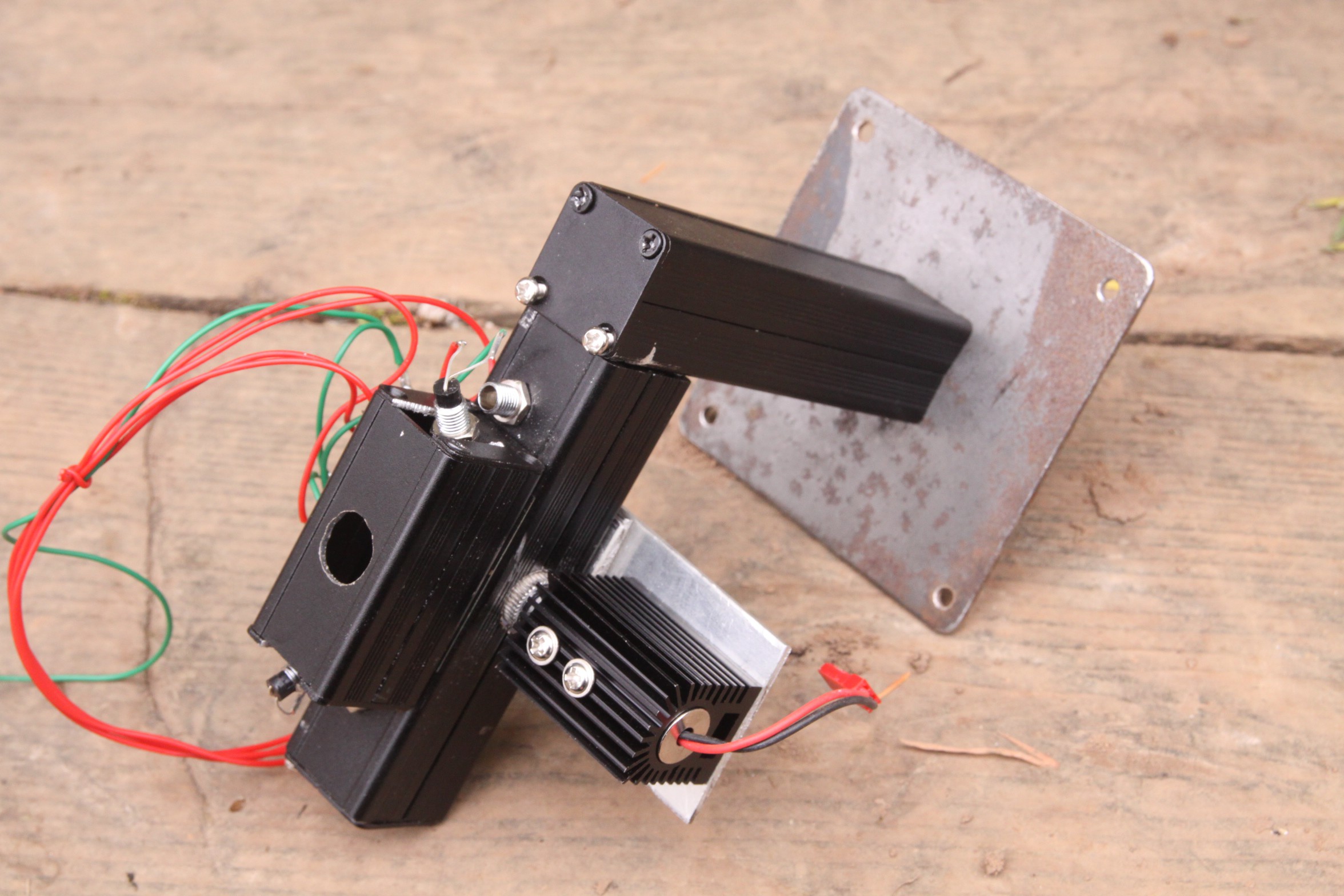

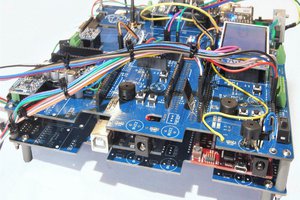

Aluminum box section has been used to produce a tunnel through which bees will travel to get into the hive. So will the wasps and Asian hornets if they want to attack. Bottom right is a 1 watt laser. Middle left is the hole for the camera, protected from bees by a glass microscope slide. Wasps detected by the camera will be zapped by the laser and simply fall out of the tunnel. This apparatus will hopefully be attached to a small beehive tomorrow to capture video footage of the bees inside, which will be used to create 'null' images for detection.
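The detect-then-zap idea described above can be sketched in a few lines. This is only an illustration, not the code running on the apparatus: the `Detection` class, the "wasp" and "bee" labels, the 0.8 confidence threshold and the three-frame rule are all assumptions, and the actual camera and laser control are left out. The sketch only decides, frame by frame, whether the laser should be on, and waits for a wasp to show up in several frames in a row so that a one-frame false alarm does not fire it.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass
class Detection:
    label: str         # hypothetical class name, e.g. "wasp" or "bee"
    confidence: float  # detector score between 0 and 1


def wasp_in_frame(detections: Iterable[Detection], threshold: float = 0.8) -> bool:
    """True if any detection in this frame is a sufficiently confident wasp."""
    return any(d.label == "wasp" and d.confidence >= threshold for d in detections)


def fire_decisions(frames: Iterable[List[Detection]],
                   threshold: float = 0.8,
                   min_consecutive: int = 3) -> List[bool]:
    """For each frame, decide whether the laser should be on.

    The laser is enabled only after a wasp has been seen in
    `min_consecutive` frames in a row (an assumed debounce rule).
    """
    decisions = []
    streak = 0
    for detections in frames:
        streak = streak + 1 if wasp_in_frame(detections, threshold) else 0
        decisions.append(streak >= min_consecutive)
    return decisions


# Made-up detector output for six video frames.
frames = [
    [Detection("bee", 0.90)],
    [Detection("wasp", 0.90)],
    [Detection("wasp", 0.85), Detection("bee", 0.70)],
    [Detection("wasp", 0.95)],
    [Detection("wasp", 0.50)],  # below threshold, so the streak resets
    [],
]
print(fire_decisions(frames))
```

The 'null' images of bees mentioned above matter here: the fewer bees the network mistakes for wasps, the lower the threshold and frame count can safely be set.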

Wajid Ahmad

Johanna Shi

I came across your project today. Really interesting. Funnily enough, I have built something very similar to see if it could work. I trained my model on house flies, as they are easy to come by for testing. I made a short video of the machine here: https://youtu.be/z8EO5KvcxNA. I'm now editing images of Asian hornets and bees to train a new model. Happy to chat further if anyone is interested.