Prerequisites for reproducing this project:

- Good familiarity with Linux and ARM-based single-board computers.

- Basic ability to code in Python and C++, and to use compilers / build systems.

- A good level of welding and fabrication skill.

- Good knowledge of Ethernet, WiFi and TCP / IP.

- Basic knowledge of electrics / electronics.

- No expert knowledge required.

As previously mentioned, this machine is deliberately kept as simple as possible while still enabling basic autonomous navigation around a farm. There is currently only one set of sensors (advanced GPS sensors), and these obviously need a platform to work from, so the sensors and associated software are only a small part of the project. Once the basics are in place, a robot developer can add whatever other sensors they might require.

As the title suggests, this project is all about providing solutions that are relatively easy and cheap to implement, yet still effective enough to get excellent results. People who visit the site often ask "What does it actually do?", assuming it must weed crops or suchlike, and we reply "It does absolutely nothing. It's a Do Nothing robot." This is a slight exaggeration, as it could quite happily tow an agricultural 'implement' such as a mower, but essentially all it does is drive around a field autonomously.

The chapters in the instructions are as follows:

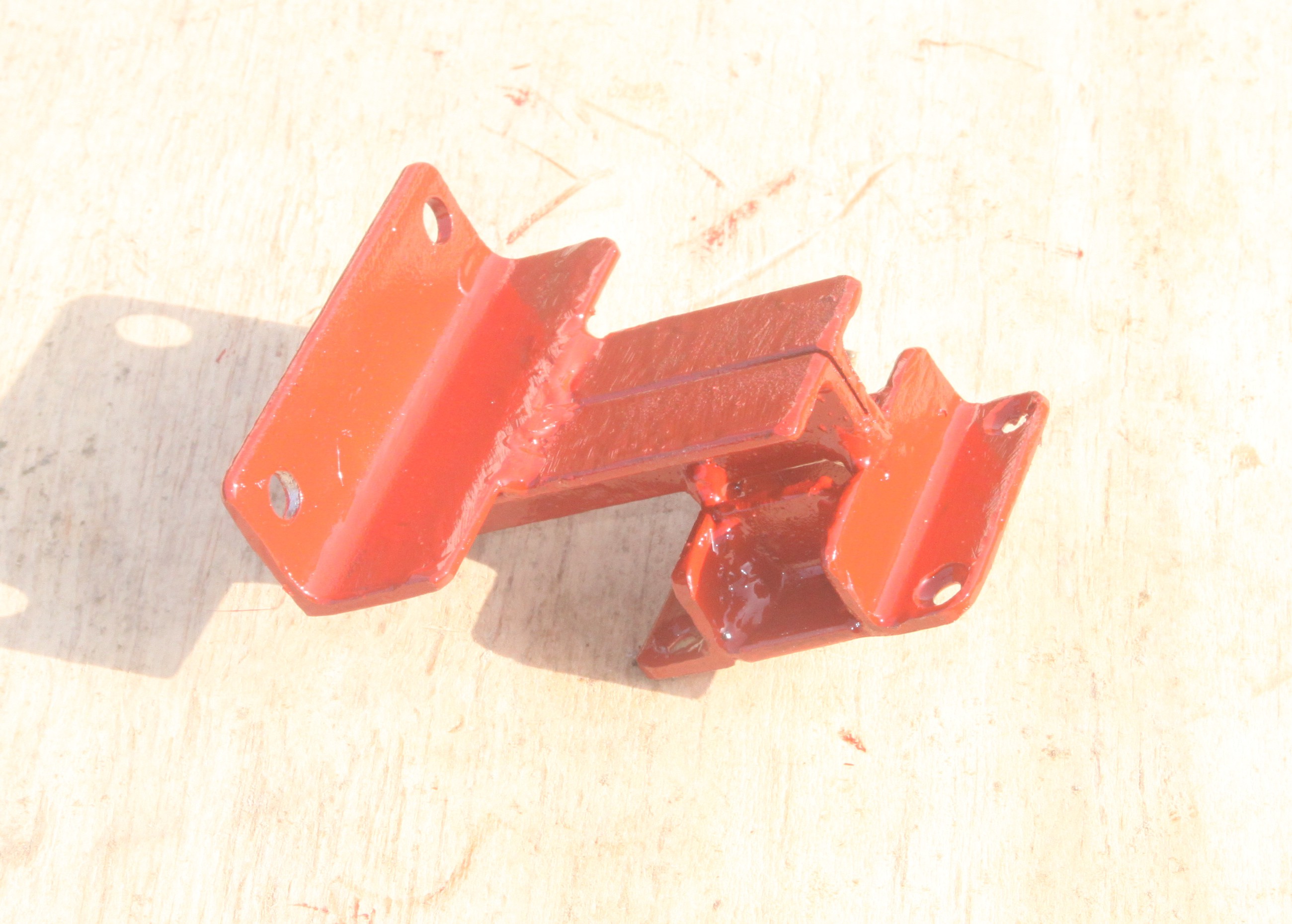

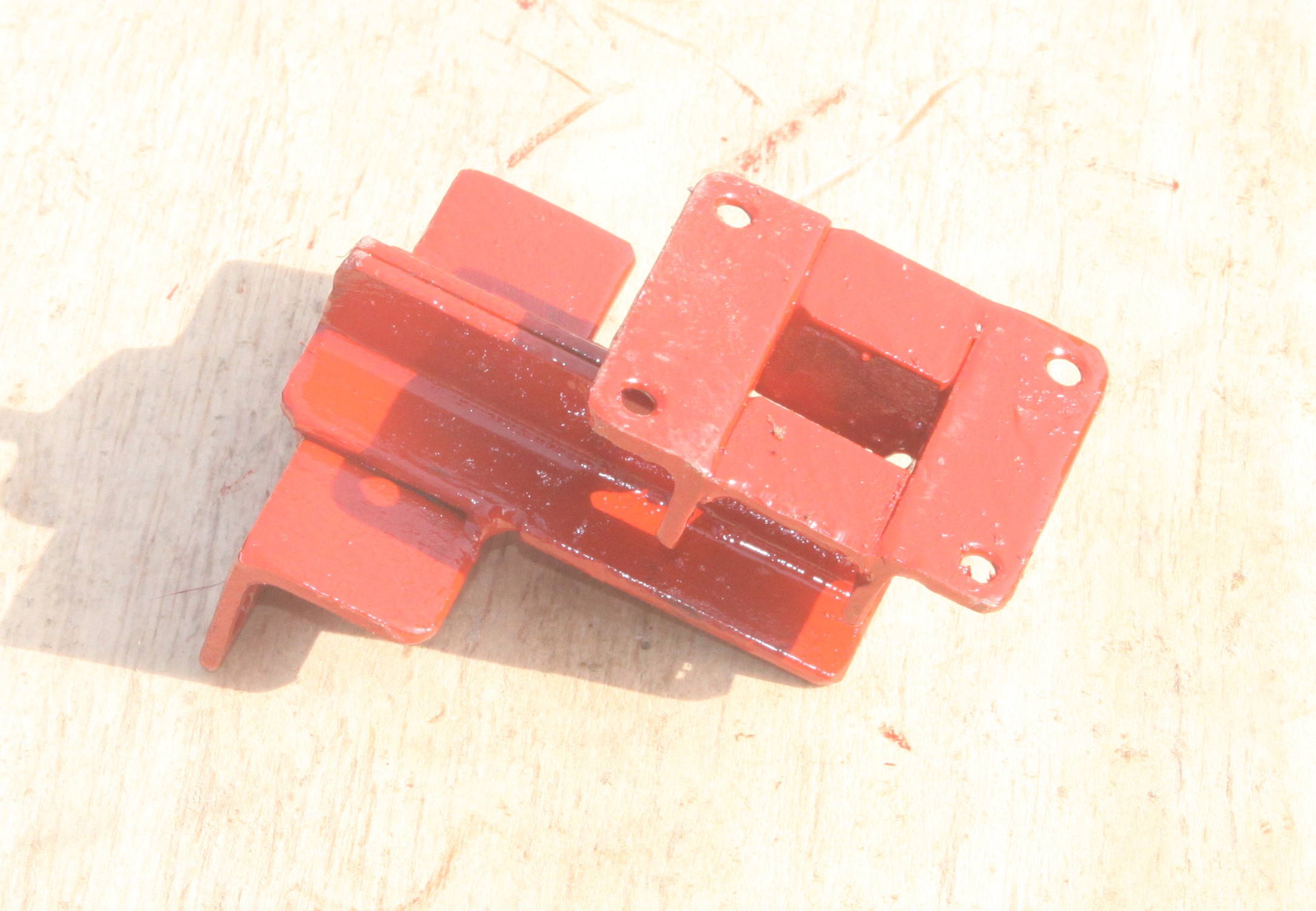

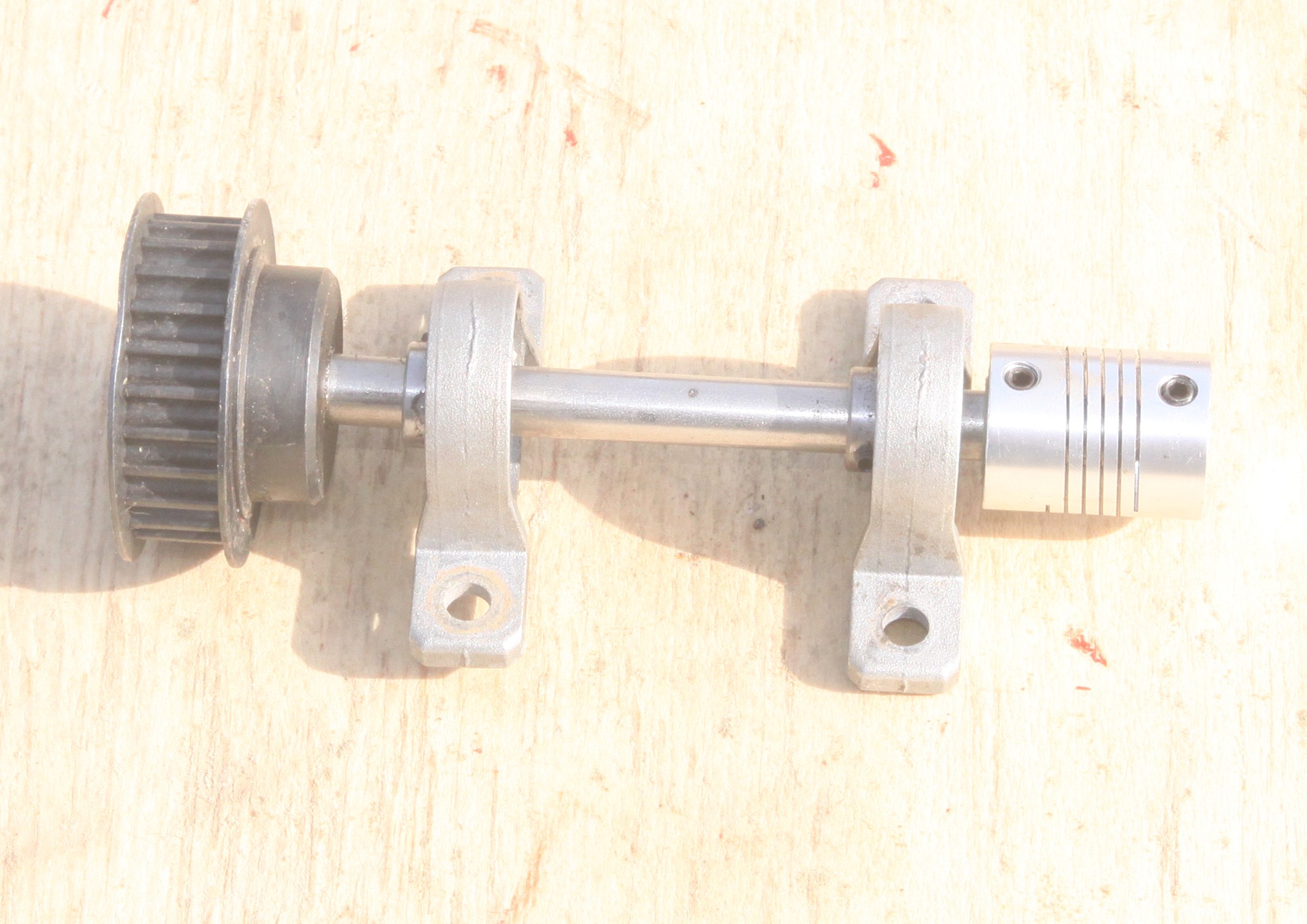

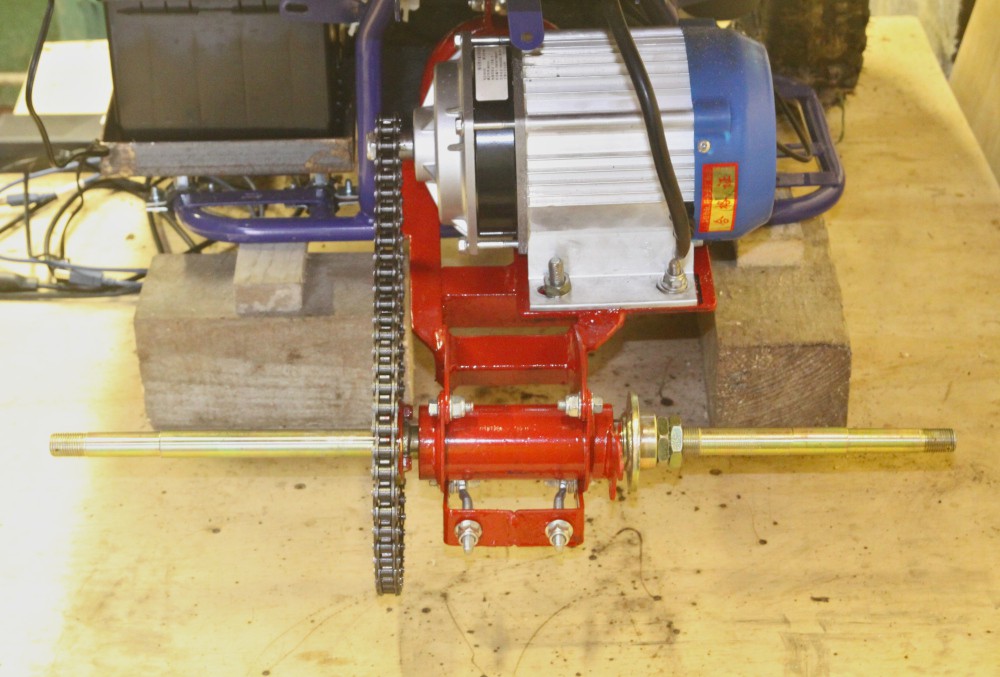

- Mechanicals

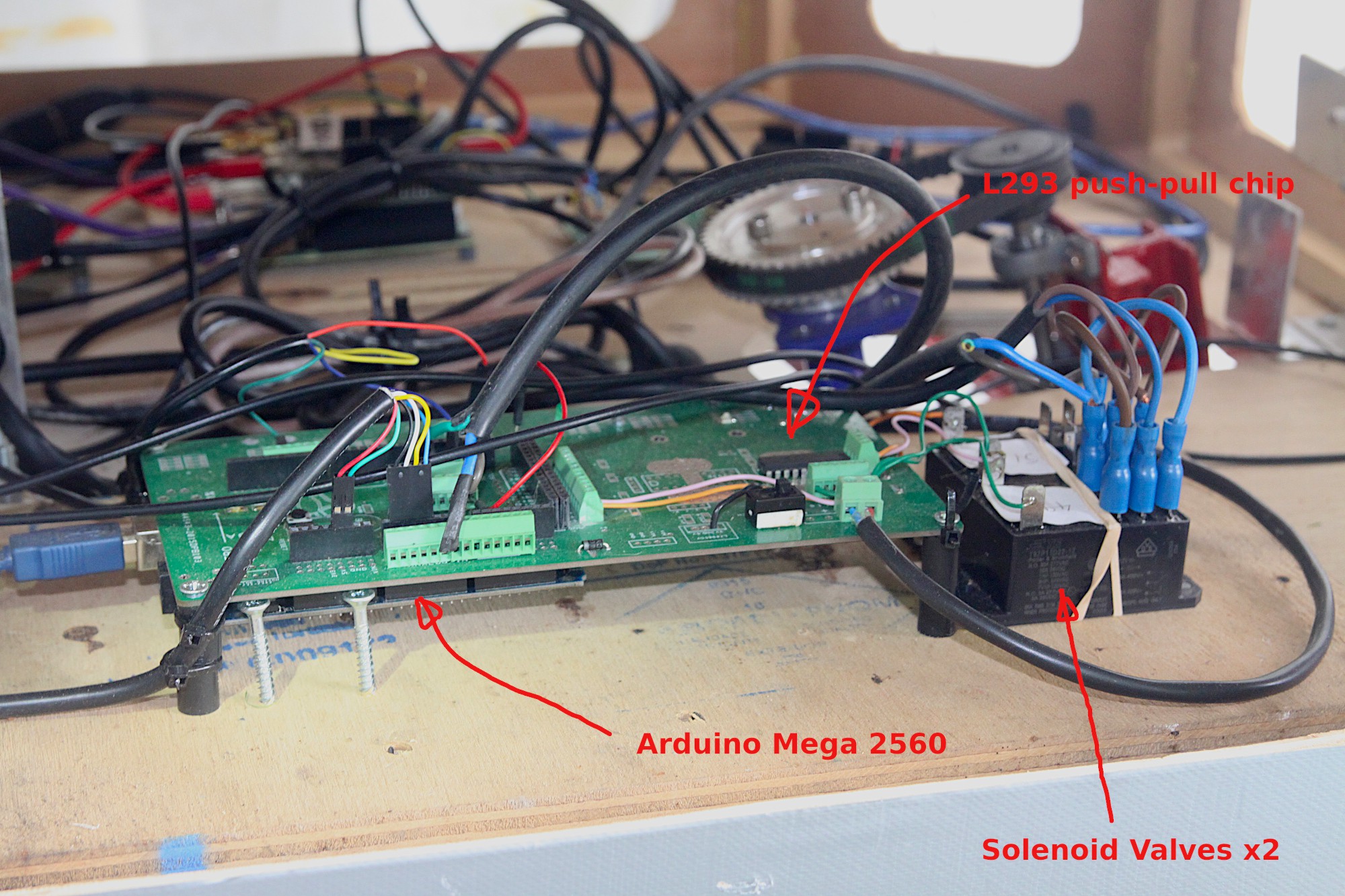

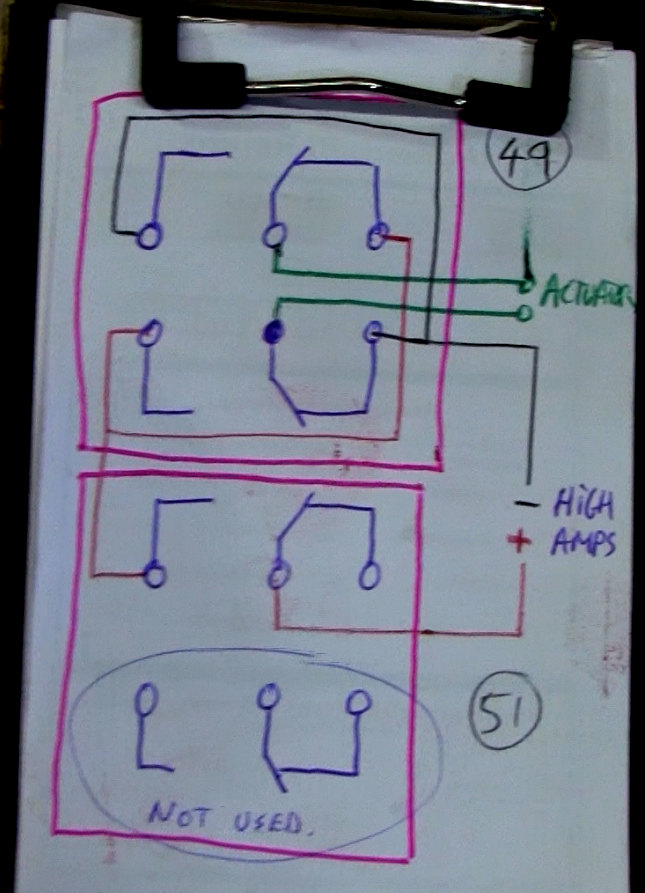

- Electrics / Electronics

- Control system

- Radio communications

- Sensors

- Software

The Instructions section describes the features that WORKED and that we want to keep. The log section describes features that we experimented with and that either failed or that we decided not to pursue, for whatever reason. Both successes and failures are extremely valuable on the path forward, and both deserve to be documented equally.

Capt. Flatus O'Flaherty ☠

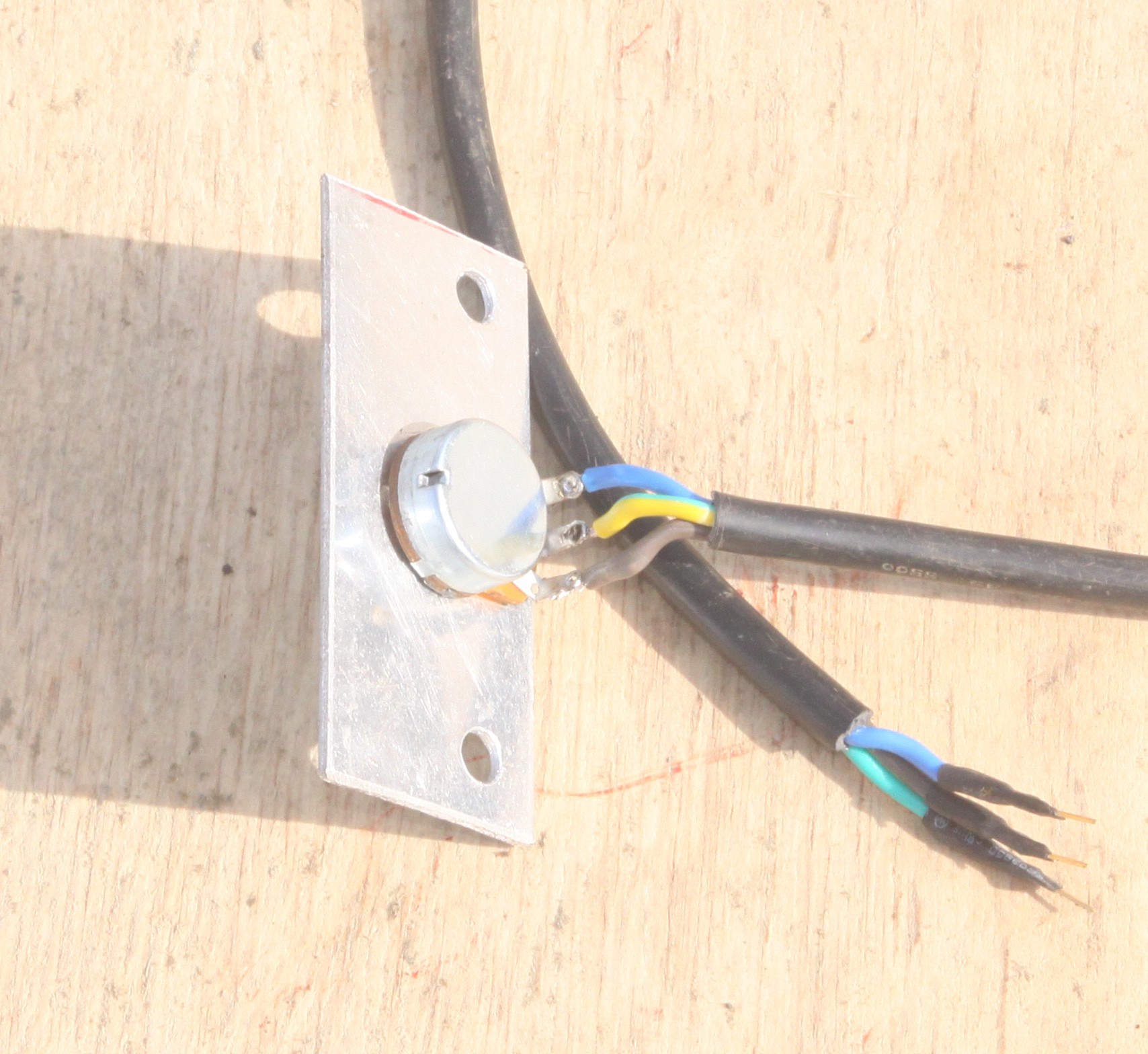

The L293 driver chip, shown above, lets the controller's low-current logic signals switch the higher current and voltage needed by the solenoid valves, shown below.
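The following is a minimal Python sketch of how a controller might drive one L293 channel for one valve. It assumes the valve coil is wired between the L293 output 1Y and ground, with the 1,2EN and 1A pins connected to two GPIO lines. The pin numbers (17 and 27), the class name `SolenoidValve` and the injected `write_pin` function are hypothetical and not taken from this build; passing the pin writer in keeps the logic testable away from the hardware.

```python
from typing import Callable, Dict

# Hypothetical GPIO pin numbers (BCM numbering assumed), not from the original build.
ENABLE_PIN = 17  # drives the L293 1,2EN pin
INPUT_PIN = 27   # drives the L293 1A input; output 1Y feeds the valve coil


def l293_output(enable: bool, input_high: bool) -> str:
    """Truth table of one L293 half-H driver: 'H', 'L' or 'Z' (high impedance)."""
    if not enable:
        return "Z"
    return "H" if input_high else "L"


class SolenoidValve:
    """One solenoid valve wired between an L293 output (1Y) and ground.

    write_pin is any callable taking (pin, level); it is an assumption of
    this sketch, so real GPIO code or a test fake can be plugged in.
    """

    def __init__(self, write_pin: Callable[[int, bool], None],
                 enable_pin: int = ENABLE_PIN, input_pin: int = INPUT_PIN) -> None:
        self._write = write_pin
        self.enable_pin = enable_pin
        self.input_pin = input_pin
        self.de_energize()  # start with the coil switched off

    def energize(self) -> None:
        # Input high with the driver enabled: 1Y drives the coil from the L293 supply (VCC2).
        self._write(self.input_pin, True)
        self._write(self.enable_pin, True)

    def de_energize(self) -> None:
        # Input low with the driver enabled: 1Y is pulled to ground, so no coil current.
        self._write(self.input_pin, False)
        self._write(self.enable_pin, True)


if __name__ == "__main__":
    pins: Dict[int, bool] = {}  # fake GPIO state for a dry run
    valve = SolenoidValve(lambda pin, level: pins.__setitem__(pin, level))
    valve.energize()
    print(l293_output(pins[ENABLE_PIN], pins[INPUT_PIN]))  # H: coil energized
    valve.de_energize()
    print(l293_output(pins[ENABLE_PIN], pins[INPUT_PIN]))  # L: coil off
```

On a Raspberry Pi, `write_pin` could be `RPi.GPIO.output`, after calling `GPIO.setmode(GPIO.BCM)` and `GPIO.setup(pin, GPIO.OUT)` for both pins. Whether an energized coil opens or closes the valve depends on whether the valve is normally closed or normally open. The L293D variant has internal output clamp diodes for inductive loads such as valve coils; the plain L293 needs external diodes.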

Superb project; I like the choice of chassis a lot. May I ask what stroke length the linear actuator has, and would you change anything about it?

Thanks