Introduction

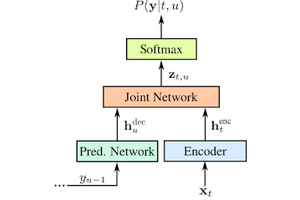

This project uses the Whisper and Codex models to create an AI assistant that translates voice commands into MicroPython code.

It can understand commands such as: “Create a task that checks the weather every 10 minutes at www.tiempo.com and if it is cold in Alicante, turn on the heating.”

To make it more convenient for the user, the project also supports voice recognition and translation from any language into English. This is possible thanks to Whisper, an OpenAI model that was, at the time of the project, the most advanced voice recognition model available.

Although the project is very promising, it is still slow: each request can take more than 10 seconds to complete. Even so, I think it clearly shows the potential.

Application

The application is defined in main.py and is quite simple: all it does is connect to two internet services (Whisper and Codex) and display text on the screen. Its main class initializes and holds all the necessary objects.

The actions are performed in the callbacks, which are linked to the buttons defined in the user interface.

Note that once initialized, all it does is periodically call display_driver.process, which internally only calls the LVGL task.
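The structure described above (a main class that owns the objects, button callbacks, and a loop that keeps calling `display_driver.process`) can be outlined as follows. This is a minimal sketch, not the code from main.py: the `App` and `DisplayDriver` classes, the `"record"`/`"send"` button names and the `period_ms` value are all hypothetical, and the driver is a stub that only counts calls instead of running the LVGL task handler.

```python
import time


class DisplayDriver:
    """Hypothetical stand-in for display_driver.

    The real process() runs the LVGL task handler; this stub only counts calls.
    """

    def __init__(self):
        self.calls = 0

    def process(self):
        self.calls += 1


class App:
    """Hypothetical outline of the main class in main.py."""

    def __init__(self, display_driver):
        self.display_driver = display_driver
        self.log = []
        # In the real UI these callbacks would be attached to LVGL button events
        self.buttons = {"record": self.on_record, "send": self.on_send}

    def on_record(self):
        self.log.append("record")  # would start recording from the microphone

    def on_send(self):
        self.log.append("send")  # would send the WAV to Whisper, then the text to Codex

    def press(self, name):
        # Simulates the UI invoking the callback linked to a button
        self.buttons[name]()

    def run(self, iterations, period_ms=5):
        # After initialization, the only work is calling process() periodically
        for _ in range(iterations):
            self.display_driver.process()
            time.sleep(period_ms / 1000)
```

On the device, `run` would loop forever; the bounded `iterations` argument only exists so the sketch terminates.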

HAL

The HAL (hardware abstraction layer) is defined in hal.py and contains the basic hardware initialization needed to drive the LCD, the TSC (touch screen controller) and the microphone.

LCD

The LCD is an ST7796S with a resolution of 480x320, driven over SPI at 20 MHz. The code is simple: it performs some basic initialization and then uses the draw function to send an entire buffer. To keep things simple, no DMA is used.
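A draw function for this kind of controller typically selects a window and then streams pixels. The sketch below assumes the standard MIPI DCS commands that the ST7796S implements (CASET 0x2A, RASET 0x2B, RAMWR 0x2C) and RGB565 pixels (2 bytes each). The `draw` and `window_commands` functions are hypothetical, and `write_cmd(cmd, data)` is a placeholder for the actual SPI transfer with the D/C pin toggled; it is not the project's hal.py code.

```python
import struct

# MIPI DCS command codes, implemented by the ST7796S
CASET = 0x2A  # column address set
RASET = 0x2B  # row address set
RAMWR = 0x2C  # memory write


def window_commands(x0, y0, x1, y1):
    """Return (command, payload) pairs selecting the inclusive
    rectangle [x0..x1] x [y0..y1]; coordinates are sent MSB first."""
    return [
        (CASET, struct.pack(">HH", x0, x1)),
        (RASET, struct.pack(">HH", y0, y1)),
    ]


def draw(write_cmd, x0, y0, x1, y1, pixels):
    """Hypothetical draw(): set the window, then stream RGB565 pixels.

    write_cmd(cmd, data) stands in for the SPI transfer: D/C low for
    the command byte, D/C high for the data bytes.
    """
    expected = (x1 - x0 + 1) * (y1 - y0 + 1) * 2
    if len(pixels) != expected:
        raise ValueError("buffer size does not match the window")
    for cmd, data in window_commands(x0, y0, x1, y1):
        write_cmd(cmd, data)
    write_cmd(RAMWR, pixels)
```

Without DMA, the cost of sending a whole buffer is easy to estimate: a full frame is 480 × 320 × 2 = 307,200 bytes, so at 20 MHz the transfer alone takes at least 307,200 × 8 / 20,000,000 ≈ 0.123 s, or at most about 8 full frames per second before any command overhead.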

TSC

The touch screen controller (TSC) is accessed over I2C. We just read a register that tells us whether the screen has been pressed and at which coordinates. The controller supports more than one touch point, but the software only uses one. This class also holds the calibration parameters ax, bx, ay, by and swap_xw, which convert the TSC measurements into the screen coordinates required by LVGL.
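The exact formula behind ax, bx, ay, by and swap_xw is not shown in the source. A common choice, assumed here, is a linear mapping per axis (`screen = a * raw + b`), with swap_xw taken to mean "exchange the raw X and Y axes before scaling". The sketch below also shows how such coefficients can be obtained from two calibration touches; `fit_axis` and `to_screen` are hypothetical helpers.

```python
def fit_axis(raw0, screen0, raw1, screen1):
    """Solve screen = a * raw + b from two calibration touches on one axis."""
    a = (screen1 - screen0) / (raw1 - raw0)
    b = screen0 - a * raw0
    return a, b


def to_screen(raw_x, raw_y, ax, bx, ay, by, swap_xw=False, width=480, height=320):
    """Map raw TSC readings to LVGL screen coordinates.

    Assumes a linear calibration per axis and that swap_xw swaps the raw
    axes before scaling. Results are clamped to the visible screen.
    """
    if swap_xw:
        raw_x, raw_y = raw_y, raw_x
    x = int(ax * raw_x + bx)
    y = int(ay * raw_y + by)
    return min(max(x, 0), width - 1), min(max(y, 0), height - 1)
```

For example, if touches at raw X 200 and 1160 should land on screen X 0 and 480, `fit_axis(200, 0, 1160, 480)` gives `a = 0.5` and `b = -100.0`.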

MIC

The microphone is an INMP441, which is connected over I2S and offers 24 bits of resolution. Since it doesn't require any special handling, it has no class of its own: initializing the I2S peripheral in the HAL is enough.

Note that the selected sample rate is 8 kHz.
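The source does not say how the 24-bit samples are stored. If the I2S peripheral is read with 32-bit slots and the INMP441's 24 bits are left-aligned in each slot (a common setup, assumed here), one simple way to get 16-bit PCM for a WAV file is to keep the top 16 bits of each word. `i2s32_to_pcm16` is a hypothetical helper, not part of the project.

```python
import struct


def i2s32_to_pcm16(raw):
    """Convert little-endian signed 32-bit I2S words to 16-bit PCM.

    Assumes each sample arrives in a 32-bit slot with the microphone's
    24 bits left-aligned, so the top 16 bits are kept. Python's >> is an
    arithmetic shift, so negative samples keep their sign.
    """
    count = len(raw) // 4
    words = struct.unpack("<%di" % count, raw[:count * 4])
    return struct.pack("<%dh" % count, *(w >> 16 for w in words))
```

At 8 kHz, 16-bit mono audio produces 8,000 × 2 = 16,000 bytes per second, half of what the raw 32-bit slots occupy.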

LVGL

The LVGL library renders the entire user interface and is the largest component of the project. Although this application is very simple, achieving a good, visually attractive result would not be possible without it.

To integrate it into our project, we have to compile MicroPython from source. Since the lv_micropython repository supports the ESP32 port, this is a simple process.

Display driver

Once the firmware is compiled, we have to tell LVGL how to draw on the LCD and how to read the TSC defined in the HAL. This is done in display_driver.py, which defines the callbacks and buffers used by LVGL.

Note that a full frame for the LCD requires 480 × 320 × 2 = 307,200 bytes, but LVGL lets you work with a buffer covering only part of the screen, at the cost of rendering in several passes. Since this board has SPIRAM, a partial buffer is not strictly necessary, but it is still advisable because other parts of the application use a lot of RAM (for example, sending the WAV file over the internet).
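The trade-off between buffer size and number of rendering passes is simple arithmetic. The sketch below assumes a buffer that spans the full screen width and a given number of rows, with 2 bytes per pixel; `draw_buffer_plan` is a hypothetical helper, and the pass count models a full-screen redraw only (LVGL normally redraws just the invalidated areas).

```python
def draw_buffer_plan(width, height, lines, bytes_per_pixel=2):
    """Return (buffer_bytes, passes) for a partial draw buffer.

    buffer_bytes: RAM needed for a buffer `lines` rows tall.
    passes: how many times that buffer is filled and flushed to redraw
    the whole screen (simplified model of a full refresh).
    """
    lines = min(lines, height)
    buffer_bytes = width * lines * bytes_per_pixel
    passes = -(-height // lines)  # ceiling division
    return buffer_bytes, passes
```

For this 480x320 panel, a buffer of 32 rows needs 30,720 bytes and 10 passes per full redraw, compared with 307,200 bytes and a single pass for a full-frame buffer.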

User interface

The user interface defines the elements on the screen, their style and their layout. This is exactly what LVGL provides, so this part consists entirely of calls to its API.

Wave

The WAVE class creates .wav files to store the data captured by the microphone. The header occupies the first 44 bytes of a WAV file and contains the sample rate, the bits per sample and a few more parameters. When a WAV file is opened, this header is filled in, and then samples are appended with the write function. At the end, the header is rewritten, because its size fields depend on the final number of samples.
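The open/write/rewrite cycle described above can be sketched with `struct` and the canonical 44-byte PCM header layout (RIFF chunk, 16-byte "fmt " chunk, "data" chunk). This is a minimal sketch, not the project's WAVE class: the `Wave` name, its defaults (8 kHz, 16-bit, mono) and the `wav_header` helper are assumptions. It only needs a file-like object with `write`, `seek` and `flush`, which both MicroPython files and `io.BytesIO` provide.

```python
import io
import struct


def wav_header(rate, bits, channels, data_size):
    """Build the canonical 44-byte PCM WAV header."""
    block_align = channels * bits // 8
    byte_rate = rate * block_align
    return struct.pack(
        "<4sI4s4sIHHIIHH4sI",
        b"RIFF", 36 + data_size, b"WAVE",          # RIFF chunk
        b"fmt ", 16, 1, channels, rate,            # fmt chunk, format 1 = PCM
        byte_rate, block_align, bits,
        b"data", data_size,                        # data chunk header
    )


class Wave:
    """Hypothetical minimal WAV writer: header, samples, header again."""

    def __init__(self, f, rate=8000, bits=16, channels=1):
        self.f = f
        self.rate, self.bits, self.channels = rate, bits, channels
        self.data_size = 0
        # Initial header; the size fields are still zero at this point
        self.f.write(wav_header(rate, bits, channels, 0))

    def write(self, samples):
        # Append raw PCM bytes and keep track of how many were written
        self.f.write(samples)
        self.data_size += len(samples)

    def close(self):
        # Rewrite the header with the final sizes; the caller closes the file
        self.f.seek(0)
        self.f.write(wav_header(self.rate, self.bits, self.channels, self.data_size))
        self.f.flush()
```

Usage: `w = Wave(io.BytesIO())`, then `w.write(chunk)` for each block of samples, and `w.close()` once recording ends.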

Recorder

The recorder class collects the data from the microphone (I2S peripheral) and records it in a .wav file. It...
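A recording loop of this kind usually reads fixed-size chunks until the requested duration has been captured. The sketch below is an assumption, not the project's Recorder: `record` is hypothetical, `read_into(buf)` stands in for a blocking I2S `readinto` call that fills a buffer and returns the number of bytes read, and `write` stands in for something like a WAV writer's write method. It assumes 8 kHz, 16-bit samples by default.

```python
def record(read_into, write, seconds, rate=8000, sample_bytes=2, chunk_size=1024):
    """Capture `seconds` of audio in chunks and pass each chunk to write().

    read_into(buf) is a hypothetical stand-in for the I2S read: it fills
    buf and returns the number of bytes read. Returns the bytes written.
    """
    remaining = rate * sample_bytes * seconds  # bytes still to capture
    buf = bytearray(chunk_size)
    mv = memoryview(buf)
    total = 0
    while remaining > 0:
        n = read_into(mv[:min(chunk_size, remaining)])
        if n <= 0:
            break  # treat "no data" as the end of the stream
        write(bytes(mv[:n]))
        remaining -= n
        total += n
    return total
```

Reusing one preallocated buffer through a `memoryview` avoids allocating a new buffer on every read, which matters on a memory-constrained board.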
