
VR Full Body Tracking (Part 1)

03/14/2024 at 17:57

Over the past 10 years, the VR industry has developed rapidly, and there have been many efforts to bring it into various business and entertainment sectors. It quickly evolved from an exotic, incomprehensible, and very expensive novelty into a common accessory that almost everyone can afford. If you are familiar with platforms like VRChat, Somnium Space, or any other metaverse, you have probably heard of Full Body Tracking (FBT). This article is specifically about developing trackers. However, for those who have not yet had the chance to explore virtual worlds, I will first give a short overview before getting to the main topic.

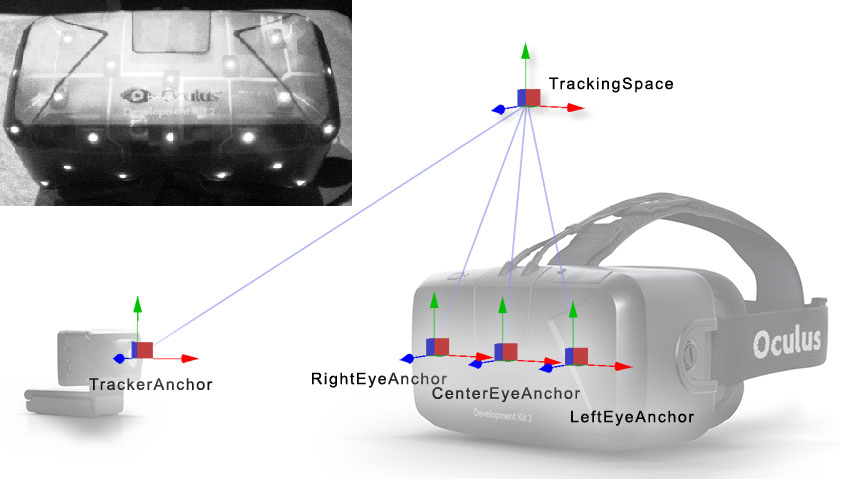

A Bit of History. The first commercial VR headsets were essentially just displays with lenses that provided a comfortable focal distance. They implemented only three degrees of freedom (3DoF) using inertial sensors, often aided by a magnetometer. This lets you look around but does not track your movement through space, similar to smartphone viewers like Google Cardboard. The next step in VR was the introduction of positional tracking. For example, in 2014 Oculus added an inexpensive and fairly accurate tracking system to its new headset, the Rift DK2. Infrared (IR) LEDs built into the headset acted as markers for a simple computer vision system, which took images from an IR camera and calculated the headset's spatial coordinates along with its rotation angles; these were further refined by inertial measurement units (IMUs). This allowed the user to move freely around the play area instead of being anchored to a fixed position.

Fig. 1. Oculus Rift DK2: optical tracking system based on an IR camera and LEDs.
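To make "further refined by inertial sensors" more concrete, here is a minimal sketch of a generic complementary filter for a single rotation angle. It is not Oculus's actual fusion algorithm, which is not described here; the function name `complementary_filter`, the 1 kHz IMU rate, the roughly 60 Hz optical update rate, and the blending weight `alpha` are all illustrative assumptions. The gyroscope rate is integrated at every step, which is fast but drifts, and whenever an absolute optical measurement arrives, the estimate is pulled toward it.

```python
def complementary_filter(gyro_rates, dt, optical_fixes, alpha=0.98):
    """Fuse a drifting gyro with occasional absolute optical angle fixes.

    gyro_rates    -- angular velocity samples in deg/s, one per IMU step
    dt            -- IMU sample period in seconds (assumed 1 kHz below)
    optical_fixes -- {step_index: absolute angle in degrees} from the camera
    alpha         -- hypothetical weight kept on the gyro estimate at each fix
    """
    angle = 0.0
    estimates = []
    for step, rate in enumerate(gyro_rates):
        angle += rate * dt                    # dead reckoning: fast, but drifts
        if step in optical_fixes:             # slower absolute fix from the camera
            angle = alpha * angle + (1.0 - alpha) * optical_fixes[step]
        estimates.append(angle)
    return estimates


if __name__ == "__main__":
    dt = 0.001                                        # assumed 1 kHz IMU
    gyro = [0.5] * 10_000                             # 10 s of a 0.5 deg/s bias; headset is still
    fixes = {i: 0.0 for i in range(0, 10_000, 16)}    # ~60 Hz optical fixes, true angle is 0
    print("gyro only:", complementary_filter(gyro, dt, {})[-1])
    print("fused:    ", complementary_filter(gyro, dt, fixes)[-1])
```

With a constant 0.5 °/s gyro bias and a headset that is actually still, pure integration drifts by 5° after 10 s, while the fused estimate stays within a fraction of a degree. A real tracker would also have to compensate for camera latency and handle all three rotation axes plus position.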

Although this 6DoF system has its flaws, its relatively low cost made it an excellent solution for mass-produced headsets like PlayStation VR and the Oculus Rift. Moreover, the same method made it possible to add trackable controllers. As a result, our virtual avatar gained not only a floating head but also fully functional hands.

The next significant development in tracking systems came from HTC in collaboration with Valve, who reversed the approach by embedding an array of photodiodes in the headset instead of LEDs. Unlike previous solutions, the new system, introduced in 2015 as SteamVR Tracking, does not use cameras, so the PC does not have to process any images. It was designed from the outset to provide room-scale positional tracking without connecting any sensors to the user's PC. Its growing popularity was also helped by the fact that the system's documentation is open, as is some of its software.

Instead of IR camera stations, new devices were introduced, called, quite logically and succinctly, "Lighthouses". If you want to learn more about their design and history, I recommend Brad's video “Valve's Lighthouse: Past, Present, and Future”; here I will briefly explain how they work. Positioned at opposite corners of the room, the lighthouses sweep wide, flat (fan-shaped) IR laser beams across the room, one axis at a time: first from right to left, then from top to bottom. Before each sweep, they emit a powerful IR flash for synchronization. Each tracked device, whether a headset or a controller, contains an array of IR photodiodes connected to a microcontroller (MCU), which measures the time between the flash and the moment the laser hits each photodiode. Because the beam rotates at a known, constant speed, each time interval corresponds to an angle from the base station, and the tracking system uses simple trigonometry to calculate the position of each sensor with sub-millimeter accuracy.


Fig. 2. Lighthouse tracking.
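The timing-to-angle conversion and the "simple trigonometry" described above can be sketched as follows, simplified to a top-down 2D view with two base stations and a single photodiode. The rotor speed (`ROTOR_HZ = 60`), the assumption that the beam points straight ahead a quarter turn after the sync flash (`CENTER_OFFSET`), the counterclockwise sign convention, and all function names are hypothetical choices for illustration, not values taken from Valve's documentation. A real system uses both the horizontal and the vertical sweeps and solves for the full pose of the device from many photodiodes at known positions on its body.

```python
import math

ROTOR_HZ = 60.0       # assumed rotor speed, revolutions per second
CENTER_OFFSET = 0.25  # assumed: beam points straight ahead 1/4 turn after the flash


def sweep_angle(dt_since_flash, rotor_hz=ROTOR_HZ, center_offset=CENTER_OFFSET):
    """Convert flash-to-hit time (s) into an angle (rad) from the station's forward axis."""
    turns = dt_since_flash * rotor_hz - center_offset
    return 2.0 * math.pi * turns


def time_for_angle(angle, rotor_hz=ROTOR_HZ, center_offset=CENTER_OFFSET):
    """Inverse of sweep_angle, used here only to simulate sensor readings."""
    return (angle / (2.0 * math.pi) + center_offset) / rotor_hz


def triangulate_2d(p1, yaw1, a1, p2, yaw2, a2):
    """Intersect the two rays on which the photodiode must lie (top-down view).

    p1, p2     -- (x, y) base station positions in metres
    yaw1, yaw2 -- direction each station faces, in radians
    a1, a2     -- measured sweep angles, relative to each station's facing
    """
    t1, t2 = yaw1 + a1, yaw2 + a2
    d1 = (math.cos(t1), math.sin(t1))
    d2 = (math.cos(t2), math.sin(t2))
    # Solve p1 + s*d1 = p2 + u*d2 for s using 2D cross products.
    denom = d1[0] * d2[1] - d1[1] * d2[0]
    if abs(denom) < 1e-12:
        raise ValueError("rays are parallel; position is undetermined")
    wx, wy = p2[0] - p1[0], p2[1] - p1[1]
    s = (wx * d2[1] - wy * d2[0]) / denom
    return (p1[0] + s * d1[0], p1[1] + s * d1[1])


if __name__ == "__main__":
    # Two stations 4 m apart, angled toward the middle of the room.
    station_a, yaw_a = (0.0, 0.0), math.pi / 4
    station_b, yaw_b = (4.0, 0.0), 3 * math.pi / 4
    target = (1.0, 2.0)  # true photodiode position used to simulate the timings
    a_true = math.atan2(target[1] - station_a[1], target[0] - station_a[0]) - yaw_a
    b_true = math.atan2(target[1] - station_b[1], target[0] - station_b[0]) - yaw_b
    t_a, t_b = time_for_angle(a_true), time_for_angle(b_true)  # simulated MCU timings
    x, y = triangulate_2d(station_a, yaw_a, sweep_angle(t_a),
                          station_b, yaw_b, sweep_angle(t_b))
    print(f"estimated position: ({x:.3f}, {y:.3f})")
```

Each base station alone only tells the device which line (or, in 3D, which plane per sweep) the photodiode lies on; combining two stations, or several photodiodes with known spacing, is what pins down the position.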

One of the main advantages of this approach is that any number of devices can be tracked in the room: the base stations only broadcast and never need to communicate with the trackers, so a device only has to be hit by the laser on at least a few of its photodiodes. A little later, the first Vive Tracker was introduced, featuring a standard 1/4-inch camera mount thread, meant to be attached to almost anything, whether a camera to track its...

