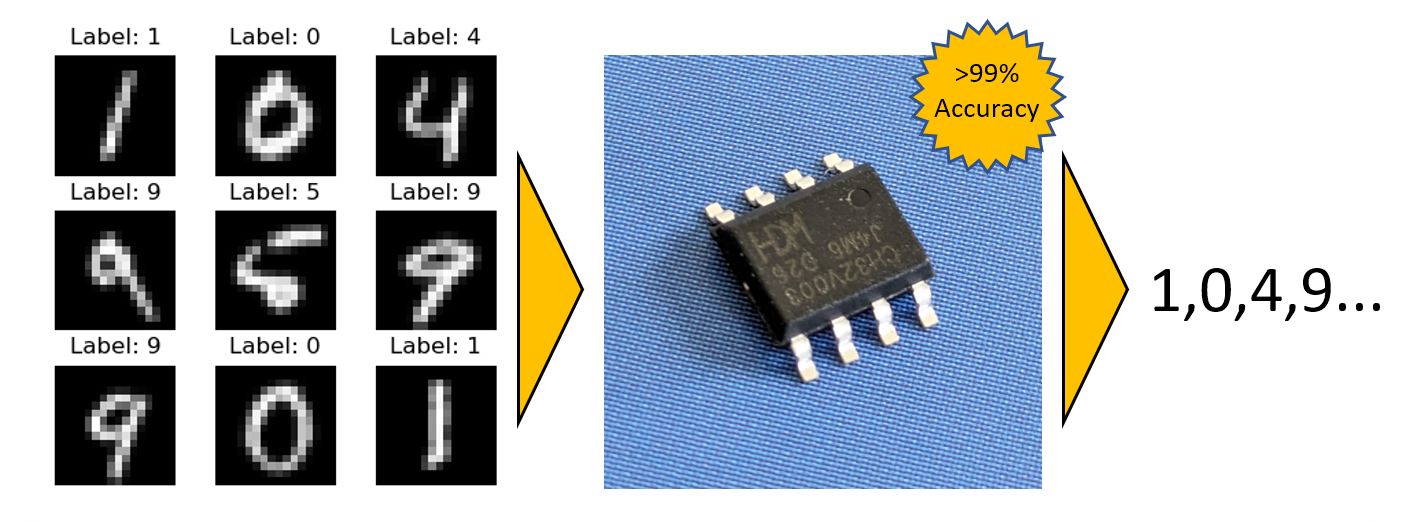

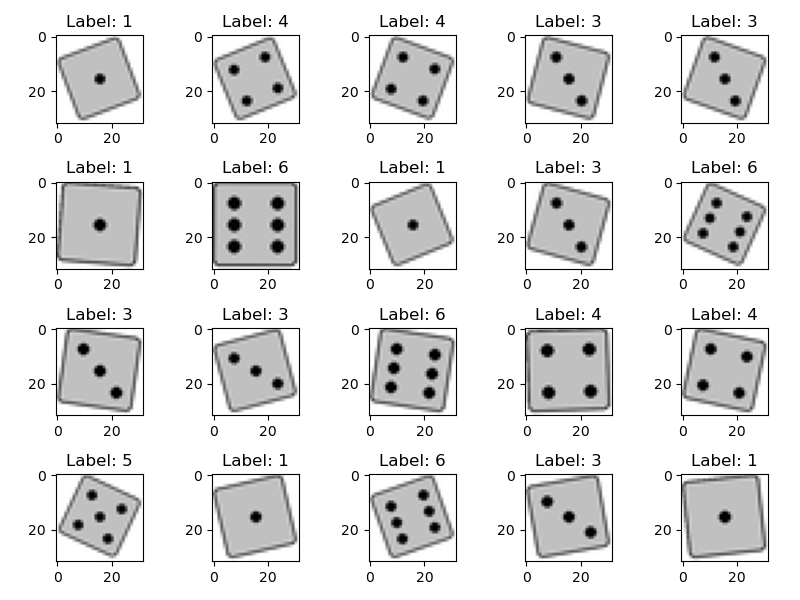

After many retro projects, it is time to look into the future! It's the year 2023 and the biggest technology trend (hype) is generative AI. Typically this is associated with high-performance GPUs. But maybe we can make use of it on a lowly microcontroller? And maybe we can use it to implement yet another incarnation of an electronic die?

Table of contents

- Goal and Plan

- Training Dataset Generation and Evaluation Model

- Options for Generative AI Models

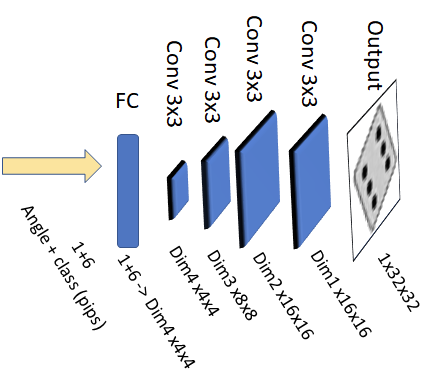

- CVAE: Conditional Variational Autoencoder

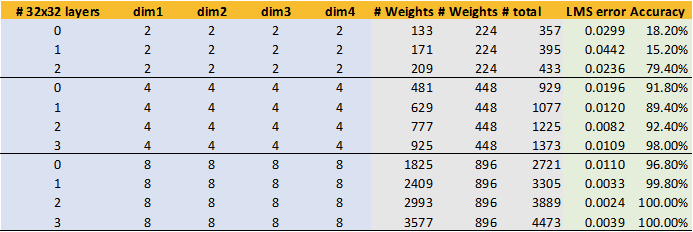

- CVAE: Minimizing the VAE Memory Footprint

- CVAE: Improving Image Quality (More Layers!)

- Tiny Inference Engines for MCU deployment

- Building my own Inference Engine

Hello Tim, cool project!

Recently I made a library for both learning and inference on MCUs. It's not an NN though, just a decision tree. Check it out if you are interested: https://github.com/allexoK/TinyDecisionTreeClassifier. It's available for both Arduino and PlatformIO (Version 2 is significantly better than Version 1).

Maybe you can make a physical die with an accelerometer inside and teach it to predict the result of a roll from the acceleration data recorded after it's thrown but before it has settled. That would be kind of cool.

It would also save fellow board game enjoyers a few milliseconds of their lives (since the result would be available a little before the die has settled) and could therefore be treated as a life-saving humanitarian project.