Swarmesh NYU Shanghai

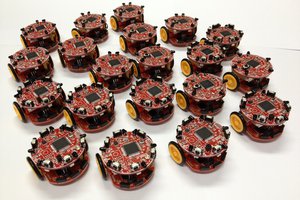

Scalable swarm robot platform using ESP32 MESH capabilities and custom IR location

Abstract

It has been more than a month since our last Hackaday update. The Kalman filter and PID control, which our team knew very little about, were the obstacles that slowed us down. Overall, we took a step forward with the grid system we proposed and implemented task assignment, auto-routing, collision avoidance, and PID-based error correction on the system. To make the framework more comprehensive, we also built a simulation platform featuring all the functions implemented on the real robot. This post discusses each of these updates in turn.

Task Assignment

We found that the original framework we developed (please refer to the earlier log) was naive and inefficient. It would be better if the robots could sort out their target destinations before they start moving. Following suggestions from various professors, we began digging into the academic literature for existing algorithms that tackle the problem. It didn’t take us long to find that task assignment among multiple robots is similar to the Multiple Travelling Salesman Problem, a variation of the (single) Travelling Salesman Problem, which is NP-hard. Most of the optimal algorithms are beyond our understanding, so we looked for algorithms that produce suboptimal results and are easier to implement. The one we implemented is a decentralized cooperative auction based on random bid generators, which I will briefly explain below.

At the very beginning, each robot receives an array of destinations and calculates which destination is closest to its current position. All robots share a synchronized random number generator. Before every round of bidding, each robot runs this generator, which gives every robot a distinct number between 1 and the total number of robots in the system. The robots then bid in turn, in the order of the numbers they drew. When it is a robot’s turn, it broadcasts its bid and its cost to all other robots. Robots that have not yet bid need to process the bids made by the others: if an incoming bid coincides with a robot’s own pending bid, that robot deletes its pending bid and selects the next closest available destination. The robots repeat this auction m times, and each time a robot draws a different random number (and therefore bids in a different order). In the end, the robots unanimously pick the round of bidding with the smallest total distance.

Suppose there are n tasks and the robots are programmed to run m rounds of auction to find the smallest total distance. The time complexity of producing a suboptimal assignment is then only O(mn), which is much lower than the factorial time needed by the optimal algorithm.
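To make the bidding procedure more concrete, here is a minimal Python sketch of the auction, not the code running on the robots. It assumes Manhattan distance as the cost, a shared seed standing in for the synchronized random number generator, and at least as many destinations as robots. The names `auction_round` and `assign_tasks` are hypothetical.

```python
import random


def manhattan(a, b):
    """Manhattan distance between two grid cells (assumed cost metric)."""
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


def auction_round(robots, destinations, order):
    """Run one round: robots bid in `order`, each taking its closest free destination.

    robots: dict mapping robot id -> (x, y)
    destinations: list of (x, y), at least as long as `robots`
    Returns (assignment, total_distance), where assignment maps
    robot id -> index into `destinations`.
    """
    free = set(range(len(destinations)))
    assignment = {}
    total = 0
    for robot_id in order:
        position = robots[robot_id]
        # Closest destination nobody has bid on yet; ties broken by index.
        best = min(free, key=lambda d: (manhattan(position, destinations[d]), d))
        free.remove(best)
        assignment[robot_id] = best
        total += manhattan(position, destinations[best])
    return assignment, total


def assign_tasks(robots, destinations, rounds, seed=0):
    """Repeat the auction with a new bidding order each round, keep the cheapest."""
    rng = random.Random(seed)  # same seed on every robot -> same bidding orders
    ids = sorted(robots)
    best = None
    for _ in range(rounds):
        order = ids[:]
        rng.shuffle(order)
        result = auction_round(robots, destinations, order)
        if best is None or result[1] < best[1]:
            best = result
    return best
```

Because every robot would run the same seeded generator, each one can reproduce the full auction locally and arrive at the same winning round without a central coordinator.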

Due to the time constraint, we could only implement a simplified version of the algorithm, where the auction would only last for one round.

Figure 1. Flow chart of task assignment

Auto-routing

In a grid system, routing from point A to point B (ignoring collisions) is easy. We defined the side length of each cell in our grid as 30cm. The origin is at the top left corner of the grid; the x coordinate increases toward the right and the y coordinate increases toward the bottom. Please refer to Figure 2 for further details of our grid setup. To get from point A to point B, the robot only needs to calculate the differences in the x and y coordinates. The intermediate waypoint has the same x coordinate as the starting point and the same y coordinate as the destination point.
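The routing rule can be sketched in a few lines of Python. This is only an illustration under the grid conventions described here (30cm cells, y increasing downward); the function names are hypothetical and collisions are ignored.

```python
CELL_CM = 30  # side length of one grid cell, as defined for our grid


def waypoint(start, goal):
    """Intermediate waypoint: same x as the start, same y as the goal."""
    return (start[0], goal[1])


def route(start, goal):
    """List every cell visited: first along y to the waypoint, then along x."""
    x, y = start
    goal_x, goal_y = goal
    path = [(x, y)]
    step_y = 1 if goal_y > y else -1
    while y != goal_y:
        y += step_y
        path.append((x, y))
    step_x = 1 if goal_x > x else -1
    while x != goal_x:
        x += step_x
        path.append((x, y))
    return path


def path_length_cm(path):
    """Distance driven along the path, in centimetres."""
    return (len(path) - 1) * CELL_CM
```

For example, `route((0, 0), (2, 1))` passes through the waypoint `(0, 1)` and covers three cells, or 90cm.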

Figure 2. Grid setup

Yet this brute-force way of routing may lead to collisions at various intersections. In a grid system, collisions occur because more than one robot is about to occupy the same coordinate at the same time. Thus, we assumed collisions could be avoided by dispersing the routes of all robots and making them occupy different...

Hardware

It has been a month since we last updated the details of our project on Hackaday, and several hardware tests and hardware changes have taken place since then. At the end of the last hardware update, we were hoping to finalize PCB v1.0.2, and we did so in the week of July 5. For the most part, we concentrated on component placement, minimizing the number of vias across the board and ensuring there were no vias under the ESP32 module. As for the malfunctioning IMU, we believe it was caused by a short circuit between the wireless charging receiver coil and the ESP32, as both are on the bottom of the PCB. We made a lot of small adjustments to the board and hoped this version would be a relatively stable one.

Figure 1. Schematic v1.0.2

Figure 2. Board Layout v1.0.2

We ran some tests on how long the robot can run on a fully charged battery (with Wi-Fi, motors and LEDs running). The result was nearly 5 hours every time, which is adequate for our robot.

We came up with an idea for the placement of the wireless charging receiver on the robot. Figure 3 is a 3D model of the receiver holder that will be attached to the bottom of the robot. Ideally, the receiver will be attached to the holder, and when the robot moves onto a flat charging spot, the battery will be charged. The distance between the receiver and the transmitter is therefore important; the recommended distance is between 2mm and 8mm.

Once we made all the adjustments, we sent PCB v.1.0.2 for manufacturing. Figure 4 is an image of the bottom of PCB v1.0.2. The greatest weakness in this board was the infrared sensors placed at the front of the robot. Other than this, the approximate positions of all other components remained consistent with our final design. The two rows of 6 pins on the PCB are for serial communication, and we decided to use the Ai Thinker USB TO TTL programmer (Figure 5). It was a reliable product, and as expected, we were not disappointed by its performance and consistency. The reset button also worked well (looking back, it was smart to add it). The holes for the switch came out a little too large, which caused some problems in switching on the robot, but in a later version, we fixed this problem. The location of the RGB LEDs was satisfactory.

Figure 6 is an image of the assembled v1.0.2 robot. As you can see, the infrared pairs were in an awkward position on the robot. We had placed holes in the PCB for separators between the sensors, but fitting them was a hassle. Hence, we spent the next week testing infrared sensors and the corresponding resistor values. Because of the trouble that came with the custom separators, we decided to use infrared sensors that already have dividers between the emitter and receiver of each pair. We chose ST188 sensors, but (take note) ours are not the original size; at 8.7mm x 5.9mm, they are slightly smaller than the official ones.

Figure 6. Assembled v1.0.2 Robot

The resistor values for the infrared emitters were a headache for us. The 10k ohm resistors for the infrared receivers were fine, and we didn’t change them. We set up our apparatus on a breadboard with the ESP32 dev kit, five ST188 infrared sensors, and a TIP122. After swapping in resistors of varying resistance, we agreed on 10-ohm resistors for the emitters because they gave a very large detection range (this was the decision that caused a big problem for us later).

Before submitting our design (v1.0.3) to the manufacturer, we added an infrared emitter on the back of the robot (LTE-302) in hopes of helping other robots know when there is a robot in front of them. This addition became another one of our mistakes as we were too hasty when adding this on.

Lastly, we added three GPIO pins with +5V and GND to allow for the possible addition of servos or LED matrices. We ordered five PCB v1.0.3 boards for testing. ...

The past week was mostly spent on housekeeping for the work done in previous weeks and on looking ahead to what we should do with the platform we have so far.

Following the pattern of week 2, we received PCB v1.0.1 on Monday and assembled our model immediately for testing. We kept our fingers crossed, hoping that everything (or at least most) will work. Having said that, we encountered a number of setbacks as usual.

Figure 1: PCB v1.0.1

Figure 2: Robot v1.0.1

These three revisions then allowed for a successful connection between the programmer and the ESP32, and programs could upload without any issues.

We tested each component on the PCB individually to check its functionality, and all tests were successful except for the IMU. We are only connecting 4 of its 8 pins (VCC, GND, SCL and SDA) to the I2C pins. Surely it must be another small mistake, but as of now we cannot upload a program onto the ESP32 with the IMU inserted.

We commenced the process of creating our next version, PCB v1.0.2. Other than the aforementioned changes, we will also be switching the connectors for sensor modules to holes for the actual sensor components (TCRT5000L, IR emitters, IR receivers).

In between refining the details of component placement, we ran some exhaustive tests on the functionalities that draw heavily on the 3.3V rail. We turned on Wi-Fi, moved the motors, flashed the LEDs, and inserted the IR modules. With all of these working simultaneously, we ran the robot until it was out of juice, recharged it for a specific amount of time, and measured the run time. We have repeated this cycle only three times so far, with the following results:

10 min charging, 20 min running

60 min charging, 100 min running

60 min charging, 105 min running

We will conduct more tests of this sort, but the pattern seems to be that the run time is roughly twice the charging time, with some fluctuation as the charging time increases (to be confirmed).
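As a quick check of that pattern, the three trials listed above can be reduced to run-to-charge ratios. The snippet below is only a small arithmetic sketch; the variable and function names are made up for illustration.

```python
# (charging minutes, running minutes) for the three cycles measured so far
trials = [(10, 20), (60, 100), (60, 105)]


def run_to_charge_ratios(trials):
    """Run time divided by charging time for each trial."""
    return [run / charge for charge, run in trials]


ratios = run_to_charge_ratios(trials)
mean_ratio = sum(ratios) / len(ratios)
```

The ratios come out at 2.0, about 1.67 and 1.75, so the run time is a bit under twice the charging time for the longer charges. Three data points are too few to say more than that.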

Next week, we will finalize PCB v1.0.2. Hopefully, we will be able to integrate the software we have written with the hardware by the end of the week.

Last week, we tried cleaning up the software by modularizing the code and restructuring all essential parts using object-oriented programming.

We arranged the robot’s code in the same fashion as its functionalities, dividing it into the classes Locomotion, Communication and Tasks. Each of these classes exposes public functions as its interface, and the details of these interfaces are written in the corresponding header files. In addition to these fundamental classes, we also defined another class, Robot, which holds essential properties of the robot, such as its ID, current position and battery level. It also integrates instances of the classes mentioned above and exposes interfaces that are easy to understand.
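The layout described above can be illustrated with a short Python sketch. Our firmware is organized with header files, so this is only a rough picture of how the classes relate to each other, not a copy of the real code. Every method name here (`move_to`, `broadcast`, `add`, `next_destination`, `go_to_next_task`) is a hypothetical placeholder.

```python
from dataclasses import dataclass, field


class Locomotion:
    """Placeholder for motor control; here it just reports the target."""

    def move_to(self, position):
        return position


class Communication:
    """Placeholder for networking; messages are collected in a list."""

    def __init__(self):
        self.outbox = []

    def broadcast(self, message):
        self.outbox.append(message)


class Tasks:
    """Placeholder task queue of destinations."""

    def __init__(self):
        self.queue = []

    def add(self, destination):
        self.queue.append(destination)

    def next_destination(self):
        return self.queue.pop(0) if self.queue else None


@dataclass
class Robot:
    """Holds the robot's properties and ties the three modules together."""

    robot_id: int
    position: tuple = (0, 0)
    battery_level: float = 1.0
    locomotion: Locomotion = field(default_factory=Locomotion)
    communication: Communication = field(default_factory=Communication)
    tasks: Tasks = field(default_factory=Tasks)

    def go_to_next_task(self):
        """Take the next destination, announce it, and move there."""
        destination = self.tasks.next_destination()
        if destination is None:
            return None
        self.communication.broadcast({"id": self.robot_id, "target": destination})
        self.position = self.locomotion.move_to(destination)
        return destination
```

The point of the arrangement is that code using a `Robot` only calls one high-level method and never touches the motor or network modules directly.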

We also used a simulation software named Webots to simulate our project. With the help of Webots, we created a rectangular...

We received our first PCB prototype on Monday (let’s call it v0.1.0 for Swarmesh 2020)! We assembled the PCB and immediately we could see that there were several improvements to be made for the next version.

Other than these issues, the test board works well and the 3.7V battery is enough to power the robot (assuming the battery has a decent amount of juice). As for the wireless charging, the ones we had were not in the best condition and we bought new ones to test. These new ones charged a 3.7V battery from 2.7V to 4.2V in 5 mins which is very fast and might even be too fast. The wireless charging system we are planning to pursue is still under ongoing tests.

Figure 1a.

Figure 1b.

On Tuesday, we started planning and structuring our new PCB (v.1.0.1). Changes and additions are as follows:

4. The ESP32 documentation recommends a power supply with an output current of 500mA or more when using a single supply. The MCP1700 LDO mentioned in the last post consumes only 1.6uA of quiescent current, but its output current is limited to 250mA, far below the recommended figure for the ESP32. When we use the Wi-Fi functionality on the ESP32, this could trigger a brownout or other crashes. To provide at least 500mA of peak current for the ESP32, we compared different LDOs and eventually settled on the AP2112K-3.3V, which has a maximum dropout voltage of 400mV, an output current of 600mA, and a quiescent current of 55uA.

Figure 2. LDO Table

5. We shrunk the size of the PCB to a hexagon with 50mm sides. Accompanying the change to a smaller PCB, we also changed the resistors and capacitors to SMDs. The switch will also be more compact in size. The standoffs are now M2.

6. We decided to remove the devkit and replace it with only the ESP32-WROOM-32D module and 6 pins for the programmer.

7. Pins for I2C were added which means we can also add an IMU to the robot.

8. Two touch pad sensors

9. Analog pin for battery level

10. Three LEDs: one for power level, one for charging, one for state of the robot

11. Two sets of pins for two reflective sensors

12. Five sets of pins in the front of the robot for 5 pairs of IR sensors. We used to have a mux to increase the number of analog inputs, but since we have enough analog (ADC1) pins on the ESP32, we refrained from adding a mux to save space.

In the following week, we will be receiving PCB v1.0.1 and assembling it. Hopefully, everything will work as intended.

Figure 3. Schematic for v.1.0.1

Figure 4. PCB layout for v.1.0.1

This past week we made enormous progress on the software. Based on the camera system and the tests implemented last week, we can now give the swarm of robots a list of destinations, and the robots then figure out which destination each of them should go to.

The logic of the system follows the Finite State Machine shown in the last blog post. Robots receive the absolute positions of all robots, as well as the positions to go to, in JSON documents delivered by multicast. Each robot picks the destination with the smallest Manhattan distance from its current position. When a robot arrives at its destination, it sends another multicast message specifying that certain coordinates are taken. For other...

It's been half a year since we last updated the entry. Recently we talked again about our direction and progress. We realized that to make Swarmesh a candidate for distributed system research, we first need to develop a platform with all functionalities integrated and readily available.

Necessary improvements to be made on the system are listed below.

1. Positioning system

Though last year we spent a lot of time and effort on the distributed positioning system using IR, the petal-shaped IR radiation pattern and the limited detection range were really bothersome. In this iteration, we decided to give up on IR positioning and instead hang a camera from the ceiling, pointing down at the floor. So that it can be recognized, each robot carries a unique ArUco code on its back. A computer connected to the camera uses OpenCV to analyze the image and output the coordinates of all robots in the image. These coordinates are then multicast to an IP address using UDP. The UDP packets are sent periodically to make sure the robots don't go off course.

2. Communication:

As mentioned above, we are using multicast for terminal-robot communication. The robots subscribe to a multicast IP that is dedicated to providing position information. We used mesh for robot-robot communication in the past, yet the tests we ran last year showed that the communication was unstable and had a very limited range. There is a chance that we will get rid of mesh and turn fully to multicasting, but at this point we have not decided yet.

3. System structure

We envision the system carrying out tasks like shape formation. The robots would receive tasks from the centralized server in the form of coordinates to occupy. Each robot would then calculate its nearest destination on its own, based on Manhattan distance, and move to that destination.
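The three points above can be tied together in a small Python sketch of what a robot might do with one position packet. This is an assumption-heavy illustration: the JSON layout (`robots` and `destinations` keys) and the function name `nearest_destination` are invented for the example, and the real packet format may differ.

```python
import json


def manhattan(a, b):
    """Manhattan distance between two (x, y) points."""
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


def nearest_destination(packet, robot_id, taken=()):
    """Pick the closest destination that is not already taken.

    packet: raw JSON payload as received over UDP multicast, e.g.
            {"robots": {"1": [0, 0]}, "destinations": [[4, 4], [1, 0]]}
            (this schema is a hypothetical example)
    robot_id: id of the robot doing the calculation
    taken: destinations other robots have already announced as occupied
    """
    message = json.loads(packet)
    position = tuple(message["robots"][str(robot_id)])
    occupied = {tuple(d) for d in taken}
    free = [tuple(d) for d in message["destinations"] if tuple(d) not in occupied]
    if not free:
        return None
    return min(free, key=lambda d: manhattan(position, d))
```

Each robot runs the same calculation on the same packet, so no central assignment is needed. The `taken` argument stands in for the multicast messages that announce occupied coordinates.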

Plans

The steps to develop the system are shown, in order, in the pictures below.

Finite State Machine of each robot

Wireless Charging:

We are experimenting with wireless charging modules and 3.7V rechargeable batteries, which is significantly easier than the wired charging with the 7.4V Li-Po battery packs on the previous design iteration. No more fiddling with cables. No wear and tear. However, some possible drawbacks include overheating and slower charging time.

We ran some tests with the wireless charging modules on the KittenBot (by soldering the receiver to the battery) to test the capabilities of the system. The results show a consistent pattern where the runtime is twice the charging time. However, the battery on the KittenBot is a 2200mAh 3.7V battery, which is different from the smaller 3.7V batteries (around 850mAh) that we intend to use. We predict that the smaller 14500 3.7V batteries will give a runtime of around 4-5 hours.

Motors:

Since we changed the previous 7.4V Li-Po batteries to 3.7V Li-ion batteries, we also want to test out the 3V 105rpm gearmotors with encoders. As for improvements in cable management, we will be using the double-ended ZH1.5mm wire connectors, allowing us to easily plug and unplug motor cables from the PCB (if you recall, the wires were soldered onto the PCB in the previous version).

PCB:

We have designed a test PCB on Eagle (and sent it to a manufacturer) that can accommodate the new changes mentioned above. Another addition is an LDO Regulator (MCP1700) to regulate a 3.3V voltage for the ESP32. We are also trying out a new hexagonal shape for our robot. Let’s see if we like it. The plan is to examine the efficacy of the wireless charging system and examine whether the smaller 3.7V batteries can provide enough power to the motors and the ESP32.

OpenCV

We first tried to learn OpenCV to become more familiar with this new module, which is probably...

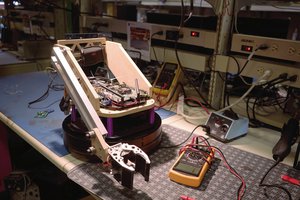

We started to assemble the infrared relative location circuits, but it took us hours to finish the first two pieces. We spent another four hours testing their functions and found many issues that conflicted with the design. It was critical to our success that our previous test of the IR location was rigorous (with all the data shown in our research paper) and that we were able to reconfirm the working circuit by re-assembling the original prototype. Our next step is to redesign the circuit and try the modified implementation.

Issue 1: Industrial design

The steps of the original design for the prototype were:

- assemble infrared(IR) receivers, connectors and resistors on the printed circuit board (PCB)

- mount 3D printed base plate

- mount 3D printed reflector cone

- insert 8 emitters (guessing the positions of these 16 pins took us 80% of the time)

- solder the 16 pins

- desolder many of the pins because their positions were guessed wrong. Resolder. Solder again.

Issue 2: External capacitors for ADC ultra-low noise pre-amp

In our schematic, pins 3 and 4 of the ESP32-DevKitC were meant to be used as analog inputs connected to infrared proximity sensors, so they had pull-up resistors. After digging into the extra functions of these pins, we found that they are related to the pre-amplifier of the analog-to-digital converter. The manufacturer suggests connecting a 330pF capacitor between these two pins to get ultra-low noise.

We didn't try that, but we are sure we could not have used the pull-ups and the ADC functions correctly at the same time. We noticed this when there was a mysterious grass noise on the readings (about 20% of full scale!) even though the IR sensors were not installed yet. We removed the pull-up resistors and the analog values came back to normal.

Issue 3: Circuit design

Pin 2 of the JMUX connector was noted as being Vcc on the IR board. But on the main board that pin was coming from a resistor in order to limit the current through the IR transmitters.

Also we had to resolder all receivers because they were noted as phototransistors but they were inverted diodes instead.

So far we have been switching between MicroPython, the Arduino IDE, PlatformIO and ESP-IDF. Now that we are tuning up the first prototype, we are re-exploring the needs of our swarm network.

Espressif provides an insightful explanation about how it handles MESH communication:

https://docs.espressif.com/projects/esp-idf/en/latest/api-guides/mesh.html

So far we have been working with their IDF environment to develop basic tests. Surprisingly enough, there is an Arduino Library that could make our work approachable by beginners: painlessMesh.

In the first tests of this library, one of the essential things we noticed was that nodes were dropping and re-appearing. The switching also seemed a bit mysterious, so our team was somewhat reluctant to work with a black box. So we decided to investigate MESH a bit more.

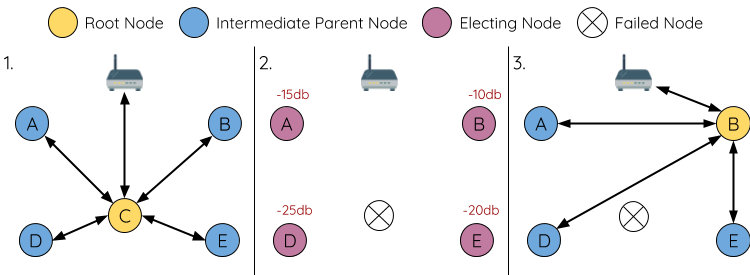

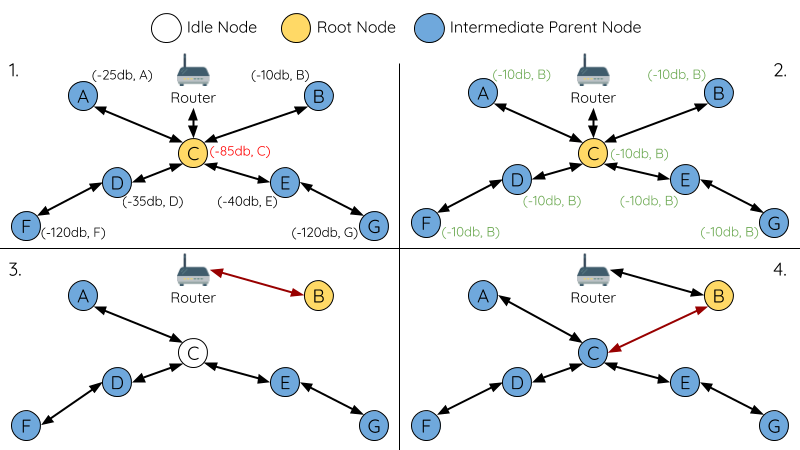

The first concept that was key to understanding the behavior was the automatic root node selection.

Another concept that helped us understand what was going on was this diagram for root node switching failure.

With these, some of the node behaviors became clearer. Still, the painlessMesh library has many dependencies, and among them, the examples use a task scheduler. This was far from ideal for our application, but thankfully we realized that MESH takes care of all the internal switching and that handling the data stream is up to the user software.

There has been a quiet period here, mainly because we were working on hardware issues... that were indeed hard. We can at least share that the schematic using the ESP32 in a MESH network was tested and that the multiplexed IR location was also a success.

The step we are working on now is bringing a whole robot to life. To do this, we used Eagle to design a schematic, then hard-wired it on a perfboard and designed a PCB based on that test. After running a small test on our Protomax, we are now waiting for the factory to ship the prototype boards. Let's keep our fingers crossed!

The poster features the rendered 3D model of the swarm robots, which come together to form the word IMA. This demonstrates one of the swarm's key abilities: forming shapes or images. It could be used for robotic art in future applications.

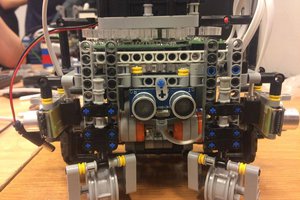

First let us show you what the chassis of our swarm robot looks like:

And this is the 3D model of the chassis that we built through Fusion 360

1. The total size of the chassis should not exceed 80*80mm by much

2. Two wheels with motor on it need to be attached on it

3. There should be four holes to attach the chassis with PCB using screws

4. We'd better leave a hole in the middle of the chassis such that the cables attached to the PCB above have place to go

5. The chassis needs to hold the battery

6. Two balls should be attached on the other two sides in order to keep the robot stable.

Taking all the details into consideration and after several modifications to the prototype, we've come up with the first "most-satisfying" version, fitting all these requirements. Further progress of chassis construction will go along with the whole team's process, let us wait and see :-)

Is there an issue with using an ultrasonic emitter/sensor? Many bot designs put a servo under the device to scan the area in front of the bot by rotating the device. It is a proven technique.

That's a very good question. We are not using an ultrasonic sensor because 1) it uses time of flight (ToF) to measure the distances between robots, which takes some time to finish, whereas IR is much faster; 2) ultrasound doesn't carry any identity information (at least with our design), so we don't know which robot is sending, which robot is receiving or which robot reflects the signal; and 3) when multiple robots emit ultrasonic signals, they interfere with each other. We've also considered using computer vision, but we would need more powerful chips to do that. Thank you for your comment, I hope that answers your question ^_^

-Z

I'm excited to see how this works out!!!